Methods to Improve Reliability of Video Recorded Behavioral Data

Kim Kopenhaver Haidet

School of Nursing, 307 Health & Human Development East, The Pennsylvania State University, University Park, PA 16802

Judith Tate

School of Nursing, University of Pittsburgh, Pittsburgh, PA

Dana Divirgilio-Thomas

School of Nursing, University of Pittsburgh

Ann Kolanowski

School of Nursing, The Pennsylvania State University, University Park, PA

Mary Beth Happ

Behavioral observation is a fundamental component of nursing practice and a primary source of clinical research data. The use of video technology in behavioral research offers important advantages to nurse scientists in assessing complex behaviors and relationships between behaviors. The appeal of using this method should be balanced, however, by an informed approach to reliability issues. In this paper, we focus on factors that influence reliability, such as the use of sensitizing sessions to minimize participant reactivity and the importance of training protocols for video coders. In addition, we discuss data quality, the selection and use of observational tools, calculating reliability coefficients, and coding considerations for special populations based on our collective experiences across three different populations and settings.

Behavioral observation is a fundamental component of nursing practice and a primary source of clinical research data. The use of video technology to capture behavioral observations is becoming more prevalent in nursing research because of the advantage it provides in the ability to replay and review observational data, the control of observer fatigue or drift, the ability to achieve levels of observation and analysis not afforded by real-time observations, and the relative ease of using modern sophisticated recording equipment ( Caldwell & Atwal, 2005 ; Heacock, Souder, & Chastain, 1996 ). The appeal of this method needs to be balanced, however, by an informed approach to reliability issues that include those universal to all observation methods and those unique to video recording.

In this paper, we describe techniques and strategies to ensure the reliability of behavioral data obtained via video recording. We emphasize non-technical factors influencing the reliability of such recordings. Our discussion is based on our collective experiences conducting the following studies involving three different subject populations and settings: Improving Communication with Non-speaking ICU Patients ( PI: MBH ); Symptom Management, Patient-Caregiver Communication, and ICU Outcomes ( PI: MBH ); Non-pharmacological Interventions for Behavioral Symptoms in Nursing Home Residents with Dementia ( PI: AK ); and Patterns of Stress Reactivity in Low Birth Weight Preterm Infants ( PI: KKH ).

Selecting Video Recording As a Method of Data Collection

The decision to use video recording for data collection should be informed by the purpose and aims of the study as well as the unit of behavior being measured ( Caldwell & Atwal, 2005 ). Video recordings are an excellent source of data that can be used to assess relationships between behaviors that occur in close temporal proximity to one another. For example, data from video recordings have demonstrated relationships among infant handling in the neonatal intensive care unit (NICU), facial expressions of the neonate, and simultaneously collected heart rate variability data ( Haidet, Susman, West, & Marks, 2005 ). Video recording as a means of data capture has also been used in a study focused on testing the relationship between morning-care processes and pain expression in nursing home residents with dementia ( Sloane et al., 2007 ). Video recording, however, may not be appropriate for all types of behavioral observations in clinical settings. The intrusiveness of the methodology may alter naturally occurring behaviors and, thus, limit its appropriateness in certain situations, such as interactions during emotionally-charged events or provision of direct care.

Video recording provides a high degree of reproducibility when measuring observations. Unlike real-time data collection, video recordings can be re-played any number of times. On the other hand, video recording provides only a window of what happens in real time and may lack important contextual data ( Latvala, Vuokila-Oikkonen, & Janhonen, 2000 ). Even with multiple, strategically-placed cameras, it may be difficult to capture the gestalt of a targeted behavioral observation, such as team collaboration during an emergency in a busy clinical setting.

The researcher needs to take all these factors into consideration when planning the study protocol. If the decision is in favor of using video recording for data collection, there are a number of ways to ensure the reliability of those data.

Methods To Improve Raw Data Quality

Participant reactivity is a persistent issue in any observational research, but the use of cameras means the presence of an additional “eye” ( Gross, 1991 ). Reactivity is defined as a response between the researcher and participant during data collection that affects the natural course of behavior as a result of being observed ( Paterson, 1994 ). The “Hawthorne effect” is a classic example of reactivity that can be decreased by exposing participants to longer periods of observation to acclimate them to the presence of the observer. This can be accomplished by conducting a sensitizing session or by analyzing data taped later in a predetermined “middle” segment of the session (e.g., after the first 3-5 minutes) ( Elder, 1999 ). For example, Happ, Sereika, Garrett, and Tate (2008) conducted a sensitizing session simply by collecting one more video recording of nurse-patient interactions in the ICU than necessary for measurement. The researchers did not use the first of five video-recorded sessions for outcome measurement. These strategies enable the participant to move beyond an initial period of self-consciousness and the observer to capture a more representative sample of behavior ( Rosenstein, 2002 ). Alternatively, researchers might minimize participant reactivity by decreasing the presence of the additional “eye” through the use of a smaller or concealed camera (Gross).

Good planning in the observational protocol and training of research assistants are vital. Because of improvements in camera technology and the employment of trained video technicians as research assistants, we have minimal missing or lost data due to poor camera angle, camera malfunction, or poor picture quality. Skilled video technicians can be found within a university, particularly if the institution has film majors. Local technical or art schools may provide suitably skilled personnel. If trained video technicians are not available, even data collectors with limited or no videography skills can be trained to meet study standards because video technology has eliminated the need for advanced skills to obtain high quality data. Training unskilled video data collectors can be accomplished with a checklist of both operational and video quality standards prior to entering the field. We recommend a series of guided practice sessions with the camera and testing to predetermined performance criteria of all research assistants who will be responsible for operating the camera.

The length of video-recorded observation needed will vary depending on the behavioral unit, research questions, and associated measures. A reasonable representation of the behavior of interest can be obtained by sampling either by time or by event ( Casey, 2006 ). Selection of video segments for measurement should be guided by pragmatic and/or conceptually relevant criteria that can be consistently implemented across research participants and data samples. A predetermined time increment (e.g., beginning after the first 5 minutes of filming) or behavioral target (e.g., when dressing activities begin) can be used as the starting point for measurement. For example, surveillance video used to capture a naturally occurring event of interest will be longer than video recordings directed specifically to (and initiated with) a target event. A study examining gait among adults with Parkinson's disease video recorded subjects walking a predetermined distance. Length of video recordings varied by individual subject speed ( Dijkstra, Zijlstra, Scherder, & Kamsma, 2008 ). Measures can be applied to a time sample or series of time samples (e.g., 1 minute of video recording sampled every 10 minutes). Alternatively, measurement may target a specific event within a longer recording, such as a parent's arrival in the child's hospital room ( Dichter, 1999 ). There will likely be greater variability among surveillance video-recordings of hospitalized children's responses to parental visitation than in the preceding example of a planned test of gait.
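The time-sampling scheme described above (for example, 1 minute of video sampled every 10 minutes, starting after the first 5 minutes of filming) can be sketched in a few lines of Python. This is only an illustration: the function name `time_sample_windows` and its parameters are hypothetical, and the defaults simply mirror the examples in the text rather than any study protocol.

```python
def time_sample_windows(total_seconds, start_offset=300, window=60, every=600):
    """Return (start, end) offsets, in seconds, of the video segments to code.

    start_offset: seconds skipped at the beginning (e.g., the first 5 minutes)
    window:       length of each sampled segment (e.g., 1 minute)
    every:        spacing between the starts of consecutive segments
                  (e.g., 10 minutes)
    All names and defaults are illustrative assumptions.
    """
    if window <= 0 or every <= 0:
        raise ValueError("window and every must be positive")
    windows = []
    start = start_offset
    # Keep only segments that fit entirely inside the recording.
    while start + window <= total_seconds:
        windows.append((start, start + window))
        start += every
    return windows


# A 30-minute recording yields three 1-minute segments.
print(time_sample_windows(30 * 60))  # [(300, 360), (900, 960), (1500, 1560)]
```

Fixing the rule in code before coding begins is one way to apply the same selection criteria consistently across participants and data samples.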

Measures of human interaction and communication can be applied to relatively short, dense segments of videotaped observation. In a recent clinical trial, observations of nurse-patient interactions in the ICU were conducted systematically in the morning and afternoon based on the assumption that critical care nurses interact with patients on a regular basis ( Happ et al., 2008 ). This sampling structure also accounted for the likelihood that behaviors may change based on the time of day. Event sampling is appropriate for those phenomena that are less frequently observed, such as bathing procedures in nursing home facilities. Researchers need to carefully define the temporal and spatial boundaries of the phenomena of interest. Once the behavioral unit is established, the next step is to select appropriate measurement instruments.

Use of Observational Tools

Options for quantitative measurement of behaviors from observation span the full range of measurement levels, depending on the tool selected and the needs of the research. For example, researchers may choose to apply a nominal typology or categorization scheme such as “communication styles,” or an ordinal Likert-type scale to rate degree of success ( Happ et al., 2008 ). Behavioral measures can be as simple as frequency counts of the occurrence of a target behavior or a continuous measure of the duration of that behavior ( Roter, Larson, Fischer, Arnold, & Tulsky, 2000 ; Spencer, Coiera, Logan, 2003 ).

The first decision in selection of observational measures is whether the level of measurement requires a microanalytic or macroanalytic approach. Microanalytic approaches can produce fine discrimination between units of analysis and a variety of information, but are very time consuming, resource-intensive, and produce large datasets. Light (1988) recommended microanalysis for single-subject case designs or small-sample studies. Macroanalysis, or analysis of an entire interactional process as a whole, using global measures may be more appropriate for studies evaluating the effectiveness of an intervention.

Observational measures employ predetermined coding or rating schemes to quantify behavior ( Morrison, Phillips, & Chae, 1990 ). Observational tools designed for use with video recording allow researchers to use manualized procedures and decision rules to assign codes to nonverbal behaviors, verbal expressions, or vocal utterances. For example, the Facial Action Coding System (FACS) is a microanalytic system for rating facial movements and emotional facial expressions ( Ekman, Friesen, & Hager, 2002 ). FACS coders receive specialized instruction involving 100 hours of training and practice, with a final competency test and certification as a trained coder ( Description of Facial Action Coding System [FACS], n.d. ). Computer software available for conducting microanalysis of video-recorded behavioral interactions includes Canopus Procoder3™ (Thompson Grass Valley 2008, Inc., Burbank, CA), Noldus™ (Noldus Information Technologies, 2008, Wageningen, The Netherlands), Transana™ (Wisconsin Center for Education Research, University of Wisconsin-Madison), and Studiocode™ (Studiocode Business Group, Warriewood, Australia).

Observational checklist tools intended for use in direct observation can also be applied to video recordings, usually at the macroanalytic level. The QUALCARE Scale ( Phillips, Morrison, & Chae, 1990 ), the Discomfort Scale in Dementia of the Alzheimer's Type (DC-DAT; Hurley, Volicer, Hanrahan, Houde, & Volicer, 1992 ), the Pain Assessment in Advanced Dementia (PAINAD) scale ( Warden, Hurley, & Volicer, 2003 ), and the Premature Infant Pain Profile (PIPP) ( Stevens, Johnston, Petryshen, & Taddio, 1996 ) are examples of such observational checklists. The QUALCARE Scale was developed for direct measurement of physical, psychological, and environmental dimensions of caregiving for dependent older adults (Phillips et al.). The DC-DAT measures discomfort (Hurley et al.), and the PAINAD measures pain by observation of nonverbal patients with advanced Alzheimer's disease (Warden et al.). The PIPP was designed to measure the physiological and behavioral indicators of pain in premature infants (Stevens et al.). Video exemplars were used to train coders in the use of the DC-DAT (Hurley et al.), QUALCARE Scale ( Morrison et al., 1990 ), and the PIPP ( Stevens, 1999 ).

Not all direct observation tools can be used with video data. This is particularly true of instruments that require the observer to capture contextual observations. For example, one subscale of the Passivity in Dementia Scale (PDS) is interaction with the environment ( Colling, 1999 ), but it is often impossible to see this interaction through the lens of a camera (e.g., a resident responding to an off-camera resident). One of us (A.K.) found that the PDS subscale had low reliability when used with video data in contrast to real-time observation. This experience highlights the importance of pilot testing instruments that will be used to code video data and of computing their reliability coefficients when used with video data so that appropriate selection of instruments can be made.

When measuring phenomena for which there are no established observational tools, researchers may choose to create adaptations of checklist tools that are typically applied by clinicians or self-rated by patients. For example, items from the revised Condensed-Memorial Symptom Assessment Scale (C-MSAS; Chang, Hwang, Feuerman, Kasimis, & Thaler, 2000 ) are currently being applied to video observations of nurse-patient communication in the ICU to measure symptom assessment and management (K24-NR010244). Nelson et al. (2001) revised the C-MSAS for use with nonspeaking critically ill patients. In this revision, patients endorse the presence or absence of each symptom on a checklist of 14 physical and emotional symptoms (e.g., shortness of breath, pain, thirst, worry) followed by rating the level of distress from each physical symptom or frequency of each emotional symptom experienced. In applying this symptom checklist to video observation of nurse-patient communication, only symptom presence as identified in the observed communication interaction is counted. Judgments about symptom distress or intensity are not made from the video recording. Although the previously established symptom constructs are foundational to the application of the C-MSAS checklist to video observation, new psychometric testing should be conducted for this application. In conjunction with employing appropriate observational tools for measurement, it is imperative to establish an effective training regimen for video coders.

Training, Re-training, and Retaining Video Coders

In order to achieve acceptable levels of reliability between coders, a standardized training procedure should be implemented to provide instruction on the use of instruments and the video coding process ( Castorr et al., 1990 ). We developed detailed training manuals that provide specific instructions for using each instrument. The training includes operational definitions of terms and specific interpretation of the behaviors to be rated. Training reduces variability in scoring by minimizing personal interpretation ( Curyto, Van Haitsma, & Vriesman, 2008 ; Washington & Moss, 1988 ) and the effects of prior experience with the study population ( Kaasa, Wessel, Darrah, & Bruera, 2000 ).

Some projects do not require any specific credentials or experience for video coders. In fact, naïve coders are often the best research assistants because they may not have preconceived notions about which behaviors are “appropriate” in specific situations, and would, therefore, be more likely to note these behaviors. In other cases, clinical experience would be an asset, if not a necessity, when coding highly technical procedures ( Treloar et al., 2008 ). Regardless of background, research assistants need detailed study information to achieve reliable performance in the field ( Cambron & Evans, 2003 ). The content and length of training programs will vary according to the complexity of the protocol and the reliability of the instruments used to code data. In general, instruments with lower reliability coefficients will require that video coders have more training in their use before acceptable levels of reliability are achieved.

We begin our training by discussing the difference between an objective behavioral observation and a behavioral inference. We illustrate this critical difference by describing situations in which the context could influence coders' rating of behaviors: for example, inferring that a positive affective behavior is being expressed by a subject who is sitting next to individuals who are smiling and laughing, without noting the actual facial expression of the subject. Once this concept is grasped, coders commit to memory the behaviors and the definitions of behaviors that are included on each instrument they use. Additionally, we instruct our coders to access the data collection manuals we develop that describe in detail the behavioral units we are measuring, and we include short definitions of these behaviors on our data collection forms as memory prompts.

We then practice using each instrument with actual video data from the project. This is initially done in a group setting so we can discuss differences across coders. When coders feel confident in identifying behaviors, we ask them to rate video data individually and compare their ratings to a pre-established gold standard. Practice continues until coders achieve 80% agreement with the gold standard. The cut point for competency reliability (≥ .80) is commonly used as acceptable inter-rater reliability in research involving observational coding from videos ( de los Rios Castillo & Sosa, 2002 ; Morse, Beres, Spiers, Mayan, & Olson, 2003 ; Topf, 1986 ); however, some researchers use >70% inter-observer agreement as acceptable for new instruments ( Le May & Redfern, 1987 ).
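The comparison against a gold standard can be illustrated with a simple percent-agreement calculation. The sketch below assumes that each coder and the gold standard assign exactly one code to each rated unit (for instance, one code per video segment); the function names and the example codes are hypothetical and are not taken from our training manuals.

```python
def percent_agreement(coder_codes, gold_codes):
    """Proportion of rated units on which the coder matches the gold standard."""
    if len(coder_codes) != len(gold_codes):
        raise ValueError("both sequences must rate the same units")
    if not gold_codes:
        raise ValueError("at least one rated unit is required")
    matches = sum(c == g for c, g in zip(coder_codes, gold_codes))
    return matches / len(gold_codes)


def is_competent(coder_codes, gold_codes, threshold=0.80):
    """True once agreement reaches the competency cut point (default .80)."""
    return percent_agreement(coder_codes, gold_codes) >= threshold


# Hypothetical practice session: five segments, one disagreement.
gold = ["smile", "neutral", "frown", "neutral", "smile"]
coder = ["smile", "neutral", "neutral", "neutral", "smile"]
print(percent_agreement(coder, gold))  # 0.8
print(is_competent(coder, gold))       # True
```

Percent agreement does not correct for agreement that would occur by chance, which is one reason chance-corrected coefficients are also reported.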

Across all studies, we train video coders to avoid fatigue when coding tapes and to take frequent breaks to counteract drift. Coders are also asked to avoid coding tapes on days when they are emotionally upset or ill, as their own emotional state can influence the behavioral observations they make. To avoid intra-rating bias during video coding, we assign different coders to rate different behaviors in the same subject. Calibration reliability checks are completed on 10% of recordings throughout the project on each video coder. If reliability falls below 80%, retraining takes place. In one of our projects, routine booster sessions are conducted in which all raters are brought together when there is a lapse of 1 month or more in video coding because of slow enrollment. The purpose of these sessions is briefly to review behavioral definitions and their expression in order to prevent low reliability due to loss of rating skill.
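The calibration checks described above could be organized along the following lines. This is a sketch under stated assumptions: the text specifies only that 10% of recordings are checked for each coder and that retraining follows when reliability falls below 80%, so the random selection of recordings, the function names, and the example identifiers are all hypothetical.

```python
import math
import random


def select_calibration_sample(recording_ids, fraction=0.10, seed=None):
    """Pick a subset of one coder's recordings for a reliability check.

    Random selection is an assumption made for illustration; the small
    epsilon guards against floating-point error pushing ceil() up by one.
    """
    ids = list(recording_ids)
    if not ids:
        return []
    n = max(1, math.ceil(len(ids) * fraction - 1e-9))
    rng = random.Random(seed)  # a fixed seed makes the selection reproducible
    return sorted(rng.sample(ids, n))


def coders_needing_retraining(agreement_by_coder, threshold=0.80):
    """Return the coders whose calibration agreement is below the threshold."""
    return sorted(c for c, score in agreement_by_coder.items() if score < threshold)


# Hypothetical example: 40 recordings for one coder, three coders checked.
sample = select_calibration_sample(range(1, 41), seed=1)
print(len(sample))  # 4
print(coders_needing_retraining({"coder_a": 0.91, "coder_b": 0.76, "coder_c": 0.80}))
# ['coder_b']
```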

Because the training and re-training of video coders is so intense in terms of time and labor, we minimize video coder attrition and subsequent fluctuations in reliability by compensating video coders for their training after 6 months of service to the project. We have found this to be a very effective and acceptable method for retention of our well-trained video coders. In addition to the establishment of an effective training protocol and acceptable levels of reliability between video coders, it is important to estimate reliability coefficients using standard methods.

Computation of Reliability Coefficients

In order to establish reliability of the instrument, it is important to measure internal consistency, and consistency of measures across time when rated by different individuals. Use of Cronbach's alpha allows the researcher to estimate the average correlation of each scale item with the other scale items used ( Kerlinger & Lee, 2000 ). The reliability of observation systems is most often defined as agreement among two or more independent raters of behavior, also known as inter-rater reliability (IRR). Kappa-type statistics, coefficient kappa (also known as Cohen's kappa) and intraclass correlation (ICC) are commonly used to quantify IRR ( Hulley, Cummings, Browner, Grady, & Newman, 2007 ).
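As an illustration of the internal-consistency estimate mentioned above, the following sketch computes Cronbach's alpha using the standard variance-based formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The function name and the example ratings are hypothetical; only the Python standard library is used.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a table of ratings.

    scores: list of rows, one row per observation (e.g., per rated video
    segment), with one numeric value per scale item in each row.
    """
    if len(scores) < 2:
        raise ValueError("at least two observations are required")
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two scale items are required")
    if any(len(row) != k for row in scores):
        raise ValueError("every row must have the same number of items")
    items = list(zip(*scores))  # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings on a three-item scale for four observations.
ratings = [[2, 3, 3], [4, 4, 5], [1, 2, 1], [3, 3, 4]]
print(round(cronbach_alpha(ratings), 3))
```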

Kappa is an estimate of the degree of consensus between raters. The calculated value will be in the range of -1 to +1 ( Landis & Koch, 1977 ). If the raters are in perfect agreement, kappa equals 1, in contrast to perfect disagreement between raters where kappa equals -1. A kappa of zero indicates that the observed agreement between raters is equal to the agreement expected by chance. An acceptable coefficient kappa (good agreement) would be a value above .75, moderate agreement is between .4 – .75, and low agreement is below .4 ( Bellieni et al., 2007 ; Laschinger, 1992 ). Kappa is calculated using the following equation:

$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

where $P_o$ is the observed proportion of agreement between raters and $P_e$ is the proportion of agreement expected by chance.

Coefficient kappa allows for quantification of IRR as well as rater consistency, and provides a more precise determination of the reliability estimate than a simple correlation coefficient ( Hulley et al., 2007 ).
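A minimal sketch of the calculation for two raters follows. It uses the standard definition of Cohen's kappa, (P_o - P_e) / (1 - P_e), in which P_o is the observed proportion of agreement and P_e is the agreement expected by chance from each rater's marginal code frequencies. The function names and example codes are hypothetical; the interpretation bands are the ones cited in this section (above .75, .4 to .75, below .4).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one nominal code per unit."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("raters must code the same non-empty set of units")
    # Observed agreement: share of units given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per code.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[code] * count_b[code] for code in count_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def interpret_kappa(kappa):
    """Label a kappa value using the bands cited in the text."""
    if kappa > 0.75:
        return "good"
    if kappa >= 0.4:
        return "moderate"
    return "low"


# Hypothetical codes for ten video segments ("y" = behavior present).
a = ["y", "y", "y", "y", "y", "n", "n", "n", "n", "n"]
b = ["y", "y", "y", "y", "n", "n", "n", "n", "n", "y"]
k = cohens_kappa(a, b)
print(round(k, 2), interpret_kappa(k))  # 0.6 moderate
```

In this example the raters agree on 8 of 10 segments (P_o = .80), but half of that agreement would be expected by chance (P_e = .50), so kappa is .60 rather than .80.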

Reliable Video Coding in Special Populations

In addition to general issues for improving the reliability of video-recorded behavioral data, there are specific issues that relate to special populations.

Critically Ill Patients

There are special conditions to consider when coding data from critically ill and non-speaking participants. In the case of video-recorded communication interactions with intensive care unit (ICU) patients, several factors contribute to the complexity of the task.

An initial challenge of analyzing video recordings in critical care is to distinguish which of the participants is speaking due to camera angles that may obscure a visual record of the speaker, co-occurring conversations, and the effect of environmental noise. These environmental noises can be a radio, a television set, family members sitting in the room, clinicians outside of the room, equipment noises or monitor alarms. Yet, it is very important to listen for these environmental noises, even if this means listening to the audio several times and in smaller segments, because patients sometimes react to them. For example, a patient in the Study of Patient-Nurse Effectiveness with Assisted Communication Strategies (SPEACS) study reacted to a news current event on the television by mouthing words, but his conversational message was misinterpreted by the nurse who was concerned with the patient's immediate physical comfort needs. Data coders reviewed the video many times to accurately lip read the patient's message using the context of the background noise of the television news program to aid interpretation.

Critically ill patients tend to have oral endotracheal tubes or tracheostomy tubes preventing speech. The tube and fixation tape mask the participant's mouth movements, making it difficult to read the patient's lips. Beards can also contribute to the distortion of oral movements. Furthermore, when participants no longer have their own teeth or exhibit oral tremors, the enunciation of words may be affected, adding an additional layer of difficulty to the analysis.

In addition to mouthing words as a means of communicating, critically ill patients tend to gesture. The patient's ability to use upper motor movements is not always precise; much of the time, movements are gross. Patients' arms and hands can be swollen, creating difficulty in deciphering the gestures. The coder must discern whether the patient is truly intending to use voluntary gestural communication or whether the movement is involuntary. The wrists of ICU patients may be restrained, which prevents, limits, or distorts the gesture for communication purposes. Furthermore, the position of the patient (in the bed or a bedside chair), blankets on the patient, and bed rails can also hinder or obstruct the patient's gestures.

Despite these barriers to the interpretation of patient communication in the ICU, videography offers a unique and powerful tool for recording communicative behaviors among nonspeaking critically ill patients. The ability to pause, replay, and study the interaction permits a level of understanding unavailable during real-time observation. We recommend the use of close-up camera shots and wide angle lenses, hand-held cameras to follow the action/actors, and directional microphones to optimize the recording quality. Data collection protocols that reduce extraneous background noise before the start of recording sessions should be considered: for example, muting the television, closing the door, and posting a “video recording in progress” sign on the door to discourage interruptions. Finally, because critically ill patients can have dramatic fluctuations in illness severity, cognition, and attention, we recommend that researchers always consider measures of illness severity or acuity and delirium for use in describing the sample, eligibility screening, and/or as covariates.

Nursing Home Residents with Dementia

A second program of research focused on interventions for improving the behavioral symptoms of nursing home residents with dementia. Important outcomes in this work are displays of both positive and negative affect. A growing literature documents that persons with dementia experience a full range of emotions and not just the more negative ones of anger, anxiety or sadness ( Cotrell & Hooker, 2005 ; Hubbard, Downs, & Tester, 2003 ; Kolanowski, Hoffman, & Hofer, 2007 ). Video coders, however, often have trouble distinguishing normal age changes or changes brought on by chronic conditions, medications, or loss of dentition from facial, voice, and postural indicators of negative affect. It is essential that video coders be trained to differentiate, for example, the stooped posture resulting from degenerative arthritis from the stooped shoulders indicating depression; the wrinkled, loose skin around the eyes and horizontal furrows in the forehead due to gravitational effects of aging from the sagging facial muscles and horseshoe furrows in the forehead due to sadness; or, the loud, coarse voice quality due to hearing loss from the increased voice volume indicative of anger ( Ekman & Rosenberg, 1997 ). Although it seems intuitive that video recording coupled with blinded video coders would eliminate bias related to familiarity with the subject, the gain in objectivity may be at the expense of inaccuracy in rating. A baseline period is critical to help video coders differentiate the behavioral signs of affect from the age and disease changes typical in older adults with dementia.

Because fluctuations in affect and other behavioral outcomes are related to cognitive status, it is important to describe the sample along these characteristics. We have also found that variability in displayed negative affect (but not positive affect) increases with increasing severity of disease ( Kolanowski et al., 2007 ). This necessitates more intense measurement models (more frequent collection of data) to capture accurately the day-to-day variability in affect in this population.

Preterm Infants in Neonatal Intensive Care

A third program of research focused on the integration of biological and behavioral responses of preterm infants to environmental stressors associated with nursing care in the NICU. The goal is to investigate patterns of early stress response and stress recovery to determine the extent to which individual patterns of stress response are predictive of negative short-term health consequences. A growing literature supports the use of behavioral indicators for pain/stress measurement in preverbal infants and young children ( Ballantyne, Stevens, McAllister, Dionne, & Jack, 1999 ; Gibbins et al., 2008 ; Grunau & Oberlander, 1998 ; Hunt et al., 2004 ; Hunt et al., 2007 ; Peters et al., 2003 ).

The neonatal facial coding system (NFCS) is a behavioral rating scale with demonstrated clinical validity as a measure of pain/stress in infants ( Grunau & Oberlander, 1998 ). The measure consists of 9 distinct facial dimensions defined by upper and lower facial muscle activity. The activities include the following: brow bulge, eye squeeze, nasolabial furrow, open lips, vertical mouth stretch, horizontal mouth stretch, lip purse, taut tongue and chin quiver (Grunau & Oberlander). In the preterm infant, the lower facial muscles develop later than the upper facial muscles; there is a normal progression of development from head to toe. Thus, the preterm infant with limited lower facial muscle development most commonly displays only the upper facial activities (brow bulge, eye squeeze, and nasolabial furrow) during stress or pain evoking events ( Peters et al., 2003 ). Infant behavioral states (quiet sleep, active sleep, transition, quiet awake, active awake) as well as current level of illness are important covariates that may contribute to a diminution or robustness of behavioral responses. We recommend that researchers document behavioral state and acuity of illness at the time of observation.

The Premature Infant Pain Profile (PIPP) is a comprehensive pain/stress scale that includes both behavioral and physiological parameters and has established validity and reliability for use in preterm infants ( Bellieni et al., 2007 ; Stevens, 1999 ). The PIPP instrument consists of seven indicators, each scored on a 4-point scale. Indicators include the following: gestational age, behavioral state, heart rate and oxygen saturation responses, and upper facial behavioral responses (brow bulge, eye squeeze, nasolabial furrow; Stevens et al., 1996 ). To determine responses to stimuli, behaviors are recorded during baseline, stimulus, and recovery times. Reliability for facial coding can be established by determining the percentage of agreement per occurrence for each facial activity within 2-minute epochs ( Gibbins et al., 2008 ). Because gestational age is the important contextual indicator for rating distress in preterm infants, it is critical that video coders using PIPP are instructed on the key developmental differences between infants of varied gestational ages (Gibbins et al.). Attention should be directed at establishing intra- and inter-rater agreement for evaluating infants within each of the preterm gestational age categories under observational study (< 27 weeks, 27-29 weeks, 30-33 weeks and 34-36 weeks gestation).
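Agreement per occurrence for each facial activity can be sketched as follows. The sketch assumes one common definition of occurrence agreement: among the epochs in which at least one of two raters scored the activity as present, the proportion in which both did. The cited studies may have computed agreement differently, and the function names and example data are hypothetical.

```python
def occurrence_agreement(rater_a, rater_b):
    """Occurrence agreement for one facial activity across epochs.

    rater_a, rater_b: sequences of booleans, one per epoch (e.g., per
    2-minute epoch), True when the rater scored the activity as present.
    Returns None when neither rater scored any occurrence.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must score the same epochs")
    both = sum(1 for a, b in zip(rater_a, rater_b) if a and b)
    either = sum(1 for a, b in zip(rater_a, rater_b) if a or b)
    if either == 0:
        return None
    return both / either


def agreement_by_activity(scores_a, scores_b):
    """Apply occurrence_agreement to every activity in two raters' scores."""
    return {
        activity: occurrence_agreement(scores_a[activity], scores_b[activity])
        for activity in scores_a
    }


# Hypothetical scores for the three upper facial activities over five epochs.
rater_1 = {
    "brow_bulge": [True, True, False, False, True],
    "eye_squeeze": [True, False, False, False, False],
    "nasolabial_furrow": [False, False, False, False, False],
}
rater_2 = {
    "brow_bulge": [True, False, False, False, True],
    "eye_squeeze": [True, False, False, False, False],
    "nasolabial_furrow": [False, False, False, False, False],
}
print(agreement_by_activity(rater_1, rater_2))
```

Computing the value separately for each gestational age category would follow the same pattern, with the scores grouped by category before the function is applied.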

The use of video technology in behavioral research offers important advantages to nurse scientists in assessing complex behaviors and relationships between behaviors. The reliability of data obtained using this method needs to be weighed against the reliability of using real-time observations. Video recordings of behavioral responses are preferable because they afford the rater the ability to freeze frame and replay data for review and coding of behavior, thus enhancing reliability. An additional advantage of video-recorded behavioral observations over real-time observations is the ability of the coder to take breaks as needed and minimize problems associated with observer fatigue and drift. Video-recorded behavioral data also facilitate independent rating of responses by a coder who can be blinded to the scoring of other coders, reducing intra-rater bias. In addition, video recording provides an alternative to real-time observation when complex multi-level systems comprise the behavioral units, as with complicated physiological and behavioral scoring systems where managing all the data at once in real time would be difficult. Further, this method allows for the training of naïve observers without clinical expertise and, thus, allows rating to be done without preconceived judgment based solely on clinical knowledge.

The limitations associated with the use of video recording in behavioral studies include intrusiveness of the equipment, potential for higher participant reactivity, and potential loss of the larger environmental context outside the view of the lens. Researchers can minimize the difficulties associated with these limitations by using expert technicians to set up and manage the equipment and by employing additional strategies to capture the broader environmental context.

In summary, when the decision has been made to use video recording as a means to collect behavioral data, researchers need to give careful consideration to factors that enhance raw data quality, the selection of appropriate observational tools to use with video data, the development of a strong training program for video coders that includes special consideration of the population studied, and the methods used for calculation of reliability coefficients. An informed approach to video recording as a method of data collection will enhance the quality of data obtained and the findings that ultimately inform nursing practice.

Acknowledgments

Kim Kopenhaver Haidet was supported by a Johnson & Johnson Health Behaviors and Quality of Life: 2006-2007 grant, Autonomic and Behavioral Stress Responses in Low Birth Weight Preterm Infants. Ann Kolanowski was supported by a grant from the National Institute of Nursing Research (R01 NR008910), A Prescription For Enhancing Resident Quality of Life. Mary Beth Happ was supported by NINR (K24 NR010244) Symptom Management, Patient-Caregiver Communication, and Outcomes in ICU; NICHD (R01 HD042988) Improving Communication with NonSpeaking ICU Patients.

Contributor Information

Kim Kopenhaver Haidet, School of Nursing, 307 Health & Human Development East, The Pennsylvania State University, University Park, PA 16802.

Judith Tate, School of Nursing, University of Pittsburgh, Pittsburgh, PA.

Dana Divirgilio-Thomas, School of Nursing, University of Pittsburgh.

Ann Kolanowski, School of Nursing, The Pennsylvania State University, University Park, PA.

Mary Beth Happ, School of Nursing, University of Pittsburgh.

References

- Ballantyne M, Stevens B, McAllister M, Dionne K, Jack A. Validation of the Premature Infant Pain Profile in the clinical setting. Clinical Journal of Pain. 1999; 15(4):297–303.

- Bellieni CV, Cordelli DM, Caliani C, Palazzi C, Franci N, Perrone S, et al. Inter-observer reliability of two pain scales for newborns. Early Human Development. 2007; 83(8):549–552.

- Caldwell K, Atwal A. Non-participant observation: Using video tapes to collect data in nursing research. Nurse Researcher. 2005; 13(2):42–54.

- Cambron JA, Evans R. Research assistants' perspective of clinical trials: Results of a focus group. Journal of Manipulative & Physiological Therapeutics. 2003; 26(5):287–292.

- Casey D. Choosing an appropriate method of data collection. Nurse Researcher. 2006; 13(3):75–92.

- Castorr AH, Thompson KO, Ryan JW, Phillips CY, Prescott PA, Soeken KL. The process of rater training for observational instruments: Implications for interrater reliability. Research in Nursing & Health. 1990; 13(5):311–318.

- Chang VT, Hwang SS, Feuerman M, Kasimis BS, Thaler HT. The memorial symptom assessment scale short form (MSAS-SF). Cancer. 2000; 89(5):1162–1171.

- Colling KB. Passive behaviors in dementia: Clinical application of the need-driven dementia-compromised behavior model. Journal of Gerontological Nursing. 1999; 25(9):27–32.

- Cotrell V, Hooker K. Possible selves of individuals with Alzheimer's disease. Psychology and Aging. 2005; 20(2):285–294.

- Curyto KJK, Van Haitsma K, Vriesman DK. Direct observation of behavior: A review of current measures for use with older adults with dementia. Research in Gerontological Nursing. 2008; 1(1):1–26.

- de los Rios Castillo J, Sosa JJS. Well-being and medical recovery in the critical care unit: The role of the nurse-patient interaction. Salud Mental. 2002; 25(2):21–31.

- Description of Facial Action Coding System (FACS). n.d. Retrieved March 19, 2009, from http://face-and-emotion.com/dataface/facs/description.jsp

- Dichter CH. Toddler's responses to parental presence in the pediatric intensive care unit (Doctoral dissertation, University of Pennsylvania, 1999). Dissertation Abstracts International. 1999; 59:5784.

- Dijkstra B, Zijlstra W, Scherder E, Kamsma Y. Detection of walking periods and number of steps in older adults and patients with Parkinson's disease: Accuracy of a pedometer and an accelerometry-based method. Age and Ageing. 2008; 37(4):436–441.

- Ekman P, Friesen WV, Hager JC. The Facial Action Coding System. 2nd ed. Salt Lake City, UT: Research Nexus eBook; 2002.

- Ekman P, Rosenberg E. What the face reveals: Basic and applied studies of spontaneous expression using the Facial Action Coding System (FACS). New York: Oxford University Press; 1997.

- Elder JH. Videotaped behavioral observations: Enhancing validity and reliability. Applied Nursing Research. 1999; 12(4):206–209.

- Gibbins S, Stevens B, Beyene J, Chan PC, Bagg M, Asztalos E. Pain behaviours in extremely low gestational age infants. Early Human Development. 2008; 84(7):451–458.

- Gross D. Issues related to validity of videotaped observational data. Western Journal of Nursing Research. 1991; 12(5):658–663.

- Grunau RE, Oberlander T. Bedside application of the Neonatal Facial Coding System in pain assessment of premature neonates. Pain. 1998; 76(3):277–286.

- Haidet KK, Susman EJ, West SG, Marks KH. Biobehavioral responses to handling in preterm infants. Journal of Pediatric Nursing. 2005; 20(2):128.

- Happ MB, Sereika S, Garrett K, Tate JA. Use of quasi-experimental sequential cohort design in the Study of Patient-Nurse Effectiveness with Assisted Communication Strategies (SPEACS). Contemporary Clinical Trials. 2008; 29(5):801–808.

- Heacock P, Souder E, Chastain J. Subjects, data, and videotapes. Nursing Research. 1996; 45(6):336–338.

- Hubbard G, Downs MG, Tester S. Including older people with dementia in research: Challenges and strategies. Aging and Mental Health. 2003; 7(5):351–362.

- Hulley SB, Cummings SR, Browner WS, Grady DG, Newman TB. Designing clinical research. 3rd ed. Philadelphia: Lippincott, Williams & Wilkins; 2007.

- Hunt AA, Goldman A, Mastroyannopoulou K, Moffat V, Oulton K, Brady M. Clinical validation of the paediatric pain profile. Developmental Medicine & Child Neurology. 2004; 46(1):9–18.

- Hunt AA, Wisbeach A, Seers K, Goldman A, Crichton N, Perry L, et al. Development of the paediatric pain profile: Role of video analysis and saliva cortisol in validating a tool to assess pain in children with severe neurological disability. Journal of Pain and Symptom Management. 2007; 33(3):276–289.

- Hurley AC, Volicer BJ, Hanrahan PA, Houde S, Volicer L. Assessment of discomfort in advanced Alzheimer patients. Research in Nursing & Health. 1992; 15(5):369–377.

- Kaasa T, Wessel J, Darrah J, Bruera E. Inter-rater reliability of formally trained and self-trained raters using the Edmonton Functional Assessment Tool. Palliative Medicine. 2000; 14(6):509–517.

- Kerlinger FN, Lee HB. Foundations of behavioral research. Boston: Cengage Thomson Learning; 2000.

- Kolanowski A, Hoffman L, Hofer S. Concordance of self-report and informant assessment of emotional well-being in nursing home residents with dementia. The Journal of Gerontology: Psychological Sciences. 2007; 62B(1):20–27.

- Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977; 33(1):159–174.

- Laschinger HK. Intraclass correlations as estimates of interrater reliability in nursing research. Western Journal of Nursing Research. 1992; 14(2):246–251.

- Latvala E, Vuokila-Oikkonen P, Janhonen S. Videotaped recordings as a method of participant observation in psychiatric nursing research. Journal of Advanced Nursing. 2000; 31(5):1252–1257.

- Le May AC, Redfern SJ. A study of non-verbal communication between nurses and elderly patients. In: Fielding P, editor. Research in the nursing care of elderly people. Location: Wiley; 1987. pp. 171–189.

- Light J. Interaction involving individuals using augmentative and alternative communication systems: State of the art and future directions. Augmentative and Alternative Communication. 1988; 4(2):66–82.

- Morrison EF, Phillips LR, Chae YM. The development and use of observational measurement scales. Applied Nursing Research. 1990; 3(2):73–86.

- Morse JM, Beres MA, Spiers JA, Mayan M, Olson K. Identifying signals of suffering by linking verbal and facial cues. Qualitative Health Research. 2003; 13(8):1063–1077.

- Nelson JE, Meier DE, Oei EJ, Nierman DM, Senzel RS, Manfredi PL, et al. Self-reported symptom experience of critically ill cancer patients receiving intensive care. Critical Care Medicine. 2001; 29(2):277–282.

- Paterson BL. A framework to identify reactivity in qualitative research. Western Journal of Nursing Research. 1994; 16(3):301–316.

- Peters JW, Koot HM, Grunau RE, de Boer J, van Druenen MJ, Tibboel D, et al. Neonatal Facial Coding System for assessing postoperative pain in infants: Item reduction is valid and feasible. The Clinical Journal of Pain. 2003; 19(6):353–363.

- Phillips LR, Morrison EF, Chae YM. The QUALCARE Scale: Developing an instrument to measure quality of home care. International Journal of Nursing Studies. 1990; 27(1):61–75.

- Rosenstein B. Video use in social science research and program evaluation. International Journal of Qualitative Methods. 2002; 1(3):22–43.

- Roter DL, Larson S, Fischer GS, Arnold RM, Tulsky JA. Experts practice what they preach: A descriptive study of best and normative practices in end-of-life discussions. Archives of Internal Medicine. 2000; 160(22):3477–3485.

- Sloane P, Miller L, Mitchell C, Rader J, Swafford K, Hiatt S. Provision of morning care to nursing home residents with dementia: opportunity for improvement? American Journal of Alzheimer's Disease and Other Dementias. 2007; 22(5):369–377.

- Spencer R, Coiera E, Logan P. Variation in communication loads on clinical staff in the Emergency Department. Annals of Emergency Medicine. 2003; 44(3):268–273.

- Stevens B. Pain in infants. In: McCaffery M, Pasero C, editors. Pain: Clinical manual. St. Louis, MO: Mosby; 1999. pp. 626–669.

- Stevens B, Johnston C, Petryshen P, Taddio A. Premature Infant Pain Profile: Development and initial validation. Clinical Journal of Pain. 1996; 12(1):13–22.

- Topf M. Three estimates of inter-rater reliability for nominal data. Nursing Research. 1986; 35(4):253–255.

- Treloar C, Laybutt B, Jauncey M, vanBeek I, Lodge M, Malpas G, et al. Broadening discussions of “safe” in hepatitis C prevention: A close up of swabbing in an analysis of video recordings of injecting practice. International Journal of Drug Policy. 2008; 19(1):59–65.

- Warden V, Hurley AC, Volicer L. Development and psychometric evaluation of the Pain Assessment in Advanced Dementia (PAINAD) scale. Journal of the American Medical Directors Association. 2003; 4(1):9–15.

- Washington C, Moss M. Pragmatic aspects of establishing interrater reliability in research. Nursing Research. 1988; 37(3):190–191.

Why use video in qualitative research.

If you or your team are running qualitative research, you may wonder if it’s useful to use video as a data source. We give you insights on the use of video in qualitative research and how best to use this data format.

What is video in qualitative research?

One of the major challenges and opportunities for organizations today is gaining a deeper and more authentic understanding of their customers.

From concerns and feedback to approval and advocacy, uncovering customer sentiment, needs and expectations is what will empower organizations to take the next step in designing, developing and improving experiences.

Most of this starts with qualitative research — e.g. focus groups, interviews, ethnographic studies — but the fundamental problem is that traditional approaches are sometimes restrictive, costly and difficult to scale.

The challenges of traditional and disparate qualitative research

Slow: Long time to value while teams or agencies scope, recruit, design, build, execute, analyze & report out on studies. It also takes a long time to sift through qualitative data (e.g. video) and turn it into insights at scale.

Expensive: Qualitative methods of research can be costly, both when outsourcing to agencies or managing the process in-house. Organizations also have to factor in participant management costs (travel, hotels, venues, etc.).

Siloed: Data and research tools are outdated, siloed and scattered across teams and vendors, resulting in valuable data loss; no centralized approach to executing studies and incorporating customer/market insights across teams to support multiple initiatives. It also makes it hard to perform meta-analysis (e.g. perform analysis across multiple studies & methods).

Hard to scale: Requires a lot of manual analysis; lack of in-house research & insights expertise due to cost of hiring/maintaining internal teams; heavy reliance on expertise of consultants to deliver quality insights; data from studies only used for initial research question, when it could be used by other teams.

Of course, while some organizations are using qualitative research, most rely heavily on in-person methods, manual work and a high degree of expertise to run these studies, meaning it’s near impossible to execute on data without these three things.

Alternative options that many organizations rely on:

- Agencies: Services-dependent with either an in-person or digital collection process, usually performed in a moderation style. Are slow, expensive, and result in loss of IP. Recruitment for interviews and discussion/diary studies can take a couple weeks or more. Reporting takes another couple weeks.

- In-person: Results in loss of efficiency & IP; data can’t be leveraged in concert with quantitative data easily; data can’t be reused. Very labor intensive to review and analyze all content.

- Point solutions: Technology providers for collecting synchronous or asynchronous video feedback. Can be either moderated or unprompted. Disconnected from other research and teams that could benefit from data and insights.

- Traditional qualitative studies: Organizations typically depend on traditional studies like focus groups, personal interviews, phone calls, etc.

The reality is that the world has changed: the pandemic, coupled with the emergence of widespread remote and hybrid working, has forced researchers to move their efforts online.

Consequently, many organizations have turned to video feedback, a cost-effective type of qualitative research that can support existing methods. And it’s paying massive dividends.

History of video in qualitative research

Video in qualitative research is nothing new, and organizations have been using this response-gathering method for years to collect more authentic data from customers at scale.

It’s long been viewed as a medium through which an audiovisual record of everyday events, such as people shopping or students learning, is preserved and later made available for closer scrutiny, replay and analysis. In simple terms, video offers a window through which researchers can view more authentic and specific situations, interactions and feedback to uncover deeper and more meaningful insights.

But with the rapid shift to digital, video has quickly become a popular type of supplementary qualitative research and is often used to support existing qualitative efforts. Plus, the growing ubiquity of video recording technologies (Zoom, Skype, Teams) has made it even easier for researchers to acquire the data they need in streamlined and more effective ways.

Video is effective partly because recording is as simple as pressing a button on a mobile phone. Plus, the affordability of high-quality technical equipment, e.g. wearable microphones, and superior camera quality compared to other forms of digital recording have made video a strong supplementary component of qualitative research.

As a result, video gives respondents the freedom and flexibility to respond at their leisure, removing time constraints, while increasingly high-grade smartphone technology helps to mitigate costs and increase scale.

And the best part? Organizations can get more actionable insights from data around the clock, and that data can be utilized for as long as necessary.

Types of video qualitative research methods

In 2016, university researcher Rebecca Whiting, in ‘Who’s Behind the Lens?: A Reflexive Analysis of Roles in Participatory Video Research’, described the four types of video research methods that are now available:

- “Participatory video research, which uses participant-generated videos, such as video diaries,

- Videography, which entails filming people in the field as a way to document their activities,

- Video content analysis, which involves analysis of material not recorded by the researcher, or

- Video elicitation, which uses footage (either created for this purpose by the researcher, or extant video) to prompt discussion.”

What’s increasingly clear is that as technology advances and cloud-based platforms provide video streaming and capture services that are increasingly accessible, researchers now have an always-on, scalable and highly effective way to capture feedback from diverse audiences.

With video feedback, researchers can get to the “why” far faster than ever before. The scope of video in qualitative research has never been wider. Let’s take a closer look at what it can do for organizations.

Scope of video in qualitative research

There are several benefits to using video feedback in qualitative research studies, namely:

- More verifiability and less researcher bias – If a video record exists of a conversation, it’s possible to review.

- More authentic responses – In an open-ended survey, some participants may feel able to describe their views better on film than by translating them into text. This often results in them providing more “content” (or insight) than they would have done via a traditional survey or interview.

- Perception of being faster – The perceived immediacy and speed of giving feedback by video may appeal to people with less time, who otherwise may not have responded to the survey.

- Language support – The option for people to answer in their own language can help participants open up about topics in a way that writing down their thoughts may not allow for.

- Provides visual cues – Videos can provide context and additional information if the participant provides visual cues like body language or showing something physical to the camera.

- Video has some benefits over audio recordings and field notes – A working paper from the National Centre for Research Methods found three benefits: “1) its character as a real-time sequential record; 2) a fine-grained multimodal record; and 3) its durability, malleable, and share-ability.” [1]

- Empowers every team to carry out and act on insight — With video responses available for all teams to view and utilize, it becomes significantly easier for teams to act on insights and make critical changes to their experience initiatives.

How can Qualtrics help with video in qualitative research?

The Qualtrics Video Feedback solution is a purpose-built addition to the Qualtrics products that helps businesses manage their video feedback and insights, right alongside traditional survey data types.

It brings insights to life by making it easy for respondents to deliver their thoughts and feelings through a medium they’re familiar and comfortable with, resulting in 6x more content than traditional open feedback.

As well as this, Qualtrics Video Feedback features built-in AI-powered analytics that enable researchers to analyze and pull sentence-level topics and sentiment from video responses to see exactly how respondents feel at scale.

Finally, customizable video editing allows researchers to compile and showcase the best clips to tell the story of the data, helping to deliver a more authentic narrative that lands with teams and key stakeholders.

Qualtrics Video Feedback provides:

- End-to-end research: Conduct all types of video feedback research with point-and-click question types, data collection, analytics and reporting, all in the same platform. This means it’s easy to compare and combine qualitative and quantitative research, as well as make insights available to all relevant stakeholders.

- Scalability and security: Easily empower teams outside the research department to conduct their own market research with intuitive tools, guided solutions, and center of excellence capabilities to overcome skill gaps and governance concerns.

- Better, faster insights: As respondents can deliver more authentic responses at their leisure (or at length), you can gather quality insights in hours and days, not weeks or months, and at an unmatched scale.

- Methodology

- Open access

- Published: 28 July 2018

Research as storytelling: the use of video for mixed methods research

- Erica B. Walker (ORCID: orcid.org/0000-0001-9258-3036) &

- D. Matthew Boyer

Video Journal of Education and Pedagogy, volume 3, Article number: 8 (2018)

Mixed methods research commonly uses video as a tool for collecting data and capturing reflections from participants, but it is less common to use video as a means for disseminating results. However, video can be a powerful way to share research findings with a broad audience especially when combining the traditions of ethnography, documentary filmmaking, and storytelling.

Our literature review focused on aspects relating to video within mixed methods research that applied to the perspective presented within this paper: the history, affordances and constraints of using video in research, the application of video within mixed methods design, and the traditions of research as storytelling. We constructed a Mind Map of the current literature to reveal convergent and divergent themes and found that current research focuses on four main properties in regards to video: video as a tool for storytelling/research, properties of the camera/video itself, how video impacts the person/researcher, and methods by which the researcher/viewer consumes video. Through this process, we found that little has been written about how video could be used as a vehicle to present findings of a study.

From this contextual framework and through examples from our own research, we present current and potential roles of video storytelling in mixed methods research. With digital technologies, video can be used within the context of research not only as data and a tool for analysis, but also to present findings and results in an engaging way.

Conclusions

In conclusion, previous research has focused on using video as a tool for data collection and analysis, but there are emerging opportunities for video to play an increased role in mixed methods research as a tool for the presentation of findings. By leveraging storytelling techniques used in documentary film, while staying true to the analytical methods of the research design, researchers can use video to effectively communicate implications of their work to an audience beyond academics and use video storytelling to disseminate findings to the public.

Using motion pictures to support ethnographic research began in the late nineteenth century when both fields were early in their development (Henley, 2010; “Using Film in Ethnographic Field Research - The University of Manchester,” n.d.). While technologies have changed dramatically since the 1890s, researchers are still employing visual media to support social science research. Photographic imagery and video footage can be integral aspects of data collection, analysis, and reporting research studies. As digital cameras have improved in quality, size, and affordability, digital video has become an increasingly useful tool for researchers to gather data, aid in analysis, and present results.

Storytelling, however, has been around much longer than either video or ethnographic research. Using narrative devices to convey a message visually was a staple in the theater of early civilizations and remains an effective tool for engaging an audience today. Within the medium of video, storytelling techniques are an essential part of a documentary filmmaker’s craft. Storytelling can also be a means for researchers to document and present their findings. In addition, multimedia outputs allow for interactions beyond traditional, static text (R. Goldman, 2007; Tobin & Hsueh, 2007). Digital video as a vehicle to share research findings builds on the affordances of film, ethnography, and storytelling to create new avenues for communicating research (Heath, Hindmarsh, & Luff, 2010).

In this study, we look at the current literature regarding the use of video in research and explore how digital video affordances can be applied in the collection and analysis of quantitative and qualitative human subject data. We also investigate how video storytelling can be used for presenting research results. This creates a frame for how data collection and analysis can be crafted to maximize the potential use of video data to create an audiovisual narrative as part of the final deliverables from a study. As researchers we ask the question: have we leveraged the use of video to communicate our work to its fullest potential? By understanding the role of video storytelling, we consider additional ways that video can be used to not only collect and analyze data, but also to present research findings to a broader audience through engaging video storytelling. The intent of this study is to develop a frame that improves our understanding of the theoretical foundations and practical applications of using video in data collection, analysis, and the presentation of research findings.

Literature review

The review of relevant literature includes important aspects for situating this exploration of video research methods: the history, affordances and constraints of using video in research, the use of video in mixed methods design, and the traditions of research as storytelling. Although this overview provides an extensive foundation for understanding video research methods, this is not intended to serve as a meta-analysis of all publications related to video and research methods. Examples of prior work provide a conceptual and operational context for the role of video in mixed methods research and present theoretical and practical insights for engaging in similar studies. Within this context, we examine ethical and logistical/procedural concerns that arise in the design and application of video research methods, as well as the affordances and constraints of integrating video. In the following sections, the frame provided by the literature is used to view practical examples of research using video.

The history of using video in research is founded first in photography, next in film, and, more recently, in digital video. All three tools provide the ability to create instant artifacts of a moment or period of time. These artifacts become data that can be analyzed at a later date, perhaps in a different place and by a different audience, giving researchers the chance to intricately and repeatedly examine the archive of information contained within. These records “enable access to the fine details of conduct and interaction that are unavailable to more traditional social science methods” (Heath et al., 2010, p. 2).

In social science research, video has been used for a range of purposes and accompanies research observation in many situations. For example, in classroom research, video is used to record a teacher in practice and then used as a guide and prompt to interview the teacher as they reflect upon their practice (e.g. Tobin & Hsueh, 2007). Video captures events from a situated perspective, providing a record that “resists, at least in the first instance, reduction to categories or codes, and thus preserves the original record for repeated scrutiny” (Heath et al., 2010, p. 6). In analysis, these audio-visual recordings allow the social science researcher the chance to reflect on their subjectivities throughout analysis and use the video as a microscope that “allow(s) actions to be observed in a detail not even accessible to the actors themselves” (Knoblauch & Tuma, 2011, p. 417).

Examining the affordances and constraints of video in research gives a researcher the opportunity to weigh the value of including video within a study. An affordance of video, when used in research, is that it allows the researcher to see an event through the camera lens either actively or passively and later share what they have seen, or more specifically, the way they saw it (Chalfen, 2011). Cameras can be used to capture an event in three different modes: Responsive, Interactive, and Constructive. Responsive mode is reactive. In this mode, the researcher captures and shows the viewer what is going on in front of the lens but does not directly interfere with the participants or events. Interactive mode puts the filmmaker into the storyline as a participant and allows the viewer to observe the interactions between the researcher and participant. One example of video captured in Interactive mode is an interview. In Constructive mode, the researcher reprocesses the recorded events to create an explicitly interpretive final product through the process of editing the video (MacDougall, 2011). All of these modes, in some way, frame or constrain what is captured and consequently shared with the audience.

Due to the complexity of the classroom-research setting, not everything that happens during a study can be captured using video, observation, or any other medium. Video footage, like observation, is necessarily selective and has been stripped of the full context of the events, but it does provide a more stable tool for reflection than the ever-changing memories of the researcher and participants (Roth, 2007). Decisions regarding inclusion and exclusion are made by the researcher throughout the entire research process, from the initial framing of the footage to the final edit of the video. Members of the research team should acknowledge how personal bias impacts these decisions and make their choices clear in the research protocol to ensure inclusivity (Miller & Zhou, 2007).

One affordance of video research is that analysis of footage can actually disrupt the initial assumptions of a study. Analysis of video can be standardized or even mechanized by seeking out predetermined codes, but it can also disclose the subjective by revealing the meaning behind actions and not just the actions themselves (S. Goldman & McDermott, 2007; Knoblauch & Tuma, 2011). However, when using subjective analysis, the researcher needs to keep in mind that the footage only reveals parts of an event. Ideally, a research team has a member who acts as both a researcher and a filmmaker. That team member can provide an important link between the full context of the event and the narrower viewpoint revealed through the captured footage during the analysis phase.

Although many participants are initially camera-shy, they often find enjoyment in participating in a study that includes video (Tobin & Hsueh, 2007). Video research provides an opportunity for participants to observe themselves and even share their experience with others through viewing and sharing the videos. With increased accessibility of video content online and the ease of sharing videos digitally, it is vital from an ethical and moral perspective that participants understand the study release forms and how their image and words might continue to be used and disseminated for years after the study is completed.

Including video in a research study creates both affordances and constraints regarding the dissemination of results. Finding a journal for a video-based study can be difficult. Traditional journals rely heavily on static text and graphics, but newly created media journals include rich and engaging data such as video and interactive, web-based visualizations (Heath et al., 2010). In addition, videos can provide opportunities for research results to reach a broader audience outside of the traditional research audience through online channels such as YouTube and Vimeo.

Use of mixed methods with video data collection and analysis can complement the design-based, iterative nature of research that includes human participants. Design-based video research allows for both qualitative and quantitative collection and analysis of data throughout the project, as various events are encapsulated for specific examination as well as analyzed comparatively for changes over time. Design research, in general, provides the structure for implementing work in practice and iterative refinement of design towards achieving research goals (Collins, Joseph, & Bielaczyc, 2004). Using an integrated mixed method design that cycles through qualitative and quantitative analyses as the project progresses gives researchers the opportunity to observe trends and patterns in qualitative data and quantitative frequencies as each round of analysis informs additional insights (Gliner et al., 2009). This integrated use also provides a structure for evaluating project fidelity on an ongoing basis through a range of data points and findings from analyses that are consistent across the project. The ability to revise procedures for data collection, systematic analysis, and presenting work does not change the data being collected, but gives researchers the opportunity to optimize procedural aspects throughout the process.

Research as storytelling refers to the narrative traditions that underpin the use of video methods to analyze in a chronological context and present findings in a story-like timeline. These traditions are evident in ethnographic research methods that journal lived experiences through a period of time and in portraiture methods that use both aesthetic and scientific language to construct a portrait (Barone & Eisner, 2012; Heider, 2009; Lawrence-Lightfoot, 2005; Lenette, Cox & Brough, 2013).

In existing research, there is also attention given to the use of film and video documentaries as sources of data (e.g., Chattoo & Das, 2014; Warmington, van Gorp & Grosvenor, 2011); however, our discussion here focuses on using media to capture information and communicate resulting narratives for research purposes. In our work, we promote a perspective on emergent storytelling that develops from data collection and analysis, allowing the research to drive the narrative and situating it in the context in which the data were collected. We rely on theories and practices of research and storytelling that leverage the affordances of participant observation and interview for the construction of narratives (Bailey & Tilley, 2002; de Carteret, 2008; de Jager, Fogarty & Tewson, 2017; Gallagher, 2011; Hancox, 2017; LeBaron, Jarzabkowski, Pratt & Fetzer, 2017; Lewis, 2011; Meadows, 2003).

The type of storytelling used with research is distinctly different from methods used with documentaries, primarily in that, while documentary filmmakers can edit their film to a predetermined narrative, research storytelling requires that the data be analyzed and reported within a different set of ethical standards (Dahlstrom, 2014; Koehler, 2012; Nichols, 2010). Although documentary and research storytelling use a similar audiovisual medium, creating a story for research purposes is ethically bounded by expectations in social science communities for being trustworthy in reporting and analyzing data, especially related to human subjects. Given that researchers using video may not know what footage will be useful for future storytelling, they may need to design their data collection methods to allow for an abundance of video data, which can impact analysis timelines as well. We believe it important to note these differences in the construction of related types of stories to make overt the essential need for research to consider not only analysis but also creation of the reporting narrative when designing and implementing data collection methods.

This study uses existing literature as a frame for understanding and implementing video research methods, then employs this frame as perspective on our own work, illuminating issues related to the use of video in research. In particular, we focus on using video research storytelling techniques to design, implement, and communicate the findings of a research study, providing examples from Dr. Erica Walker’s professional experience as a documentary filmmaker as well as evidence from current and former academic studies. The intent is to improve understanding of the theoretical foundations and practical applications for video research methods and better define how those apply to the construction of story-based video output of research findings.

The study began with a systematic analysis of theories and practices, using interpretive analytic methods, with thematic coding of evidence for conceptual and operational aspects of designing and implementing video research methods. From this information, a frame was constructed that includes foundational aspects of using digital video in research as well as the practical aspects of using video to create narratives with the intent of presenting research findings. We used this frame to interpret aspects of our own video research, identifying evidence that exemplifies aspects of the frame we used.

A primary goal for the analysis of existing literature was to identify evidence that illuminates the concepts underpinning how, when, and why video research methods are useful for publishing and disseminating transferable knowledge from research. This emphasis on communicating results in both theoretical and practical ways highlighted areas within the analysis for potential contextual similarities between our work and other projects. A central reason for interpreting findings and connecting them with evidence was the need to provide examples that could serve as potentially transferable findings for others using video with their research. Given the need for a fertile environment (Zhao & Frank, 2003) and attention to contextual differences to avoid lethal mutations (Brown & Campione, 1996), we understand that these examples may not work for every situation, but the intent is to provide clear evidence of how video research methods can leverage storytelling to report research findings in a way that is consumable by a broader audience.

In the following section, we present findings from the review of research and practice, along with evidence from our work with video research, connecting the conceptual and operational frame to examples and teasing out aspects from existing literature.

Results and findings

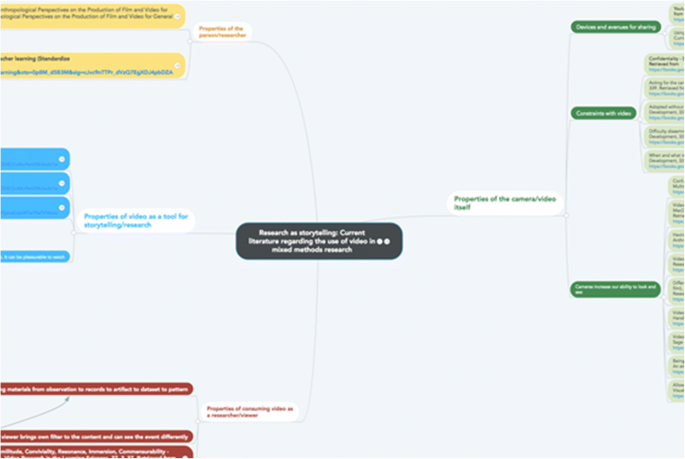

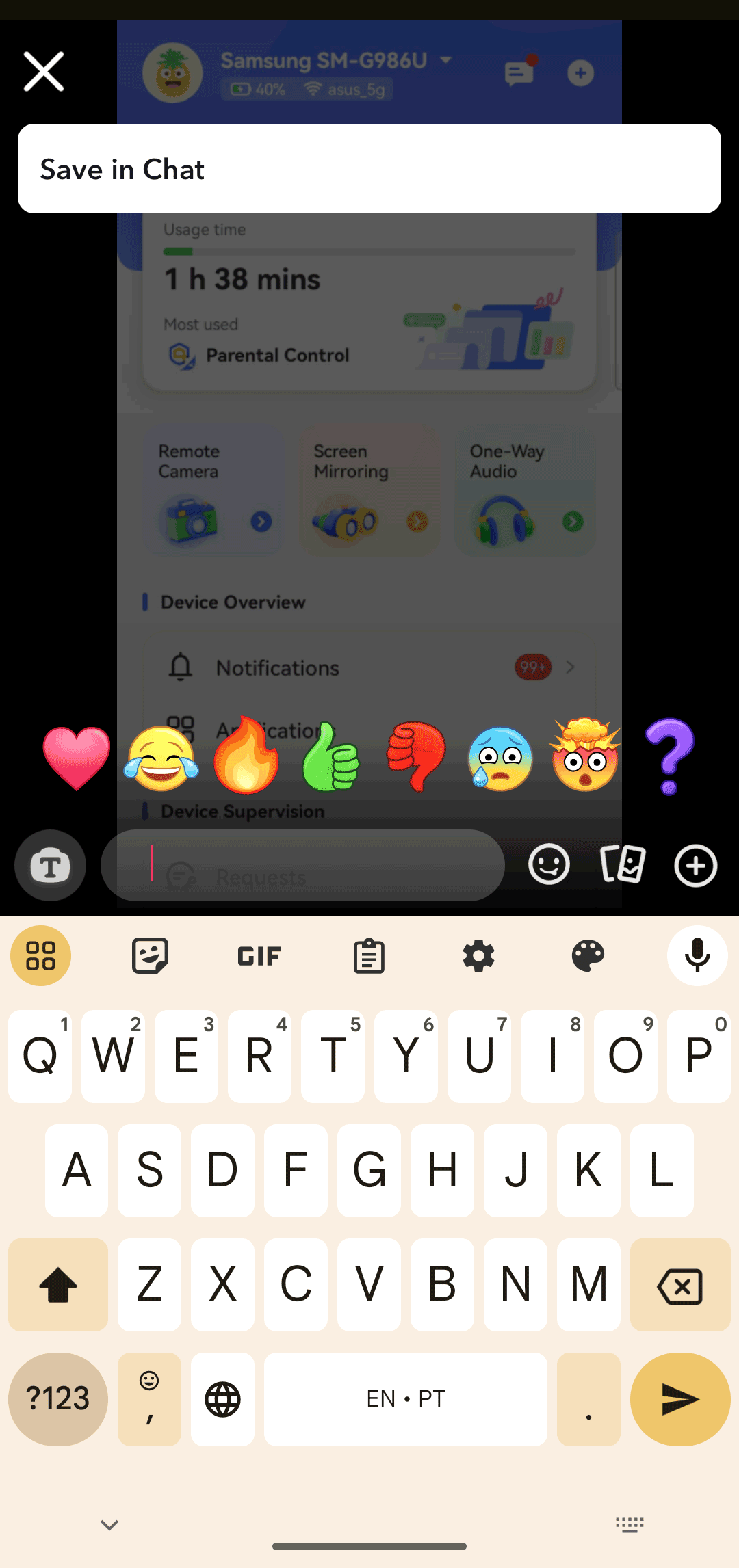

To categorize convergent and divergent themes in the current literature on the use of video in research, we developed a Mind Map (see Fig. 1). Although this is far from a complete meta-analysis on video research (notably absent is a comprehensive discussion of ethical concerns regarding video research), the Mind Map focuses on four main properties with regard to video: video as a tool for storytelling/research, properties of the camera/video itself, how video impacts the person/researcher, and methods by which the researcher/viewer consumes video.

Fig. 1. Mind Map of current literature regarding the use of video in mixed methods research. Link to the fully interactive Mind Map: http://clemsongc.com/ebwalker/mindmap/

Video, when used as a tool for research, can document and share ethnographic, epistemic, and storytelling data with participants and the research team (R. Goldman, 2007; Heath et al., 2010; Miller & Zhou, 2007; Tobin & Hsueh, 2007). Much of the research in this area focuses on the properties (both positive and negative) inherent in the camera itself, such as how video footage can increase the ability to see and experience the world but can also act as a selective lens that separates an event from its natural context (S. Goldman & McDermott, 2007; Jewitt, n.d.; Knoblauch & Tuma, 2011; MacDougall, 2011; Miller & Zhou, 2007; Roth, 2007; Sossi, 2013).

Some research speaks to the role of the video-researcher within the context of the study, likening a video researcher to a participant-observer in ethnographic research (Derry, 2007; Roth, 2007; Sossi, 2013). The final category of research within the Mind Map focuses on the process of converting the video from an observation to records to artifact to dataset to pattern (Barron, 2007; R. Goldman, 2007; Knoblauch & Tuma, 2011; Newbury, 2011). Through this process of conversion, the video footage itself becomes an integral part of both the data and findings.