
Data-driven hypothesis generation in clinical research: what we learned from a human subject study



Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve it goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first provide a literature review on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, research on scientific thinking has made excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals a lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the average time participants need to generate a hypothesis and reduce the number of cognitive events needed to generate each hypothesis. As a counterpoint, this exploration also indicates that hypotheses generated with VIADS received significantly lower quality ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research.
This study provides supporting evidence to conduct a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives.


J Korean Med Sci, v.36(50); 2021 Dec 27

Formulating Hypotheses for Different Study Designs

Durga Prasanna Misra

1 Department of Clinical Immunology and Rheumatology, Sanjay Gandhi Postgraduate Institute of Medical Sciences, Lucknow, India.

Armen Yuri Gasparyan

2 Departments of Rheumatology and Research and Development, Dudley Group NHS Foundation Trust (Teaching Trust of the University of Birmingham, UK), Russells Hall Hospital, Dudley, UK.

Olena Zimba

3 Department of Internal Medicine #2, Danylo Halytsky Lviv National Medical University, Lviv, Ukraine.

Marlen Yessirkepov

4 Department of Biology and Biochemistry, South Kazakhstan Medical Academy, Shymkent, Kazakhstan.

Vikas Agarwal

George D. Kitas

5 Centre for Epidemiology versus Arthritis, University of Manchester, Manchester, UK.

Generating a testable working hypothesis is the first step towards conducting original research. Such research may prove or disprove the proposed hypothesis. Case reports, case series, online surveys and other observational studies, clinical trials, and narrative reviews help to generate hypotheses. Observational and interventional studies help to test hypotheses. A good hypothesis is usually based on previous evidence-based reports. Hypotheses without evidence-based justification and a priori ideas are not received favourably by the scientific community. Original research to test a hypothesis should be carefully planned to ensure appropriate methodology and adequate statistical power. While hypotheses can challenge conventional thinking and may be controversial, they should not be destructive. A hypothesis should be tested by ethically sound experiments with meaningful ethical and clinical implications. The coronavirus disease 2019 pandemic has brought into sharp focus numerous hypotheses, some of which were proven (e.g. effectiveness of corticosteroids in those with hypoxia) while others were disproven (e.g. ineffectiveness of hydroxychloroquine and ivermectin).


DEFINING WORKING AND STANDALONE SCIENTIFIC HYPOTHESES

Science is the systematized description of natural truths and facts. Routine observations of existing life phenomena lead to creative thinking and the generation of ideas about the mechanisms of such phenomena and related human interventions. Such ideas presented in a structured format can be viewed as hypotheses. After generating a hypothesis, it is necessary to test it to prove its validity. Thus, a hypothesis can be defined as a proposed mechanism of a naturally occurring event or a proposed outcome of an intervention. 1 , 2

Hypothesis testing requires choosing the most appropriate methodology and adequately powering the study statistically so that the hypothesis can be “proved” or “disproved” within predetermined and widely accepted levels of certainty. This entails sample size calculation that often takes into account previously published observations and pilot studies. 2 , 3 In the era of digitization, hypothesis generation and testing may benefit from the availability of numerous platforms for data dissemination, social networking, and expert validation. Related expert evaluations may reveal strengths and limitations of proposed ideas at early stages of post-publication promotion, preventing the implementation of unsupported controversial points. 4
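As a rough illustration of the sample size calculation mentioned above, the following Python sketch applies the standard normal-approximation formula for comparing two independent proportions with equal group sizes. The function name, the response rates, and the default significance level and power are assumptions chosen for illustration; they are not taken from the article.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_two_proportions(p1: float, p2: float,
                                alpha: float = 0.05,
                                power: float = 0.80) -> int:
    """Participants needed per group to detect p1 vs p2 with a two-sided test.

    Normal-approximation formula for two independent proportions
    with equal group sizes.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2                          # average proportion under H0
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p1 - p2) ** 2)


# Hypothetical pilot figures: 30% response on standard care,
# 45% response expected with the new treatment.
n_per_group = sample_size_two_proportions(0.30, 0.45)
print(f"Participants needed per group: {n_per_group}")
```

A smaller expected difference between the groups drives the required sample size up sharply, which is one reason pilot data and previously published observations matter when planning a study.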

Thus, hypothesis generation is an important initial step in the research workflow, reflecting accumulating evidence and experts' stance. In this article, we overview the genesis and importance of scientific hypotheses and their relevance in the era of the coronavirus disease 2019 (COVID-19) pandemic.

DO WE NEED HYPOTHESES FOR ALL STUDY DESIGNS?

Broadly, research can be categorized as primary or secondary. In the context of medicine, primary research may include real-life observations of disease presentations and outcomes. Single case descriptions, which often lead to new ideas and hypotheses, serve as important starting points or justifications for case series and cohort studies. The importance of case descriptions is particularly evident in the context of the COVID-19 pandemic when unique, educational case reports have heralded a new era in clinical medicine. 5

Case series serve a similar purpose to single case reports, but are based on a slightly larger quantum of information. Observational studies, including online surveys, describe the existing phenomena at a larger scale, often involving various control groups. Observational studies include variable-scale epidemiological investigations at different time points. Interventional studies detail the results of therapeutic interventions.

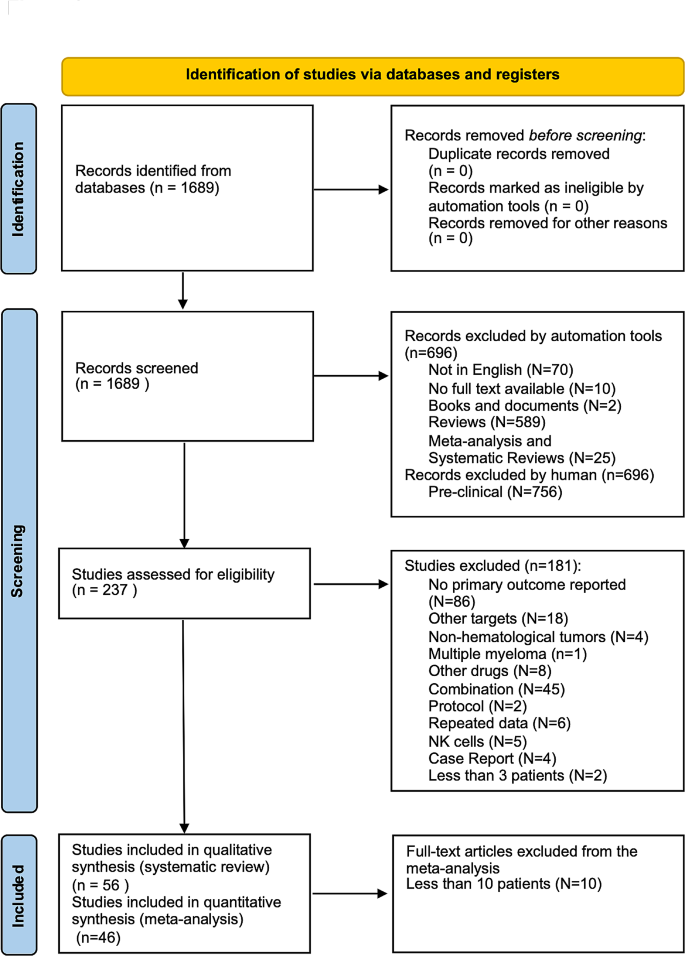

Secondary research is based on already published literature and does not directly involve human or animal subjects. Review articles are generated by secondary research. These could be systematic reviews which follow methods akin to primary research but with the unit of study being published papers rather than humans or animals. Systematic reviews have a rigid structure with a mandatory search strategy encompassing multiple databases, systematic screening of search results against pre-defined inclusion and exclusion criteria, critical appraisal of study quality and an optional component of collating results across studies quantitatively to derive summary estimates (meta-analysis). 6 Narrative reviews, on the other hand, have a more flexible structure. Systematic literature searches to minimise bias in selection of articles are highly recommended but not mandatory. 7 Narrative reviews are influenced by the authors' viewpoint who may preferentially analyse selected sets of articles. 8
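To make the quantitative pooling step of a meta-analysis more concrete, here is a minimal Python sketch of a fixed-effect, inverse-variance summary estimate. The study values are invented for illustration, and the fixed-effect model is only one of several pooling approaches; a real meta-analysis would also assess heterogeneity and might use a random-effects model instead.

```python
from math import sqrt


def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance (fixed-effect) pooled estimate and its standard error."""
    weights = [1 / se ** 2 for se in std_errors]  # more precise studies weigh more
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical log odds ratios and standard errors from three studies.
log_odds_ratios = [-0.40, -0.10, -0.25]
std_errors = [0.20, 0.10, 0.15]

pooled, pooled_se = pool_fixed_effect(log_odds_ratios, std_errors)
ci_low, ci_high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"Pooled log odds ratio: {pooled:.3f} (95% CI {ci_low:.3f} to {ci_high:.3f})")
```

The pooled standard error is smaller than that of any single study, which is the practical benefit of collating results across studies.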

In relation to primary research, case studies and case series are generally not driven by a working hypothesis. Rather, they serve as a basis to generate a hypothesis. Observational or interventional studies should have a hypothesis for choosing research design and sample size. The results of observational and interventional studies further lead to the generation of new hypotheses, testing of which forms the basis of future studies. Review articles, on the other hand, may not be hypothesis-driven, but form fertile ground to generate future hypotheses for evaluation. Fig. 1 summarizes which types of studies are hypothesis-driven and which lead on to hypothesis generation.

STANDARDS OF WORKING AND SCIENTIFIC HYPOTHESES

A review of the published literature did not enable the identification of clearly defined standards for working and scientific hypotheses. It is essential to distinguish influential versus not influential hypotheses, evidence-based hypotheses versus a priori statements and ideas, ethical versus unethical, or potentially harmful ideas. The following points are proposed for consideration while generating working and scientific hypotheses. 1 , 2 Table 1 summarizes these points.

| Points to be considered while evaluating the validity of hypotheses |
|---|
| Backed by evidence-based data |
| Testable by relevant study designs |
| Supported by preliminary (pilot) studies |
| Testable by ethical studies |
| Maintaining a balance between scientific temper and controversy |

Evidence-based data

A scientific hypothesis should have a sound basis on previously published literature as well as the scientist's observations. Randomly generated (a priori) hypotheses are unlikely to be proven. A thorough literature search should form the basis of a hypothesis based on published evidence. 7

Testable by relevant study designs

Unless a scientific hypothesis can be tested, it can neither be proven nor be disproven. Therefore, a scientific hypothesis should be amenable to testing with the available technologies and the present understanding of science.

Supported by pilot studies

If a hypothesis is based purely on a novel observation by the scientist in question, it should be grounded on some preliminary studies to support it. For example, if a drug that targets a specific cell population is hypothesized to be useful in a particular disease setting, then there must be some preliminary evidence that the specific cell population plays a role in driving that disease process.

Testable by ethical studies

The hypothesis should be testable by experiments that are ethically acceptable. 9 For example, a hypothesis that parachutes reduce mortality from falls from an airplane cannot be tested using a randomized controlled trial. 10 This is because it is obvious that all those jumping from a flying plane without a parachute would likely die. Similarly, the hypothesis that smoking tobacco causes lung cancer cannot be tested by a clinical trial that makes people take up smoking (since there is considerable evidence for the health hazards associated with smoking). Instead, long-term observational studies comparing outcomes in those who smoke and those who do not, as was performed in the landmark epidemiological case control study by Doll and Hill, 11 are more ethical and practical.

Balance between scientific temper and controversy

Novel findings, including novel hypotheses, particularly those that challenge established norms, are bound to face resistance to their wider acceptance. Such resistance is inevitable until such findings are proven with appropriate scientific rigor. However, hypotheses that generate controversy are generally unwelcome. For example, at the time the pandemic of human immunodeficiency virus (HIV) and AIDS was taking root, there were numerous deniers who refused to believe that HIV caused AIDS. 12 , 13 Similarly, at a time when climate change is causing catastrophic changes to weather patterns worldwide, denial that climate change is occurring and consequent attempts to block action on climate change are certainly unwelcome. 14 The denialism and misinformation during the COVID-19 pandemic, including unfortunate examples of vaccine hesitancy, are more recent examples of controversial hypotheses not backed by science. 15 , 16 An example of a controversial hypothesis that was a revolutionary scientific breakthrough was the hypothesis put forth by Warren and Marshall that Helicobacter pylori causes peptic ulcers. Initially, the hypothesis that a microorganism could cause gastritis and gastric ulcers faced immense resistance. When the scientists that proposed the hypothesis themselves ingested H. pylori to induce gastritis in themselves, only then could they convince the wider world about their hypothesis. Such was the impact of the hypothesis that Barry Marshall and Robin Warren were awarded the Nobel Prize in Physiology or Medicine in 2005 for this discovery. 17 , 18

DISTINGUISHING THE MOST INFLUENTIAL HYPOTHESES

Influential hypotheses are those that have stood the test of time. An archetype of an influential hypothesis is that proposed by Edward Jenner in the eighteenth century that cowpox infection protects against smallpox. While this observation had been reported for nearly a century before this time, it had not been suitably tested and publicised until Jenner conducted his experiments on a young boy by demonstrating protection against smallpox after inoculation with cowpox. 19 These experiments were the basis for widespread smallpox immunization strategies worldwide in the 20th century which resulted in the elimination of smallpox as a human disease today. 20

Other influential hypotheses are those which have been read and cited widely. An example of this is the hygiene hypothesis proposing an inverse relationship between infections in early life and allergies or autoimmunity in adulthood. An analysis reported that this hypothesis had been cited more than 3,000 times on Scopus. 1

LESSONS LEARNED FROM HYPOTHESES AMIDST THE COVID-19 PANDEMIC

The COVID-19 pandemic devastated the world like no other in recent memory. During this period, various hypotheses emerged, understandably so considering the public health emergency situation with innumerable deaths and suffering for humanity. Within weeks of the first reports of COVID-19, aberrant immune system activation was identified as a key driver of organ dysfunction and mortality in this disease. 21 Consequently, numerous drugs that suppress the immune system or abrogate the activation of the immune system were hypothesized to have a role in COVID-19. 22 One of the earliest drugs hypothesized to have a benefit was hydroxychloroquine. Hydroxychloroquine was proposed to interfere with Toll-like receptor activation and consequently ameliorate the aberrant immune system activation leading to pathology in COVID-19. 22 The drug was also hypothesized to have a prophylactic role in preventing infection or disease severity in COVID-19. It was also touted as a wonder drug for the disease by many prominent international figures. However, later well-designed randomized controlled trials failed to demonstrate any benefit of hydroxychloroquine in COVID-19. 23 , 24 , 25 , 26 Subsequently, azithromycin 27 , 28 and ivermectin 29 were hypothesized as potential therapies for COVID-19, but were not supported by evidence from randomized controlled trials. The role of vitamin D in preventing disease severity was also proposed, but has not yet been definitively proven. 30 , 31 On the other hand, randomized controlled trials provided evidence supporting dexamethasone 32 and interleukin-6 pathway blockade with tocilizumab as effective therapies for COVID-19 in specific situations such as at the onset of hypoxia. 33 , 34 Clues towards the apparent effectiveness of various drugs against severe acute respiratory syndrome coronavirus 2 in vitro but their ineffectiveness in vivo have recently been identified. Many of these drugs are weak, lipophilic bases and some others induce phospholipidosis which results in apparent in vitro effectiveness due to non-specific off-target effects that are not replicated inside living systems. 35 , 36

Another hypothesis proposed was the association of the routine policy of vaccination with Bacillus Calmette-Guerin (BCG) with lower deaths due to COVID-19. This hypothesis emerged in the middle of 2020 when COVID-19 was still taking root in many parts of the world. 37 , 38 Subsequently, many countries which had lower deaths at that time point went on to have higher mortality, comparable to other areas of the world. Furthermore, the hypothesis that BCG vaccination reduced COVID-19 mortality was a classic example of ecological fallacy. Associations between population level events (ecological studies; in this case, BCG vaccination and COVID-19 mortality) cannot be directly extrapolated to the individual level. Furthermore, such associations cannot per se be attributed as causal in nature, and can only serve to generate hypotheses that need to be tested at the individual level. 39

IS TRADITIONAL PEER REVIEW EFFICIENT FOR EVALUATION OF WORKING AND SCIENTIFIC HYPOTHESES?

Traditionally, publication after peer review has been considered the gold standard before any new idea finds acceptability amongst the scientific community. Getting a work (including a working or scientific hypothesis) reviewed by experts in the field before experiments are conducted to prove or disprove it helps to refine the idea further as well as improve the experiments planned to test the hypothesis. 40 A route towards this has been the emergence of journals dedicated to publishing hypotheses such as the Central Asian Journal of Medical Hypotheses and Ethics. 41 Another means of publishing hypotheses is through registered research protocols detailing the background, hypothesis, and methodology of a particular study. If such protocols are published after peer review, then the journal commits to publishing the completed study irrespective of whether the study hypothesis is proven or disproven. 42 In the post-pandemic world, online research methods such as online surveys powered via social media channels such as Twitter and Instagram might serve as critical tools to generate as well as to preliminarily test the appropriateness of hypotheses for further evaluation. 43 , 44

Some radical hypotheses might be difficult to publish after traditional peer review. These hypotheses might only be acceptable to the scientific community after they are tested in research studies. Preprints might be a way to disseminate such controversial and ground-breaking hypotheses. 45 However, scientists might prefer to keep their hypotheses confidential for fear of plagiarism of ideas, avoiding online posting and publishing until they have tested the hypotheses.

SUGGESTIONS ON GENERATING AND PUBLISHING HYPOTHESES

Publication of hypotheses is important; however, a balance is required between scientific temper and controversy. Journal editors and reviewers might keep in mind these specific points, summarized in Table 2 and detailed hereafter, while judging the merit of hypotheses for publication. Keeping in mind the ethical principle of primum non nocere, a hypothesis should be published only if it is testable in a manner that is ethically appropriate. 46 Such hypotheses should be grounded in reality and lend themselves to further testing to either prove or disprove them. It must be considered that subsequent experiments to prove or disprove a hypothesis have an equal chance of failing or succeeding, akin to tossing a coin. A pre-conceived belief that a hypothesis is unlikely to be proven correct should not form the basis of rejection of such a hypothesis for publication. In this context, hypotheses generated after a thorough literature search to identify knowledge gaps or based on concrete clinical observations on a considerable number of patients (as opposed to random observations on a few patients) are more likely to be acceptable for publication by peer-reviewed journals. Also, hypotheses should be considered for publication or rejection based on their implications for science at large rather than whether the subsequent experiments to test them end up with results in favour of or against the original hypothesis.

| Points to be considered before a hypothesis is acceptable for publication |
|---|
| Experiments required to test hypotheses should be ethically acceptable as per the World Medical Association declaration on ethics and related statements |
| Pilot studies support hypotheses |
| Single clinical observations and expert opinion surveys may support hypotheses |
| Testing hypotheses requires robust methodology and statistical power |
| Hypotheses that challenge established views and concepts require proper evidence-based justification |

Hypotheses form an important part of the scientific literature. The COVID-19 pandemic has reiterated the importance and relevance of hypotheses for dealing with public health emergencies and highlighted the need for evidence-based and ethical hypotheses. A good hypothesis is testable in a relevant study design, backed by preliminary evidence, and has positive ethical and clinical implications. General medical journals might consider publishing hypotheses as a specific article type to enable more rapid advancement of science.

Disclosure: The authors have no potential conflicts of interest to disclose.

Author Contributions:

- Data curation: Gasparyan AY, Misra DP, Zimba O, Yessirkepov M, Agarwal V, Kitas GD.

January 13, 2024

Demystifying Hypothesis Generation: A Guide to AI-Driven Insights

This article discusses the process to follow when generating hypotheses and how an AI tool such as Akaike's BYOB can help you complete that process faster and better.

What is Hypothesis Generation?

Hypothesis generation involves making informed guesses about various aspects of a business, market, or problem that need further exploration and testing. It's a crucial step while applying the scientific method to business analysis and decision-making.

Here is an example from a popular B-school marketing case study:

A bicycle manufacturer noticed that their sales had dropped significantly in 2002 compared to the previous year. The team investigating the reasons for this had many hypotheses. One of them was: “many cycling enthusiasts have switched to walking with their iPods plugged in.” The Apple iPod was launched in late 2001 and was an immediate hit among young consumers. Data collected manually by the team seemed to show that the geographies around Apple stores had indeed shown a sales decline.

Traditionally, hypothesis generation is time-consuming and labour-intensive. However, the advent of Large Language Models (LLMs) and Generative AI (GenAI) tools has transformed the practice altogether. These AI tools can rapidly process extensive datasets, quickly identifying patterns, correlations, and insights that might otherwise have escaped human eyes, thus streamlining the stages of hypothesis generation.

These tools have also revolutionised experimentation by optimising test designs, reducing resource-intensive processes, and delivering faster results. LLMs' role in hypothesis generation goes beyond mere assistance, bringing innovation and easy, data-driven decision-making to businesses.

Hypotheses come in various types, such as simple, complex, null, alternative, logical, statistical, or empirical. These categories are defined based on the relationships between the variables involved and the type of evidence required for testing them. In this article, we aim to demystify hypothesis generation. We will explore the role of LLMs in this process and outline the general steps involved, highlighting why it is a valuable tool in your arsenal.

Understanding Hypothesis Generation

A hypothesis is born from a set of underlying assumptions and a prediction of how those assumptions are anticipated to unfold in a given context. Essentially, it's an educated, articulated guess that forms the basis for action and outcome assessment.

A hypothesis is a declarative statement that has not yet been proven true. Based on past scholarship, we could sum it up as the following:

- A definite statement, not a question

- Based on observations and knowledge

- Testable and can be proven wrong

- Predicts the anticipated results clearly

- Contains a dependent and an independent variable where the dependent variable is the phenomenon being explained and the independent variable does the explaining

In a business setting, hypothesis generation becomes essential when people are made to explain their assumptions. This clarity from hypothesis to expected outcome is crucial, as it allows people to acknowledge a failed hypothesis if it does not provide the intended result. Promoting such a culture of effective hypothesising can lead to more thoughtful actions and a deeper understanding of outcomes. Failures become just another step on the way to success, and success brings more success.

Hypothesis generation is a continuous process where you start with an educated guess and refine it as you gather more information. You form a hypothesis based on what you know or observe.

Say you're a pen maker whose sales are down. You look at what you know:

- I can see that pen sales for my brand are down in May and June.

- I also know that schools are closed in May and June and that schoolchildren use a lot of pens.

- I hypothesise that my sales are down because school children are not using pens in May and June, and thus not buying newer ones.

The next step is to collect and analyse data to test this hypothesis, like tracking sales before and after school vacations. As you gather more data and insights, your hypothesis may evolve. You might discover that your hypothesis only holds in certain markets but not others, leading to a more refined hypothesis.
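A minimal Python sketch of that first check might look like the following. The markets, months, and sales figures are invented for illustration, and the helper `vacation_dip` is a hypothetical name; the point is only to show how the same hypothesis can hold in one market and not in another.

```python
from statistics import mean

# Hypothetical monthly unit sales per market; the figures are made up.
monthly_sales = {
    "Market A": {"Mar": 980, "Apr": 1010, "May": 610, "Jun": 590, "Jul": 1000},
    "Market B": {"Mar": 720, "Apr": 700, "May": 690, "Jun": 710, "Jul": 730},
}
VACATION_MONTHS = {"May", "Jun"}  # assumption from the example: schools are closed


def vacation_dip(sales_by_month):
    """Percentage drop in average sales in vacation months versus other months."""
    vacation = mean(v for m, v in sales_by_month.items() if m in VACATION_MONTHS)
    term = mean(v for m, v in sales_by_month.items() if m not in VACATION_MONTHS)
    return 100 * (term - vacation) / term


dips = {market: vacation_dip(sales) for market, sales in monthly_sales.items()}
for market, dip in dips.items():
    print(f"{market}: vacation-month sales are {dip:.1f}% below term-time sales")
```

In this made-up data, the school-vacation explanation looks plausible for Market A but not for Market B, which is the kind of result that would prompt a more refined hypothesis.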

Once your hypothesis is proven correct, there are many actions you may take - (a) reduce supply in these months (b) reduce the price so that sales pick up (c) release a limited supply of novelty pens, and so on.

Once you decide on your action, you will further monitor the data to see if your actions are working. This iterative cycle of formulating, testing, and refining hypotheses - and using insights in decision-making - is vital in making impactful decisions and solving complex problems in various fields, from business to scientific research.

How do Analysts generate Hypotheses? Why is it iterative?

A typical human working towards a hypothesis would start with:

1. Picking the Default Action

2. Determining the Alternative Action

3. Figuring out the Null Hypothesis (H0)

4. Inverting the Null Hypothesis to get the Alternate Hypothesis (H1)

5. Hypothesis Testing

The default action is what you would naturally do, regardless of any hypothesis or in a case where you get no further information. The alternative action is the opposite of your default action.

The null hypothesis, or H0, is what brings about your default action. The alternative hypothesis (H1) is essentially the negation of H0.

For example, suppose you are tasked with analysing highway tollgate data (timestamp, vehicle number, toll amount) to see if a rise in tollgate rates will increase revenue or cause a volume drop. Following the above steps, we can determine:

| Step | Statement |
|---|---|
| Default Action | “I want to increase toll rates by 10%.” |
| Alternative Action | “I will keep my rates constant.” |
| H0 | “A 10% increase in the toll rate will not cause a significant dip in traffic (say 3%).” |
| H1 | “A 10% increase in the toll rate will cause a dip in traffic of greater than 3%.” |

Now, we can start looking at past data of tollgate traffic in and around rate increases for different tollgates. Some data might be irrelevant. For example, some tollgates might be much cheaper so customers might not have cared about an increase. Or, some tollgates are next to a large city, and customers have no choice but to pay.

Ultimately, you are looking for the level of significance between traffic and rates for comparable tollgates. Significance is often expressed as a p-value, or probability value. The p-value is a way to measure how surprising your test results are, assuming that your H0 holds true.

The lower the p-value, the more convincing your data is to change your default action.

Usually, a p-value that is less than 0.05 is considered to be statistically significant, meaning you should reject your null hypothesis and reconsider your default action. In our example, a low p-value would suggest that a 10% increase in the toll rate causes a significant dip in traffic (>3%). Thus, it is better to keep our rates as they are if we want to maintain revenue.
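The tollgate test can be sketched in a few lines of Python. The traffic figures below are hypothetical, and the function uses a simple normal approximation to keep the example dependency-free; with so few tollgates, a t-test from a statistics library would be the more careful choice.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

# Hypothetical traffic dips (%) at comparable tollgates after a 10% rate increase.
traffic_dips = [4.1, 5.3, 2.8, 6.0, 4.7, 3.9, 5.5, 4.4, 3.2, 5.1]
THRESHOLD = 3.0  # H0: the average dip is at most 3%; H1: it is greater than 3%


def one_sided_p_value(sample, threshold):
    """Approximate p-value for H1: mean(sample) > threshold.

    Normal approximation to the sampling distribution of the mean;
    a t-distribution is more appropriate for small samples.
    """
    standard_error = stdev(sample) / sqrt(len(sample))
    z = (mean(sample) - threshold) / standard_error
    return 1 - NormalDist().cdf(z)


p_value = one_sided_p_value(traffic_dips, THRESHOLD)
decision = "reject H0" if p_value < 0.05 else "fail to reject H0"
print(f"p-value = {p_value:.6f} -> {decision}")
```

With this invented data the average dip is well above 3%, so the p-value is small and H0 is rejected, which argues against going ahead with the rate increase.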

In other examples, where one has to explore the significance of different variables, we might find that some variables are not correlated at all. In general, hypothesis generation is an iterative process - you keep looking for data and keep considering whether that data convinces you to change your default action.

Internal and External Data

Hypothesis generation feeds on data. Data can be internal or external. In businesses, internal data is produced by company owned systems (areas such as operations, maintenance, personnel, finance, etc). External data comes from outside the company (customer data, competitor data, and so on).

Let’s consider a real-life hypothesis generated from internal data:

Multinational company Johnson & Johnson was looking to enhance employee performance and retention. Initially, they favoured experienced industry candidates for recruitment, assuming they'd stay longer and contribute faster. However, HR and the people analytics team at J&J hypothesised that recent college graduates outlast experienced hires and perform equally well. They compiled data on 47,000 employees to test the hypothesis and, based on it, Johnson & Johnson increased hires of new graduates by 20%, leading to reduced turnover with consistent performance.

For an analyst (or an AI assistant), external data is often hard to source - it may not be available as organised datasets (or reports), or it may be expensive to acquire. Teams might have to collect new data from surveys, questionnaires, customer feedback and more.

Further, there is the problem of context. Suppose an analyst is looking at the dynamic pricing of hotels offered on his company’s platform in a particular geography. Suppose further that the analyst has no context of the geography, the reasons people visit the locality, or of local alternatives; then the analyst will have to learn additional context to start making hypotheses to test.

Internal data is, by definition, already inside the company, so access is rarely the obstacle. Volume is: internal systems can add up to staggering amounts of data.

Looking Back, and Looking Forward

Data analysts often have to generate hypotheses retrospectively, where they formulate and evaluate H0 and H1 based on past data. For the sake of this article, let's call it retrospective hypothesis generation.

Alternatively, a prospective approach to hypothesis generation could be one where hypotheses are formulated before data collection or before a particular event or change is implemented.

For example:

A pen seller has a hypothesis that during the lean periods of summer, when schools are closed, a Buy One Get One (BOGO) campaign will lead to a 100% sales recovery because customers will buy pens in advance. He then collects feedback from customers in the form of a survey and also implements a BOGO campaign in a single territory to see whether his hypothesis is correct, or not.

The HR head of a multi-office employer realises that some of the company’s offices have been providing snacks at 4:30 PM in the common area, and the rest have not. He has a hunch that these offices have higher productivity. The leader asks the company’s data science team to look at employee productivity data and the employee location data. “Am I correct, and to what extent?”, he asks.

These examples also reflect another nuance, in which the data is collected differently:

- Observational: Observational testing happens when researchers observe a sample population and collect data as it occurs without intervention. The data for the snacks vs productivity hypothesis was observational.

- Experimental: In experimental testing, the sample is divided into multiple groups, with one control group. The test for the non-control groups will be varied to determine how the data collected differs from that of the control group. The data collected by the pen seller in the single territory experiment was experimental.

Such data-backed insights are a valuable resource for businesses because they allow for more informed decision-making, leading to the company's overall growth. Taking a data-driven approach, from forming a hypothesis, to updating and validating it across iterations, to acting on your insights, reduces guesswork, minimises risks, and guides businesses towards strategies that are more likely to succeed.

How can GenAI help in Hypothesis Generation?

Of course, hypothesis generation is not always straightforward. Understanding the earlier examples is easy for us because we're already inundated with context. But, in a situation where an analyst has no domain knowledge, suddenly, hypothesis generation becomes a tedious and challenging process.

AI, particularly high-capacity, robust tools such as LLMs, has radically changed how we process and analyse large volumes of data. With its help, we can sift through massive datasets with precision and speed, regardless of context, whether it's customer behaviour, financial trends, medical records, or more. Generative AI models, including LLMs, are trained on diverse text data, enabling them to comprehend and process various topics.

Now, imagine an AI assistant helping you with hypothesis generation. LLMs are not born with context. Instead, they are trained upon vast amounts of data, enabling them to develop context in a completely unfamiliar environment. This skill is instrumental when adopting a more exploratory approach to hypothesis generation. For example, the HR leader from earlier could simply ask an LLM tool: “Can you look at this employee productivity data and find cohorts of high-productivity and see if they correlate to any other employee data like location, pedigree, years of service, marital status, etc?”

For an LLM-based tool to be useful, it requires a few things:

- Domain Knowledge: A human could take months to years to acclimatise to a particular field fully, but LLMs, when fed extensive information and utilising Natural Language Processing (NLP), can familiarise themselves in a very short time.

- Explainability: The tool must be able to explain its reasoning and output so that it ceases to be a "black box".

- Customisation: For consistent improvement, contextual AI must allow tweaks, letting users change its behaviour to meet their expectations. Human intervention and validation are necessary steps in adopting AI tools.

NLP allows these tools to discern context within textual data, meaning they can read, categorise, and analyse data at great speed. LLMs, thus, can quickly develop contextual understanding and generate human-like text while processing vast amounts of unstructured data, making it easier for businesses and researchers to organise and utilise data effectively.

LLMs have the potential to become indispensable tools for businesses. The future rests on AI tools that harness the powers of LLMs and NLP to deliver actionable insights, mitigate risks, inform decision-making, predict future trends, and drive business transformation across various sectors.

Together, these technologies empower data analysts to unravel hidden insights within their data. For our pen maker, for example, an AI tool could aid data analytics. It can look through historical data to track when sales peaked or go through sales data to identify the pens that sold the most. It can refine a hypothesis across iterations, just as a human analyst would. It can even be used to brainstorm other hypotheses.

Consider the situation where you ask the LLM, "Where do I sell the most pens?". It will go through all of the data you have made available - places where you sell pens, the number of pens you sold - to return the answer. If we were to do this on our own, even if we were particularly meticulous about keeping records, it would take us at least five to ten minutes, and that is only if we know how to query a database and extract the needed information. If we don't, there's the added effort required to find and train such a person. An AI assistant, on the other hand, could share the answer with us in mere seconds. Its finely-honed talents in sorting through data, identifying patterns, refining hypotheses iteratively, and generating data-backed insights enhance problem-solving and decision-making, supercharging our business model.
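For comparison, here is roughly what the manual route looks like for someone who does know how to query a database. This is a minimal sketch using Python's built-in `sqlite3` module. The `sales` table, its columns, and the figures are hypothetical stand-ins for whatever records the pen seller actually keeps.

```python
import sqlite3

# In-memory database with a hypothetical sales table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (location TEXT, pens_sold INTEGER)")
conn.executemany(
    "INSERT INTO sales (location, pens_sold) VALUES (?, ?)",
    [("Pune", 120), ("Chennai", 340), ("Pune", 200), ("Delhi", 150), ("Chennai", 90)],
)

# "Where do I sell the most pens?" expressed as an aggregate query.
totals = conn.execute(
    "SELECT location, SUM(pens_sold) AS total "
    "FROM sales GROUP BY location ORDER BY total DESC"
).fetchall()
conn.close()

top_location, top_total = totals[0]
print(f"Most pens sold in {top_location}: {top_total}")
```

An AI assistant answering the same question would, in effect, have to produce and run something equivalent on the user's behalf.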

Top-Down and Bottom-Up Hypothesis Generation

As we discussed earlier, every hypothesis begins with a default action that determines your initial hypotheses and all your subsequent data collection. You look at data, and a lot of it. The significance of that data depends on its effect on, and relevance to, your default action. This would be a top-down approach to hypothesis generation.

There is also the bottom-up method, where you start by going through your data and figuring out if there are any interesting correlations that you could leverage better. This method is usually not as focused as the earlier approach and, as a result, involves even more data collection, processing, and analysis. AI is a stellar tool for Exploratory Data Analysis (EDA). Wading through swathes of data to highlight trends, patterns, gaps, opportunities, errors, and concerns is hardly a challenge for an AI tool equipped with NLP and powered by LLMs.

EDA can help with:

- Cleaning your data

- Understanding your variables

- Analysing relationships between variables

An AI assistant performing EDA can help you review your data, remove redundant data points, identify errors, note relationships, and more. All of this ensures ease, efficiency, and, best of all, speed for your data analysts.
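To make the "analysing relationships between variables" step concrete, the sketch below ranks every pair of columns in a small dataset by the strength of their Pearson correlation, which is one simple way a bottom-up search can surface candidate hypotheses. The column names and values are invented (they loosely echo the snacks and productivity example), and `pearson` and `rank_correlations` are illustrative helpers, not part of any particular tool.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def rank_correlations(columns):
    """Return (name_a, name_b, r) for every column pair, strongest |r| first."""
    pairs = [(a, b, pearson(columns[a], columns[b])) for a, b in combinations(columns, 2)]
    return sorted(pairs, key=lambda pair: abs(pair[2]), reverse=True)


# Hypothetical per-office data.
data = {
    "productivity": [62, 70, 75, 58, 81, 77, 66, 85],
    "snacks_offered": [0, 1, 1, 0, 1, 1, 0, 1],
    "years_of_service": [3, 7, 2, 9, 4, 6, 1, 5],
}

ranked = rank_correlations(data)
for name_a, name_b, r in ranked:
    print(f"{name_a} vs {name_b}: r = {r:+.2f}")
```

A strong correlation at the top of the list is only a lead. It still has to be turned into a hypothesis and tested, since correlation in observational data does not establish cause.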

Good hypotheses are extremely difficult to generate. They are nuanced and, without necessary context, almost impossible to ascertain in a top-down approach. On the other hand, an AI tool adopting an exploratory approach is swift, easily running through available data - internal and external.

If you want to rearrange how your LLM looks at your data, you can also do that. Changing the weight you assign to the various events and categories in your data is a simple process. That’s why LLMs are a great tool in hypothesis generation - analysts can tailor them to their specific use cases.

Ethical Considerations and Challenges

There are numerous reasons why you should adopt AI tools into your hypothesis generation process. But why are they still not as popular as they should be?

Some worry that AI tools can inadvertently pick up human biases through the data they are fed. Others raise privacy and trust concerns. The quality of the data and the reliability of the output are also often questioned. Since LLMs and Generative AI are developing technologies, such issues are bound to arise, but these are all obstacles researchers are earnestly tackling.

One oft-raised complaint against LLM tools (like OpenAI's ChatGPT) is that they 'fill in' gaps in knowledge, providing information where there is none, thus giving inaccurate, embellished, or outright wrong answers; this tendency to "hallucinate" is a major cause for concern. To combat it, newer AI tools have started providing citations with the insights they offer so that their answers become verifiable. Human validation remains an essential step in interpreting AI-generated hypotheses and queries in general. This is why we need collaboration between human and artificial intelligence to ensure optimised performance.

Clearly, hypothesis generation is an immensely time-consuming activity. But AI can take care of all these steps for you. From helping you figure out your default action, determining all the major research questions, initial hypotheses and alternative actions, and exhaustively weeding through your data to collect all relevant points, AI can help make your analysts' jobs easier. It can take any approach - prospective, retrospective, exploratory, top-down, bottom-up, etc. Furthermore, with LLMs, your structured and unstructured data are taken care of, meaning no more worries about messy data! With the wonders of human intuition and the ease and reliability of Generative AI and Large Language Models, you can speed up and refine your process of hypothesis generation based on feedback and new data to provide the best assistance to your business.

© 2023 Akaike Technologies Pvt. Ltd. and/or its associates and partners

Hypothesis Generation and Interpretation

Design Principles and Patterns for Big Data Applications

- © 2024

- Hiroshi Ishikawa

Department of Systems Design, Tokyo Metropolitan University, Hino, Japan

- Provides an integrated perspective on why decisions are made and how the process is modeled

- Presentation of design patterns enables use in a wide variety of big-data applications

- Multiple practical use cases indicate the broad real-world significance of the methods presented

Part of the book series: Studies in Big Data (SBD, volume 139)

About this book

The novel methods and technologies proposed in Hypothesis Generation and Interpretation are supported by the incorporation of historical perspectives on science and an emphasis on the origin and development of the ideas behind their design principles and patterns.

- Hypothesis Generation

- Hypothesis Interpretation

- Data Engineering

- Data Science

- Data Management

- Machine Learning

- Data Mining

- Design Patterns

- Design Principles

Table of contents (8 chapters)

- Front Matter

- Basic Concept

- Science and Hypothesis

- Machine Learning and Integrated Approach

- Hypothesis Generation by Difference

- Methods for Integrated Hypothesis Generation

- Interpretation

- Back Matter

About the author

He has published actively in international, refereed journals and conferences, such as ACM Transactions on Database Systems, IEEE Transactions on Knowledge and Data Engineering, The VLDB Journal, IEEE International Conference on Data Engineering, and ACM SIGSPATIAL and Management of Emergent Digital EcoSystems (MEDES). He has authored and co-authored a dozen books, including Social Big Data Mining (CRC, 2015) and Object-Oriented Database System (Springer-Verlag, 1993).

Bibliographic Information

Book Title: Hypothesis Generation and Interpretation

Book Subtitle: Design Principles and Patterns for Big Data Applications

Authors: Hiroshi Ishikawa

Series Title: Studies in Big Data

DOI: https://doi.org/10.1007/978-3-031-43540-9

Publisher: Springer Cham

eBook Packages: Computer Science, Computer Science (R0)

Copyright Information: The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Switzerland AG 2024

Hardcover ISBN: 978-3-031-43539-3 Published: 02 February 2024

Softcover ISBN: 978-3-031-43542-3 Due: 15 February 2025

eBook ISBN: 978-3-031-43540-9 Published: 01 January 2024

Series ISSN: 2197-6503

Series E-ISSN: 2197-6511

Edition Number: 1

Number of Pages: XII, 372

Number of Illustrations: 52 b/w illustrations, 125 illustrations in colour

Topics: Theory of Computation, Database Management, Data Mining and Knowledge Discovery, Machine Learning, Big Data, Complex Systems

Machine Learning as a Tool for Hypothesis Generation

While hypothesis testing is a highly formalized activity, hypothesis generation remains largely informal. We propose a systematic procedure to generate novel hypotheses about human behavior, which uses the capacity of machine learning algorithms to notice patterns people might not. We illustrate the procedure with a concrete application: judge decisions about who to jail. We begin with a striking fact: The defendant’s face alone matters greatly for the judge’s jailing decision. In fact, an algorithm given only the pixels in the defendant’s mugshot accounts for up to half of the predictable variation. We develop a procedure that allows human subjects to interact with this black-box algorithm to produce hypotheses about what in the face influences judge decisions. The procedure generates hypotheses that are both interpretable and novel: They are not explained by demographics (e.g. race) or existing psychology research; nor are they already known (even if tacitly) to people or even experts. Though these results are specific, our procedure is general. It provides a way to produce novel, interpretable hypotheses from any high-dimensional dataset (e.g. cell phones, satellites, online behavior, news headlines, corporate filings, and high-frequency time series). A central tenet of our paper is that hypothesis generation is in and of itself a valuable activity, and hope this encourages future work in this largely “pre-scientific” stage of science.

This is a revised version of Chicago Booth working paper 22-15 “Algorithmic Behavioral Science: Machine Learning as a Tool for Scientific Discovery.” We gratefully acknowledge support from the Alfred P. Sloan Foundation, Emmanuel Roman, and the Center for Applied Artificial Intelligence at the University of Chicago. For valuable comments we thank Andrei Shleifer, Larry Katz and five anonymous referees, as well as Marianne Bertrand, Jesse Bruhn, Steven Durlauf, Joel Ferguson, Emma Harrington, Supreet Kaur, Matteo Magnaricotte, Dev Patel, Betsy Levy Paluck, Roberto Rocha, Evan Rose, Suproteem Sarkar, Josh Schwartzstein, Nick Swanson, Nadav Tadelis, Richard Thaler, Alex Todorov, Jenny Wang and Heather Yang, as well as seminar participants at Bocconi, Brown, Columbia, ETH Zurich, Harvard, MIT, Stanford, the University of California Berkeley, the University of Chicago, the University of Pennsylvania, the 2022 Behavioral Economics Annual Meetings and the 2022 NBER summer institute. For invaluable assistance with the data and analysis we thank Cecilia Cook, Logan Crowl, Arshia Elyaderani, and especially Jonas Knecht and James Ross. This research was reviewed by the University of Chicago Social and Behavioral Sciences Institutional Review Board (IRB20-0917) and deemed exempt because the project relies on secondary analysis of public data sources. All opinions and any errors are of course our own. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.

Published Versions

Jens Ludwig & Sendhil Mullainathan, 2024. "Machine Learning as a Tool for Hypothesis Generation," The Quarterly Journal of Economics, vol 139(2), pages 751-827.

How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

Table of contents

- What is a hypothesis?

- Developing a hypothesis (with example)

- Hypothesis examples

- Other interesting articles

- Frequently asked questions about writing hypotheses

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

In this example, the independent variable is exposure to the sun – the assumed cause. The dependent variable is the level of happiness – the assumed effect.

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.
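One way to see how this pair of hypotheses could be put to a test is a permutation test on the correlation between attendance and scores. The sketch below is only an illustration: the attendance and score figures are invented, and `pearson` and `permutation_p_value` are hypothetical helper names. A permutation test is one of several reasonable choices here and detects association rather than proving a causal effect.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """One-sided p-value: how often shuffled data correlate at least as strongly."""
    rng = random.Random(seed)
    observed = pearson(xs, ys)
    shuffled = list(ys)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # breaks any real link between xs and ys, as under H0
        if pearson(xs, shuffled) >= observed:
            at_least_as_large += 1
    # Add-one correction so the estimate is never exactly zero.
    return observed, (at_least_as_large + 1) / (n_permutations + 1)


# Hypothetical data for ten first-year students.
lectures_attended = [2, 4, 5, 7, 8, 10, 11, 13, 14, 16]
exam_scores = [48, 52, 50, 61, 60, 68, 66, 75, 74, 82]

r, p_value = permutation_p_value(lectures_attended, exam_scores)
print(f"r = {r:.3f}, p-value = {p_value:.4f}")
print("Reject H0" if p_value < 0.05 else "Fail to reject H0")
```

Shuffling the scores simulates a world in which H0 is true, so the p-value is simply the share of shuffles that look at least as convincing as the real data.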

| Research question | Hypothesis | Null hypothesis |
|---|---|---|
| What are the health benefits of eating an apple a day? | Increasing apple consumption in over-60s will result in decreasing frequency of doctor’s visits. | Increasing apple consumption in over-60s will have no effect on frequency of doctor’s visits. |
| Which airlines have the most delays? | Low-cost airlines are more likely to have delays than premium airlines. | Low-cost and premium airlines are equally likely to have delays. |
| Can flexible work arrangements improve job satisfaction? | Employees who have flexible working hours will report greater job satisfaction than employees who work fixed hours. | There is no relationship between working hour flexibility and job satisfaction. |
| How effective is high school sex education at reducing teen pregnancies? | Teenagers who received sex education lessons throughout high school will have lower rates of unplanned pregnancy than teenagers who did not receive any sex education. | High school sex education has no effect on teen pregnancy rates. |
| What effect does daily use of social media have on the attention span of under-16s? | There is a negative correlation between time spent on social media and attention span in under-16s. | There is no relationship between social media use and attention span in under-16s. |

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved August 30, 2024, from https://www.scribbr.com/methodology/hypothesis/

A Beginner’s Guide to Hypothesis Testing in Business

- 30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.

What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

When it comes to data-driven decision-making, there’s a certain amount of risk that can mislead a professional. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. The danger in this is that, if major strategic decisions are made based on flawed insights, it can lead to wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.

Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the hypothesis that’s being tested is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that’s meant to show there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.

2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which measures how compatible your results are with the null hypothesis.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would still observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis, and the greater the statistical significance of your results. Note that the p-value is not the probability that the alternative hypothesis is correct.
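The definition above can be illustrated by literally re-running a scenario many times under the null hypothesis. The following is a minimal sketch, not a prescribed method: the numbers (a null mean of 0, a standard deviation of 1, a sample of 25, and an observed sample mean of 0.4) are hypothetical, and the function name is invented for illustration.

```python
import random
import statistics

def simulated_p_value(observed_mean, null_mean, sigma, n, n_sims=10_000, seed=0):
    """Estimate a one-sided p-value by simulating sample means under the null."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    at_least_as_extreme = 0
    for _ in range(n_sims):
        # Draw one sample of size n from a world where the null hypothesis is true.
        sample = [rng.gauss(null_mean, sigma) for _ in range(n)]
        if statistics.fmean(sample) >= observed_mean:
            at_least_as_extreme += 1
    # Fraction of simulated results at least as extreme as the observed one.
    return at_least_as_extreme / n_sims

# Hypothetical inputs, chosen only for illustration.
p_sim = simulated_p_value(observed_mean=0.4, null_mean=0.0, sigma=1.0, n=25)
print(f"simulated one-sided p-value: {p_sim:.4f}")
```

The estimate is the share of simulated sample means that land at or beyond the observed one, which is what "at least as extreme, assuming the null hypothesis is correct" means in practice.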

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, also known as one-tailed and two-tailed tests.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.
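To make the difference concrete, the sketch below computes both p-values from the same standardized test statistic. It assumes a z-statistic that follows a standard normal distribution under the null hypothesis and that the observed effect points in the direction the one-sided test expected; the value z = 1.8 and the 0.05 threshold are hypothetical.

```python
from statistics import NormalDist

def p_values_from_z(z):
    """Return (one_sided, two_sided) p-values for a standard normal statistic."""
    upper_tail = 1.0 - NormalDist().cdf(abs(z))
    # One-sided: only the tail in the expected direction counts.
    # Two-sided: both tails count, so the probability doubles.
    return upper_tail, 2.0 * upper_tail

alpha = 0.05  # hypothetical significance level
one_sided, two_sided = p_values_from_z(1.8)
print(f"one-sided p = {one_sided:.4f}, reject: {one_sided <= alpha}")
print(f"two-sided p = {two_sided:.4f}, reject: {two_sided <= alpha}")
```

With these illustrative inputs the one-sided test falls below the threshold while the two-sided test does not, which is why the choice should be made before looking at the data.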

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.

Hypothesis Testing in Statistics - Types | Examples

Lesson 10 of 24 By Avijeet Biswal

In today’s data-driven world, decisions are based on data all the time. Hypotheses play a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at hypothesis testing in statistics.

What Is Hypothesis Testing in Statistics?

Hypothesis testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It is often used to assess the relationship between two statistical variables.

Let's discuss a few real-life examples of statistical hypotheses:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know what hypothesis testing is, look at the formula behind one common test, the z-test.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the population mean assumed under the null hypothesis,

- σ is the population standard deviation,

- n is the sample size.

How Does Hypothesis Testing Work?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternative hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, the two are mutually exclusive: exactly one of them is correct, never both.

Null Hypothesis and Alternative Hypothesis

The null hypothesis is the default assumption that there is no effect or no difference, for example that an event will not occur. It is retained unless the data provide enough evidence to reject it.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

H0 (Null Hypothesis): The average is equal to 95%.

H1 (Alternative Hypothesis): The average is less than 95%.

Another straightforward example is determining whether or not a coin is fair and balanced. The null hypothesis states that the probability of heads is equal to the probability of tails. In contrast, the alternative hypothesis states that the probabilities of heads and tails are not equal.
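The coin example can be tested exactly with the binomial distribution. The sketch below is one possible approach under stated assumptions: the counts (60 heads in 100 flips) are hypothetical, the function name is invented for illustration, and "at least as extreme" is taken to mean at least as far from the expected 50/50 split in either direction.

```python
from math import comb

def fair_coin_p_value(heads, flips):
    """Two-sided exact p-value for H0: P(heads) = 0.5."""
    expected = flips / 2
    observed_gap = abs(heads - expected)
    total = 0.0
    for k in range(flips + 1):
        # Add the probability of every outcome at least as far from the
        # expected count as the one actually observed.
        if abs(k - expected) >= observed_gap:
            total += comb(flips, k) * 0.5 ** flips
    return total

# Hypothetical data: 60 heads in 100 flips.
p_coin = fair_coin_p_value(heads=60, flips=100)
print(f"two-sided p-value: {p_coin:.4f}")
```

A result of exactly 50 heads gives a p-value of 1, since every possible outcome is at least that far from the expected count.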

Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4" (64 inches). We gather a sample of 100 women and determine that their average height is 5'5" (65 inches). The population standard deviation is 2 inches.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (65 – 64) / (2 / √100)

z = 1 / 0.2 = 5

We will reject the null hypothesis, as a z-score of 5 lies far beyond the usual critical values (for example, 1.645 for a one-sided test at α = 0.05), and conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
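The arithmetic for this example can be checked with a few lines of code. This is a sketch under stated assumptions: heights are converted to inches (5'4" = 64 and 5'5" = 65), the standard deviation of 2 is taken to be in inches, and the alternative is one-sided (the average is greater than 5'4"). The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z(sample_mean, null_mean, sigma, n):
    """z = (x̅ - μ0) / (σ / √n) for a known population standard deviation."""
    return (sample_mean - null_mean) / (sigma / sqrt(n))

# Heights in inches; sigma = 2 inches is an assumption about the unit.
z_height = one_sample_z(sample_mean=65.0, null_mean=64.0, sigma=2.0, n=100)

# One-sided p-value: probability of a z-score at least this large under H0.
p_height = 1.0 - NormalDist().cdf(z_height)

print(f"z = {z_height:.2f}, one-sided p-value = {p_height:.2e}")
```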

Steps in Hypothesis Testing

Hypothesis testing is a statistical method to determine if there is enough evidence in a sample of data to infer that a certain condition is true for the entire population. Here’s a breakdown of the typical steps involved in hypothesis testing:

Formulate Hypotheses

- Null Hypothesis (H0): This hypothesis states that there is no effect or difference, and it is the hypothesis you attempt to reject with your test.

- Alternative Hypothesis (H1 or Ha): This hypothesis is what you might believe to be true or hope to prove true. It is usually considered the opposite of the null hypothesis.

Choose the Significance Level (α)

The significance level, often denoted by alpha (α), is the probability of rejecting the null hypothesis when it is true. Common choices for α are 0.05 (5%), 0.01 (1%), and 0.10 (10%).
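One way to see what α means is to simulate many experiments in which the null hypothesis really is true and count how often a test rejects it anyway. The sketch below assumes a two-sided z-test on normally distributed data with a known standard deviation of 1; the sample size, number of experiments, and seed are arbitrary illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def type_i_error_rate(alpha=0.05, n=30, n_experiments=2000, seed=1):
    """Share of experiments that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided cutoff
    rejections = 0
    for _ in range(n_experiments):
        # The data are generated with mean 0, so H0 (mean = 0) holds.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) / (1.0 / sqrt(n))
        if abs(z) > critical:
            rejections += 1
    return rejections / n_experiments

rate = type_i_error_rate()
print(f"observed false-rejection rate: {rate:.3f}")
```

The observed rate should sit close to the chosen α, which is the sense in which α is the probability of rejecting a true null hypothesis.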

Select the Appropriate Test

Choose a statistical test based on the type of data and the hypothesis. Common tests include t-tests, chi-square tests, ANOVA, and regression analysis. The selection depends on data type, distribution, sample size, and whether the hypothesis is one-tailed or two-tailed.

Collect Data

Gather the data that will be analyzed in the test. This data should be representative of the population to infer conclusions accurately.

Calculate the Test Statistic

Based on the collected data and the chosen test, calculate a test statistic that reflects how much the observed data deviates from the null hypothesis.

Determine the p-value

The p-value is the probability of observing test results at least as extreme as the results observed, assuming the null hypothesis is correct. It helps determine the strength of the evidence against the null hypothesis.

Make a Decision

Compare the p-value to the chosen significance level:

- If the p-value ≤ α: Reject the null hypothesis, suggesting sufficient evidence in the data supports the alternative hypothesis.

- If the p-value > α: Do not reject the null hypothesis, suggesting insufficient evidence to support the alternative hypothesis.

Report the Results

Present the findings from the hypothesis test, including the test statistic, p-value, and the conclusion about the hypotheses.

Perform Post-hoc Analysis (if necessary)

Depending on the results and the study design, further analysis may be needed to explore the data more deeply or to address multiple comparisons if several hypotheses were tested simultaneously.

Types of Hypothesis Testing

Z-Test

To determine whether a discovery or relationship is statistically significant, hypothesis testing can use a z-test. It usually checks whether two means are the same (the null hypothesis). A z-test can be applied only when the population standard deviation is known and the sample size is 30 data points or more.

T-Test

A t-test is a statistical test used to compare the means of two groups. It is frequently used in hypothesis testing to determine whether two groups differ or whether a procedure or treatment affects the population of interest.
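As a rough illustration of comparing two group means, the sketch below computes a t-statistic and its approximate degrees of freedom. It uses Welch's form of the test, which does not assume equal variances; that choice, the function name, and the two small data sets are assumptions made for illustration. Turning the statistic into a p-value requires the t-distribution, which the Python standard library does not provide, so that step is left out.

```python
from math import sqrt
from statistics import fmean, variance

def welch_t(a, b):
    """Return (t statistic, approximate degrees of freedom) for two samples."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (fmean(a) - fmean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical measurements for a treated group and a control group.
treated = [5.1, 4.9, 5.6, 5.8, 6.0]
control = [4.1, 4.5, 4.8, 5.0, 4.6]
t_stat, df = welch_t(treated, control)
print(f"t = {t_stat:.3f}, df = {df:.2f}")
```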

Chi-Square

You utilize a Chi-square test for hypothesis testing concerning whether your data is as predicted. To determine if the expected and observed results are well-fitted, the Chi-square test analyzes the differences between categorical variables from a random sample. The test's fundamental premise is that the observed values in your data should be compared to the predicted values that would be present if the null hypothesis were true.
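The comparison of observed and expected counts can be sketched as a goodness-of-fit calculation. The counts below are hypothetical, and the null hypothesis is assumed to be that three categories are equally likely. With three categories there are 2 degrees of freedom, and in that special case the p-value has the closed form exp(-χ²/2); other degrees of freedom need a chi-square distribution function from a statistics library.

```python
from math import exp

def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical counts across three categories.
observed = [50, 30, 20]
# Under H0 every category is equally likely.
expected = [sum(observed) / 3] * 3

chi2 = chi_square_statistic(observed, expected)
# Valid only for 2 degrees of freedom (three categories, no estimated parameters).
p_chi2 = exp(-chi2 / 2)

print(f"chi-square = {chi2:.2f}, p-value = {p_chi2:.6f}")
```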

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to examine a given hypothesis. To conduct a hypothesis test, we must have a postulated value for the parameter.
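The link between the two techniques can be sketched with a z-based interval: a two-sided test at α = 0.05 rejects a postulated mean exactly when that value falls outside the 95% confidence interval. The inputs below reuse the height figures in inches (sample mean 65, postulated mean 64, standard deviation 2, sample size 100) and assume a known population standard deviation; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def z_confidence_interval(sample_mean, sigma, n, confidence=0.95):
    """Confidence interval for a mean when the population sigma is known."""
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z_crit * sigma / sqrt(n)
    return sample_mean - margin, sample_mean + margin

ci_low, ci_high = z_confidence_interval(sample_mean=65.0, sigma=2.0, n=100)

# The postulated parameter from the null hypothesis.
null_mean = 64.0
# Outside the interval means the two-sided test at alpha = 0.05 rejects H0.
reject_null = not (ci_low <= null_mean <= ci_high)

print(f"95% CI: ({ci_low:.3f}, {ci_high:.3f}), reject H0: {reject_null}")
```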