Hypothesis Requirements

Hypotheses are a crucial part of the scientific thinking process, and most professional scientific endeavors are hypothesis-driven. That is, they seek to address a specific, measurable, and answerable question. A well-constructed hypothesis has several characteristics: it is clear, testable, falsifiable, and serves as the basis for constructing a clear set of experiments that will allow the student to discuss why it can be accepted or rejected based on the experiments. We believe that it is important for students who publish with JEI to practice rigorous scientific thinking through generating and testing hypotheses.

This means that manuscripts that merely introduce an invention, a computational method, a new machine/deep learning or AI algorithm, no matter how impressive they are, are not appropriate for JEI. Here are some common examples of unacceptable “hypotheses” relating to engineering projects:

- I hypothesize that my invention/method/machine learning model will work

- I hypothesize that I can build this invention/method/machine learning model

- I hypothesize that my machine/deep learning or AI model will be effective and yield accurate results

If your hypothesis boils down to one of the above hypotheses, your research is engineering-based. If your manuscript is related to engineering and/or computational algorithm development, please read our Guidelines for Engineering- and Machine Learning-Based Projects.

Additionally, review articles, where a review of the existing literature on a topic is presented, are not eligible for publication in JEI at this time.

This video goes over the general hypothesis requirements as they relate to research eligible for publication at JEI. It was created by one of our previous authors and current student advisory board members, Akshya Mahadevan!

When you assess whether your manuscript has a clear, well-constructed hypothesis, please ask whether it meets the following five criteria:

1. It IS NOT discovery or descriptive research

Some research is not hypothesis-driven. Terms used to describe non-hypothesis-driven research are ‘descriptive research,’ in which information is collected without a particular question in mind, and ‘discovery science,’ where large volumes of experimental data are analyzed with the goal of finding new patterns or correlations. These new observations can lead to hypothesis formation and other scientific methodologies. Some examples of discovery or descriptive research include an invention, explaining an engineered design like a program or an algorithm, mining large datasets for potential targets, or even characterizing a new species. However, if you have a pre-existing hypothesis and use large datasets to test it, this is acceptable for submission to JEI.

Another way to assess whether your research is hypothesis-driven is by analyzing the experimental setup. What variables in the experiment are independent, and which are dependent? Do the results of the dependent variable answer the scientific question? Are there positive and negative control groups?

2. It IS original

While your hypothesis does not have to be completely novel within the larger field of your research topic, it cannot be obvious to you, given the background information or experimental setup. You must have developed the hypothesis and designed experiments to test it yourself. This means that the experiments cannot be prescribed – an assigned project from an AP biology course, for example.

3. It IS NOT too general/global

Example 1: “Disease X results from the expression of virulence genes.” Instead, the hypothesis should focus on the expression of a particular gene or a set of genes.

Example 2: “Quantifying X will provide significant increases in income for industry.” This is essentially untestable in an experimental setup and is really a potential outcome, not a hypothesis.

4. It IS NOT too complex

Hypothesis statements that contain words like “and” and “or” are ‘compound hypotheses’. Compound hypotheses are difficult to test because one part may be true while the other is not. When your hypothesis has multiple parts, make sure that your experiments directly test the entire hypothesis. Possible further implications that you cannot test should be addressed in the Discussion section.

5. It DOES NOT misdirect to the researcher

The hypothesis should not address your capabilities. “Discovering the mechanism behind X will enable us to better detect the pathogen.” This example tests the ability of the researchers to take information and use it; this is a result of successful hypothesis-driven research, not a testable hypothesis. Instead, the hypothesis should focus on the experimental system. If it is difficult to state the hypothesis without misdirecting to the researcher, the focus of the research may be discovery science or invention-based, and should be edited to incorporate a properly formulated hypothesis.

Please contact the JEI Editorial Staff at [email protected] if you have any questions regarding the hypothesis of your research.

Open Access

Perspective: Dimensions of the scientific method

Eberhard O. Voit

Affiliation: Department of Biomedical Engineering, Georgia Institute of Technology and Emory University, Atlanta, Georgia, United States of America

E-mail: [email protected]

Published: September 12, 2019

https://doi.org/10.1371/journal.pcbi.1007279

The scientific method has been guiding biological research for a long time. It not only prescribes the order and types of activities that give a scientific study validity and a stamp of approval but also has substantially shaped how we collectively think about the endeavor of investigating nature. The advent of high-throughput data generation, data mining, and advanced computational modeling has thrown the formerly undisputed, monolithic status of the scientific method into turmoil. On the one hand, the new approaches are clearly successful and expect the same acceptance as the traditional methods, but on the other hand, they replace much of the hypothesis-driven reasoning with inductive argumentation, which philosophers of science consider problematic. Intrigued by the enormous wealth of data and the power of machine learning, some scientists have even argued that significant correlations within datasets could make the entire quest for causation obsolete. Many of these issues have been passionately debated during the past two decades, often with scant agreement. It is proffered here that hypothesis-driven, data-mining–inspired, and “allochthonous” knowledge acquisition, based on mathematical and computational models, are vectors spanning a 3D space of an expanded scientific method. The combination of methods within this space will most certainly shape our thinking about nature, with implications for experimental design, peer review and funding, sharing of results, education, medical diagnostics, and even questions of litigation.

Citation: Voit EO (2019) Perspective: Dimensions of the scientific method. PLoS Comput Biol 15(9): e1007279. https://doi.org/10.1371/journal.pcbi.1007279

Editor: Jason A. Papin, University of Virginia, UNITED STATES

Copyright: © 2019 Eberhard O. Voit. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: This work was supported in part by grants from the National Science Foundation ( https://www.nsf.gov/div/index.jsp?div=MCB ) grant NSF-MCB-1517588 (PI: EOV), NSF-MCB-1615373 (PI: Diana Downs) and the National Institute of Environmental Health Sciences ( https://www.niehs.nih.gov/ ) grant NIH-2P30ES019776-05 (PI: Carmen Marsit). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The author has declared that no competing interests exist.

The traditional scientific method: Hypothesis-driven deduction

Research is the undisputed core activity defining science. Without research, the advancement of scientific knowledge would come to a screeching halt. While it is evident that researchers look for new information or insights, the term “research” is somewhat puzzling. Never mind the prefix “re,” which simply means “coming back and doing it again and again,” the word “search” seems to suggest that the research process is somewhat haphazard, that not much of a strategy is involved in the process. One might argue that research a few hundred years ago had the character of hoping for enough luck to find something new. The alchemists come to mind in their quest to turn mercury or lead into gold, or to discover an elixir for eternal youth, through methods we nowadays consider laughable.

Today’s sciences, in stark contrast, are clearly different. Yes, we still try to find something new (and may need a good dose of luck), but the process is anything but unstructured. In fact, it is prescribed in such rigor that it has been given the widely known moniker “scientific method.” This scientific method has deep roots going back to Aristotle and Herophilus (approximately 300 BC), Avicenna and Alhazen (approximately 1000 AD), Grosseteste and Roger Bacon (approximately 1250 AD), and many others, but solidified and crystallized into the gold standard of quality research during the 17th and 18th centuries [ 1 – 7 ]. In particular, Sir Francis Bacon (1561–1626) and René Descartes (1596–1650) are often considered the founders of the scientific method, because they insisted on careful, systematic observations of high quality, rather than metaphysical speculations that were en vogue among the scholars of the time [ 1 , 8 ]. In contrast to their peers, they strove for objectivity and insisted that observations, rather than an investigator’s preconceived ideas or superstitions, should be the basis for formulating a research idea [ 7 , 9 ].

Bacon and his 19th century follower John Stuart Mill explicitly proposed gaining knowledge through inductive reasoning: Based on carefully recorded observations, or from data obtained in a well-planned experiment, generalized assertions were to be made about similar yet (so far) unobserved phenomena [ 7 ]. Expressed differently, inductive reasoning attempts to derive general principles or laws directly from empirical evidence [ 10 ]. An example is the 19th century epigram of the physician Rudolf Virchow, Omnis cellula e cellula . There is no proof that indeed “every cell derives from a cell,” but like Virchow, we have made the observation time and again and never encountered anything suggesting otherwise.

In contrast to induction, the widely accepted, traditional scientific method is based on formulating and testing hypotheses. From the results of these tests, a deduction is made whether the hypothesis is presumably true or false. This type of hypotheticodeductive reasoning goes back to William Whewell, William Stanley Jevons, and Charles Peirce in the 19th century [ 1 ]. By the 20th century, the deductive, hypothesis-based scientific method had become deeply ingrained in the scientific psyche, and it is now taught as early as middle school in order to teach students valid means of discovery [ 8 , 11 , 12 ]. The scientific method has not only guided most research studies but also fundamentally influenced how we think about the process of scientific discovery.

Alas, because biology has almost no general laws, deduction in the strictest sense is difficult. It may therefore be preferable to use the term abduction, which refers to the logical inference toward the most plausible explanation, given a set of observations, although this explanation cannot be proven and is not necessarily true.

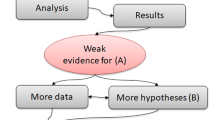

Over the decades, the hypothesis-based scientific method did experience variations here and there, but its conceptual scaffold remained essentially unchanged ( Fig 1 ). Its key is a process that begins with the formulation of a hypothesis that is to be rigorously tested, either in the wet lab or computationally; nonadherence to this principle is seen as lacking rigor and can lead to irreproducible results [ 1 , 13 – 15 ].

Fig 1. The central concept of the traditional scientific method is a falsifiable hypothesis regarding some phenomenon of interest. This hypothesis is to be tested experimentally or computationally. The test results support or refute the hypothesis, triggering a new round of hypothesis formulation and testing.

https://doi.org/10.1371/journal.pcbi.1007279.g001

Going further, the prominent philosopher of science Sir Karl Popper argued that a scientific hypothesis can never be verified but that it can be disproved by a single counterexample. He therefore demanded that scientific hypotheses had to be falsifiable, because otherwise, testing would be moot [ 16 , 17 ] (see also [ 18 ]). As Gillies put it, “successful theories are those that survive elimination through falsification” [ 19 ]. Kelley and Scott agreed to some degree but warned that complete insistence on falsifiability is too restrictive as it would mark many computational techniques, statistical hypothesis testing, and even Darwin’s theory of evolution as nonscientific [ 20 ].

While the hypothesis-based scientific method has been very successful, its exclusive reliance on deductive reasoning is dangerous because according to the so-called Duhem–Quine thesis, hypothesis testing always involves an unknown number of explicit or implicit assumptions, some of which may steer the researcher away from hypotheses that seem implausible, although they are, in fact, true [ 21 ]. According to Kuhn, this bias can obstruct the recognition of paradigm shifts [ 22 ], which require the rethinking of previously accepted “truths” and the development of radically new ideas [ 23 , 24 ]. The testing of simultaneous alternative hypotheses [ 25 – 27 ] ameliorates this problem to some degree but not entirely.

The traditional scientific method is often presented in discrete steps, but it should really be seen as a form of critical thinking, subject to review and independent validation [ 8 ]. It has proven very influential, not only in prescribing valid experimentation but also in shaping the way we attempt to understand nature [ 18 ], teach [ 8 , 12 ], report, publish, and otherwise share information [ 28 ], conduct peer review and award funds through research-supporting agencies [ 29 , 30 ], perform medical diagnostics [ 7 ], and even litigate [ 31 ].

A second dimension of the scientific method: Data-mining–inspired induction

A major shift in biological experimentation occurred with the -omics revolution of the early 21st century. All of a sudden, it became feasible to perform high-throughput experiments that generated thousands of measurements, typically characterizing the expression or abundances of very many, if not all, genes, proteins, metabolites, or other biological quantities in a sample.

The strategy of measuring large numbers of items in a nontargeted fashion is fundamentally different from the traditional scientific method and constitutes a new, second dimension of the scientific method. Instead of hypothesizing and testing whether gene X is up-regulated under some altered condition, the leading question becomes which of the thousands of genes in a sample are up- or down-regulated. This shift in focus elevates the data to the supreme role of revealing novel insights by themselves ( Fig 2 ). As an important, generic advantage over the traditional strategy, this second dimension is free of a researcher’s preconceived notions regarding the molecular mechanisms governing the phenomenon of interest, which are otherwise the key to formulating a hypothesis. The prominent biologists Patrick Brown and David Botstein commented that “the patterns of expression will often suffice to begin de novo discovery of potential gene functions” [ 32 ].

Fig 2. Data-driven research begins with an untargeted exploration, in which the data speak for themselves. Machine learning extracts patterns from the data, which suggest hypotheses that are to be tested in the lab or computationally.

https://doi.org/10.1371/journal.pcbi.1007279.g002

This data-driven, discovery-generating approach is at once appealing and challenging. On the one hand, very many data are explored simultaneously and essentially without bias. On the other hand, the large datasets supporting this approach create a genuine challenge to understanding and interpreting the experimental results because the thousands of data points, often superimposed with a fair amount of noise, make it difficult to detect meaningful differences between sample and control. This situation can only be addressed with computational methods that first “clean” the data, for instance, through the statistically valid removal of outliers, and then use machine learning to identify statistically significant, distinguishing molecular profiles or signatures. In favorable cases, such signatures point to specific biological pathways, whereas other signatures defy direct explanation but may become the launch pad for follow-up investigations [ 33 ].
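The two-step workflow described above (clean the data, then look for statistically significant differences between sample and control) can be sketched as follows. This is a minimal illustration under loud assumptions: the data are simulated, the gene indices are hypothetical, the outlier rule (median absolute deviation) is one simple choice among many, and a per-gene permutation test with a Bonferroni correction stands in for the machine-learning methods the text refers to. It is not the procedure of any cited study.

```python
import random
import statistics

def remove_outliers(values, k=3.0):
    """Drop points more than k scaled MADs from the median (an assumed rule)."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return list(values)
    return [v for v in values if abs(v - med) <= k * 1.4826 * mad]

def permutation_p_value(sample, control, rng, n_perm=1000):
    """Two-sided permutation test on the difference of group means."""
    observed = abs(statistics.fmean(sample) - statistics.fmean(control))
    pooled = list(sample) + list(control)
    n = len(sample)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n]) - statistics.fmean(pooled[n:]))
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Simulated data only: 20 hypothetical genes, 12 samples and 12 controls each.
rng = random.Random(42)
N_GENES, N_REPS, SHIFTED = 20, 12, {0, 1, 2}
alpha = 0.05 / N_GENES  # Bonferroni correction for testing many genes at once

signature = []
for gene in range(N_GENES):
    shift = 5.0 if gene in SHIFTED else 0.0  # only genes 0-2 truly differ
    sample = [rng.gauss(shift, 1.0) for _ in range(N_REPS)]
    control = [rng.gauss(0.0, 1.0) for _ in range(N_REPS)]
    if gene == 10:
        control[0] = 50.0  # a gross measurement artifact in an unchanged gene
    sample, control = remove_outliers(sample), remove_outliers(control)
    if permutation_p_value(sample, control, rng) < alpha:
        signature.append(gene)

print("genes in the distinguishing signature:", signature)
```

The correction for multiple testing matters here: when thousands of quantities are screened at once, some will differ between sample and control by chance alone, which is one reason the text stresses statistically valid procedures.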

Today’s scientists are very familiar with this discovery-driven exploration of “what’s out there” and might consider it a quaint quirk of history that this strategy was at first widely chastised and ridiculed as a “fishing expedition” [ 30 , 34 ]. Strict traditionalists were outraged that rigor was leaving science with the new approach and that sufficient guidelines were unavailable to assure the validity and reproducibility of results [ 10 , 35 , 36 ].

From the viewpoint of philosophy of science, this second dimension of the scientific method uses inductive reasoning and reflects Bacon’s idea that observations can and should dictate the research question to be investigated [ 1 , 7 ]. Allen [ 36 ] forcefully rejected this type of reasoning, stating “the thinking goes, we can now expect computer programs to derive significance, relevance and meaning from chunks of information, be they nucleotide sequences or gene expression profiles… In contrast with this view, many are convinced that no purely logical process can turn observation into understanding.” His conviction goes back to the 18th century philosopher David Hume and again to Popper, who identified as the overriding problem with inductive reasoning that it can never truly reveal causality, even if a phenomenon is observed time and again [ 16 , 17 , 37 , 38 ]. No number of observations, even if they always have the same result, can guard against an exception that would violate the generality of a law inferred from these observations [ 1 , 35 ]. Worse, Popper argued, through inference by induction, we cannot even know the probability of something being true [ 10 , 17 , 36 ].

Others argued that data-driven and hypothesis-driven research actually do not differ all that much in principle, as long as there is cycling between developing new ideas and testing them with care [ 27 ]. In fact, Kell and Oliver [ 34 ] maintained that the exclusive acceptance of hypothesis-driven programs misrepresents the complexities of biological knowledge generation. Similarly refuting the prominent rule of deduction, Platt [ 26 ] and Beard and Kushmerick [ 27 ] argued that repeated inductive reasoning, called strong inference, corresponds to a logically sound decision tree of disproving or refining hypotheses that can rapidly yield firm conclusions; nonetheless, Platt had to admit that inductive inference is not as certain as deduction, because it projects into the unknown. Lander compared the task of obtaining causality by induction to the problem of inferring the design of a microprocessor from input-output readings, which in a strict sense is impossible, because the microprocessor could be arbitrarily complicated; even so, inference often leads to novel insights and therefore is valuable [ 39 ].

An interesting special case of almost pure inductive reasoning is epidemiology, where hypothesis-driven reasoning is rare and instead, the fundamental question is whether data-based evidence is sufficient to associate health risks with specific causes [ 31 , 34 ].

Recent advances in machine learning and “big-data” mining have driven the use of inductive reasoning to unprecedented heights. As an example, machine learning can greatly assist in the discovery of patterns, for instance, in biological sequences [ 40 ]. Going a step further, a pithy article by Anderson [ 41 ] proffered that we may not need to look for causality or mechanistic explanations anymore if we just have enough correlation: “With enough data, the numbers speak for themselves, correlation replaces causation, and science can advance even without coherent models or unified theories.”

Of course, the proposal to abandon the quest for causality caused pushback on philosophical as well as mathematical grounds. Allen [ 10 , 35 ] considered the idea “absurd” that data analysis could enhance understanding in the absence of a hypothesis. He felt confident “that even the formidable combination of computing power with ease of access to data cannot produce a qualitative shift in the way that we do science: the making of hypotheses remains an indispensable component in the growth of knowledge” [ 36 ]. Succi and Coveney [ 42 ] refuted the “most extravagant claims” of big-data proponents very differently, namely by analyzing the theories on which machine learning is founded. They contrasted the assumptions underlying these theories, such as the law of large numbers, with the mathematical reality of complex biological systems. Specifically, they carefully identified genuine features of these systems, such as nonlinearities, nonlocality of effects, fractal aspects, and high dimensionality, and argued that they fundamentally violate some of the statistical assumptions implicitly underlying big-data analysis, like independence of events. They concluded that these discrepancies “may lead to false expectations and, at their nadir, even to dangerous social, economical and political manipulation.” To ameliorate the situation, the field of big-data analysis would need new strong theorems characterizing the validity of its methods and the numbers of data required for obtaining reliable insights. Succi and Coveney go as far as stating that too many data are just as bad as insufficient data [ 42 ].

While philosophical doubts regarding inductive methods will always persist, one cannot deny that -omics-based, high-throughput studies, combined with machine learning and big-data analysis, have been very successful [ 43 ]. Yes, inductive methods cannot truly reveal general laws, no matter how large the datasets, but they do provide insights that are very different from what science had offered before and may at least suggest novel patterns, trends, or principles. As a case in point, if many transcriptomic studies indicate that a particular gene set is involved in certain classes of phenomena, there is probably some truth to the observation, even though it is not mathematically provable. Kepler’s laws of astronomy were arguably derived solely from inductive reasoning [ 34 ].

Notwithstanding the opposing views on inductive methods, successful strategies shape how we think about science. Thus, to take advantage of all experimental options while ensuring quality of research, we must not allow that “anything goes” but instead identify and characterize standard operating procedures and controls that render this emerging scientific method valid and reproducible. A laudable step in this direction was the wide acceptance of “minimum information about a microarray experiment” (MIAME) standards for microarray experiments [ 44 ].

A third dimension of the scientific method: Allochthonous reasoning

Parallel to the blossoming of molecular biology and the rapid rise in the power and availability of computing in the late 20th century, the use of mathematical and computational models became increasingly recognized as relevant and beneficial for understanding biological phenomena. Indeed, mathematical models eventually achieved cornerstone status in the new field of computational systems biology.

Mathematical modeling has been used as a tool of biological analysis for a long time [ 27 , 45 – 48 ]. Interesting for the discussion here is that the use of mathematical and computational modeling in biology follows a scientific approach that is distinctly different from the traditional and the data-driven methods, because it is distributed over two entirely separate domains of knowledge. One consists of the biological reality of DNA, elephants, and roses, whereas the other is the world of mathematics, which is governed by numbers, symbols, theorems, and abstract work protocols. Because the ways of thinking—and even the languages—are different in these two realms, I suggest calling this type of knowledge acquisition “allochthonous” (literally Greek: in or from a “piece of land different from where one is at home”; one could perhaps translate it into modern lingo as “outside one’s comfort zone”). De facto, most allochthonous reasoning in biology presently refers to mathematics and computing, but one might also consider, for instance, the application of methods from linguistics in the analysis of DNA sequences or proteins [ 49 ].

One could argue that biologists have employed “models” for a long time, for instance, in the form of “model organisms,” cell lines, or in vitro experiments, which more or less faithfully reflect features of the organisms of true interest but are easier to manipulate. However, this type of biological model use is rather different from allochthonous reasoning, as it does not leave the realm of biology and uses the same language and often similar methodologies.

A brief discussion of three experiences from our lab may illustrate the benefits of allochthonous reasoning. (1) In a case study of renal cell carcinoma, a dynamic model was able to explain an observed yet nonintuitive metabolic profile in terms of the enzymatic reaction steps that had been altered during the disease [ 50 ]. (2) A transcriptome analysis had identified several genes as displaying significantly different expression patterns during malaria infection in comparison to the state of health. Considered by themselves and focusing solely on genes coding for specific enzymes of purine metabolism, the findings showed patterns that did not make sense. However, integrating the changes in a dynamic model revealed that purine metabolism globally shifted, in response to malaria, from guanine compounds to adenine, inosine, and hypoxanthine [ 51 ]. (3) Data capturing the dynamics of malaria parasites suggested growth rates that were biologically impossible. Speculation regarding possible explanations led to the hypothesis that many parasite-harboring red blood cells might “hide” from circulation and therewith from detection in the blood stream. While experimental testing of the feasibility of the hypothesis would have been expensive, a dynamic model confirmed that such a concealment mechanism could indeed quantitatively explain the apparently very high growth rates [ 52 ]. In all three cases, the insights gained inductively from computational modeling would have been difficult to obtain purely with experimental laboratory methods.

Purely deductive allochthonous reasoning is the ultimate goal of the search for design and operating principles [ 53 – 55 ], which strives to explain why certain structures or functions are employed by nature time and again. An example is a linear metabolic pathway, in which feedback inhibition is essentially always exerted on the first step [ 56 , 57 ]. This generality allows the deduction that a so far unstudied linear pathway is most likely (or even certain to be) inhibited at the first step. Not strictly deductive, but rather abductive, was a study in our lab in which we analyzed time series data with a mathematical model that allowed us to infer the most likely regulatory structure of a metabolic pathway [ 58 , 59 ].

A typical allochthonous investigation begins in the realm of biology with the formulation of a hypothesis ( Fig 3 ). Instead of testing this hypothesis with laboratory experiments, the system encompassing the hypothesis is moved into the realm of mathematics. This move requires two sets of ingredients. One set consists of the simplification and abstraction of the biological system: Any distracting details that seem unrelated to the hypothesis and its context are omitted or represented collectively with other details. This simplification step carries the greatest risk of the entire modeling approach, as omission of seemingly negligible but, in truth, important details can easily lead to wrong results. The second set of ingredients consists of correspondence rules that translate every biological component or process into the language of mathematics [ 60 , 61 ].

Fig 3. This mathematical and computational approach is distributed over two realms, which are connected by correspondence rules.

https://doi.org/10.1371/journal.pcbi.1007279.g003

Once the system is translated, it has become an entirely mathematical construct that can be analyzed purely with mathematical and computational means. The results of this analysis are also strictly mathematical. They typically consist of values of variables, magnitudes of processes, sensitivity patterns, signs of eigenvalues, or qualitative features like the onset of oscillations or the potential for limit cycles. Correspondence rules are used again to move these results back into the realm of biology. As an example, the mathematical result that “two eigenvalues have positive real parts” does not make much sense to many biologists, whereas the interpretation that “the system is not stable at the steady state in question” is readily explained. New biological insights may lead to new hypotheses, which are tested either by experiments or by returning once more to the realm of mathematics. The model design, diagnosis, refinements, and validation consist of several phases, which have been discussed widely in the biomathematical literature. Importantly, each iteration of a typical modeling analysis consists of a move from the biological to the mathematical realm and back.
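The eigenvalue example above can be made concrete with a small sketch of a correspondence rule that moves a mathematical result back into biological language. Everything here is an illustrative assumption: the function names are hypothetical, the two 2x2 Jacobian matrices are made-up numbers rather than models of any real pathway, and the interpretation rests on standard linear stability analysis at a steady state.

```python
import cmath

def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of the matrix [[a, b], [c, d]] from its trace and determinant."""
    half_trace = (a + d) / 2.0
    det = a * d - b * c
    root = cmath.sqrt(half_trace ** 2 - det)  # complex square root handles oscillatory cases
    return half_trace + root, half_trace - root

def interpret(eigs, tol=1e-12):
    """Correspondence rule: translate the mathematical result into a biological statement."""
    n_pos = sum(1 for lam in eigs if lam.real > tol)
    if n_pos > 0:
        return f"{n_pos} eigenvalue(s) with positive real part: the steady state is not stable"
    if all(lam.real < -tol for lam in eigs):
        return "all eigenvalues have negative real parts: the steady state is stable"
    return "borderline case: linear analysis is inconclusive"

# Hypothetical Jacobians evaluated at a steady state (made-up numbers).
damped = eigenvalues_2x2(-1.0, 0.5, 0.2, -2.0)
growing = eigenvalues_2x2(0.5, -2.0, 2.0, 0.5)

print(interpret(damped))
print(interpret(growing))
```

In the second case, both eigenvalues have positive real parts and nonzero imaginary parts, which a modeler would read as perturbations that grow while oscillating; the biological statement is simply that the system does not return to this steady state.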

The reasoning within the realm of mathematics is often deductive, in the form of an Aristotelian syllogism, such as the well-known “All men are mortal; Socrates is a man; therefore, Socrates is mortal.” However, the reasoning may also be inductive, as is the case with large-scale Monte-Carlo simulations that generate arbitrarily many “observations,” although they cannot reveal universal principles or theorems. An example is a simulation randomly drawing numbers in an attempt to show that every real number has an inverse. The simulation will always attest to this hypothesis but fail to discover the truth because it will never randomly draw 0. Generically, computational models may be considered sets of hypotheses, formulated as equations or as algorithms that reflect our perception of a complex system [ 27 ].
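The Monte-Carlo example can be written out in a few lines. This is a minimal sketch, assuming draws from a uniform distribution on [-1, 1] with a fixed seed; the function names are hypothetical. The simulation keeps "confirming" the hypothesis, while a single deliberately chosen counterexample refutes it.

```python
import random

def has_inverse(x: float) -> bool:
    """Return True if 1/x can be computed, False if x has no multiplicative inverse."""
    try:
        1.0 / x
        return True
    except ZeroDivisionError:
        return False

def monte_carlo_check(n_draws: int, seed: int = 0) -> int:
    """Count random draws from [-1, 1] that lack an inverse."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n_draws):
        x = rng.uniform(-1.0, 1.0)
        if not has_inverse(x):
            failures += 1
    return failures

# Inductive "evidence": many observations, all consistent with the hypothesis.
failures = monte_carlo_check(100_000)
print(f"draws without an inverse: {failures}")

# Deductive counterexample: one targeted case settles the question.
print(f"x = 0 has an inverse: {has_inverse(0.0)}")
```

Increasing the number of draws does not help, because hitting exactly 0 by chance is vanishingly unlikely; the counterexample has to come from reasoning about the problem rather than from more sampling.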

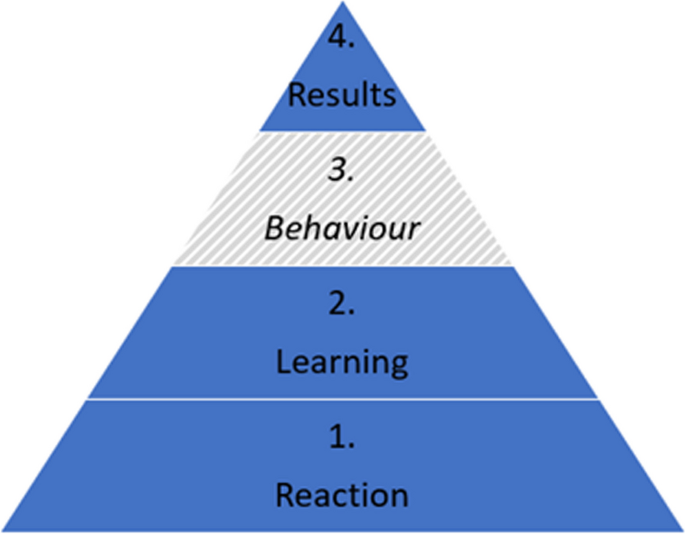

Impact of the multidimensional scientific method on learning

Almost all we know in biology has come from observation, experimentation, and interpretation. The traditional scientific method not only offered clear guidance for this knowledge gathering, but it also fundamentally shaped the way we think about the exploration of nature. When presented with a new research question, scientists were trained to think immediately in terms of hypotheses and alternatives, pondering the best feasible ways of testing them, and designing in their minds strong controls that would limit the effects of known or unknown confounders. Shaped by the rigidity of this ever-repeating process, our thinking became trained to move forward one well-planned step at a time. This modus operandi was rigid and exact. It also minimized the erroneous pursuit of long speculative lines of thought, because every step required testing before a new hypothesis was formed. While effective, the process was also very slow and driven by ingenuity—as well as bias—on the scientist’s part. This bias was sometimes a hindrance to necessary paradigm shifts [ 22 ].

High-throughput data generation, big-data analysis, and mathematical-computational modeling changed all that within a few decades. In particular, the acceptance of inductive principles and of the allochthonous use of nonbiological strategies to answer biological questions created an unprecedented mix of successes and chaos. To the horror of traditionalists, the importance of hypotheses became minimized, and the suggestion spread that the data would speak for themselves [ 36 ]. Importantly, within this fog of “anything goes,” the fundamental question arose of how to determine whether an experiment was valid.

Because agreed-upon operating procedures affect research progress and interpretation, thinking, teaching, and sharing of results, this question requires a deconvolution of scientific strategies. Here I proffer that the single scientific method of the past should be expanded toward a vector space of scientific methods, with spanning vectors that correspond to different dimensions of the scientific method ( Fig 4 ).

The traditional hypothesis-based deductive scientific method is expanded into a 3D space that allows for synergistic blends of methods that include data-mining–inspired, inductive knowledge acquisition, and mathematical model-based, allochthonous reasoning.

https://doi.org/10.1371/journal.pcbi.1007279.g004

Obviously, all three dimensions have their advantages and drawbacks. The traditional, hypothesis-driven deductive method is philosophically “clean,” except that it is confounded by preconceptions and assumptions. The data-mining–inspired inductive method cannot offer universal truths but helps us explore very large spaces of factors that contribute to a phenomenon. Allochthonous, model-based reasoning can be performed mentally, with paper and pencil, through rigorous analysis, or with a host of computational methods that are precise and disprovable [ 27 ]. At the same time, these methods are incomparably faster, cheaper, and much more comprehensive than experiments in molecular biology. This reduction in cost and time, and the increase in coverage, may eventually have far-reaching consequences, as we can already fathom from much of modern physics.

Due to its long history, the traditional dimension of the scientific method is supported by clear and very strong standard operating procedures. Similarly strong procedures need to be developed for the other two dimensions. The MIAME rules for microarray analysis provide an excellent example [ 44 ]. On the mathematical modeling front, no such rules are generally accepted yet, but trends toward them seem to be emerging on the horizon. For instance, it seems to be becoming common practice to include sensitivity analyses in typical modeling studies and to assess the identifiability or sloppiness of ensembles of parameter combinations that fit a given dataset well [ 62 , 63 ].

From a philosophical point of view, it seems unlikely that objections against inductive reasoning will disappear. However, instead of pitting hypothesis-based deductive reasoning against inductivism, it seems more beneficial to determine how the different methods can be synergistically blended ( cf . [ 18 , 27 , 34 , 42 ]) as linear combinations of the three vectors of knowledge acquisition ( Fig 4 ). It is at this point unclear to what degree the identified three dimensions are truly independent of each other, whether additional dimensions should be added [ 24 ], or whether the different versions could be amalgamated into a single scientific method [ 18 ], especially if it is loosely defined as a form of critical thinking [ 8 ]. Nobel Laureate Percy Bridgman even concluded that “science is what scientists do, and there are as many scientific methods as there are individual scientists” [ 8 , 64 ].

Combinations of the three spanning vectors of the scientific method have been emerging for some time. Many biologists already use inductive high-throughput methods to develop specific hypotheses that are subsequently tested with deductive or further inductive methods [ 34 , 65 ]. In terms of including mathematical modeling, physics and geology have been leading the way for a long time, often by beginning an investigation in theory, before any actual experiment is performed. It will benefit biology to look into this strategy and to develop best practices of allochthonous reasoning.

The blending of methods may take quite different shapes. Early on, Ideker and colleagues [ 65 ] proposed an integrated experimental approach for pathway analysis that offered a glimpse of new experimental strategies within the space of scientific methods. In a similar vein, Covert and colleagues [ 66 ] included computational methods into such an integrated approach. Additional examples of blended analyses in systems biology can be seen in other works, such as [ 43 , 67 – 73 ]. Generically, it is often beneficial to start with big data, determine patterns in associations and correlations, then switch to the mathematical realm in order to filter out spurious correlations in a high-throughput fashion. If this procedure is executed in an iterative manner, the “surviving” associations have an increased level of confidence and are good candidates for further experimental or computational testing (personal communication from S. Chandrasekaran).

If each component of a blended scientific method follows strict, commonly agreed guidelines, “linear combinations” within the 3D space can also be checked objectively, per deconvolution. In addition, guidelines for synergistic blends of component procedures should be developed. If we carefully monitor such blends, time will presumably indicate which method is best for which task and how the different approaches optimally inform each other. For instance, it will be interesting to study whether there is an optimal sequence of experiments along the three axes for a particular class of tasks. Big-data analysis together with inductive reasoning might be optimal for creating initial hypotheses and possibly refuting wrong speculations (“we had thought this gene would be involved, but apparently it isn’t”). If the logic of an emerging hypothesis can be tested with mathematical and computational tools, it will almost certainly be faster and cheaper than an immediate launch into wet-lab experimentation. It is also likely that mathematical reasoning will be able to refute some apparently feasible hypotheses and suggest amendments. Ultimately, the “surviving” hypotheses must still be tested for validity through conventional experiments. Deconvolving current practices and optimizing the combination of methods within the 3D or higher-dimensional space of scientific methods will likely result in better planning of experiments and in synergistic blends of approaches that have the potential to address some of the grand challenges in biology.

Acknowledgments

The author is very grateful to Dr. Sriram Chandrasekaran and Ms. Carla Kumbale for superb suggestions and invaluable feedback.

- 2. Gauch HGJ. Scientific Method in Brief. Cambridge, UK: Cambridge University Press; 2012.

- 3. Gimbel S (Ed). Exploring the Scientific Method: Cases and Questions. Chicago, IL: The University of Chicago Press; 2011.

- 8. McLelland CV. The nature of science and the scientific method. Boulder, CO: The Geological Society of America; 2006.

- 9. Ladyman J. Understanding Philosophy of Science. Abington, Oxon: Routledge; 2002.

- 16. Popper KR. Conjectures and Refutations: The Growth of Scientific Knowledge. Abingdon, Oxon: Routledge and Kegan Paul; 1963.

- 17. Popper KR. The Logic of Scientific Discovery. Abingdon, Oxon: Routledge; 2002.

- 21. Harding SE. Can theories be refuted?: Essays on the Duhem-Quine thesis. Dordrecht-Holland / Boston, MA: D. Reidel Publ. Co; 1976.

- 22. Kuhn TS. The Structure of Scientific Revolutions. Chicago, IL: University of Chicago Press; 1962.

- 37. Hume D. An enquiry concerning human understanding. Oxford, U.K.: Oxford University Press; 1748/1999.

- 38. Popper KR. Objective knowledge. An evolutionary approach. Oxford, U.K.: Oxford University Press; 1972.

- 47. von Bertalanffy L. General System Theory: Foundations, Development, Applications. New York: George Braziller; 1968.

- 48. May RM. Stability and Complexity in Model Ecosystems. Princeton, NJ: Princeton University Press; 1973.

- 57. Savageau MA. Biochemical Systems Analysis: A Study of Function and Design in Molecular Biology. Reading, Mass: Addison-Wesley Pub. Co. Advanced Book Program (reprinted 2009); 1976.

- 60. Reither F. Über das Denken mit Analogien und Modellen. In: Schaefer G, Trommer G, editors. Denken in Modellen. Braunschweig, Germany: Georg Westermann Verlag; 1977.

- 61. Voit EO. A First Course in Systems Biology, 2nd Ed. New York, NY: Garland Science; 2018.

- 64. Bridgman PW. Reflections of a Physicist. New York, NY: reprinted by Kessinger Legacy Reprints, 2010; 1955.

Data-driven hypothesis generation in clinical research: what we learned from a human subject study

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve the process goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first provide a literature review on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, scientific thinking has shown excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals the lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the time participants need, on average, to generate a hypothesis and can also reduce the number of cognitive events needed to generate each hypothesis. As a counterpoint, this exploration also indicates that hypotheses generated with VIADS receive significantly lower quality ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research. This study provides supporting evidence to conduct a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives .

How to Implement Hypothesis-Driven Development

Remember back to the time when we were in high school science class. Our teachers had a framework for helping us learn – an experimental approach based on the best available evidence at hand. We were asked to make observations about the world around us, then attempt to form an explanation or hypothesis to explain what we had observed. We then tested this hypothesis by predicting an outcome based on our theory that would be achieved in a controlled experiment – if the outcome was achieved, we had proven our theory to be correct.

We could then apply this learning to inform and test other hypotheses by constructing more sophisticated experiments, and tuning, evolving or abandoning any hypothesis as we made further observations from the results we achieved.

Experimentation is the foundation of the scientific method, which is a systematic means of exploring the world around us. Although some experiments take place in laboratories, it is possible to perform an experiment anywhere, at any time, even in software development.

Practicing Hypothesis-Driven Development is thinking about the development of new ideas, products and services – even organizational change – as a series of experiments to determine whether an expected outcome will be achieved. The process is iterated upon until a desirable outcome is obtained or the idea is determined to be not viable.

We need to change our mindset to view our proposed solution to a problem statement as a hypothesis, especially in new product or service development – the market we are targeting, how a business model will work, how code will execute and even how the customer will use it.

We do not do projects anymore, only experiments. Customer discovery and Lean Startup strategies are designed to test assumptions about customers. Quality Assurance is testing system behavior against defined specifications. The experimental principle also applies in Test-Driven Development – we write the test first, then use the test to validate that our code is correct, and succeed if the code passes the test. Ultimately, product or service development is a process to test a hypothesis about system behaviour in the environment or market it is developed for.

The key outcome of an experimental approach is measurable evidence and learning.

Learning is the information we have gained from conducting the experiment. Did what we expect to occur actually happen? If not, what did and how does that inform what we should do next?

In order to learn, we need to use the scientific method for investigating phenomena, acquiring new knowledge, and correcting and integrating previous knowledge back into our thinking.

As the software development industry continues to mature, we now have an opportunity to leverage improved capabilities such as Continuous Design and Delivery to maximize our potential to learn quickly what works and what does not. By taking an experimental approach to information discovery, we can more rapidly test our solutions against the problems we have identified in the products or services we are attempting to build. The goal is to optimize our effectiveness at solving the right problems, rather than simply becoming a feature factory that continually builds solutions.

The steps of the scientific method are to:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State the indicators to evaluate if the experiment has succeeded

- Conduct the experiment

- Evaluate the results of the experiment

- Accept or reject the hypothesis

- If necessary, make and test a new hypothesis

Using an experimentation approach to software development

We need to challenge the concept of having fixed requirements for a product or service. Requirements are valuable when teams execute a well known or understood phase of an initiative, and can leverage well understood practices to achieve the outcome. However, when you are in an exploratory, complex and uncertain phase you need hypotheses.

Handing teams a set of business requirements reinforces an order-taking approach and mindset that is flawed.

Business does the thinking and ‘knows’ what is right. The purpose of the development team is to implement what they are told. But when operating in an area of uncertainty and complexity, all the members of the development team should be encouraged to think and share insights on the problem and potential solutions. A team simply taking orders from a business owner is not utilizing the full potential, experience and competency that a cross-functional multi-disciplined team offers.

Framing hypotheses

The traditional user story framework is focused on capturing requirements for what we want to build and for whom, to enable the user to receive a specific benefit from the system.

As A… <role>

I Want… <goal/desire>

So That… <receive benefit>

Behaviour Driven Development (BDD) and Feature Injection aim to improve the original framework by supporting communication and collaboration between developers, testers and non-technical participants in a software project.

In Order To… <receive benefit>

As A… <role>

I Want… <goal/desire>

When viewing work as an experiment, the traditional story framework is insufficient. As in our high school science experiment, we need to define the steps we will take to achieve the desired outcome. We then need to state the specific indicators (or signals) we expect to observe that provide evidence that our hypothesis is valid. These need to be stated before conducting the test to reduce biased interpretations of the results.

If we observe signals that indicate our hypothesis is correct, we can be more confident that we are on the right path and can alter the user story framework to reflect this.

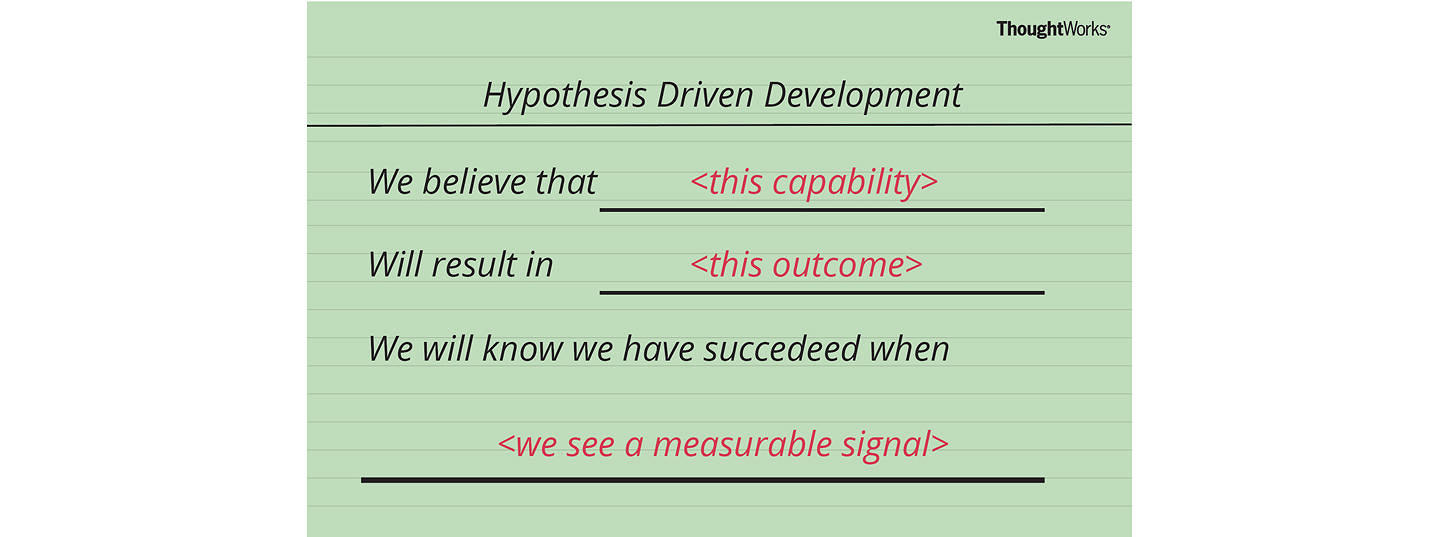

Therefore, a user story structure to support Hypothesis-Driven Development would be:

We believe < this capability >

What functionality will we develop to test our hypothesis? By defining a ‘test’ capability of the product or service that we are attempting to build, we identify the functionality and hypothesis we want to test.

Will result in < this outcome >

What is the expected outcome of our experiment? What is the specific result we expect to achieve by building the ‘test’ capability?

We will know we have succeeded when < we see a measurable signal >

What signals will indicate that the capability we have built is effective? What key metrics (qualitative or quantitative) will we measure to provide evidence that our experiment has succeeded and give us enough confidence to move to the next stage?

The threshold you use for statistical significance will depend on your understanding of the business and context you are operating within. Not every company has the user sample size of Amazon or Google to run statistically significant experiments in a short period of time. Limits and controls need to be defined by your organization to determine acceptable evidence thresholds that will allow the team to advance to the next step.

For example if you are building a rocket ship you may want your experiments to have a high threshold for statistical significance. If you are deciding between two different flows intended to help increase user sign up you may be happy to tolerate a lower significance threshold.
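
To make the idea of context-dependent thresholds concrete, here is a minimal sketch using a pooled two-proportion z-test on A/B sign-up data. The test choice, the conversion counts, and the two threshold values are assumptions for illustration; your organization would set its own.

```python
import math


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical experiment: flow A vs. flow B, 2000 visitors each.
p_value = two_proportion_p_value(conv_a=200, n_a=2000, conv_b=245, n_b=2000)

# Hypothetical evidence thresholds for two very different contexts.
thresholds = {"sign-up flow": 0.10, "rocket ship": 0.001}
for context, alpha in thresholds.items():
    decision = "enough evidence to advance" if p_value < alpha else "keep testing"
    print(f"{context}: p={p_value:.4f}, threshold={alpha} -> {decision}")
```

The same data clears the lenient sign-up threshold but not the strict one, which is the trade-off described above.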

The final step is to clearly and visibly state any assumptions made about our hypothesis, to create a feedback loop for the team to provide further input, debate and understanding of the circumstances under which we are performing the test. Are the assumptions valid, and do they make sense from a technical and business perspective?

Hypotheses, when aligned to your MVP, can provide a testing mechanism for your product or service vision. They can test the most uncertain areas of your product or service, in order to gain information and improve confidence.

An example of a Hypothesis-Driven Development user story is:

Business story

We Believe That increasing the size of hotel images on the booking page

Will Result In improved customer engagement and conversion

We Will Know We Have Succeeded When we see a 5% increase in customers who review hotel images who then proceed to book in 48 hours.
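
One way to keep the success signal explicit is to record the story as data and check it against measurements. The sketch below is illustrative only: the `HypothesisStory` class, the reading of "5% increase" as a relative uplift, and the conversion rates are all assumptions, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypothesisStory:
    """A user story in the 'We believe / Will result in / We will know' form."""
    belief: str
    outcome: str
    signal: str
    min_relative_uplift: float  # assumed reading: 0.05 means a 5% relative increase

    def succeeded(self, baseline_rate: float, observed_rate: float) -> bool:
        """Compare the measured uplift with the threshold stated before the test."""
        uplift = (observed_rate - baseline_rate) / baseline_rate
        return uplift >= self.min_relative_uplift


story = HypothesisStory(
    belief="increasing the size of hotel images on the booking page",
    outcome="improved customer engagement and conversion",
    signal="customers who review hotel images who then proceed to book in 48 hours",
    min_relative_uplift=0.05,
)

# Hypothetical measurements: 20.0% baseline conversion, 21.2% after the change.
print(story.succeeded(baseline_rate=0.200, observed_rate=0.212))
```

Because the threshold is fixed in the story before any data is collected, the check leaves less room for biased interpretation of the results.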

It is imperative to have effective monitoring and evaluation tools in place when using an experimental approach to software development in order to measure the impact of our efforts and provide a feedback loop to the team. Otherwise we are essentially blind to the outcomes of our efforts.

In agile software development we define working software as the primary measure of progress.

By combining Continuous Delivery and Hypothesis-Driven Development we can now define working software and validated learning as the primary measures of progress.

Ideally we should not say we are done until we have measured the value of what is being delivered – in other words, gathered data to validate our hypothesis.

Examples of how to gather data include performing A/B testing to test a hypothesis and measure the change in customer behaviour. Alternative testing options include customer surveys, paper prototypes, and user and/or guerrilla testing.

One example of a company we have worked with that uses Hypothesis-Driven Development is lastminute.com. The team formulated a hypothesis that customers are only willing to pay a max price for a hotel based on the time of day they book. Tom Klein, CEO and President of Sabre Holdings, shared the story of how they improved conversion by 400% within a week.

Combining practices such as Hypothesis-Driven Development and Continuous Delivery accelerates experimentation and amplifies validated learning. This gives us the opportunity to accelerate the rate at which we innovate while relentlessly reducing cost, leaving our competitors in the dust. Ideally, we can achieve one-piece flow: atomic changes that enable us to identify causal relationships between the changes we make to our products and services, and their impact on key metrics.

As Kent Beck said, “Test-Driven Development is a great excuse to think about the problem before you think about the solution”. Hypothesis-Driven Development is a great opportunity to test what you think the problem is, before you work on the solution.

What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context .

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis ?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested . For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student engages in – sounds reasonable, right? It’s based on reasonable assumptions , underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper . In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis .

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity , clarity and testability .

Let’s take a look at these more closely.

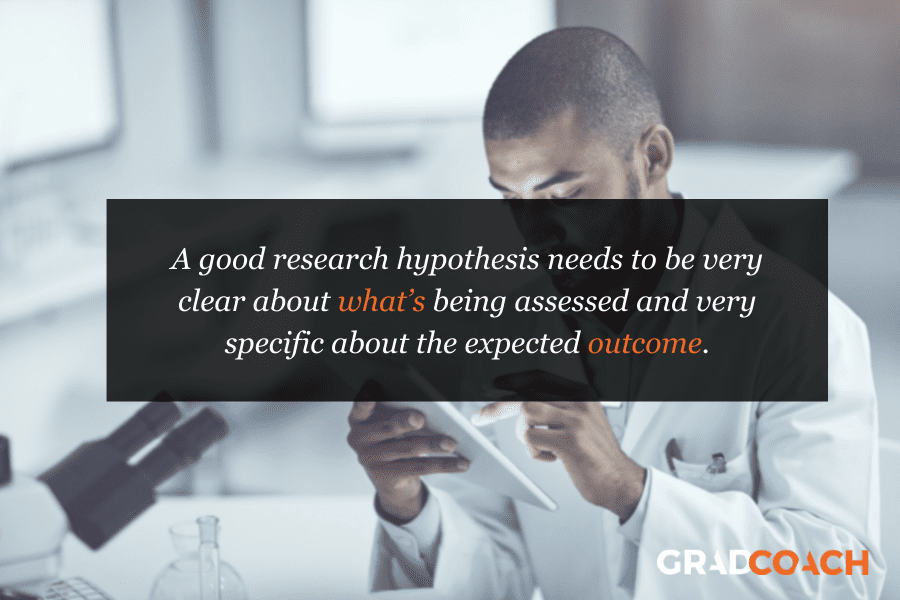

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’s being assessed (who or what variables are involved) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that to the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague , and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

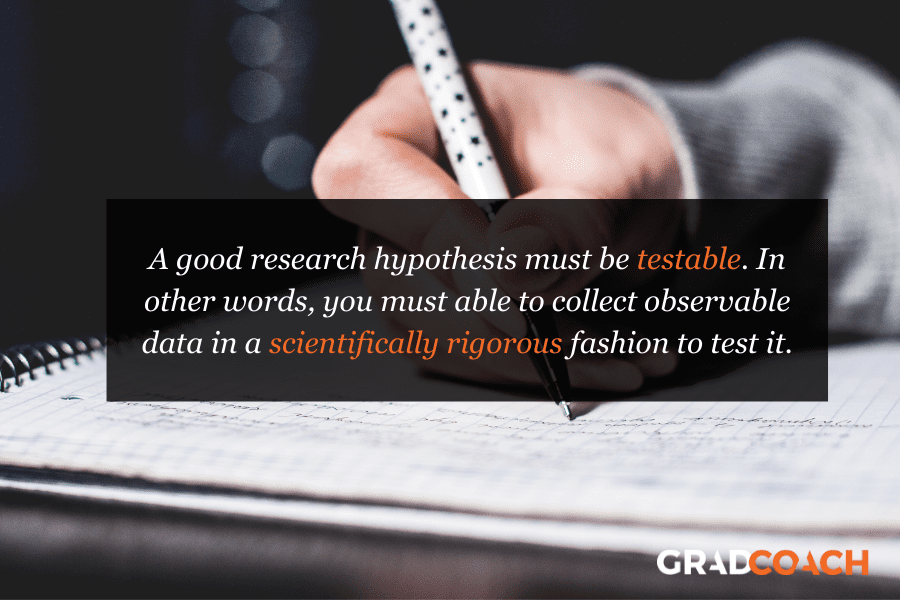

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.
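
As a rough sketch of what such a comparison could look like, the snippet below runs a one-sided permutation test on two small groups of scores. The permutation test is one of several possible choices (a t-test would be another), and the scores, group sizes, and function name are made up for illustration.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """One-sided p-value for mean(group_a) > mean(group_b) under random relabeling."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / (len(pooled) - n_a)
        if diff >= observed:
            at_least_as_large += 1
    # Add-one correction so the estimate is never exactly zero.
    return (at_least_as_large + 1) / (n_permutations + 1)


# Hypothetical standardised test scores for the two groups of students.
slept_8_plus = [78, 85, 90, 72, 88, 81, 94, 79, 86, 83]
slept_less = [70, 75, 82, 68, 77, 73, 80, 71, 76, 74]

p_value = permutation_p_value(slept_8_plus, slept_less)
print(f"p = {p_value:.4f}")
```

A small p-value here would mean that a difference this large rarely arises when group labels are assigned at random, which is what "statistically significant" refers to in this context.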

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By who? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If you do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis, alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that there is no real effect or relationship in the population being studied, and that any differences seen in a given set of observations are due to chance alone.

And there you have it – hypotheses in a nutshell.

17 Comments

Very useful information. I benefit more from getting more information in this regard.

Very great insight,educative and informative. Please give meet deep critics on many research data of public international Law like human rights, environment, natural resources, law of the sea etc

In a book I read a distinction is made between null, research, and alternative hypothesis. As far as I understand, alternative and research hypotheses are the same. Can you please elaborate? Best Afshin

This is a self explanatory, easy going site. I will recommend this to my friends and colleagues.

Very good definition. How can I cite your definition in my thesis? Thank you. Is nul hypothesis compulsory in a research?

It’s a counter-proposal to be proven as a rejection

Please what is the difference between alternate hypothesis and research hypothesis?

It is a very good explanation. However, it limits hypotheses to statistically tasteable ideas. What about for qualitative researches or other researches that involve quantitative data that don’t need statistical tests?

In qualitative research, one typically uses propositions, not hypotheses.

could you please elaborate it more

I’ve benefited greatly from these notes, thank you.

This is very helpful

well articulated ideas are presented here, thank you for being reliable sources of information

Excellent. Thanks for being clear and sound about the research methodology and hypothesis (quantitative research)

I have only a simple question regarding the null hypothesis. – Is the null hypothesis (Ho) known as the reversible hypothesis of the alternative hypothesis (H1? – How to test it in academic research?

this is very important note help me much more

Hi” best wishes to you and your very nice blog”

- Published: April 2009

Defining the scientific method

Nature Methods volume 6, page 237 (2009)

The rise of 'omics' methods and data-driven research presents new possibilities for discovery but also stimulates disagreement over how science should be conducted and even how it should be defined.

Please visit methagora to view and post comments on this article.

Modern biological research methods are powerful tools in biologists' arsenal for investigating biology. But is the ability of these methods to amass extraordinary amounts of data altering the nature of scientific inquiry?

As schoolchildren we are taught that the scientific method involves a question and suggested explanation (hypothesis) based on observation, followed by the careful design and execution of controlled experiments, and finally validation, refinement or rejection of this hypothesis. Developed by thinkers including Bacon, Descartes and Peirce, this methodology has been credited with much of science's success. Modern philosophers such as Feyerabend have argued that this is not how most science is conducted, but up to now most modern scientists have subscribed to the hypothesis-centric scientific method.

Scientists' defense of this methodology has often been vigorous, likely owing to the historic success of predictive hypothesis-driven mechanistic theories in physics, the dangers inherent in 'fishing expeditions' and the likelihood of false correlations based on data from improperly designed experiments. For example, the Human Genome Project was considered by many at the time to be a serious break with the notion that proper biological research must be hypothesis-driven. But the project proceeded because others successfully argued that it would yield information vital for understanding human biology.

Methodological developments are now making it possible to obtain massive amounts of 'omics' data on a variety of biological constituents. These immense datasets allow biologists to generate useful predictions (for example, gene-finding and function or protein structure and function) using machine learning and statistics that do not take into account the underlying mechanisms that dictate design and function—considerations that would form the basis of a traditional hypothesis.

Now that the bias against data-driven investigation has weakened, the desire to simplify 'omics' data reuse has led to the establishment of minimal information requirements for different types of primary data. The hope is that this will allow new analyses and predictions using aggregated data from disparate experiments.

Last summer, the editor-in-chief of Wired, Chris Anderson, went so far as to argue that biology is too complex for hypotheses and models, and that the classical scientific method is dead. Instead, he called for these methods to be replaced by powerful correlative analyses of massive amounts of data gathered by new technologies, similar to how Google Translate relies only on correlative analyses of documents on the internet.

This generated quite a response from the scientific community with California Institute of Technology physicist Sean Carroll arguing in Edge that “hypotheses aren't simply useful tools in some potentially outmoded vision of science; they are the whole point. Theory is understanding, and understanding our world is what science is all about.”

Is the generation of parts lists and correlations in the absence of functional models science? Based on the often accepted definition of the scientific method, the answer would be a qualified no. But not everyone would agree. Carroll's colleague, David Goodstein, previously stated in a Thesis article in Nature Physics that “science, it turns out, is whatever scientists do.” A philosopher would find this to be a circular and unfulfilling argument, but it is likely that many biologists who are more interested in the practical outcomes of their methods than their philosophical underpinnings would agree with this sentiment.

But the rise of methodologies that generate massive amounts of data does not dictate that biology should be data-driven. In a return to hypothesis-driven research, systems biologists are attempting to use the same 'omics' methods to generate data for use in quantitative biological models. Hypotheses are needed before data collection because model-driven quantitative analyses require rich dynamic data collected under defined conditions and stimuli.

So where does this leave us? It is likely that the high complexity of biology will actually make full biological understanding by purely correlative analysis impossible. This method works for Google because language has simple rules and low complexity. Biology has neither constraint. Correlations in large datasets may be able to provide some useful answers, but not all of them.

But 'omics' data can provide information on the size and composition of biological entities and thus determine the boundaries of the problem at hand. Biologists can then proceed to investigate function using classical hypothesis-driven experiments. It is still unclear whether even this marriage of the two methods will deliver a complete understanding of biology, but it arguably has a better chance than either method on its own.

Philosophers are free to argue whether one method is science and the other is not. Ultimately the public who funds the work and the biologists who conduct it want results that will materially impact the quality of life regardless of what the method is called.

Defining the scientific method. Nat Methods 6, 237 (2009). https://doi.org/10.1038/nmeth0409-237

Hypothesis-driven science in large-scale studies: the case of GWAS

- Open access

- Published: 19 September 2021

- Volume 36, article number 46 (2021)

- James Read (ORCID: orcid.org/0000-0003-2226-0340)

- Sumana Sharma (ORCID: orcid.org/0000-0003-0598-2181)

It is now well-appreciated by philosophers that contemporary large-scale ‘-omics’ studies in biology stand in non-trivial relationships to more orthodox hypothesis-driven approaches. These relationships have been clarified by Ratti (2015); however, there remains much more to be said regarding how an important field of genomics cited in that work, ‘genome-wide association studies’ (GWAS), fits into this framework. In the present article, we propose a revision to Ratti’s framework more suited to studies such as GWAS. In the process of doing so, we introduce to the philosophical literature novel exploratory experiments in (phospho)proteomics, and demonstrate how these experiments interplay with the above considerations.

Introduction

The fields of molecular biology and genetics were transformed upon completion in 2001 of the Human Genome Project (Lander et al. 2001). This provided for the first time near-complete information on the genetic makeup of human beings, and marked the advent of what has become known as the ‘post-genomics’ era, defined by the availability of large-scale data sets derived from ‘genome-scale’ approaches. In turn, this has led to a shift in biological methodology, from carefully constructed hypothesis-driven research, to unbiased data-driven approaches, sometimes called ‘-omics’ studies. These studies have attracted philosophical interest in recent years: see e.g. Burian (2007); O’Malley et al. (2010); Ratti (2015); for more general philosophical discussions of large-scale data-driven approaches in contemporary post-genomics biology, see e.g. Leonelli (2016); Richardson and Stevens (2015).

Recall that -omics studies fall into three main categories: ‘genomics’, ‘transcriptomics’, and ‘proteomics’. The salient features of these three categories are as follows (we make no claim that these features exhaust any of the three categories; they are, however, the features which are relevant to the present article). Genomics is the study of the complete set of genes (composed of DNA) inside a cell. Cellular processes lead to genetic information being transcribed (copied) into molecules known as RNA. ‘Messenger RNA’ (mRNA) carries information corresponding to the genetic sequence of a gene. Transcriptomics is the study of the complete set of RNA transcripts that are produced by the genome. Finally, the information encoded in mRNA is used by cellular machinery called ribosomes to construct proteins; proteomics is the systematic study of these proteins within a cell. Proteins are the ultimate workhorses of the cell; proteomics studies aim to characterise cellular functions mediated by protein networks, in which nodes represent proteins and edges represent physical/functional interactions between them. For further background on genomics, transcriptomics, and proteomics, see Hasin et al. (2017).

Large-scale -omics studies are often described as being ‘hypothesis-free’. To take one example from genomics: advances in genome-editing techniques mean that it is now possible to generate ‘loss-of-function’ mutants in the laboratory. Such mutations are inactivating in the sense that they lead to the loss of function of a gene within a cell. In the last few years, CRISPR-Cas9 technology has emerged, which makes it possible to create targeted loss-of-function mutants for any of the nearly 20,000 genes in the human genome (Doudna and Charpentier 2014). This allows researchers to ‘screen’ for a gene the loss of which leads to the phenotype of interest, thereby identifying the function of that gene. The methodological idea behind such screening approaches is that one does not require any background hypothesis as to which gene could be involved in a particular biological process, or associated with a particular phenotype: hence the widespread declaration that such approaches are ‘hypothesis-free’ (Shalem et al. 2015). As Burian writes, “Genomics, proteomics, and related “omics” disciplines represent a break with the ideal of hypothesis-driven science” (Burian 2007, p. 289).

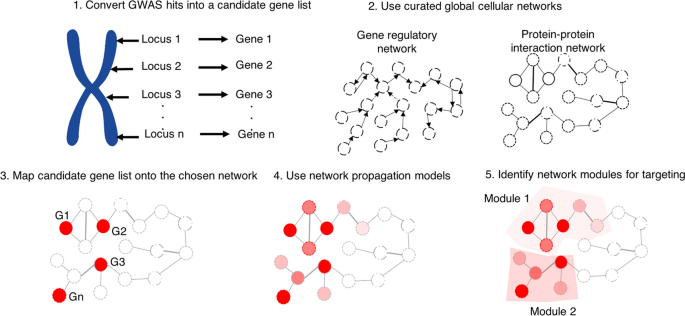

With Ratti (2015), Franklin (2005), and others, we find the terminology of ‘hypothesis-free’ to be misleading, for in fact such large-scale studies exhibit a Janus-faced dependence on mechanistic hypotheses of a quite standard sort. Ratti characterises such studies, and their connections with more orthodox mechanistic hypothesis-driven science, as involving three steps:

1. The generation of a preliminary set of hypotheses from an established set of premises;

2. The prioritization of some hypotheses and discarding of others by means of other premises and new evidence;

3. The search for more stringent evidence for prioritized hypotheses. (Ratti 2015, p. 201)

In step (1), scientific hypothesising plays a role, insofar as it is used to delimit the domain of inquiry of the study. For example, a loss-of-function screen to identify the receptor for a pathogen would hypothesise that there exists a non-redundant mechanism for the interaction of the pathogen with the cells, and that the loss of this cellular factor/mechanism would lead to diminution of interaction of the pathogen with the cell surface. For the purpose of the test, such hypotheses are regarded as indubitable: they delimit the range of acceptable empirical enquiry. But there is also a forward-looking dependence of these approaches on scientific hypothesising: the results of such studies can be used to generate more specific mechanistic hypotheses, certain of which are prioritised in step (2) (based on certain additional assumptions—e.g., that there is a single cellular factor/mechanism responsible for pathogen-cell interaction in the above example), and which can then be validated in downstream analysis in step (3). For example, identification of candidate viral receptors using genome-wide loss-of-function screens can be used to generate specific hypotheses regarding the identity of the associated receptor, which can then be subject to empirical test.
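The three steps can be pictured as a small pipeline. The sketch below is only a toy illustration, not Ratti's formalism or a real screening analysis: the gene names, the "residual binding" scores, the 0.5 threshold, and the follow-up assay are all hypothetical placeholders chosen to mirror the pathogen-receptor example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    gene: str
    claim: str


def generate_hypotheses(candidate_genes):
    """Step 1: a background premise (some non-redundant receptor exists)
    delimits the domain; every screened gene yields one candidate hypothesis."""
    return [Hypothesis(g, f"{g} encodes the pathogen receptor") for g in candidate_genes]


def prioritise(hypotheses, residual_binding, threshold=0.5):
    """Step 2: discard hypotheses whose knockout leaves binding at or above
    the threshold, and rank the rest with the strongest loss of binding first."""
    kept = [h for h in hypotheses if residual_binding[h.gene] < threshold]
    return sorted(kept, key=lambda h: residual_binding[h.gene])


def validate(hypothesis, followup_assay):
    """Step 3: seek more stringent evidence from an independent test."""
    return followup_assay(hypothesis.gene)


# Hypothetical screen readout: fraction of pathogen binding left after knockout.
screen = {"GENE_A": 0.95, "GENE_B": 0.10, "GENE_C": 0.40, "GENE_D": 1.00}


def toy_followup_assay(gene):
    # Stand-in for a downstream experiment; here only GENE_B is "confirmed".
    return gene == "GENE_B"


hypotheses = generate_hypotheses(screen)
ranked = prioritise(hypotheses, screen)
confirmed = [h.gene for h in ranked if validate(h, toy_followup_assay)]
```

The point of the sketch is structural: the premise built into step 1 is never itself tested by the screen, while the output of step 2 is exactly the set of specific hypotheses that step 3 subjects to empirical test.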