Statistics By Jim

Making statistics intuitive

Null Hypothesis: Definition, Rejecting & Examples

By Jim Frost

What is a Null Hypothesis?

The null hypothesis in statistics states that there is no difference between groups or no relationship between variables. It is one of two mutually exclusive hypotheses about a population in a hypothesis test.

- Null Hypothesis H 0 : No effect exists in the population.

- Alternative Hypothesis H A : The effect exists in the population.

In every study or experiment, researchers assess an effect or relationship. This effect can be the effectiveness of a new drug, building material, or other intervention that has benefits. There is a benefit or connection that the researchers hope to identify. Unfortunately, no effect may exist. In statistics, we call this lack of an effect the null hypothesis. Researchers assume that this notion of no effect is correct until they have enough evidence to suggest otherwise, similar to how a trial presumes innocence.

In this context, the analysts don’t necessarily believe the null hypothesis is correct. In fact, they typically want to reject it because that leads to more exciting finds about an effect or relationship. The new vaccine works!

You can think of it as the default theory that requires sufficiently strong evidence to reject. Like a prosecutor, researchers must collect sufficient evidence to overturn the presumption of no effect. Investigators must work hard to set up a study and a data collection system to obtain evidence that can reject the null hypothesis.

Related post : What is an Effect in Statistics?

Null Hypothesis Examples

Null hypotheses start as research questions that the investigator rephrases as a statement indicating there is no effect or relationship.

| Does the vaccine prevent infections? | The vaccine does not affect the infection rate. |

| Does the new additive increase product strength? | The additive does not affect mean product strength. |

| Does the exercise intervention increase bone mineral density? | The intervention does not affect bone mineral density. |

| As screen time increases, does test performance decrease? | There is no relationship between screen time and test performance. |

After reading these examples, you might think they’re a bit boring and pointless. However, the key is to remember that the null hypothesis defines the condition that the researchers need to discredit before suggesting an effect exists.

Let’s see how you reject the null hypothesis and get to those more exciting findings!

When to Reject the Null Hypothesis

So, you want to reject the null hypothesis, but how and when can you do that? To start, you’ll need to perform a statistical test on your data. The following is an overview of performing a study that uses a hypothesis test.

The first step is to devise a research question and the appropriate null hypothesis. After that, the investigators need to formulate an experimental design and data collection procedures that will allow them to gather data that can answer the research question. Then they collect the data. For more information about designing a scientific study that uses statistics, read my post 5 Steps for Conducting Studies with Statistics .

After data collection is complete, statistics and hypothesis testing enter the picture. Hypothesis testing takes your sample data and evaluates how consistent they are with the null hypothesis. The p-value is a crucial part of the statistical results because it quantifies how strongly the sample data contradict the null hypothesis.

When the sample data provide sufficient evidence, you can reject the null hypothesis. In a hypothesis test, this process involves comparing the p-value to your significance level .

Rejecting the Null Hypothesis

Reject the null hypothesis when the p-value is less than or equal to your significance level. Your sample data favor the alternative hypothesis, which suggests that the effect exists in the population. For a mnemonic device, remember—when the p-value is low, the null must go!

When you can reject the null hypothesis, your results are statistically significant. Learn more about Statistical Significance: Definition & Meaning .

Failing to Reject the Null Hypothesis

Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis. The sample data provide insufficient evidence to conclude that the effect exists in the population. When the p-value is high, the null must fly!

Note that failing to reject the null is not the same as proving it. For more information about the difference, read my post about Failing to Reject the Null .
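The decision rule from the last two sections can be sketched in a few lines of Python. This is only an illustration: the function name `decide` and the default significance level of 0.05 are assumptions chosen for the example, not part of any statistics library.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision for a p-value at significance level alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    # Reject when the p-value is less than or equal to the significance level.
    if p_value <= alpha:
        return "reject the null hypothesis"
    # Otherwise the evidence is insufficient; this is not proof of the null.
    return "fail to reject the null hypothesis"


print(decide(0.03))  # p-value below alpha
print(decide(0.20))  # p-value above alpha
```

Note that the function never returns "accept the null hypothesis". The only two outcomes are rejecting and failing to reject.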

That’s a very general look at the process. But I hope you can see how the path to more exciting findings depends on being able to rule out the less exciting null hypothesis that states there’s nothing to see here!

Let’s move on to learning how to write the null hypothesis for different types of effects, relationships, and tests.

Related posts : How Hypothesis Tests Work and Interpreting P-values

How to Write a Null Hypothesis

The null hypothesis varies by the type of statistic and hypothesis test. Remember that inferential statistics use samples to draw conclusions about populations. Consequently, when you write a null hypothesis, it must make a claim about the relevant population parameter . Further, that claim usually indicates that the effect does not exist in the population. Below are typical examples of writing a null hypothesis for various parameters and hypothesis tests.

Related posts : Descriptive vs. Inferential Statistics and Populations, Parameters, and Samples in Inferential Statistics

Group Means

T-tests and ANOVA assess the differences between group means. For these tests, the null hypothesis states that there is no difference between group means in the population. In other words, the experimental conditions that define the groups do not affect the mean outcome. Mu (µ) is the population parameter for the mean, and you’ll need to include it in the statement for this type of study.

For example, an experiment compares the mean bone density changes for a new osteoporosis medication. The control group does not receive the medicine, while the treatment group does. The null states that the mean bone density changes for the control and treatment groups are equal.

- Null Hypothesis H 0 : Group means are equal in the population: µ 1 = µ 2 , or µ 1 – µ 2 = 0

- Alternative Hypothesis H A : Group means are not equal in the population: µ 1 ≠ µ 2 , or µ 1 – µ 2 ≠ 0.
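To make the group-means hypotheses concrete, here is a rough Python sketch that tests H0: µ1 = µ2 against the two-sided alternative. A t-test needs the t-distribution, which the Python standard library does not provide, so this sketch swaps in a permutation test of the same null hypothesis. The function name, the number of permutations, and the bone density numbers are all made up for illustration.

```python
import random
from statistics import mean


def permutation_test_means(group1, group2, n_permutations=10_000, seed=0):
    """Two-sided permutation test of H0: mu1 = mu2.

    Returns (observed difference in means, approximate p-value).
    """
    rng = random.Random(seed)
    observed = mean(group1) - mean(group2)
    pooled = list(group1) + list(group2)
    n1 = len(group1)
    extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are interchangeable, so reshuffle them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n1]) - mean(pooled[n1:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical bone density changes (invented numbers, not real data).
control = [0.1, -0.2, 0.0, 0.3, -0.1, 0.2, 0.0, -0.3]
treatment = [0.9, 1.1, 0.7, 1.3, 0.8, 1.0, 1.2, 0.6]

diff, p = permutation_test_means(treatment, control)
print(f"difference in means = {diff:.3f}, p-value = {p:.4f}")
```

The p-value here is the share of label reshuffles that produce a difference at least as large as the observed one. If that share is at or below your significance level, you reject the null hypothesis that the group means are equal.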

Group Proportions

Proportions tests assess the differences between group proportions. For these tests, the null hypothesis states that there is no difference between group proportions. Again, the experimental conditions did not affect the proportion of events in the groups. P is the population proportion parameter that you’ll need to include.

For example, a vaccine experiment compares the infection rate in the treatment group to the control group. The treatment group receives the vaccine, while the control group does not. The null states that the infection rates for the control and treatment groups are equal.

- Null Hypothesis H 0 : Group proportions are equal in the population: p 1 = p 2 .

- Alternative Hypothesis H A : Group proportions are not equal in the population: p 1 ≠ p 2 .
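The proportions hypotheses can be sketched the same way. The example below is a minimal two-proportion z-test using the pooled proportion and a normal approximation, which assumes reasonably large samples. The function name and the infection counts are hypothetical and chosen only to illustrate the calculation.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(events1, n1, events2, n2):
    """Two-sided z-test of H0: p1 = p2 using the pooled proportion.

    Returns (z statistic, p-value). Relies on a normal approximation.
    """
    p1_hat = events1 / n1
    p2_hat = events2 / n2
    # Under H0 both groups share one proportion, so pool the counts.
    pooled = (events1 + events2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("standard error is zero; the test is undefined")
    z = (p1_hat - p2_hat) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 30 infections among 1000 vaccinated participants,
# 60 infections among 1000 controls (invented numbers, not real data).
z, p = two_proportion_z_test(30, 1000, 60, 1000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A negative z here means the first group (the vaccinated participants) had the lower sample infection rate. The p-value then tells you whether that difference is large enough to reject p1 = p2.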

Correlation and Regression Coefficients

Some studies assess the relationship between two continuous variables rather than differences between groups.

In these studies, analysts often use either correlation or regression analysis . For these tests, the null states that there is no relationship between the variables. Specifically, it says that the correlation or regression coefficient is zero. As one variable increases, there is no tendency for the other variable to increase or decrease. Rho (ρ) is the population correlation parameter and beta (β) is the regression coefficient parameter.

For example, a study assesses the relationship between screen time and test performance. The null states that there is no correlation between this pair of variables. As screen time increases, test performance does not tend to increase or decrease.

- Null Hypothesis H 0 : The correlation in the population is zero: ρ = 0.

- Alternative Hypothesis H A : The correlation in the population is not zero: ρ ≠ 0.
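Here is a rough Python sketch of testing H0: ρ = 0. It computes the sample Pearson correlation and then uses the Fisher z-transformation with a normal approximation to get a two-sided p-value. That approximation is my choice for a standard-library-only example, and statistical software typically uses a t-distribution instead. The function names and the screen time data are hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Sample Pearson correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def correlation_test(x, y):
    """Approximate two-sided test of H0: rho = 0 via the Fisher z-transform.

    Returns (r, p-value). Needs at least 4 paired observations.
    """
    n = len(x)
    if n != len(y) or n < 4:
        raise ValueError("need at least 4 paired observations")
    r = pearson_r(x, y)
    if abs(r) >= 1.0:
        # A perfect correlation makes atanh undefined; report p = 0.
        return r, 0.0
    z = atanh(r) * sqrt(n - 3)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return r, p_value


# Hypothetical data: daily screen time in hours and test scores.
screen_time = [1, 2, 3, 4, 5, 6, 7, 8]
test_score = [88, 85, 86, 80, 78, 75, 74, 70]

r, p = correlation_test(screen_time, test_score)
print(f"r = {r:.3f}, p-value = {p:.6f}")
```

With such a small made-up sample the approximation is crude, so treat the output as a demonstration of the logic rather than a result you would report.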

For all these cases, the analysts define the hypotheses before the study. After collecting the data, they perform a hypothesis test to determine whether they can reject the null hypothesis.

The preceding examples are all for two-tailed hypothesis tests. To learn about one-tailed tests and how to write a null hypothesis for them, read my post One-Tailed vs. Two-Tailed Tests .

Related post : Understanding Correlation

Reader Interactions

January 11, 2024 at 2:57 pm

Thanks for the reply.

January 10, 2024 at 1:23 pm

Hi Jim, In your comment you state that equivalence test null and alternate hypotheses are reversed. For hypothesis tests of data fits to a probability distribution, the null hypothesis is that the probability distribution fits the data. Is this correct?

January 10, 2024 at 2:15 pm

Those are two separate things, equivalence testing and normality tests. But, yes, you’re correct for both.

Hypotheses are switched for equivalence testing. You need to “work” (i.e., collect a large sample of good quality data) to be able to reject the null that the groups are different to be able to conclude they’re the same.

With typical hypothesis tests, if you have low quality data and a low sample size, you’ll fail to reject the null that they’re the same, concluding they’re equivalent. But that’s more a statement about the low quality and small sample size than anything to do with the groups being equal.

So, equivalence testing makes you work to obtain a finding that the groups are the same (at least within some amount you define as a trivial difference).

For normality testing, and other distribution tests, the null states that the data follow the distribution (normal or whatever). If you reject the null, you have sufficient evidence to conclude that your sample data don’t follow the probability distribution. That’s a rare case where you hope to fail to reject the null. And it suffers from the problem I describe above where you might fail to reject the null simply because you have a small sample size. In that case, you’d conclude the data follow the probability distribution but it’s more that you don’t have enough data for the test to register the deviation. In this scenario, if you had a larger sample size, you’d reject the null and conclude it doesn’t follow that distribution.

I don’t know of any equivalence testing type approach for distribution fit tests where you’d need to work to show the data follow a distribution, although I haven’t looked for one either!

February 20, 2022 at 9:26 pm

Is a null hypothesis regularly (always) stated in the negative? “there is no” or “does not”

February 23, 2022 at 9:21 pm

Typically, the null hypothesis includes an equal sign. The null hypothesis states that the population parameter equals a particular value. That value is usually one that represents no effect. In the case of a one-sided hypothesis test, the null still contains an equal sign but it’s “greater than or equal to” or “less than or equal to.” If you wanted to translate the null hypothesis from its native mathematical expression, you could use the expression “there is no effect.” But the mathematical form more specifically states what it’s testing.

It’s the alternative hypothesis that typically contains does not equal.

There are some exceptions. For example, in an equivalence test where the researchers want to show that two things are equal, the null hypothesis states that they’re not equal.

In short, the null hypothesis states the condition that the researchers hope to reject. They need to work hard to set up an experiment and data collection that’ll gather enough evidence to be able to reject the null condition.

February 15, 2022 at 9:32 am

Dear sir I always read your notes on Research methods.. Kindly tell is there any available Book on all these..wonderfull Urgent

Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney . Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test :

- Null hypothesis ( H 0 ): There’s no effect in the population .

- Alternative hypothesis ( H a or H 1 ) : There’s an effect in the population.

The null and alternative hypotheses offer competing answers to your research question . When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis ( H 0 ) answers “No, there’s no effect in the population.”

- The alternative hypothesis ( H a ) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample . Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses .

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.

The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population ( p ≤ α), then we can reject the null hypothesis . Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept . Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error . When you incorrectly fail to reject it, it’s a type II error.
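A small simulation can make the two error types tangible. The sketch below repeatedly draws samples and runs a simple one-sample z-test with a known standard deviation of 1. That simplified setup, along with the function names, sample size, and effect size, is an assumption made for illustration. When the null is true, the share of rejections estimates the type I error rate. When the null is false, the share of non-rejections estimates the type II error rate.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def z_test_p_value(sample, null_mean=0.0, sigma=1.0):
    """Two-sided p-value for H0: population mean equals null_mean."""
    z = (mean(sample) - null_mean) / (sigma / sqrt(len(sample)))
    return 2 * (1 - NormalDist().cdf(abs(z)))


def rejection_rate(true_mean, n=20, alpha=0.05, n_sims=4000, seed=1):
    """Share of simulated studies that reject H0: mean = 0."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if z_test_p_value(sample) <= alpha:
            rejections += 1
    return rejections / n_sims


# Null is true (no effect): every rejection is a type I error.
type_1_rate = rejection_rate(true_mean=0.0)

# Null is false (true mean is 0.5): every non-rejection is a type II error.
type_2_rate = 1 - rejection_rate(true_mean=0.5)

print(f"estimated type I error rate:  {type_1_rate:.3f}")
print(f"estimated type II error rate: {type_2_rate:.3f}")
```

The type I rate should land close to the significance level of 0.05, because that is what α controls. The type II rate depends on the effect size and sample size, so it changes if you alter `true_mean` or `n`.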

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

| Research question | Null hypothesis (H0) | Test-specific null hypothesis |
|---|---|---|
| Does tooth flossing affect the number of cavities? | Tooth flossing has no effect on the number of cavities. | t test: The mean number of cavities per person does not differ between the flossing group (µ1) and the non-flossing group (µ2) in the population; µ1 = µ2. |
| Does the amount of text highlighted in the textbook affect exam scores? | The amount of text highlighted in the textbook has no effect on exam scores. | Regression: There is no relationship between the amount of text highlighted and exam scores in the population; β = 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression.* | Proportions test: The proportion of people with depression in the daily-meditation group (p1) is greater than or equal to the no-meditation group (p2) in the population; p1 ≥ p2. |

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p 1 = p 2 .

The alternative hypothesis ( H a ) is the other answer to your research question . It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect,” “a difference,” or “a relationship.” When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes < or >). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

| Research question | Alternative hypothesis (Ha) | Test-specific alternative hypothesis |
|---|---|---|
| Does tooth flossing affect the number of cavities? | Tooth flossing has an effect on the number of cavities. | t test: The mean number of cavities per person differs between the flossing group (µ1) and the non-flossing group (µ2) in the population; µ1 ≠ µ2. |
| Does the amount of text highlighted in a textbook affect exam scores? | The amount of text highlighted in the textbook has an effect on exam scores. | Regression: There is a relationship between the amount of text highlighted and exam scores in the population; β ≠ 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation decreases the incidence of depression. | Proportions test: The proportion of people with depression in the daily-meditation group (p1) is less than the no-meditation group (p2) in the population; p1 < p2. |

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question.

- They both make claims about the population.

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

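| | Null hypothesis (H0) | Alternative hypothesis (Ha) |
|---|---|---|
| Definition | A claim that there is no effect in the population. | A claim that there is an effect in the population. |
| Symbols used | Equality symbol (=, ≥, or ≤) | Inequality symbol (≠, <, or >) |
| When p ≤ α | Rejected | Supported |
| When p > α | Failed to reject | Not supported |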

To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

General template sentences

The only thing you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable ?

- Null hypothesis ( H 0 ): Independent variable does not affect dependent variable.

- Alternative hypothesis ( H a ): Independent variable affects dependent variable.
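If you write many hypotheses, the general templates are easy to fill in with a small helper. The sketch below is purely illustrative: the function name `general_hypotheses` and the dictionary keys are made up for this example.

```python
def general_hypotheses(independent: str, dependent: str) -> dict:
    """Fill the general template sentences with two variable names."""
    iv = independent.strip()
    dv = dependent.strip()
    if not iv or not dv:
        raise ValueError("both variable names must be non-empty")
    # Capitalize only the first letter so the rest of the name is untouched.
    iv_cap = iv[0].upper() + iv[1:]
    return {
        "research_question": f"Does {iv} affect {dv}?",
        "null": f"H0: {iv_cap} does not affect {dv}.",
        "alternative": f"Ha: {iv_cap} affects {dv}.",
    }


hypotheses = general_hypotheses("tooth flossing", "the number of cavities")
for sentence in hypotheses.values():
    print(sentence)
```

The output is only a starting point. You may still need to adjust the grammar, for example when the independent variable is plural.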

Test-specific template sentences

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

| Statistical test | Null hypothesis (H0) | Alternative hypothesis (Ha) |
|---|---|---|
| t test with two groups | The mean dependent variable does not differ between group 1 (µ1) and group 2 (µ2) in the population; µ1 = µ2. | The mean dependent variable differs between group 1 (µ1) and group 2 (µ2) in the population; µ1 ≠ µ2. |
| ANOVA with three groups | The mean dependent variable does not differ between group 1 (µ1), group 2 (µ2), and group 3 (µ3) in the population; µ1 = µ2 = µ3. | The mean dependent variable of group 1 (µ1), group 2 (µ2), and group 3 (µ3) are not all equal in the population. |
| Correlation | There is no correlation between independent variable and dependent variable in the population; ρ = 0. | There is a correlation between independent variable and dependent variable in the population; ρ ≠ 0. |
| Regression | There is no relationship between independent variable and dependent variable in the population; β = 0. | There is a relationship between independent variable and dependent variable in the population; β ≠ 0. |
| Two-proportions test | The dependent variable expressed as a proportion does not differ between group 1 (p1) and group 2 (p2) in the population; p1 = p2. | The dependent variable expressed as a proportion differs between group 1 (p1) and group 2 (p2) in the population; p1 ≠ p2. |

Note: The template sentences above assume that you’re performing two-tailed tests, which is why the alternative hypotheses use ≠ rather than < or >. Two-tailed tests are appropriate for most studies.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Null and alternative hypotheses are used in statistical hypothesis testing . The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The null hypothesis is often abbreviated as H 0 . When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as H a or H 1 . When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“ x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses . In a well-designed study , the statistical hypotheses correspond logically to the research hypothesis.

Turney, S. (2023, June 22). Null & Alternative Hypotheses | Definitions, Templates & Examples. Scribbr. Retrieved August 12, 2024, from https://www.scribbr.com/statistics/null-and-alternative-hypotheses/

What is The Null Hypothesis & When Do You Reject The Null Hypothesis

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A null hypothesis is a statistical concept suggesting no significant difference or relationship between measured variables. It’s the default assumption unless empirical evidence suggests otherwise.

The null hypothesis states no relationship exists between the two variables being studied (i.e., one variable does not affect the other).

The null hypothesis is the statement that a researcher or an investigator wants to disprove.

Testing the null hypothesis can tell you whether your results are due to the effects of manipulating the independent variable or due to random chance.

How to Write a Null Hypothesis

Null hypotheses (H0) start as research questions that the investigator rephrases as statements indicating no effect or relationship between the independent and dependent variables.

It is the default position that your research aims to challenge.

For example, if studying the impact of exercise on weight loss, your null hypothesis might be:

There is no significant difference in weight loss between individuals who exercise daily and those who do not.

Examples of Null Hypotheses

| Research Question | Null Hypothesis |

|---|---|

| Do teenagers use cell phones more than adults? | Teenagers and adults use cell phones the same amount. |

| Do tomato plants exhibit a higher rate of growth when planted in compost rather than in soil? | Tomato plants show no difference in growth rates when planted in compost rather than soil. |

| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression. |

| Does daily exercise increase test performance? | There is no relationship between daily exercise time and test performance. |

| Does the new vaccine prevent infections? | The vaccine does not affect the infection rate. |

| Does flossing your teeth affect the number of cavities? | Flossing your teeth has no effect on the number of cavities. |

When Do We Reject The Null Hypothesis?

We reject the null hypothesis when the data provide strong enough evidence to conclude that it is likely incorrect. This occurs when the p-value (the probability of observing data at least as extreme as yours, assuming the null hypothesis is true) is less than or equal to a predetermined significance level.

If the collected data are sufficiently inconsistent with what the null hypothesis predicts, a researcher can conclude that the data provide enough evidence against the null hypothesis, and thus the null hypothesis is rejected.

Rejecting the null hypothesis means the data provide evidence that a relationship exists between a set of variables and that the effect is statistically significant (p ≤ 0.05 when the significance level is 0.05).

If the data collected from the random sample are not statistically significant, the researchers fail to reject the null hypothesis. They cannot conclude that a relationship exists, but this does not prove that there is no relationship between the variables.

You need to perform a statistical test on your data in order to evaluate how consistent it is with the null hypothesis. A p-value is one statistical measurement used to validate a hypothesis against observed data.

Calculating the p-value is a critical part of null-hypothesis significance testing because it quantifies how strongly the sample data contradicts the null hypothesis.

The level of statistical significance is often expressed as a p -value between 0 and 1. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

Usually, a researcher uses a significance level of 0.05 or 0.01 (corresponding to a confidence level of 95% or 99%) as a general guideline to decide whether to reject the null hypothesis.

When your p-value is less than or equal to your significance level, you reject the null hypothesis.

In other words, smaller p-values are taken as stronger evidence against the null hypothesis. Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis.

In this case, the sample data provide insufficient evidence to conclude that the effect exists in the population.

Because you can never know with complete certainty whether there is an effect in the population, your inferences about a population will sometimes be incorrect.

When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s called a type II error.

Why Do We Never Accept The Null Hypothesis?

The reason we do not say “accept the null” is because we are always assuming the null hypothesis is true and then conducting a study to see if there is evidence against it. And, even if we don’t find evidence against it, a null hypothesis is not accepted.

A lack of evidence only means that you haven’t proven that something exists. It does not prove that something doesn’t exist.

It is risky to conclude that the null hypothesis is true merely because we did not find evidence to reject it. It is always possible that researchers elsewhere have disproved the null hypothesis, so we cannot accept it as true, but instead, we state that we failed to reject the null.

One can either reject the null hypothesis, or fail to reject it, but can never accept it.

Why Do We Use The Null Hypothesis?

We can never prove with 100% certainty that a hypothesis is true; we can only collect evidence that supports a theory. However, testing a hypothesis can set the stage for rejecting or failing to reject this hypothesis at a chosen significance level.

The null hypothesis is useful because it can tell us whether the results of our study are due to random chance or the manipulation of a variable (with a certain level of confidence).

A null hypothesis is rejected if the observed data would be very unlikely to occur were the null hypothesis true. If the observed outcome is consistent with the null hypothesis, we fail to reject it.

Rejecting the null hypothesis sets the stage for further experimentation to see if a relationship between two variables exists.

Hypothesis testing is a critical part of the scientific method as it helps decide whether the results of a research study support a particular theory about a given population. Hypothesis testing is a systematic way of backing up researchers’ predictions with statistical analysis.

It helps provide sufficient statistical evidence that either favors or rejects a certain hypothesis about the population parameter.

Purpose of a Null Hypothesis

- The primary purpose of the null hypothesis is to disprove an assumption.

- Whether it is rejected or not, the null hypothesis can help further progress a theory in many scientific cases.

- A null hypothesis can be used to ascertain how consistent the outcomes of multiple studies are.

Do you always need both a Null Hypothesis and an Alternative Hypothesis?

The null (H0) and alternative (Ha or H1) hypotheses are two competing claims that describe the effect of the independent variable on the dependent variable. They are mutually exclusive, which means that only one of the two hypotheses can be true.

While the null hypothesis states that there is no effect in the population, the alternative hypothesis states that there is an effect or a relationship between the variables.

The goal of hypothesis testing is to make inferences about a population based on a sample. In order to undertake hypothesis testing, you must express your research hypothesis as a null and alternative hypothesis. Both hypotheses are required to cover every possible outcome of the study.

What is the difference between a null hypothesis and an alternative hypothesis?

The alternative hypothesis is the complement to the null hypothesis. The null hypothesis states that there is no effect or no relationship between variables, while the alternative hypothesis claims that there is an effect or relationship in the population.

The alternative hypothesis is the claim that you expect or hope will be true. The null hypothesis and the alternative hypothesis are always mutually exclusive, meaning that only one can be true at a time.

What are some problems with the null hypothesis?

One major problem with the null hypothesis is that researchers typically assume that failing to reject the null is a failure of the experiment. However, either outcome of a hypothesis test is informative. Even if the null is not refuted, the researchers will still learn something new.

Why can a null hypothesis not be accepted?

We can either reject or fail to reject a null hypothesis, but never accept it. If your test fails to detect an effect, this is not proof that the effect doesn’t exist. It just means that your sample did not have enough evidence to conclude that it exists.

We can’t accept a null hypothesis because a lack of evidence does not prove that something does not exist. Instead, we fail to reject it.

Failing to reject the null indicates that the sample did not provide sufficient evidence to conclude that an effect exists.

If the p-value is greater than the significance level, then you fail to reject the null hypothesis.
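The decision rule above can be sketched in a few lines of Python. The function name `decide` and the default significance level of .05 are illustrative assumptions, not something the text prescribes.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision for a p-value at significance level alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    # Reject only when p is at or below alpha. Otherwise the sample is
    # inconclusive, which is not the same as showing the null is true.
    if p_value <= alpha:
        return "reject H0"
    return "fail to reject H0"


print(decide(0.013))
print(decide(0.148))
```

Note that the function never returns "accept H0": the only two outcomes are rejecting the null or failing to reject it.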

Is a null hypothesis directional or non-directional?

A hypothesis test can contain either a directional alternative hypothesis or a non-directional alternative hypothesis. A directional hypothesis is one that contains the less than (“<”) or greater than (“>”) sign.

A nondirectional hypothesis contains the not equal sign (“≠”). However, a null hypothesis is neither directional nor non-directional.

A null hypothesis is a prediction that there will be no change, relationship, or difference between two variables.

The directional hypothesis or nondirectional hypothesis would then be considered alternative hypotheses to the null hypothesis.


9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses . They are called the null hypothesis and the alternative hypothesis . These hypotheses contain opposing viewpoints.

H 0 , the null hypothesis: a statement of no difference between sample means or proportions or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

H a , the alternative hypothesis: a claim about the population that is contradictory to H 0 and what we conclude when we reject H 0 .

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options: reject H 0 if the sample information favors the alternative hypothesis, or do not reject H 0 (decline to reject H 0 ) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H 0 and H a :

| H 0 | H a |
|---|---|
| equal (=) | not equal (≠), greater than (>), or less than (<) |
| greater than or equal to (≥) | less than (<) |
| less than or equal to (≤) | more than (>) |

H 0 always has a symbol with an equal in it. H a never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.

Example 9.1

H 0 : No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

H a : More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following: H 0 : μ = 2.0 H a : μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 66

- H a : μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on the average. The null and alternative hypotheses are the following: H 0 : μ ≥ 5 H a : μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol ( =, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 45

- H a : μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses. H 0 : p ≤ 0.066 H a : p > 0.066

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : p __ 0.40

- H a : p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Apr 16, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


10.1 - Setting the Hypotheses: Examples

A significance test examines whether the null hypothesis provides a plausible explanation of the data. The null hypothesis itself does not involve the data. It is a statement about a parameter (a numerical characteristic of the population). These population values might be proportions or means or differences between means or proportions or correlations or odds ratios or any other numerical summary of the population. The alternative hypothesis is typically the research hypothesis of interest. Here are some examples.

Example 10.2: Hypotheses with One Sample of One Categorical Variable

About 10% of the human population is left-handed. Suppose a researcher at Penn State speculates that students in the College of Arts and Architecture are more likely to be left-handed than people found in the general population. We only have one sample since we will be comparing a population proportion based on a sample value to a known population value.

- Research Question : Are artists more likely to be left-handed than people found in the general population?

- Response Variable : Classification of the student as either right-handed or left-handed

State Null and Alternative Hypotheses

- Null Hypothesis : Students in the College of Arts and Architecture are no more likely to be left-handed than people in the general population (population percent of left-handed students in the College of Arts and Architecture = 10% or p = .10).

- Alternative Hypothesis : Students in the College of Arts and Architecture are more likely to be left-handed than people in the general population (population percent of left-handed students in the College of Arts and Architecture > 10% or p > .10). This is a one-sided alternative hypothesis.

Example 10.3: Hypotheses with One Sample of One Measurement Variable

A generic brand of the anti-histamine Diphenhydramine markets a capsule with a 50 milligram dose. The manufacturer is worried that the machine that fills the capsules has come out of calibration and is no longer creating capsules with the appropriate dosage.

- Research Question : Does the data suggest that the population mean dosage of this brand is different than 50 mg?

- Response Variable : dosage of the active ingredient found by a chemical assay.

- Null Hypothesis : On the average, the dosage sold under this brand is 50 mg (population mean dosage = 50 mg).

- Alternative Hypothesis : On the average, the dosage sold under this brand is not 50 mg (population mean dosage ≠ 50 mg). This is a two-sided alternative hypothesis.

Example 10.4: Hypotheses with Two Samples of One Categorical Variable

Many people are starting to prefer vegetarian meals on a regular basis. Specifically, a researcher believes that females are more likely than males to eat vegetarian meals on a regular basis.

- Research Question : Does the data suggest that females are more likely than males to eat vegetarian meals on a regular basis?

- Response Variable : Classification of whether or not a person eats vegetarian meals on a regular basis

- Explanatory (Grouping) Variable: Sex

- Null Hypothesis : There is no sex effect regarding those who eat vegetarian meals on a regular basis (population percent of females who eat vegetarian meals on a regular basis = population percent of males who eat vegetarian meals on a regular basis or p females = p males ).

- Alternative Hypothesis : Females are more likely than males to eat vegetarian meals on a regular basis (population percent of females who eat vegetarian meals on a regular basis > population percent of males who eat vegetarian meals on a regular basis or p females > p males ). This is a one-sided alternative hypothesis.

Example 10.5: Hypotheses with Two Samples of One Measurement Variable

Obesity is a major health problem today. Research is starting to show that people may be able to lose more weight on a low carbohydrate diet than on a low fat diet.

- Research Question : Does the data suggest that, on the average, people are able to lose more weight on a low carbohydrate diet than on a low fat diet?

- Response Variable : Weight loss (pounds)

- Explanatory (Grouping) Variable : Type of diet

- Null Hypothesis : There is no difference in the mean amount of weight loss when comparing a low carbohydrate diet with a low fat diet (population mean weight loss on a low carbohydrate diet = population mean weight loss on a low fat diet).

- Alternative Hypothesis : The mean weight loss should be greater for those on a low carbohydrate diet when compared with those on a low fat diet (population mean weight loss on a low carbohydrate diet > population mean weight loss on a low fat diet). This is a one-sided alternative hypothesis.

Example 10.6: Hypotheses about the relationship between Two Categorical Variables

- Research Question : Do the odds of having a stroke increase if you inhale second hand smoke ? A case-control study of non-smoking stroke patients and controls of the same age and occupation are asked if someone in their household smokes.

- Variables : There are two different categorical variables (Stroke patient vs control and whether the subject lives in the same household as a smoker). Living with a smoker (or not) is the natural explanatory variable and having a stroke (or not) is the natural response variable in this situation.

- Null Hypothesis : There is no relationship between whether or not a person has a stroke and whether or not a person lives with a smoker (odds ratio between stroke and second-hand smoke situation is = 1).

- Alternative Hypothesis : There is a relationship between whether or not a person has a stroke and whether or not a person lives with a smoker (odds ratio between stroke and second-hand smoke situation is > 1). This is a one-tailed alternative.

This research question might also be addressed like Example 10.4 by making the hypotheses about comparing the proportion of stroke patients that live with smokers to the proportion of controls that live with smokers.

Example 10.7: Hypotheses about the relationship between Two Measurement Variables

- Research Question : A financial analyst believes there might be a positive association between the change in a stock's price and the amount of the stock purchased by non-management employees the previous day (stock trading by management being under "insider-trading" regulatory restrictions).

- Variables : Daily price change information (the response variable) and previous day stock purchases by non-management employees (explanatory variable). These are two different measurement variables.

- Null Hypothesis : The correlation between the daily stock price change (\$) and the daily stock purchases by non-management employees (\$) = 0.

- Alternative Hypothesis : The correlation between the daily stock price change (\$) and the daily stock purchases by non-management employees (\$) > 0. This is a one-sided alternative hypothesis.

Example 10.8: Hypotheses about comparing the relationship between Two Measurement Variables in Two Samples

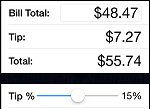

- Research Question : Is there a linear relationship between the amount of the bill (\$) at a restaurant and the tip (\$) that was left? Is the strength of this association different for family restaurants than for fine dining restaurants?

- Variables : There are two different measurement variables. The size of the tip would depend on the size of the bill so the amount of the bill would be the explanatory variable and the size of the tip would be the response variable.

- Null Hypothesis : The correlation between the amount of the bill (\$) at a restaurant and the tip (\$) that was left is the same at family restaurants as it is at fine dining restaurants.

- Alternative Hypothesis : The correlation between the amount of the bill (\$) at a restaurant and the tip (\$) that was left is different at family restaurants than it is at fine dining restaurants. This is a two-sided alternative hypothesis.


Chapter 13: Inferential Statistics

Some Basic Null Hypothesis Tests

Learning Objectives

- Conduct and interpret one-sample, dependent-samples, and independent-samples t tests.

- Interpret the results of one-way, repeated measures, and factorial ANOVAs.

- Conduct and interpret null hypothesis tests of Pearson’s r .

In this section, we look at several common null hypothesis testing procedures. The emphasis here is on providing enough information to allow you to conduct and interpret the most basic versions. In most cases, the online statistical analysis tools mentioned in Chapter 12 will handle the computations—as will programs such as Microsoft Excel and SPSS.

The t Test

As we have seen throughout this book, many studies in psychology focus on the difference between two means. The most common null hypothesis test for this type of statistical relationship is the t test . In this section, we look at three types of t tests that are used for slightly different research designs: the one-sample t test, the dependent-samples t test, and the independent-samples t test.

One-Sample t Test

The one-sample t test is used to compare a sample mean ( M ) with a hypothetical population mean (μ0) that provides some interesting standard of comparison. The null hypothesis is that the mean for the population (µ) is equal to the hypothetical population mean: μ = μ0. The alternative hypothesis is that the mean for the population is different from the hypothetical population mean: μ ≠ μ0. To decide between these two hypotheses, we need to find the probability of obtaining the sample mean (or one more extreme) if the null hypothesis were true. But finding this p value requires first computing a test statistic called t . (A test statistic is a statistic that is computed only to help find the p value.) The formula for t is as follows:

\[t=\dfrac{M-\mu_0}{\left(\dfrac{SD}{\sqrt{N}}\right)}\]

Again, M is the sample mean and µ 0 is the hypothetical population mean of interest. SD is the sample standard deviation and N is the sample size.

The reason the t statistic (or any test statistic) is useful is that we know how it is distributed when the null hypothesis is true. As shown in Figure 13.1, this distribution is unimodal and symmetrical, and it has a mean of 0. Its precise shape depends on a statistical concept called the degrees of freedom, which for a one-sample t test is N − 1. (There are 24 degrees of freedom for the distribution shown in Figure 13.1.) The important point is that knowing this distribution makes it possible to find the p value for any t score. Consider, for example, a t score of +1.50 based on a sample of 25. The probability of a t score at least this extreme is given by the proportion of t scores in the distribution that are at least this extreme. For now, let us define extreme as being far from zero in either direction. Thus the p value is the proportion of t scores that are +1.50 or above or that are −1.50 or below—a value that turns out to be .14.
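As a rough check on the two-tailed p value described above, the area in the tails of the t distribution can be approximated numerically. This is a minimal sketch using only Python's standard library; the function names `t_pdf` and `two_tailed_p` and the use of Simpson's rule are illustrative choices, not the method used by the statistical tools the text mentions.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Proportion of t scores at least as far from zero as t, in either direction."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_zero_to_t = total * h / 3  # area under the curve between 0 and |t|
    # The distribution is symmetric, so the central area is twice this value
    # and the two tails together hold whatever remains.
    return 1.0 - 2.0 * area_zero_to_t


# t score of +1.50 from a sample of 25, so 24 degrees of freedom
p = two_tailed_p(1.50, df=24)
print(round(p, 3))
```

Because the distribution is symmetric around 0, a t score of −1.50 gives the same two-tailed p value as +1.50.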

Fortunately, we do not have to deal directly with the distribution of t scores. If we were to enter our sample data and hypothetical mean of interest into one of the online statistical tools in Chapter 12 or into a program like SPSS (Excel does not have a one-sample t test function), the output would include both the t score and the p value. At this point, the rest of the procedure is simple. If p is less than .05, we reject the null hypothesis and conclude that the population mean differs from the hypothetical mean of interest. If p is greater than .05, we retain the null hypothesis and conclude that there is not enough evidence to say that the population mean differs from the hypothetical mean of interest. (Again, technically, we conclude only that we do not have enough evidence to conclude that it does differ.)

If we were to compute the t score by hand, we could use a table like Table 13.2 to make the decision. This table does not provide actual p values. Instead, it provides the critical values of t for different degrees of freedom ( df) when α is .05. For now, let us focus on the two-tailed critical values in the last column of the table. Each of these values should be interpreted as a pair of values: one positive and one negative. For example, the two-tailed critical values when there are 24 degrees of freedom are +2.064 and −2.064. These are represented by the red vertical lines in Figure 13.1. The idea is that any t score below the lower critical value (the left-hand red line in Figure 13.1) is in the lowest 2.5% of the distribution, while any t score above the upper critical value (the right-hand red line) is in the highest 2.5% of the distribution. Therefore any t score beyond the critical value in either direction is in the most extreme 5% of t scores when the null hypothesis is true and has a p value less than .05. Thus if the t score we compute is beyond the critical value in either direction, then we reject the null hypothesis. If the t score we compute is between the upper and lower critical values, then we retain the null hypothesis.

| df | One-tailed critical value | Two-tailed critical value |

|---|---|---|

| 3 | 2.353 | 3.182 |

| 4 | 2.132 | 2.776 |

| 5 | 2.015 | 2.571 |

| 6 | 1.943 | 2.447 |

| 7 | 1.895 | 2.365 |

| 8 | 1.860 | 2.306 |

| 9 | 1.833 | 2.262 |

| 10 | 1.812 | 2.228 |

| 11 | 1.796 | 2.201 |

| 12 | 1.782 | 2.179 |

| 13 | 1.771 | 2.160 |

| 14 | 1.761 | 2.145 |

| 15 | 1.753 | 2.131 |

| 16 | 1.746 | 2.120 |

| 17 | 1.740 | 2.110 |

| 18 | 1.734 | 2.101 |

| 19 | 1.729 | 2.093 |

| 20 | 1.725 | 2.086 |

| 21 | 1.721 | 2.080 |

| 22 | 1.717 | 2.074 |

| 23 | 1.714 | 2.069 |

| 24 | 1.711 | 2.064 |

| 25 | 1.708 | 2.060 |

| 30 | 1.697 | 2.042 |

| 35 | 1.690 | 2.030 |

| 40 | 1.684 | 2.021 |

| 45 | 1.679 | 2.014 |

| 50 | 1.676 | 2.009 |

| 60 | 1.671 | 2.000 |

| 70 | 1.667 | 1.994 |

| 80 | 1.664 | 1.990 |

| 90 | 1.662 | 1.987 |

| 100 | 1.660 | 1.984 |

Thus far, we have considered what is called a two-tailed test , where we reject the null hypothesis if the t score for the sample is extreme in either direction. This test makes sense when we believe that the sample mean might differ from the hypothetical population mean but we do not have good reason to expect the difference to go in a particular direction. But it is also possible to do a one-tailed test , where we reject the null hypothesis only if the t score for the sample is extreme in one direction that we specify before collecting the data. This test makes sense when we have good reason to expect the sample mean will differ from the hypothetical population mean in a particular direction.

Here is how it works. Each one-tailed critical value in Table 13.2 can again be interpreted as a pair of values: one positive and one negative. A t score below the lower critical value is in the lowest 5% of the distribution, and a t score above the upper critical value is in the highest 5% of the distribution. For 24 degrees of freedom, these values are −1.711 and +1.711. (These are represented by the green vertical lines in Figure 13.1.) However, for a one-tailed test, we must decide before collecting data whether we expect the sample mean to be lower than the hypothetical population mean, in which case we would use only the lower critical value, or we expect the sample mean to be greater than the hypothetical population mean, in which case we would use only the upper critical value. Notice that we still reject the null hypothesis when the t score for our sample is in the most extreme 5% of the t scores we would expect if the null hypothesis were true—so α remains at .05. We have simply redefined extreme to refer only to one tail of the distribution. The advantage of the one-tailed test is that critical values are less extreme. If the sample mean differs from the hypothetical population mean in the expected direction, then we have a better chance of rejecting the null hypothesis. The disadvantage is that if the sample mean differs from the hypothetical population mean in the unexpected direction, then there is no chance at all of rejecting the null hypothesis.
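The critical-value decision for one-tailed and two-tailed tests can be sketched as a small lookup. The dictionary below copies only two rows of Table 13.2 (df = 9 and df = 24, α = .05); the names `CRITICAL_T` and `reject_null` and the `direction` labels are hypothetical, chosen for this illustration.

```python
# Rows copied from Table 13.2 (alpha = .05): df -> (one-tailed, two-tailed)
CRITICAL_T = {
    9: (1.833, 2.262),
    24: (1.711, 2.064),
}


def reject_null(t: float, df: int, tails: str = "two", direction: str = "") -> bool:
    """Return True if t falls beyond the relevant critical value.

    tails: "two" or "one". For a one-tailed test, direction must be
    "greater" or "less" and should be chosen before collecting data.
    """
    one_tailed, two_tailed = CRITICAL_T[df]
    if tails == "two":
        # Extreme in either direction counts.
        return abs(t) > two_tailed
    if tails == "one" and direction == "greater":
        return t > one_tailed
    if tails == "one" and direction == "less":
        return t < -one_tailed
    raise ValueError("for a one-tailed test, direction must be 'greater' or 'less'")


print(reject_null(1.50, 24))                   # two-tailed
print(reject_null(1.80, 24, "one", "greater")) # expected direction
print(reject_null(-5.0, 24, "one", "greater")) # unexpected direction
```

The last call illustrates the disadvantage described above: a t score in the unexpected direction can never lead to rejection in a one-tailed test, however extreme it is.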

Example One-Sample t Test

Imagine that a health psychologist is interested in the accuracy of university students’ estimates of the number of calories in a chocolate chip cookie. He shows the cookie to a sample of 10 students and asks each one to estimate the number of calories in it. Because the actual number of calories in the cookie is 250, this is the hypothetical population mean of interest (µ 0 ). The null hypothesis is that the mean estimate for the population (μ) is 250. Because he has no real sense of whether the students will underestimate or overestimate the number of calories, he decides to do a two-tailed test. Now imagine further that the participants’ actual estimates are as follows:

250, 280, 200, 150, 175, 200, 200, 220, 180, 250

The mean estimate for the sample ( M ) is 212.00 calories and the standard deviation ( SD ) is 39.17. The health psychologist can now compute the t score for his sample:

\[t=\dfrac{212-250}{\left(\dfrac{39.17}{\sqrt{10}}\right)}=-3.07\]

If he enters the data into one of the online analysis tools or uses SPSS, it would also tell him that the two-tailed p value for this t score (with 10 − 1 = 9 degrees of freedom) is .013. Because this is less than .05, the health psychologist would reject the null hypothesis and conclude that university students tend to underestimate the number of calories in a chocolate chip cookie. If he computes the t score by hand, he could look at Table 13.2 and see that the critical value of t for a two-tailed test with 9 degrees of freedom is ±2.262. The fact that his t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.
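The hand calculation in this example can be reproduced from the summary statistics reported above (M = 212, SD = 39.17, N = 10, µ0 = 250). This is a minimal sketch; the function name `one_sample_t` is an assumption, and the critical value is the two-tailed entry for 9 degrees of freedom in Table 13.2.

```python
import math


def one_sample_t(m: float, mu0: float, sd: float, n: int) -> float:
    """t = (M - mu0) / (SD / sqrt(N)) for a one-sample t test."""
    return (m - mu0) / (sd / math.sqrt(n))


t = one_sample_t(m=212.0, mu0=250.0, sd=39.17, n=10)
df = 10 - 1
critical = 2.262  # two-tailed critical value for df = 9, alpha = .05 (Table 13.2)

# Reject the null hypothesis if t is beyond the critical value in either direction.
reject = abs(t) > critical
print(round(t, 2), df, reject)
```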

Finally, if this researcher had gone into this study with good reason to expect that university students underestimate the number of calories, then he could have done a one-tailed test instead of a two-tailed test. The only thing this decision would change is the critical value, which would be −1.833. This slightly less extreme value would make it a bit easier to reject the null hypothesis. However, if it turned out that university students overestimate the number of calories—no matter how much they overestimate it—the researcher would not have been able to reject the null hypothesis.

The Dependent-Samples t Test

The dependent-samples t test (sometimes called the paired-samples t test) is used to compare two means for the same sample tested at two different times or under two different conditions. This comparison is appropriate for pretest-posttest designs or within-subjects experiments. The null hypothesis is that the means at the two times or under the two conditions are the same in the population. The alternative hypothesis is that they are not the same. This test can also be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

It helps to think of the dependent-samples t test as a special case of the one-sample t test. However, the first step in the dependent-samples t test is to reduce the two scores for each participant to a single difference score by taking the difference between them. At this point, the dependent-samples t test becomes a one-sample t test on the difference scores. The hypothetical population mean (µ 0 ) of interest is 0 because this is what the mean difference score would be if there were no difference on average between the two times or two conditions. We can now think of the null hypothesis as being that the mean difference score in the population is 0 (µ = 0) and the alternative hypothesis as being that the mean difference score in the population is not 0 (µ ≠ 0).
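Written out, the reduction to a one-sample test looks like this. The symbols D_i, M_D, and SD_D (the difference score for participant i, and the mean and standard deviation of the difference scores) are notation introduced here for illustration; they do not appear in the original text.

\[D_i = X_{i,2} - X_{i,1}, \qquad t=\dfrac{M_D-0}{\left(\dfrac{SD_D}{\sqrt{N}}\right)}, \qquad df = N-1\]

Here X_{i,1} and X_{i,2} are the two scores for participant i, and N is the number of participants (equivalently, the number of difference scores).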

Example Dependent-Samples t Test

Imagine that the health psychologist now knows that people tend to underestimate the number of calories in junk food and has developed a short training program to improve their estimates. To test the effectiveness of this program, he conducts a pretest-posttest study in which 10 participants estimate the number of calories in a chocolate chip cookie before the training program and then again afterward. Because he expects the program to increase the participants’ estimates, he decides to do a one-tailed test. Now imagine further that the pretest estimates are

230, 250, 280, 175, 150, 200, 180, 210, 220, 190

and that the posttest estimates (for the same participants in the same order) are

250, 260, 250, 200, 160, 200, 200, 180, 230, 240

The difference scores, then, are as follows:

+20, +10, −30, +25, +10, 0, +20, −30, +10, +50

Note that it does not matter whether the first set of scores is subtracted from the second or the second from the first as long as it is done the same way for all participants. In this example, it makes sense to subtract the pretest estimates from the posttest estimates so that positive difference scores mean that the estimates went up after the training and negative difference scores mean the estimates went down.

The mean of the difference scores is 8.50 with a standard deviation of 24.27. The health psychologist can now compute the t score for his sample as follows:

\[t=\dfrac{8.5-0}{\left(\dfrac{24.27}{\sqrt{10}}\right)}=1.11\]

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the one-tailed p value for this t score (again with 10 − 1 = 9 degrees of freedom) is .148. Because this is greater than .05, he would retain the null hypothesis and conclude that there is not enough evidence that the training program increases people’s calorie estimates. If he were to compute the t score by hand, he could look at Table 13.2 and see that the critical value of t for a one-tailed test with 9 degrees of freedom is +1.833. (It is positive this time because he was expecting a positive mean difference score.) The fact that his t score was less extreme than this critical value would tell him that his p value is greater than .05 and that he should fail to reject the null hypothesis.
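The steps of this example can be traced in Python using the pretest and posttest estimates listed above. This is a sketch using only the standard library; the variable names are illustrative, and the critical value is the one-tailed entry for 9 degrees of freedom in Table 13.2.

```python
import math
import statistics

pretest = [230, 250, 280, 175, 150, 200, 180, 210, 220, 190]
posttest = [250, 260, 250, 200, 160, 200, 200, 180, 230, 240]

# Reduce each participant's two scores to one difference score
# (posttest minus pretest, so positive means the estimate went up).
diffs = [post - pre for pre, post in zip(pretest, posttest)]

mean_diff = statistics.mean(diffs)
sd_diff = statistics.stdev(diffs)  # sample standard deviation (N - 1 denominator)

# One-sample t test on the difference scores against mu0 = 0
t = (mean_diff - 0) / (sd_diff / math.sqrt(len(diffs)))
df = len(diffs) - 1

critical = 1.833  # one-tailed critical value for df = 9, alpha = .05 (Table 13.2)
reject = t > critical  # upper tail only, because an increase was predicted

print(diffs)
print(mean_diff, round(sd_diff, 2), round(t, 2), df, reject)
```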

The Independent-Samples t Test

The independent-samples t test is used to compare the means of two separate samples ( M 1 and M 2 ). The two samples might have been tested under different conditions in a between-subjects experiment, or they could be preexisting groups in a correlational design (e.g., women and men, extraverts and introverts). The null hypothesis is that the means of the two populations are the same: µ 1 = µ 2 . The alternative hypothesis is that they are not the same: µ 1 ≠ µ 2 . Again, the test can be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

The t statistic here is a bit more complicated because it must take into account two sample means, two standard deviations, and two sample sizes. The formula is as follows:

![Rendered by QuickLaTeX.com \[t=\dfrac{M_1-M_2}{\sqrt{\dfrac{{SD_1}^2}{n_1}+\dfrac{{SD_2}^2}{n_2}}}\]](https://opentextbc.ca/researchmethods/wp-content/ql-cache/quicklatex.com-0242d1ab42d68adb3bb852a45bfb9b2d_l3.png)

Notice that this formula includes squared standard deviations (the variances) that appear inside the square root symbol. Also, lowercase n 1 and n 2 refer to the sample sizes in the two groups or conditions (as opposed to capital N , which generally refers to the total sample size). The only additional thing to know here is that there are N − 2 degrees of freedom for the independent-samples t test.

Example Independent-Samples t Test

Now the health psychologist wants to compare the calorie estimates of people who regularly eat junk food with the estimates of people who rarely eat junk food. He believes the difference could come out in either direction so he decides to conduct a two-tailed test. He collects data from a sample of eight participants who eat junk food regularly and seven participants who rarely eat junk food. The data are as follows:

Junk food eaters: 180, 220, 150, 85, 200, 170, 150, 190

Non–junk food eaters: 200, 240, 190, 175, 200, 300, 240

The mean for the junk food eaters is 168.12 with a standard deviation of 41.23. The mean for the non–junk food eaters is 220.71 with a standard deviation of 42.66. Subtracting the junk food eaters’ mean from the non–junk food eaters’ mean, he can now compute his t score as follows:

![Rendered by QuickLaTeX.com \[t=\dfrac{220.71-168.12}{\sqrt{\dfrac{41.23^2}{8}+\dfrac{42.66^2}{7}}}=2.42\]](https://opentextbc.ca/researchmethods/wp-content/ql-cache/quicklatex.com-8dd797ba37fac6e3618f34946daefb5b_l3.png)

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the two-tailed p value for this t score (with 15 − 2 = 13 degrees of freedom) is approximately .03. Because this p value is less than .05, the health psychologist would reject the null hypothesis and conclude that people who eat junk food regularly make lower calorie estimates than people who eat it rarely. If he were to compute the t score by hand, he could look at Table 13.2 and see that the critical value of t for a two-tailed test with 13 degrees of freedom is ±2.160. The fact that his t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.
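As a check on the calculation, the following sketch recomputes the group means and the t score directly from the raw scores using the formula given above. It relies only on Python's standard library; `independent_t` and `T_CRIT` are hypothetical names, and the critical value is copied from the text, not computed.

```python
import math
import statistics

junk_food_eaters = [180, 220, 150, 85, 200, 170, 150, 190]
non_junk_food_eaters = [200, 240, 190, 175, 200, 300, 240]


def independent_t(sample_1, sample_2):
    """t = (M1 - M2) / sqrt(SD1^2 / n1 + SD2^2 / n2), with N - 2 degrees of freedom."""
    m1, m2 = statistics.mean(sample_1), statistics.mean(sample_2)
    var1, var2 = statistics.variance(sample_1), statistics.variance(sample_2)
    n1, n2 = len(sample_1), len(sample_2)
    t = (m1 - m2) / math.sqrt(var1 / n1 + var2 / n2)
    df = n1 + n2 - 2
    return m1, m2, t, df


# Non-junk food eaters are entered first so that t comes out positive.
m1, m2, t, df = independent_t(non_junk_food_eaters, junk_food_eaters)

# Two-tailed critical value for 13 degrees of freedom, taken from Table 13.2.
T_CRIT = 2.160
reject_null = abs(t) > T_CRIT

print(f"M (non-junk) = {m1:.2f}, M (junk) = {m2:.2f}, t({df}) = {t:.2f}")
print("reject null" if reject_null else "retain null")
```

Swapping the order of the two samples only flips the sign of t, which is why the two-tailed decision compares the absolute value of t with the critical value.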

The Analysis of Variance

When there are more than two groups or condition means to be compared, the most common null hypothesis test is the analysis of variance (ANOVA) . In this section, we look primarily at the one-way ANOVA , which is used for between-subjects designs with a single independent variable. We then briefly consider some other versions of the ANOVA that are used for within-subjects and factorial research designs.

One-Way ANOVA

The one-way ANOVA is used to compare the means of more than two samples ( M 1 , M 2 … M G ) in a between-subjects design. The null hypothesis is that all the means are equal in the population: µ 1 = µ 2 =…= µ G . The alternative hypothesis is that not all the means in the population are equal.

The test statistic for the ANOVA is called F . It is a ratio of two estimates of the population variance based on the sample data. One estimate of the population variance is called the mean squares between groups (MS B ) and is based on the differences among the sample means. The other is called the mean squares within groups (MS W ) and is based on the differences among the scores within each group. The F statistic is the ratio of the MS B to the MS W and can therefore be expressed as follows:

F = MS B ÷ MS W

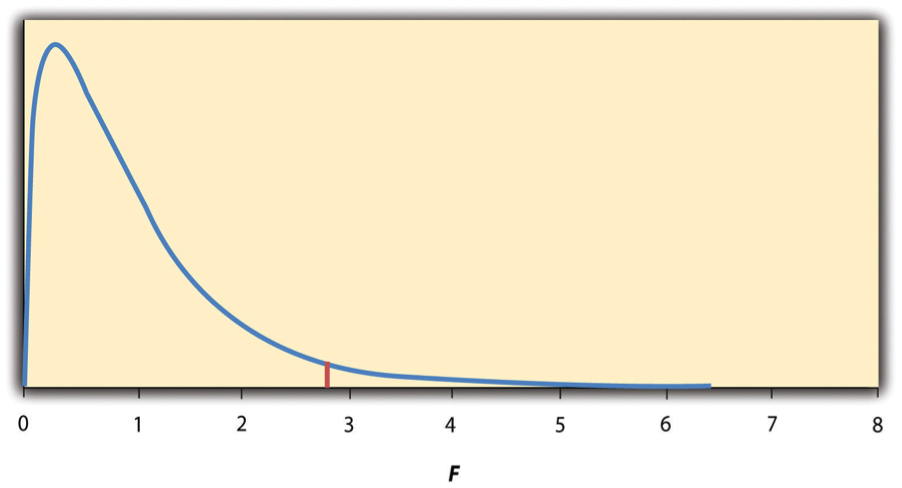

Again, the reason that F is useful is that we know how it is distributed when the null hypothesis is true. As shown in Figure 13.2, this distribution is unimodal and positively skewed with values that cluster around 1. The precise shape of the distribution depends on both the number of groups and the sample size, and there is a degrees of freedom value associated with each of these. The between-groups degrees of freedom is the number of groups minus one: df B = ( G − 1). The within-groups degrees of freedom is the total sample size minus the number of groups: df W = N − G . Again, knowing the distribution of F when the null hypothesis is true allows us to find the p value.

The online tools in Chapter 12 and statistical software such as Excel and SPSS will compute F and find the p value. If p is less than .05, then we reject the null hypothesis and conclude that there are differences among the group means in the population. If p is greater than .05, then we retain the null hypothesis and conclude that there is not enough evidence to say that there are differences. In the unlikely event that we would compute F by hand, we can use a table of critical values like Table 13.3 “Table of Critical Values of F ” to make the decision. The idea is that any F ratio greater than the critical value has a p value of less than .05. Thus if the F ratio we compute is beyond the critical value, then we reject the null hypothesis. If the F ratio we compute is less than the critical value, then we retain the null hypothesis.

| df W | df B = 2 | df B = 3 | df B = 4 |
|---|---|---|---|
| 8 | 4.459 | 4.066 | 3.838 |
| 9 | 4.256 | 3.863 | 3.633 |
| 10 | 4.103 | 3.708 | 3.478 |
| 11 | 3.982 | 3.587 | 3.357 |
| 12 | 3.885 | 3.490 | 3.259 |
| 13 | 3.806 | 3.411 | 3.179 |
| 14 | 3.739 | 3.344 | 3.112 |
| 15 | 3.682 | 3.287 | 3.056 |
| 16 | 3.634 | 3.239 | 3.007 |
| 17 | 3.592 | 3.197 | 2.965 |
| 18 | 3.555 | 3.160 | 2.928 |
| 19 | 3.522 | 3.127 | 2.895 |
| 20 | 3.493 | 3.098 | 2.866 |
| 21 | 3.467 | 3.072 | 2.840 |
| 22 | 3.443 | 3.049 | 2.817 |
| 23 | 3.422 | 3.028 | 2.796 |
| 24 | 3.403 | 3.009 | 2.776 |
| 25 | 3.385 | 2.991 | 2.759 |
| 30 | 3.316 | 2.922 | 2.690 |
| 35 | 3.267 | 2.874 | 2.641 |
| 40 | 3.232 | 2.839 | 2.606 |
| 45 | 3.204 | 2.812 | 2.579 |
| 50 | 3.183 | 2.790 | 2.557 |
| 55 | 3.165 | 2.773 | 2.540 |
| 60 | 3.150 | 2.758 | 2.525 |
| 65 | 3.138 | 2.746 | 2.513 |
| 70 | 3.128 | 2.736 | 2.503 |
| 75 | 3.119 | 2.727 | 2.494 |
| 80 | 3.111 | 2.719 | 2.486 |
| 85 | 3.104 | 2.712 | 2.479 |
| 90 | 3.098 | 2.706 | 2.473 |
| 95 | 3.092 | 2.700 | 2.467 |
| 100 | 3.087 | 2.696 | 2.463 |

Example One-Way ANOVA

Imagine that the health psychologist wants to compare the calorie estimates of psychology majors, nutrition majors, and professional dieticians. He collects the following data:

Psych majors: 200, 180, 220, 160, 150, 200, 190, 200

Nutrition majors: 190, 220, 200, 230, 160, 150, 200, 210

Dieticians: 220, 250, 240, 275, 250, 230, 200, 240

The means are 187.50 ( SD = 23.14), 195.00 ( SD = 27.77), and 238.13 ( SD = 22.35), respectively. So it appears that dieticians made substantially more accurate estimates on average. The researcher would almost certainly enter these data into a program such as Excel or SPSS, which would compute F for him and find the p value. Table 13.4 shows the output of the one-way ANOVA function in Excel for these data. This table is referred to as an ANOVA table. It shows that MS B is 5,971.88, MS W is 602.23, and their ratio, F , is 9.92. The p value is .0009. Because this value is below .05, the researcher would reject the null hypothesis and conclude that the mean calorie estimates for the three groups are not the same in the population. Notice that the ANOVA table also includes the “sum of squares” ( SS ) for between groups and for within groups. These values are computed on the way to finding MS B and MS W but are not typically reported by the researcher. Finally, if the researcher were to compute the F ratio by hand, he could look at Table 13.3 and see that the critical value of F with 2 and 21 degrees of freedom is 3.467 (the same value in Table 13.4 under F crit ). The fact that his F score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.

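| Source of variation | SS | df | MS | F | p value | F crit |
|---|---|---|---|---|---|---|
| Between groups | 11,943.75 | 2 | 5,971.875 | 9.916234 | 0.000928 | 3.4668 |
| Within groups | 12,646.88 | 21 | 602.2321 | | | |
| Total | 24,590.63 | 23 | | | | |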
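To see where the entries in the ANOVA table come from, here is a minimal sketch that computes the sums of squares, degrees of freedom, mean squares, and F from the raw scores using only Python's standard library. It assumes eight scores per group (24 in total), which is what the 21 within-groups degrees of freedom in the table imply. The function name `one_way_anova` is illustrative, and the critical value is the one quoted in the text rather than computed.

```python
import statistics

# Assumption: 8 scores per group (N = 24), matching df W = 21 in the ANOVA table.
groups = {
    "Psych majors": [200, 180, 220, 160, 150, 200, 190, 200],
    "Nutrition majors": [190, 220, 200, 230, 160, 150, 200, 210],
    "Dieticians": [220, 250, 240, 275, 250, 230, 200, 240],
}


def one_way_anova(samples):
    """Return the pieces of a one-way ANOVA table for a list of samples."""
    all_scores = [x for s in samples for x in s]
    grand_mean = statistics.mean(all_scores)
    # Between groups: how far each group mean sits from the grand mean.
    ss_between = sum(len(s) * (statistics.mean(s) - grand_mean) ** 2 for s in samples)
    # Within groups: how far each score sits from its own group mean.
    ss_within = sum((x - statistics.mean(s)) ** 2 for s in samples for x in s)
    df_between = len(samples) - 1
    df_within = len(all_scores) - len(samples)
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "ss_between": ss_between, "ss_within": ss_within,
        "df_between": df_between, "df_within": df_within,
        "ms_between": ms_between, "ms_within": ms_within,
        "F": ms_between / ms_within,
    }


result = one_way_anova(list(groups.values()))

# Critical value of F for 2 and 21 degrees of freedom, taken from Table 13.3.
F_CRIT = 3.467
reject_null = result["F"] > F_CRIT

for name, value in result.items():
    print(f"{name}: {value:.3f}")
print("reject null" if reject_null else "retain null")
```

The total sum of squares in the table is simply the sum of the between-groups and within-groups values, so it does not need a separate calculation.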

ANOVA Elaborations

Post hoc comparisons.

When we reject the null hypothesis in a one-way ANOVA, we conclude that the group means are not all the same in the population. But this can indicate different things. With three groups, it can indicate that all three means are significantly different from each other. Or it can indicate that one of the means is significantly different from the other two, but the other two are not significantly different from each other. It could be, for example, that the mean calorie estimates of psychology majors, nutrition majors, and dieticians are all significantly different from each other. Or it could be that the mean for dieticians is significantly different from the means for psychology and nutrition majors, but the means for psychology and nutrition majors are not significantly different from each other. For this reason, statistically significant one-way ANOVA results are typically followed up with a series of post hoc comparisons of selected pairs of group means to determine which are different from which others.

One approach to post hoc comparisons would be to conduct a series of independent-samples t tests comparing each group mean to each of the other group means. But there is a problem with this approach. In general, if we conduct a t test when the null hypothesis is true, we have a 5% chance of mistakenly rejecting the null hypothesis (see Section 13.3 “Additional Considerations” for more on such Type I errors). If we conduct several t tests when the null hypothesis is true, the chance of mistakenly rejecting at least one null hypothesis increases with each test we conduct. Thus researchers do not usually make post hoc comparisons using standard t tests because there is too great a chance that they will mistakenly reject at least one null hypothesis. Instead, they use one of several modified t test procedures, among them the Bonferroni procedure, Fisher’s least significant difference (LSD) test, and Tukey’s honestly significant difference (HSD) test. The details of these approaches are beyond the scope of this book, but it is important to understand their purpose. It is to keep the risk of mistakenly rejecting a true null hypothesis to an acceptable level (close to 5%).
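A rough sense of how quickly this risk grows can be had from a short calculation. The sketch below assumes, purely for simplicity, that the tests are independent; pairwise comparisons among the same groups are not strictly independent, so the numbers are a guide and not an exact figure. The function names are illustrative, and the Bonferroni adjustment shown is the usual one of dividing the significance level by the number of tests.

```python
from math import comb


def familywise_error_rate(alpha, n_tests):
    """Chance of at least one Type I error across n_tests independent tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level under the Bonferroni procedure."""
    return alpha / n_tests


n_groups = 3
n_pairs = comb(n_groups, 2)  # number of pairwise comparisons among the groups

uncorrected = familywise_error_rate(0.05, n_pairs)
per_test_alpha = bonferroni_alpha(0.05, n_pairs)
corrected = familywise_error_rate(per_test_alpha, n_pairs)

print(f"{n_pairs} comparisons, uncorrected risk = {uncorrected:.3f}")
print(f"Bonferroni per-test alpha = {per_test_alpha:.4f}, corrected risk = {corrected:.3f}")
```

With three groups there are three pairwise comparisons, and under the independence assumption the uncorrected risk is already well above 5%, while the corrected risk stays just under it.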

Repeated-Measures ANOVA

Recall that the one-way ANOVA is appropriate for between-subjects designs in which the means being compared come from separate groups of participants. It is not appropriate for within-subjects designs in which the means being compared come from the same participants tested under different conditions or at different times. This requires a slightly different approach, called the repeated-measures ANOVA . The basics of the repeated-measures ANOVA are the same as for the one-way ANOVA. The main difference is that measuring the dependent variable multiple times for each participant allows for a more refined measure of MS W . Imagine, for example, that the dependent variable in a study is a measure of reaction time. Some participants will be faster or slower than others because of stable individual differences in their nervous systems, muscles, and other factors. In a between-subjects design, these stable individual differences would simply add to the variability within the groups and increase the value of MS W . In a within-subjects design, however, these stable individual differences can be measured and subtracted from the value of MS W . This lower value of MS W means a higher value of F and a more sensitive test.
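The effect of removing stable individual differences can be illustrated with a small calculation. The sketch below uses entirely hypothetical reaction times (four participants, three conditions) and the standard partition of the within-groups sum of squares into a part due to participants and a residual error part. It is meant only to show the direction of the effect, not to stand in for a full repeated-measures analysis.

```python
import statistics

# Hypothetical reaction times in ms: one row per participant, one column per condition.
scores = [
    [300, 320, 340],
    [400, 410, 440],
    [350, 380, 390],
    [450, 470, 480],
]

n_subjects = len(scores)
n_conditions = len(scores[0])
all_scores = [x for row in scores for x in row]
grand_mean = statistics.mean(all_scores)

condition_means = [statistics.mean(col) for col in zip(*scores)]
subject_means = [statistics.mean(row) for row in scores]

ss_total = sum((x - grand_mean) ** 2 for x in all_scores)
ss_conditions = n_subjects * sum((m - grand_mean) ** 2 for m in condition_means)
ss_within = ss_total - ss_conditions  # what a between-subjects analysis would use
ss_subjects = n_conditions * sum((m - grand_mean) ** 2 for m in subject_means)
ss_error = ss_within - ss_subjects  # what remains after removing participant differences

ms_conditions = ss_conditions / (n_conditions - 1)
ms_within = ss_within / (len(all_scores) - n_conditions)
ms_error = ss_error / ((n_subjects - 1) * (n_conditions - 1))

f_between = ms_conditions / ms_within  # treating the data as between-subjects
f_repeated = ms_conditions / ms_error  # treating the data as repeated measures

print(f"MS within = {ms_within:.2f}, F = {f_between:.2f}")
print(f"MS error  = {ms_error:.2f}, F = {f_repeated:.2f}")
```

In these made-up data most of the within-groups variability comes from some participants being consistently slower than others, so removing it shrinks the denominator and produces a much larger F.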

Factorial ANOVA