Object Detection

4079 papers with code • 101 benchmarks • 275 datasets

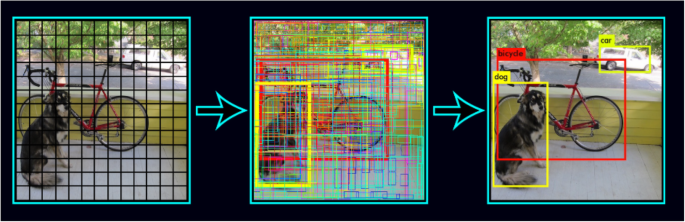

Object Detection is a computer vision task in which the goal is to detect and locate objects of interest in an image or video. The task involves identifying the position and boundaries of objects in an image, and classifying the objects into different categories. It forms a crucial part of visual recognition, alongside image classification and retrieval.

The state-of-the-art methods can be categorized into two main types: one-stage methods and two-stage methods:

One-stage methods prioritize inference speed, and example models include YOLO, SSD and RetinaNet.

Two-stage methods prioritize detection accuracy, and example models include Faster R-CNN, Mask R-CNN and Cascade R-CNN.

The most popular benchmark is the MSCOCO dataset. Models are typically evaluated according to a Mean Average Precision metric.

( Image credit: Detectron )

Benchmarks

| Best Model |
|---|
| Co-DETR |
| Co-DETR |
| EVA |
| Cascade Eff-B7 NAS-FPN (Copy Paste pre-training, single-scale) |
| Relation-DETR (Swin-L 2x) |
| InternImage-H |
| MaxViT-B |
| TridentNet |
| YOLOX-L |
| YOLOX-L |
| UniverseNet-20.08 |
| YOLOv8x |
| CAFR |
| Co-DETR (single-scale) |
| CAFR |
| YOLOv8+SCALE |
| ERGO-12 |
| Synth Pretrained Faster R-CNN ResNeXt-101-FPN |
| VSTAM |
| PRB-FPN |
| InternImage-H |
| DNTR |
| Mask RCNN R50 |
| Swin-T (ImageNet-1k pretrain) |
| Grounding DINO 1.5 Pro |
| PVT (Pyramid Vision Transformer; trained on PeopleArt and PopArt) |
| Cascade R-CNN (R50-FPN) |
| MMPedestron |
| MMPedestron |
| MMPedestron |
| Patches |
| Patches |
| PANet++ |
| LeapMotor_Det |
| PP-YOLOE-plus |
| Co-DETR (single-scale) |
| YOLOv5x |
| Patches |
| IterDet (Faster RCNN, ResNet50, 2 iterations) |
| YOLOv8-M |
| AP (%) |
| MOAT-2 |
| Logo-Yolo |
| GHOST |
| UGainS |
| LRPz |
| RANet(Radar) |
| DETReg (MDef-DETR) |
| YOLOv5s |
| OBSS YOLOv5+Track Boosting (Including Synthetic Data) |
| YOLT |
| Grounding DINO 1.5 Pro |
| DASS-Detector (YOLOX XL) |
| ScaleDet |
| MILA |
| YoloV8 |
| CDDMSL |
| Attention-based Joint Detection of Object and Semantic Part |
| CDSSD |
| Vote3Deep |
| Vote3Deep |
| Vote3Deep |
| Vote3Deep |
| Vote3Deep |
| Vote3Deep |
| PNe within NGC1380 & NGC1404 |
| RepPoints + Self-adaptation |
| UniverseNet |
| YOLOv5 |
| EfficientDet-D2 |
| EfficientDet-D2 |
| EfficientDet-D2 |
| EfficientDet-D2 |
| EfficientDet-D2 |
| RL [10] Lpixel |
| MS-PAD |
| Retina-UNet-Ag |
| YOLT |
| Aquavision |
| GLIP-T |
| CDDMSL |
| S-RCNN+Ours |
| V2F-Net |
| PP-YOLOE |
| YOLO |
| ScaleDet |
| ScaleDet |
| SSOD + Crop (L + U) |
| SSOD + Crop (L + U) |
| SSOD + Crop (L + U) |
| TarDAL |
| TinyissimoYOLO-v8 |
| DAS |
| R^3-CNN |
| CDDMSL |
| CDDMSL |
| CDDMSL |
| CDDMSL |
| CDDMSL |
| yolov8x-seg |

Most implemented papers

Deep Residual Learning for Image Recognition

Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won the 1st places on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.

YOLOv3: An Incremental Improvement

At 320x320 YOLOv3 runs in 22 ms at 28.2 mAP, as accurate as SSD but three times faster.

YOLO9000: Better, Faster, Stronger

On the 156 classes not in COCO, YOLO9000 gets 16.0 mAP.

Focal Loss for Dense Object Detection

Our novel Focal Loss focuses training on a sparse set of hard examples and prevents the vast number of easy negatives from overwhelming the detector during training.

YOLOv4: Optimal Speed and Accuracy of Object Detection

There are a huge number of features which are said to improve Convolutional Neural Network (CNN) accuracy.

SSD: Single Shot MultiBox Detector

weiliu89/caffe • 8 Dec 2015

Experimental results on the PASCAL VOC, MS COCO, and ILSVRC datasets confirm that SSD has comparable accuracy to methods that utilize an additional object proposal step and is much faster, while providing a unified framework for both training and inference.

Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

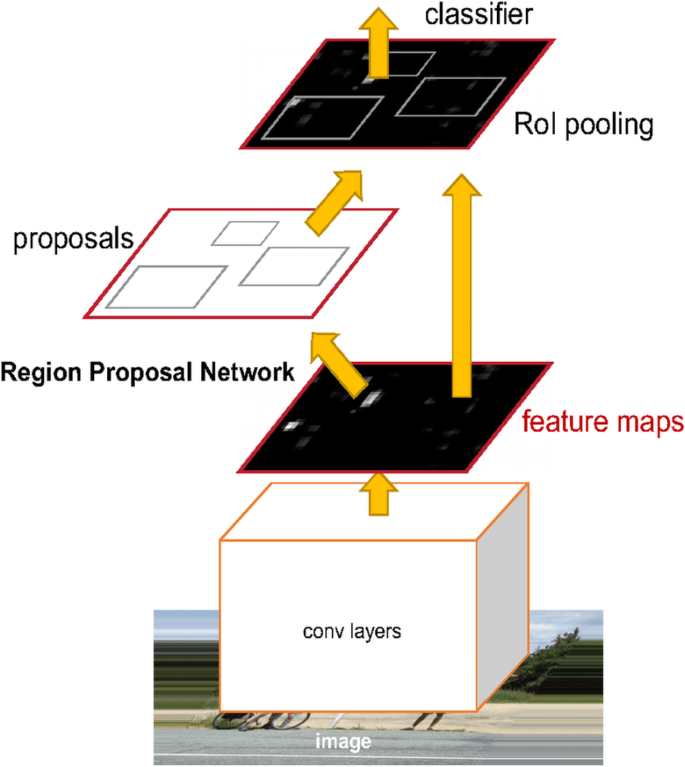

In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals.

Mask R-CNN

Our approach efficiently detects objects in an image while simultaneously generating a high-quality segmentation mask for each instance.

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

We present a class of efficient models called MobileNets for mobile and embedded vision applications.

MobileNetV2: Inverted Residuals and Linear Bottlenecks

In this paper we describe a new mobile architecture, MobileNetV2, that improves the state of the art performance of mobile models on multiple tasks and benchmarks as well as across a spectrum of different model sizes.


- Open access

- Published: 29 August 2024

Adaptive condition-aware high-dimensional decoupling remote sensing image object detection algorithm

- Chenshuai Bai 1 ,

- Xiaofeng Bai 1 ,

- Kaijun Wu 1 &

- Yuanjie Ye 2

Scientific Reports volume 14, Article number: 20090 (2024)

- Classification and taxonomy

- Computational biology and bioinformatics

- Computational models

- Image processing

Remote Sensing Image Object Detection (RSIOD) faces the challenges of multi-scale objects, dense object overlap and uneven data distribution in practical applications. To address these problems, this paper proposes the YOLO-ACPHD RSIOD algorithm. The algorithm adopts Adaptive Condition Awareness Technology (ACAT), which dynamically adjusts the parameters of the convolution kernel to adapt to objects of different scales and positions. Compared with a traditional fixed convolution kernel, this dynamic adjustment adapts better to the diversity of object scale, orientation and shape, and thus improves the accuracy and robustness of Object Detection (OD). In addition, High-Dimensional Decoupling Technology (HDDT) reduces the amount of calculation to 1/N by performing deep convolution on the input data and then performing spatial convolution on each channel. When dealing with large-scale Remote Sensing Image (RSI) data, this reduction in computation significantly improves efficiency and speeds up OD, which better meets the needs of practical application scenarios. In experiments on the RSOD RSI data set, the YOLO-ACPHD model reaches an F1 value of 0.99, a Precision value of 1, a Precision-Recall value of 0.994, a Recall value of 1, and an mAP value of 99.36%, which indicates strong accuracy and comprehensiveness in OD.


Introduction

OD in RSI is an essential yet complex task that faces numerous obstacles in real-world applications. Advances in remote sensing in recent years have produced data with pronounced multi-scale characteristics, substantial object overlap, and non-uniform data distribution, all of which make OD difficult 1, 2.

OD algorithm flow chart.

Figure 1 depicts the schematic of an OD model. RSIOD is one of the important research directions in the field of computer vision. In RSI, the diversity of object scale, orientation and shape, together with complex background interference, poses many challenges for the OD task 3, 4, 5. With the development of remote sensing technology and deep learning, a steady stream of RSIOD methods has emerged and achieved remarkable results 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17. These methods improve OD performance in RSI by introducing different network structures, feature fusion strategies and optimization techniques.

Zhang et al. 18 detect oriented objects in RSI using a covariant network and equivariant contrastive learning, which improves detection accuracy. Wang et al. 19 introduced an improved YOLOv5n lightweight network for aircraft detection in RSI, which achieves efficient OD with low computational complexity and suits resource-constrained scenarios. Huang et al. 20 used cross-image context information to improve the accuracy and robustness of salient OD in RSI. Huo et al. 21 improved salient OD in RSI through global and multi-scale aggregation networks, which suits RSI analysis in various complex scenes. To address background interference and the difficulty of detecting small objects in RSI, Dong et al. 22 adopted a strategy of background separation and small object compensation, which improved the accuracy and robustness of detection. By introducing explicit semantic guidance, Liu et al. 23 detect small objects in RSI effectively, improving both accuracy and efficiency. Guo et al. 24 proposed a fully deformable convolution network for ship detection in RSI; deformable convolution adapts better to the irregular shapes of ships, which improves detection accuracy and robustness. Liu et al. 25 proposed the Swin Transformer, whose hierarchical structure and shifted window mechanism capture both global and local image information and yield better visual representations. Ni et al. 26 combined multiple feature fusion and learning methods to improve scene classification accuracy in synthetic aperture radar images and to enhance the understanding and characterization of complex scenes. Luo et al. 27 introduced a channel attention mechanism to enhance channel information in the Feature Pyramid Network (FPN), so that the model adapts better to OD tasks at different scales and in complex scenes in RSI. Gao et al. 28 proposed global perception and local refinement to detect objects in RSI accurately at different scales. Ming et al. 29 improved the accuracy of arbitrarily oriented OD by learning dynamic anchors that adapt to objects of different angles and shapes, which addresses the difficulty that fixed anchors have with varied object shapes and orientations. Qian et al. 30 proposed a bounding box regression method that bridges oriented OD and horizontal OD, sharing and converting information between the two to improve detection accuracy. Yu et al. 31 introduced an attention-based FPN (A-FPN) and applied it to ship detection in RSI, improving performance and accuracy through the design of the feature pyramid network.

Other approaches include rotation-aware and multi-scale convolution neural networks 32, hierarchical region generation 33, multi-scale feature fusion 34, rotation-aware network structures and loss functions 35, and anchor-free OD methods 36, 37, 38. These methods have achieved remarkable results in different respects and provide effective solutions for RSIOD tasks. However, challenges such as object scale change and complex background interference remain and need further study.

Therefore, this paper proposes an OD algorithm called YOLO-ACPHD (YOLOv8 with Adaptive Condition Awareness Technology and High-Dimensional Decoupling Technology). The algorithm uses ACAT to adapt to objects of different scales and locations by dynamically adjusting the parameters of the convolution kernel, which addresses the challenges of multi-scale objects and dense object overlap. Instead of using fixed convolution kernels, ACAT adaptively generates convolution kernels of different shapes and sizes by analyzing the characteristics of the input data. HDDT performs the calculation as a separable convolution: the input data first passes through a deep (depthwise) convolution, and then each channel is spatially convolved. HDDT can reduce the amount of calculation to 1/N, where N is the number of convolution kernels. Through these improvements, the YOLO-ACPHD algorithm has better applicability, accuracy, and robustness in dealing with RSIOD tasks.

Data and methods

Data set introduction.

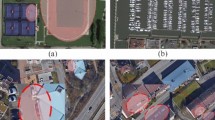

Figure 2 shows samples from the RSOD data set, an open resource tailored for the detection of small objects in RSI. It contains four classes: aircraft (4993 aircraft in 446 images), oiltank (1586 oil tanks in 165 images), overpass (180 overpasses in 176 images) and playground (191 playgrounds in 189 images).

RSOD RSI data sample diagram.

YOLO-ACPHD module

The YOLO-ACPHD model proposed in this paper builds on the following two techniques to improve detection performance and robustness.

The ACAT module dynamically adjusts the parameters of the convolution kernel to adapt to objects of different scales and positions. In RSI the scale, orientation and shape of objects vary widely, so a fixed convolution kernel cannot adapt to them effectively. ACAT instead learns its convolution kernels from the features of the training samples, producing multi-level, multi-aspect convolutions grounded in the training data, which enhances adaptability and accuracy in complex scenes.

The HDDT module applies a deep (depthwise) convolution to each channel and then carries out a convolution across the channels. Compared with the conventional convolution algorithm, the computation of HDDT is reduced to 1/N, where N is the number of convolution kernels. This saving significantly accelerates object detection on large-scale RSI.

By using the ACAT and HDDT modules, the YOLO-ACPHD RSIOD algorithm adapts more flexibly to objects of different scales and locations while also improving computational efficiency. Consequently, detection accuracy and robustness improve significantly on complex and dynamic RSI.

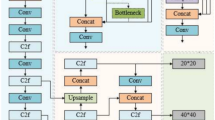

The YOLO-ACPHD-based RSIOD method uses a variety of advanced OD techniques to improve the performance and robustness of the algorithm, as shown in Fig. 3.

YOLO-ACPHD overall network architecture.

The ACAT and HDDT modules improve the adaptability and computational efficiency of the algorithm. By adaptively adjusting to the characteristics of each region, ACAT enhances the adaptability and accuracy for various features. HDDT uses a separated convolution operation, which preserves spatial information and improves robustness to objects. The results of this work lay a foundation for research on RSI data analysis methods for complex scenes.

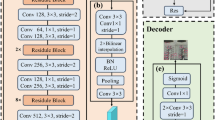

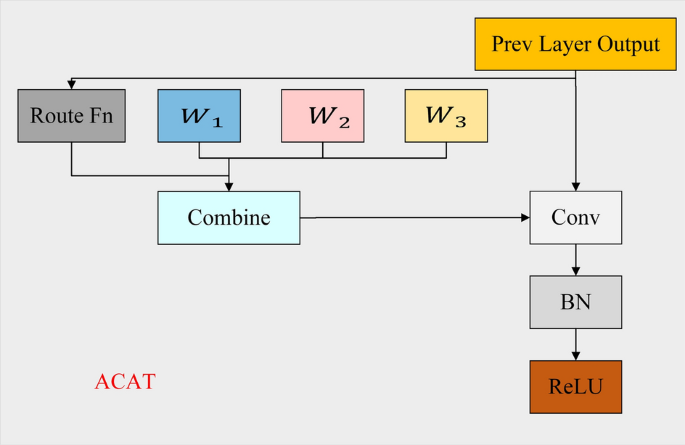

Adaptive Condition Awareness Technology

As depicted in Fig. 4, a standard convolution layer applies the same convolution kernel (its weights) to every input example, so the operation is identical regardless of the content of the input. In the ACAT layer the convolution kernel is adaptive: it is computed from the input sample at hand, so each input example can have its own kernel. The ACAT layer learns to adjust the kernel according to the features and context of the input example, aiming to enhance the model’s expressive capacity and its generalization. Because the kernel is parameterized as a function of the input example, the layer shares a common set of learned kernel parameters across examples while still producing a different effective kernel for each, so the model adapts better to diverse input data.

Conditional parameter convolution diagram.

In ACAT, the convolution kernel is parameterized as a function of the input example. In particular, the output of the layer is computed with the following equation.

\(\mathrm{Output}(x)=\sigma \left( \left( \alpha _{1}\cdot W_{1}+\cdots +\alpha _{n}\cdot W_{n}\right) *x\right)\)

Here x denotes the output of the previous layer, n is the number of convolution kernels \(W_{i}\) in the ACAT layer, \(\sigma\) denotes the activation function, \(*\) denotes convolution, and \(\alpha _{i}= r_{i}(x)\) is a sample-dependent weight 39.

Traditional convolution layers typically increase their capacity by enlarging the kernel’s height/width or the number of input/output channels. However, every extra parameter in a convolution requires more multiplication-addition operations, a cost that scales linearly with the image’s pixel count. In the ACAT layer, a convolution kernel is calculated for each example as a linear combination of the n kernels before the convolution is applied. Each kernel is calculated only once but is applied at many different positions of the input image. Increasing n therefore raises the capacity of the network at a small computational cost: each additional parameter requires only one additional multiplication operation. As Fig. 5 shows, the ACAT layer is mathematically equivalent to a more expensive linear mixture of experts in which each expert is a static convolution:

\(\sigma \left( \left( \alpha _{1}\cdot W_{1}+\cdots +\alpha _{n}\cdot W_{n}\right) *x\right) =\sigma \left( \alpha _{1}\cdot \left( W_{1}*x\right) +\cdots +\alpha _{n}\cdot \left( W_{n}*x\right) \right)\)

Expert linear mixed diagram.

Therefore, ACAT has the same capacity as a linear mixture of n experts, but it is computationally efficient because it only needs to calculate one expensive convolution. This formulation clarifies the nature of ACAT and links it to previous work on conditional computation and mixtures of experts. In ACAT, the per-example routing function is critical.

The routing function is designed to be computationally efficient, to distinguish input examples effectively, and to be easy to interpret. It consists of three steps: global average pooling, a fully connected layer, and Sigmoid activation. The routing weights are calculated as follows:

\(r(x)=\mathrm{Sigmoid}\left( \mathrm{GlobalAveragePool}(x)\,R\right)\)

For the input x, global average pooling is performed first, and the result is then right-multiplied by a matrix R, which maps the pooled features to n dimensions, one per expert, for the subsequent linear combination. Finally, the sigmoid squashes each weight into the [0, 1] interval. Different inputs x therefore produce different routing weight vectors.
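The routing and kernel-mixing steps described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names (`conv2d_valid`, `routing_weights`, `acat_conv`), the tensor shapes and the random weights are all hypothetical, the convolution is the cross-correlation used by deep-learning frameworks, and the activation \(\sigma\) is omitted because it would be applied identically to both sides of the equivalence.

```python
import numpy as np

def conv2d_valid(x, w):
    """Naive 'valid' cross-correlation. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    c_out, c_in, k, _ = w.shape
    h_out, w_out = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.zeros((c_out, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[:, i:i + k, j:j + k]                  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=3)   # sum over C_in, k, k
    return out

def routing_weights(x, R):
    """Global average pooling, right-multiplication by R, then sigmoid."""
    pooled = x.mean(axis=(1, 2))                  # (C_in,)
    return 1.0 / (1.0 + np.exp(-(pooled @ R)))    # (n,), each value in (0, 1)

def acat_conv(x, experts, R):
    """Mix the n expert kernels first, then run a single convolution."""
    alpha = routing_weights(x, R)
    mixed = np.tensordot(alpha, experts, axes=1)  # (C_out, C_in, k, k)
    return conv2d_valid(x, mixed)

# Hypothetical sizes: n experts, 3 input channels, 5 output channels, 3x3 kernels.
rng = np.random.default_rng(0)
n, c_in, c_out, k = 4, 3, 5, 3
x = rng.normal(size=(c_in, 8, 8))
experts = rng.normal(size=(n, c_out, c_in, k, k))
R = rng.normal(size=(c_in, n))

alpha = routing_weights(x, R)
one_conv = acat_conv(x, experts, R)                                     # one convolution
mixture = sum(a * conv2d_valid(x, w) for a, w in zip(alpha, experts))   # n convolutions
print(np.allclose(one_conv, mixture))
```

Because convolution is linear in the kernel, the single-convolution path and the n-convolution mixture agree up to floating-point error, which is why mixing kernels is the cheaper way to obtain the capacity of n experts.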

High-Dimensional Decoupling Technology

HDDT decomposes the standard \(3 \times 3\) convolution into two steps, deep (depthwise) convolution and point (pointwise) convolution, as shown in Fig. 6. The traditional \(3 \times 3\) convolution performs the channel and spatial calculations in one step; HDDT separates them.

In deep convolution, each input channel is convolved individually with its own convolution filter, so every channel is processed by a dedicated kernel. Point convolution then uses \(1 \times 1\) convolution kernels to form linear combinations of the output channels of the deep convolution. This process can be seen as remapping and fusing the channel dimension.

Standard convolution. Assume that the convolution kernel size is \(D_{k}\times D_{k}\) , the number of input channels is M , the number of output channels is N , and the output feature map size is \(D_{F}\times D_{F}\) . For the standard convolution, the number of parameters is \(D_{k}\times D_{k} \times M \times N\) and the amount of calculation is \(D_{k}\times D_{k} \times M \times N \times D_{F}\times D_{F}\) .

Deep convolution. Each kernel of the deep convolution has a single channel, and each input channel is convolved separately, so the output has the same number of channels as the input. That is, the number of input feature map channels = the number of convolution kernels = the number of output feature maps. The kernel size of deep convolution is \(D_{k}\times D_{k} \times 1\) , the number of convolution kernels is M , and each kernel is applied at \(D_{F}\times D_{F}\) positions. The number of parameters is \(D_{k}\times D_{k} \times M\) and the amount of calculation is \(D_{k}\times D_{k} \times M \times D_{F}\times D_{F}\) .

Pointwise convolution. Pointwise convolution leaves the W / H dimensions unchanged and changes only the number of channels. Because deep convolution produces exactly as many output feature maps as input channels, it cannot by itself increase the number of feature maps (a 3-channel input yields only 3 maps), and it does not mix information across channels, which may limit the effectiveness of the features. Pointwise Convolution (PWConv) addresses this: it is essentially a \(1\times 1\) convolution used for dimension elevation. The kernel size of pointwise convolution is \(1\times 1 \times M\) , the number of convolution kernels is N , and each kernel is applied at \(D_{F}\times D_{F}\) positions. The number of parameters is \(M\times N\) and the amount of calculation is \(M \times N \times D_{F}\times D_{F}\) .

HDDT consists of deep convolution and pointwise convolution. Deep convolution extracts spatial features, and pointwise convolution extracts channel features. HDDT groups the convolution along the feature dimension, performs an independent deep convolution on each channel, and aggregates all channels with a \(1\times 1\) convolution before output. The number of parameters is \(D_{k}\times D_{k} \times M + M \times N\) and the amount of calculation is \(D_{k}\times D_{k} \times M \times D_{F}\times D_{F} + M \times N \times D_{F}\times D_{F}\) .

Comparison between standard convolution and HDDT.

\(\frac{D_{k}\times D_{k} \times M \times D_{F}\times D_{F} + M \times N \times D_{F}\times D_{F}}{D_{k}\times D_{k} \times M \times N \times D_{F}\times D_{F}}=\frac{1}{N}+\frac{1}{D_{k}^{2}}\)

In general N is large, so \(\frac{1}{N}\) is negligible. \(D_{k}\) is the size of the convolution kernel; if \(D_{k}=3\) , then \(\frac{1}{{D_{k}^{2}}}=\frac{1}{9}\) . With the commonly used \(3\times 3\) kernel, the number of parameters and calculations using HDDT is therefore reduced to about one-ninth of the original.
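The parameter and calculation counts given in the text can be checked numerically. The sketch below simply evaluates those expressions; the function names and the layer sizes (64 input channels, 128 output channels, a 56 × 56 output map) are hypothetical values chosen for illustration.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Parameters and multiplications of a standard convolution."""
    params = d_k * d_k * m * n
    mults = params * d_f * d_f
    return params, mults

def hddt_cost(d_k, m, n, d_f):
    """Parameters and multiplications of deep (depthwise) plus pointwise convolution."""
    depthwise_params = d_k * d_k * m
    pointwise_params = m * n
    params = depthwise_params + pointwise_params
    mults = params * d_f * d_f
    return params, mults

# Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 56x56 output feature map.
d_k, m, n, d_f = 3, 64, 128, 56
std_params, std_mults = standard_conv_cost(d_k, m, n, d_f)
sep_params, sep_mults = hddt_cost(d_k, m, n, d_f)

ratio = sep_mults / std_mults
predicted = 1 / n + 1 / d_k ** 2   # closed-form ratio from the text
print(std_params, sep_params, ratio, predicted)
```

For this layer the standard convolution has 73728 parameters and the separable form 8768, and the measured ratio matches \(\frac{1}{N}+\frac{1}{D_{k}^{2}}\), which is slightly above one-ninth because the \(\frac{1}{N}\) term is small but not zero.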

In summary, the deep (depthwise) separable convolution based on high-dimensional decoupling technology decomposes the standard \(3\times 3\) convolution into two steps: deep convolution and point convolution. This decomposition can improve computational efficiency and make better use of model parameters and computing resources 40 .

When the standard convolution filter K of size \(W^{\prime }\times H^{\prime } \times M\times N\) is applied to the input feature mapping F of size \(W\times H \times M\) , an output feature mapping O of size \(D_{f}\times D_{f}\times N\) is obtained, where N is the number of filters. In standard convolution, each of the N filters is a tensor of size \(W^{\prime }\times H^{\prime } \times M\) . At every output position the filter is multiplied element by element with the corresponding \(W^{\prime }\times H^{\prime } \times M\) window of F , and the products are added, so each filter yields one feature map of size \(D_{f}\times D_{f}\) and the N filters together yield the \(D_{f}\times D_{f}\times N\) output.

In the high-dimensional decoupling technology, the above calculation is decomposed into two steps. The first step applies a \(3\times 3\) deep convolution K to each input channel. Specifically, for the input feature mapping F with a size of \(W\times H \times M\) , a separate \(3\times 3\) deep convolution kernel is convolved with each channel of the input feature mapping. In this way, M output feature maps of size \(W\times H\) are obtained.

The second step is point convolution, which creates linear combinations of the deep convolution outputs. Each \(1\times 1\) kernel of the point-by-point convolution \(\hat{K}\) linearly combines the M feature maps into one output feature map of size \(W\times H\) ; N such kernels give N output maps. This process fuses the channel dimension.
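The two steps can be written out directly in NumPy. This is a minimal sketch under assumptions, not the paper's code: the function names, the tensor shapes and the zero padding (used so that the output keeps the \(W\times H\) size stated in the text) are illustrative choices.

```python
import numpy as np

def depthwise_conv(x, k_dw):
    """Step 1. x: (M, H, W); k_dw: (M, 3, 3). One kernel per channel, zero padded."""
    m, h, w = x.shape
    kh, kw = k_dw.shape[1:]
    padded = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros((m, h, w))
    for i in range(h):
        for j in range(w):
            window = padded[:, i:i + kh, j:j + kw]          # (M, kh, kw)
            out[:, i, j] = (window * k_dw).sum(axis=(1, 2))  # no mixing across channels
    return out

def pointwise_conv(x, k_pw):
    """Step 2. x: (M, H, W); k_pw: (N, M). A 1x1 convolution that mixes channels."""
    return np.tensordot(k_pw, x, axes=1)                     # (N, H, W)

# Hypothetical sizes: M = 4 input channels, N = 6 output channels, 7x9 feature map.
rng = np.random.default_rng(1)
m, n, h, w = 4, 6, 7, 9
x = rng.normal(size=(m, h, w))
k_dw = rng.normal(size=(m, 3, 3))
k_pw = rng.normal(size=(n, m))

spatial = depthwise_conv(x, k_dw)    # M maps of size H x W
y = pointwise_conv(spatial, k_pw)    # N maps of size H x W
print(spatial.shape, y.shape)
```

The composition is equivalent to a standard convolution whose full kernel is the outer product `k_pw[:, :, None, None] * k_dw[None]`, which is why it needs only \(D_{k}\times D_{k} \times M + M \times N\) parameters.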

Deep convolution and point convolution play different roles in generating new features. Deep convolution captures the spatial relationships within an image, extracting details such as edges and textures. Applying it to each channel separately lets the network learn the spatial structure of every channel and extract rich information from it. Point convolution, in contrast, captures the dependencies between channels. Using \(1\times 1\) convolution kernels, it linearly combines the channels of the feature maps to achieve interaction and fusion between channels. This helps the network learn the correlation and relative importance of different channels, thereby generating new features with greater representational capacity.

Index curve analysis

Analysis of F1 value curve

Fig. 7 compares the F1 value curves of the YOLOv8 series algorithms in the RSIOD task. The F1 value is a comprehensive evaluation index: it is the harmonic mean of Precision and Recall and gives the two equal weight. It is calculated as follows.

\(F1=\frac{2\times \mathrm{Precision}\times \mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}}\)

The value of F1 is between 0 and 1, and the closer the value is to 1, the better the performance of the model is. The F1 value is suitable for the case of an unbalanced class distribution. When the performance of the classifier is quite different in positive and negative examples, the F1 value can better evaluate the performance of the model.
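As a small worked example of the harmonic mean, the sketch below computes Precision, Recall and F1 from detection counts. The function name and the counts (90 true positives, 10 false positives, 30 false negatives) are hypothetical and are not taken from the paper's experiments.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, Recall and their harmonic mean F1 from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts for one class at one confidence threshold.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(p, r, f1)
```

Here Precision is 0.9 and Recall is 0.75, and F1 comes out at about 0.818, closer to the lower of the two values, which is the property that makes the harmonic mean penalize an imbalance between Precision and Recall.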

F1 value curve.

In Fig. 7a, the YOLOv8 algorithm has its best F1 value of 0.93 at a Confidence value of 0.502; in Fig. 7b, the YOLOv8MobileOne algorithm has its best F1 value of 0.99 at a Confidence value of 0.518; in Fig. 7c, the YOLOv8SWinTransformer algorithm has its best F1 value of 0.92 at a Confidence value of 0.471; in Fig. 7d, the YOLOv8-GCE algorithm has its best F1 value of 0.99 at a Confidence value of 0.523; in Fig. 7e, the YOLOv8-GCOD algorithm has its best F1 value of 0.98 at a Confidence value of 0.627; in Fig. 7f, the YOLO-ACPHD algorithm has its best F1 value of 0.99 at a Confidence value of 0.531.

Precision value curve analysis

Fig. 8 compares the Precision value curves of the YOLOv8 series algorithms in the RSIOD task. Precision is the proportion of samples predicted as positive by the classifier that are actually positive. It measures the accuracy of the model on the samples that it predicts to be positive. The calculation formula is as follows.

\(\mathrm{Precision}=\frac{TP}{TP+FP}\)

Here TP is the number of true positives and FP is the number of false positives.

Precision value curve.

In Fig. 8a, YOLOv8 reaches its best Precision value of 0.99 at a Confidence value of 1. In Fig. 8b, YOLOv8MobileOne reaches 1 at 0.917. In Fig. 8c, YOLOv8SWinTransformer reaches 0.98 at 1. In Fig. 8d, YOLOv8-GCE reaches 1 at 0.911. In Fig. 8e, YOLOv8-GCOD reaches 1 at 0.924. In Fig. 8f, YOLO-ACPHD reaches 1 at 0.947.

Precision-Recall value curve analysis

Figure 9 compares the Precision-Recall curves of the YOLOv8 series algorithms on the RSIOD task. The Precision-Recall curve is an evaluation tool that considers Precision and Recall together. It plots Recall on the abscissa and Precision on the ordinate, so each point on the curve gives the Precision obtained at a particular Recall. The purpose of the curve is to visualize how the model's performance changes across classification thresholds. Ideally, the curve hugs the top-right corner, which indicates that Precision and Recall are both high.
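The construction of a Precision-Recall curve, and the area under it that is usually reported as Average Precision (AP), can be sketched as follows. The sketch assumes the all-point interpolation used by common detection toolkits, which may differ in detail from the authors' evaluation code. The detections are hypothetical.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve using all-point interpolation."""
    # Walk through detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: each point takes the best precision at equal or higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical detections for an image set containing 4 objects.
scores = [0.95, 0.9, 0.8, 0.6, 0.4, 0.3]
is_true_positive = [True, True, True, False, True, False]
ap = average_precision(scores, is_true_positive, num_ground_truth=4)
```

Averaging this quantity over all object classes gives the mean Average Precision (mAP). A curve that stays near Precision 1 until Recall 1 yields an AP close to 1.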

Precision-Recall value curve.

In Fig. 9a, YOLOv8 achieves a Precision-Recall value of 0.994 (mAP@0.5). In Fig. 9b, YOLOv8MobileOne achieves 0.992. In Fig. 9c, YOLOv8SWinTransformer achieves 0.945. In Fig. 9d, YOLOv8-GCE achieves 0.993. In Fig. 9e, YOLOv8-GCOD achieves 0.994. In Fig. 9f, YOLO-ACPHD achieves 0.994.
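The Precision-Recall values above are reported at mAP@0.5. Assuming the conventional meaning of that notation, a detection counts as correct only if its intersection over union (IoU) with a ground-truth box is at least 0.5. A minimal sketch of that matching criterion, with hypothetical axis-aligned boxes in (x1, y1, x2, y2) format:

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
loose_box = (5, 0, 15, 10)   # shifted by half the box width
tight_box = (2, 0, 12, 10)   # shifted by a fifth of the box width

is_match_loose = iou(ground_truth, loose_box) >= 0.5
is_match_tight = iou(ground_truth, tight_box) >= 0.5
```

Here the loosely placed box has an IoU of 1/3 and is rejected, while the tighter box has an IoU of 2/3 and is accepted.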

Recall value curve analysis

Figure 10 compares the Recall value curves of the YOLOv8 series algorithms on the RSIOD task. Recall is the proportion of actual positive samples that the classifier correctly predicts as positive. It measures how completely the model finds the positive samples. It is computed as follows, where TP and FN denote the numbers of true positives and false negatives.

\[ Recall = \frac{TP}{TP + FN} \]

Recall value curve.

In Fig. 10a, YOLOv8 reaches its best Recall value of 0.98 at a Confidence value of 0.0. In Fig. 10b, YOLOv8MobileOne reaches 1 at 0.0. In Fig. 10c, YOLOv8SWinTransformer reaches 0.98 at 0.0. In Fig. 10d, YOLOv8-GCE reaches 1 at 0.0. In Fig. 10e, YOLOv8-GCOD reaches 1 at 0.0. In Fig. 10f, YOLO-ACPHD reaches 1 at 0.0.
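Every model attains its best Recall at a Confidence value of 0.0 because Recall can only fall as the threshold rises: raising the threshold discards detections and never adds any. The sketch below demonstrates this on hypothetical detections. The data and threshold grid are illustrative only.

```python
def recall_at(threshold, scores, is_true_positive, num_ground_truth):
    """Recall when only detections scoring at least `threshold` are kept."""
    tp = sum(1 for s, ok in zip(scores, is_true_positive) if ok and s >= threshold)
    return tp / num_ground_truth


# Hypothetical detections for an image set containing 4 objects.
scores = [0.95, 0.9, 0.8, 0.6, 0.4, 0.3]
is_true_positive = [True, True, True, False, True, False]
thresholds = [0.0, 0.25, 0.5, 0.75, 1.0]

recalls = [recall_at(t, scores, is_true_positive, 4) for t in thresholds]
for t, r in zip(thresholds, recalls):
    print(f"confidence >= {t:.2f}: recall = {r:.2f}")
```

The informative part of a Recall curve is therefore how slowly it drops as the Confidence value increases, not where its maximum occurs.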

Comparative experiments

Figure 11 compares the mean Average Precision (mAP) of different OD models on the RSOD RSI data set. The dot plot clearly reflects the performance differences between models. The YOLO series models (YOLOv3, YOLOv4, YOLOv5s, YOLOv7 and YOLOv8) perform well on the RSOD task. In particular, adding the ACAT and HDDT modules to YOLOv5s and YOLOv7, respectively, significantly improves on the original models and yields higher mAP values. Among these, the YOLOv7-HDDT model reaches a mAP of 98.56 \(\%\) , which shows its strength in RSIOD. YOLO-ACPHD(ours) performs best on the RSOD task, with a mAP of 99.36 \(\%\) , a clear advantage over the other models. The remaining models (CBD-E, CornerNet, MFC and RetinaNet) also perform reasonably, but their mAP values are generally lower than those of the YOLO series, indicating that the YOLO series models generalize better and are more robust in RSIOD tasks.

Contrast experimental point-line diagram of RSOD RSI.

Table 1 details the comparative results of the YOLO series and other OD algorithms on the RSOD RSI data set. The algorithms are evaluated on four key metrics: mAP/ \(\%\) , Parameters/M, FLOPs/G and FPS. The table shows significant differences in performance between algorithms. In terms of mAP, YOLOv5s and its improved variants (ACAT, HDDT, SA, CSSGD), YOLOv7 and its improved variants (ACAT, HDDT), and YOLO-ACPHD(ours) all exceed 95 \(\%\) , with YOLO-ACPHD(ours) reaching the highest value of 99.36 \(\%\) . This shows that the proposed algorithm has good recognition ability in the OD task of RSI. In terms of Parameters/M and FLOPs/G, high precision usually comes with high computational cost, but YOLOv8, YOLOv5s, YOLOv7 and YOLO-ACPHD(ours) limit the growth in parameters and floating-point operations while maintaining high precision, showing better computational efficiency. In particular, YOLO-ACPHD(ours) has a relatively low number of parameters and floating-point operations while achieving the highest accuracy. FPS is an important indicator of the real-time performance of an algorithm. YOLOv8, YOLOv5s, YOLOv7 and YOLO-ACPHD(ours) all exceed 100 frames/s, which indicates good real-time performance in OD tasks of RSI. In particular, YOLO-ACPHD(ours) achieves the highest FPS value of 141.38 frames/s while maintaining high accuracy and low computational cost, providing an efficient and accurate solution for the OD task of RSI.

Ablation experiment

As shown in Fig. 12 , we conducted ablation experiments to evaluate the impact of each module on the performance of the YOLOv8 model on the RSOD RSI data set. Introducing HDDT significantly improves the YOLOv8 model, raising mAP from 95.06 \(\%\) to 99.20 \(\%\) . This improved version is named YOLOv8-HDDT. We then incorporated ACAT into YOLOv8-HDDT to obtain YOLO-ACPHD(ours). As the dot-line diagram in Fig. 12 shows, YOLO-ACPHD(ours) reaches a mAP of 99.36 \(\%\) , which confirms that the ACAT module further improves detection accuracy. Figure 12 shows how the mAP of YOLOv8 on the RSOD RSI data set changes as the modules are added one by one. These experiments verify that both HDDT and ACAT contribute to the accuracy of remote sensing OD.

Ablation experimental point-line diagram of RSOD RSI.

Table 2 details the performance of the different modules built on the YOLOv8 base model on the RSOD RSI data set. As the YOLOv8 model is progressively optimized, it improves on several key indicators. In terms of mAP/ \(\%\) , YOLOv8-HDDT improves significantly on the original YOLOv8 model, from 95.06 to 99.20 \(\%\) , showing the positive effect of the HDDT module on detection accuracy. The final YOLO-ACPHD(ours) raises the mAP further to 99.36 \(\%\) , indicating that the ACAT module makes detection more accurate. In terms of Parameters/M, YOLOv8-HDDT and YOLO-ACPHD(ours) have 5.40 M and 5.42 M parameters, respectively, fewer than the original YOLOv8 (5.92 M). The model therefore remains compact while its accuracy improves, which favours deployment in practical applications. In terms of FLOPs/G, YOLOv8-HDDT and YOLO-ACPHD(ours) reduce FLOPs from the original 13.77 G to 12.69 G and 12.47 G, respectively. Forward propagation therefore requires less computation, which helps the model run faster. In terms of FPS, YOLOv8-HDDT raises FPS from 126.50 to 137.21, and YOLO-ACPHD(ours) reaches 141.38, indicating that the model detects faster while maintaining high accuracy and low computational cost, and meets the needs of real-time detection in RSI.
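The size of these changes is easier to compare in relative terms. The short sketch below computes them from the figures quoted above for the original YOLOv8 and YOLO-ACPHD(ours). The dictionary keys and helper name are illustrative.

```python
def relative_change(before, after):
    """Signed relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Values quoted in the text for the RSOD RSI data set.
baseline = {"mAP": 95.06, "params_M": 5.92, "FLOPs_G": 13.77, "FPS": 126.50}
final = {"mAP": 99.36, "params_M": 5.42, "FLOPs_G": 12.47, "FPS": 141.38}

# mAP is already a percentage, so its gain is reported in percentage points.
map_gain_points = final["mAP"] - baseline["mAP"]
changes = {
    key: relative_change(baseline[key], final[key])
    for key in ("params_M", "FLOPs_G", "FPS")
}

print(f"mAP: {map_gain_points:+.2f} points")
for name, pct in changes.items():
    print(f"{name}: {pct:+.2f}%")
```

Relative to the baseline, this corresponds to a gain of 4.30 mAP points with roughly 8.4% fewer parameters, 9.4% fewer FLOPs and 11.8% higher FPS.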

Experimental results

RSOD RSI detection effect diagram.

Figure 13 shows the prediction results of the proposed YOLO-ACPHD model on the RSOD data set. Part (a) shows the ground-truth labels of each object in the data set, which serve as the reference for evaluating the algorithm. Part (b) shows the detections produced by the algorithm on the validation set. To demonstrate accuracy and reliability, we selected the aircraft class as the example, since it is the most challenging object and the hardest to detect completely. The 12 pairs of labels and detections show that YOLO-ACPHD is highly accurate in both object localization and shape recognition. Almost every aircraft is detected without deviation. This result demonstrates the performance of the algorithm on known data and, more importantly, indicates good generalization to unseen data. Whether on the training set or on new images, the algorithm detects and locates objects stably and accurately, which makes it valuable in practical applications.

In this paper, the YOLO-ACPHD algorithm model is proposed. By using adaptive condition-aware technology and HDDT, it addresses the challenges of multi-scale objects, dense object overlap and uneven data distribution in RSIOD. The experimental results on the RSOD data set show that the YOLO-ACPHD model has an F1 value of 0.99, a Precision value of 1, a Precision-Recall value of 0.994, a Recall value of 1, and a mAP value of 99.36 \(\%\) , the highest performance among the compared models. It is therefore an effective method for the RSOD RSI data set. Future research can further optimize the adaptive condition-aware technology to improve the detection of small objects, and refine HDDT to reduce computation and increase speed. In addition, network pruning, quantization and similar methods can be introduced to reduce model complexity, and other OD techniques and model structures can be explored to improve the accuracy and robustness of the algorithm.

Data availability

The RSOD data set is available in a public repository: https://github.com/RSIA-LIESMARS-WHU/RSOD-Dataset-

Acknowledgements

The author thanks Professor Wu for his experimental guidance and help.

This work has been supported by the Natural Science Foundation Key Project of Gansu Province under Grant No. 23JRRA860, the Inner Mongolia Key R and D and Achievement Transformation Project under Grant 2023YFSH0043 and 2023YFDZ0043, the Key Research and Development Project of Lanzhou Jiaotong University ( ZDYF2304 ) and the Key Talent Project of Gansu Province.

Author information

Authors and affiliations.

School of Electronic and Information Engineering, Lanzhou Jiaotong University, Lanzhou, 730070, China

Chenshuai Bai, Xiaofeng Bai & Kaijun Wu

Department of Mechanical and Electrical Engineering, Guangzhou City Polytechnic, Guangzhou, 510000, China

Contributions

Chenshuai Bai made significant contributions to the techniques, methods and research concepts of the article. Xiaofeng Bai made significant contributions to the design of the article. Kaijun Wu made significant contributions to the critical revision of the article. Yuanjie Ye made significant contributions to the data collection for the article. The final draft was read and approved by all authors.

Corresponding author

Correspondence to Yuanjie Ye.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ .

About this article

Cite this article.

Bai, C., Bai, X., Wu, K. et al. Adaptive condition-aware high-dimensional decoupling remote sensing image object detection algorithm. Sci Rep 14 , 20090 (2024). https://doi.org/10.1038/s41598-024-71001-5

Received: 20 March 2024

Accepted: 23 August 2024

Published: 29 August 2024

DOI : https://doi.org/10.1038/s41598-024-71001-5

Keywords

- Remote sensing image

- Object detection

- Condition awareness technology

Dense Small Object Detection Based on an Improved YOLOv7 Model

- 1. Introduction
- 2. Related Work
- 3. Proposed Method
- 3.1. More Focus on Small Object Detection Head
- 3.2. Multi-Scale Feature Extraction Module
- 3.3. SPD Conv Downsampling
- 3.4. ELAN Model Optimization
- 4. Experiments and Analysis of Results
- 4.1. Data Set
- 4.2. Experimental Settings
- 4.3. Evaluation Metrics
- 4.4. Experimental Analysis of Different Improvement Points
- 4.4.1. Improved Detection Head for YOLOv7
- 4.4.2. C3R2N Multi-Scale Feature Extraction Module
- 4.4.3. Introduce SPD Conv Downsampling Module
- 4.4.4. Adjusting the Number of Channels of the Feature Fusion Module
- 4.5. Ablation Experiments
- 4.6. Comparative Experiments
- 4.7. Visual Analysis
- 5. Conclusions
- Author Contributions
- Data Availability Statement
- Acknowledgments
- Conflicts of Interest

- Amit, Y.; Felzenszwalb, P.; Girshick, R. Object detection. In Computer Vision: A Reference Guide ; Springer: New York, NY, USA, 2021; pp. 875–883. [ Google Scholar ]

- Al Shibli, A.H.N.; Al-Harthi, R.A.A.; Palanisamy, R. Accurate Movement Detection of Artificially Intelligent Security Objects. Eur. J. Electr. Eng. Comput. Sci. 2023 , 7 , 49–53. [ Google Scholar ] [ CrossRef ]

- Altaher, A.W.; Hussein, A.H. Intelligent security system detects the hidden objects in the smart grid. Indones. J. Electr. Eng. Comput. Sci. (IJEECS) 2020 , 19 , 188–195. [ Google Scholar ] [ CrossRef ]

- Usamentiaga, R.; Lema, D.G.; Pedrayes, O.D.; Garcia, D.F. Automated surface defect detection in metals: A comparative review of object detection and semantic segmentation using deep learning. IEEE Trans. Ind. Appl. 2022 , 58 , 4203–4213. [ Google Scholar ] [ CrossRef ]

- Wang, X.; Jia, X.; Jiang, C.; Jiang, S. A wafer surface defect detection method built on generic object detection network. Digit. Signal Process. 2022 , 130 , 103718. [ Google Scholar ] [ CrossRef ]

- Feng, D.; Harakeh, A.; Waslander, S.L.; Dietmayer, K. A review and comparative study on probabilistic object detection in autonomous driving. IEEE Trans. Intell. Transp. Syst. 2021 , 23 , 9961–9980. [ Google Scholar ] [ CrossRef ]

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3d object detection for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2147–2156. [ Google Scholar ]

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote. Sens. 2016 , 117 , 11–28. [ Google Scholar ] [ CrossRef ]

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote. Sens. 2020 , 159 , 296–307. [ Google Scholar ] [ CrossRef ]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [ Google Scholar ]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [ Google Scholar ]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018 , arXiv:1804.02767. [ Google Scholar ]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020 , arXiv:2004.10934. [ Google Scholar ]

- Jocher, G. YOLOv5 by Ultralytics. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 25 August 2024).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022 , arXiv:2209.02976. [ Google Scholar ]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [ Google Scholar ]

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 25 August 2024).

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv 2024 , arXiv:2402.13616. [ Google Scholar ]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv 2024 , arXiv:2405.14458. [ Google Scholar ]

- Zhang, M.; Pang, K.; Gao, C.; Xin, M. Multi-scale aerial target detection based on densely connected inception ResNet. IEEE Access 2020 , 8 , 84867–84878. [ Google Scholar ] [ CrossRef ]

- Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The vision meets drone object detection in image challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019. [ Google Scholar ]

- Xu, Q.; Lin, R.; Yue, H.; Huang, H.; Yang, Y.; Yao, Z. Research on small target detection in driving scenarios based on improved yolo network. IEEE Access 2020 , 8 , 27574–27583. [ Google Scholar ] [ CrossRef ]

- Xu, D.; Wu, Y. Improved YOLO-V3 with DenseNet for multi-scale remote sensing target detection. Sensors 2020 , 20 , 4276. [ Google Scholar ] [ CrossRef ]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788. [ Google Scholar ]

- Wang, Z.Z.; Xie, K.; Zhang, X.Y.; Chen, H.Q.; Wen, C.; He, J.B. Small-object detection based on yolo and dense block via image super-resolution. IEEE Access 2021 , 9 , 56416–56429. [ Google Scholar ] [ CrossRef ]

- Zhang, X.; Feng, Y.; Zhang, S.; Wang, N.; Mei, S. Finding nonrigid tiny person with densely cropped and local attention object detector networks in low-altitude aerial images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2022 , 15 , 4371–4385. [ Google Scholar ] [ CrossRef ]

- Huang, M.; Zhang, Y.; Chen, Y. Small target detection model in aerial images based on TCA-YOLOv5m. IEEE Access 2022 , 11 , 3352–3366. [ Google Scholar ] [ CrossRef ]

- Zhao, H.; Zhang, H.; Zhao, Y. Yolov7-sea: Object detection of maritime uav images based on improved yolov7. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 February 2023; pp. 233–238. [ Google Scholar ]

- Bao, W. Remote-sensing Small-target Detection Based on Feature-dense Connection. J. Phys. Conf. Ser. 2023 , 2640 , 012009. [ Google Scholar ] [ CrossRef ]

- Sui, J.; Chen, D.; Zheng, X.; Wang, H. A new algorithm for small target detection from the perspective of unmanned aerial vehicles. IEEE Access 2024 , 12 , 29690–29697. [ Google Scholar ] [ CrossRef ]

- Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019 , 43 , 652–662. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Grenoble, France, 19–23 September 2022; Springer: Cham, Switzerland, 2023; pp. 443–459. [ Google Scholar ]

- Zhang, Z. Drone-YOLO: An efficient neural network method for target detection in drone images. Drones 2023 , 7 , 526. [ Google Scholar ] [ CrossRef ]

- Zhao, L.; Zhu, M. MS-YOLOv7: YOLOv7 based on multi-scale for object detection on UAV aerial photography. Drones 2023 , 7 , 188. [ Google Scholar ] [ CrossRef ]

| Parameters | Value |
|---|---|
| image size | 640 × 640 |
| epochs | 300 |
| warmup | 3 |
| batch size | 16 |
| optimizer | Adam |
| learning rate | 0.01 |
| momentum | 0.937 |
| weight decay | 0.0005 |
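The training parameters in the table can be collected into a single configuration object, which keeps them easy to log and reuse. The following is a minimal sketch, not the authors' training script: the class name `TrainConfig`, the helper `iterations_per_epoch`, and the training-set size of 6000 images are hypothetical, and reading "warmup 3" as three warmup epochs is an assumption.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class TrainConfig:
    """Values copied from the parameter table; field names are illustrative."""
    image_size: int = 640          # images are resized to 640 x 640
    epochs: int = 300
    warmup_epochs: int = 3         # assumption: the table's "warmup" is in epochs
    batch_size: int = 16
    optimizer: str = "Adam"
    learning_rate: float = 0.01
    momentum: float = 0.937
    weight_decay: float = 0.0005


def iterations_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of optimizer steps needed to see every image once."""
    return math.ceil(num_images / batch_size)


cfg = TrainConfig()
num_train_images = 6000  # hypothetical dataset size, not taken from the paper

steps_per_epoch = iterations_per_epoch(num_train_images, cfg.batch_size)
warmup_steps = cfg.warmup_epochs * steps_per_epoch
total_steps = cfg.epochs * steps_per_epoch

print(f"{steps_per_epoch} steps/epoch, {warmup_steps} warmup steps, {total_steps} total")
```

With a different dataset size only `num_train_images` changes; the schedule lengths follow from the table values.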

| Experiment | mAP(s)% | mAP(m)% | mAP(l)% | mAP0.5% | Parameters/M |
|---|---|---|---|---|---|
| 1 | 18.6 | 38.7 | 48.8 | 48.8 | 36.53 |
| 2 | 21.0 | 40.7 | 47.8 | 50.5 | 37.10 |
| 3 | 21.3 | 40.8 | 47.2 | 50.9 | 15.49 |
| 4 | 21.6 | 40.7 | 49.0 | 51.4 | 11.31 |

| Experiment | mAP0.5% | mAP0.5:0.95% | Parameters/M |
|---|---|---|---|
| 1 | 52.1 | 30.4 | 10.24 |
| 2 | 51.8 | 30.4 | 10.22 |
| 3 | 51.7 | 30.3 | 10.22 |
| 4 | 51.2 | 29.7 | 10.37 |
| 5 | 51.9 | 30.4 | 9.30 |
| 6 | 51.3 | 29.8 | 9.08 |
| 7 | 50.7 | 29.6 | 9.01 |
| Baseline | 51.4 | 30.0 | 11.31 |

| Experiment | mAP(s)% | mAP(m)% | mAP(l)% | mAP0.5% | Parameters/M |
|---|---|---|---|---|---|
| 1 | 23.0 | 43.3 | 46.8 | 53.8 | 10.10 |
| 2 | 22.9 | 42.3 | 46.5 | 53.4 | 10.65 |
| Baseline | 21.7 | 41.5 | 46.1 | 51.9 | 9.30 |

| Experiment | mAP(s)% | mAP(m)% | mAP(l)% | mAP0.5% | Parameters/M |
|---|---|---|---|---|---|
| Baseline | 23.0 | 43.3 | 46.8 | 53.8 | 10.10 |
| 1 | 24.2 | 43.3 | 49.6 | 55.1 | 10.89 |

| | Method | mAP0.5% | mAP0.5:0.95% | Parameters/M |
|---|---|---|---|---|
| A | YOLOv7 | 48.8 | 27.6 | 36.53 |
| B | A+P2 | 50.5 | 29.9 | 37.10 |
| C | B-P5 | 51.4 | 30.0 | 11.31 |
| D | C+C3R2N | 51.9 | 30.3 | 9.30 |
| E | D+SPD Conv | 53.8 | 31.8 | 10.10 |
| F | E+Channel(Ours) | 55.1 | 32.5 | 10.89 |
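The cumulative effect of the ablation steps A to F can be checked with a few lines of arithmetic. The sketch below only re-uses the numbers in the table; the two accuracy columns are read here as mAP at IoU 0.5 and mAP at IoU 0.5:0.95, which is an interpretation of the column headers, and the variable and function names are illustrative.

```python
# Rows copied from the ablation table:
# (label, method, mAP@0.5 %, mAP@0.5:0.95 %, parameters in millions)
ablation = [
    ("A", "YOLOv7",          48.8, 27.6, 36.53),
    ("B", "A+P2",            50.5, 29.9, 37.10),
    ("C", "B-P5",            51.4, 30.0, 11.31),
    ("D", "C+C3R2N",         51.9, 30.3, 9.30),
    ("E", "D+SPD Conv",      53.8, 31.8, 10.10),
    ("F", "E+Channel(Ours)", 55.1, 32.5, 10.89),
]


def relative_change(new: float, old: float) -> float:
    """Signed relative change of `new` with respect to `old`."""
    return (new - old) / old


baseline, final = ablation[0], ablation[-1]

map50_gain = final[2] - baseline[2]                       # percentage points
map5095_gain = final[3] - baseline[3]                     # percentage points
param_reduction = -relative_change(final[4], baseline[4])  # fraction of parameters removed

# Per-step change in mAP@0.5, to see which modification contributes most.
step_gains = [
    (cur[0], round(cur[2] - prev[2], 1))
    for prev, cur in zip(ablation, ablation[1:])
]

print(f"mAP@0.5 gain: {map50_gain:.1f} points")
print(f"mAP@0.5:0.95 gain: {map5095_gain:.1f} points")
print(f"parameter reduction: {param_reduction:.1%}")
print(step_gains)
```

Read this way, the final model gains 6.3 points of mAP at IoU 0.5 over the YOLOv7 baseline while using roughly 70% fewer parameters, with the largest single-step gain coming from step E.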

| Method | mAP0.5% | mAP0.5:0.95% | Parameters/M |
|---|---|---|---|
| YOLOv5s | 33.1 | 17.9 | 7.04 |
| YOLOv5x | 39.9 | 23.5 | 86.23 |
| YOLOv6s | 37.1 | 22.0 | 16.30 |
| YOLOv6l | 41.0 | 25.0 | 51.98 |
| YOLOv7m | 48.8 | 27.6 | 36.53 |
| YOLOv7x | 50.0 | 28.5 | 70.84 |
| YOLOv8s | 39.5 | 23.5 | 11.13 |
| YOLOv8x | 45.4 | 27.9 | 68.13 |
| YOLOv9s | 41.0 | 24.8 | 9.60 |
| YOLOv9e | 47.5 | 29.1 | 57.72 |
| YOLOv10s | 39.0 | 23.3 | 8.07 |
| YOLOv10x | 45.7 | 28.2 | 31.67 |
| Drone-YOLOs | 44.3 | 27.0 | 10.9 |
| Drone-YOLOl | 51.3 | 31.9 | 76.2 |
| MS-YOLO | 53.1 | 31.3 | 79.7 |
| Ours | 55.1 | 32.5 | 10.89 |

Chen, X.; Deng, L.; Hu, C.; Xie, T.; Wang, C. Dense Small Object Detection Based on an Improved YOLOv7 Model. Appl. Sci. 2024 , 14 , 7665. https://doi.org/10.3390/app14177665

- Open access

- Published: 17 October 2020

Object detection in real time based on improved single shot multi-box detector algorithm

Ashwani Kumar, Zuopeng Justin Zhang & Hongbo Lyu

EURASIP Journal on Wireless Communications and Networking, volume 2020, Article number: 204 (2020)

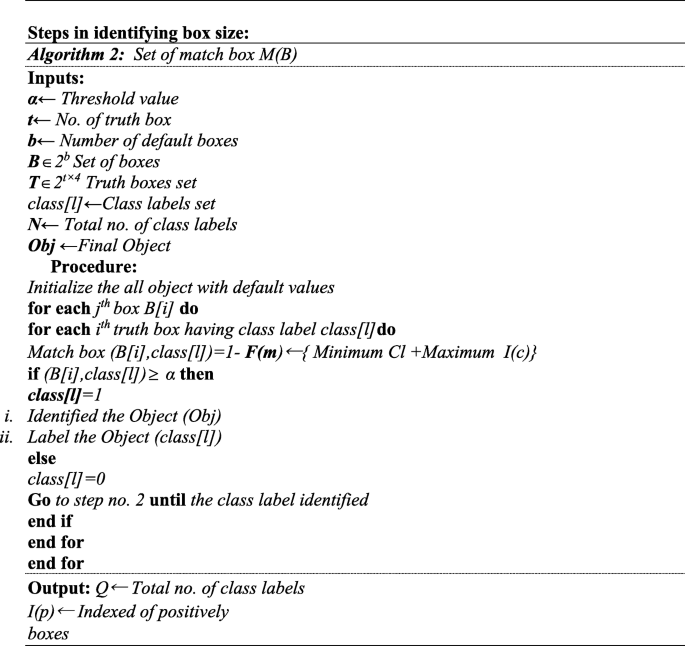

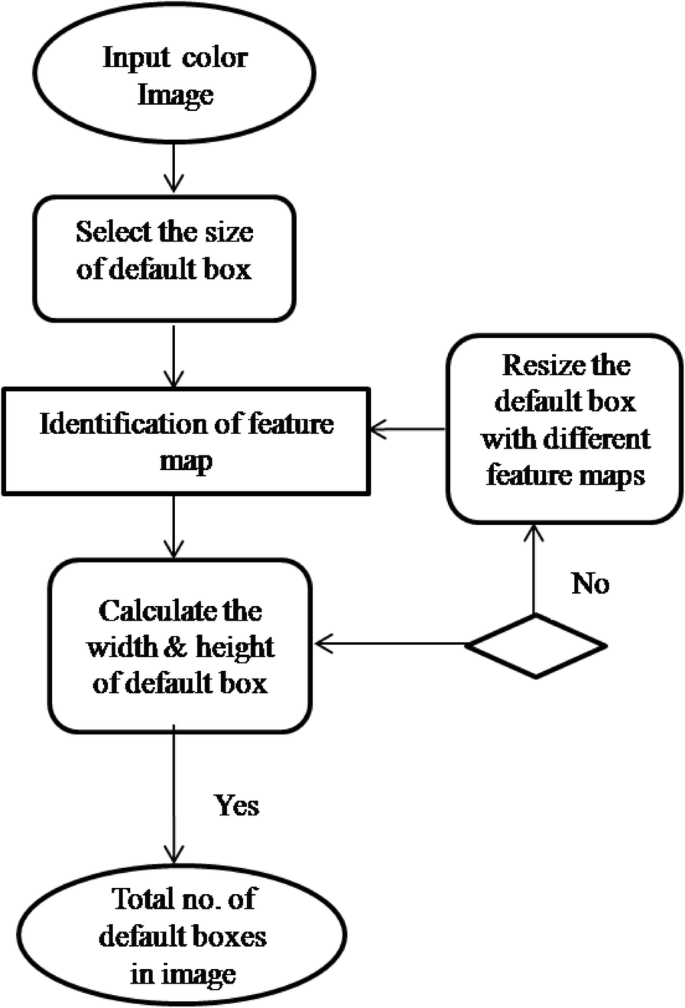

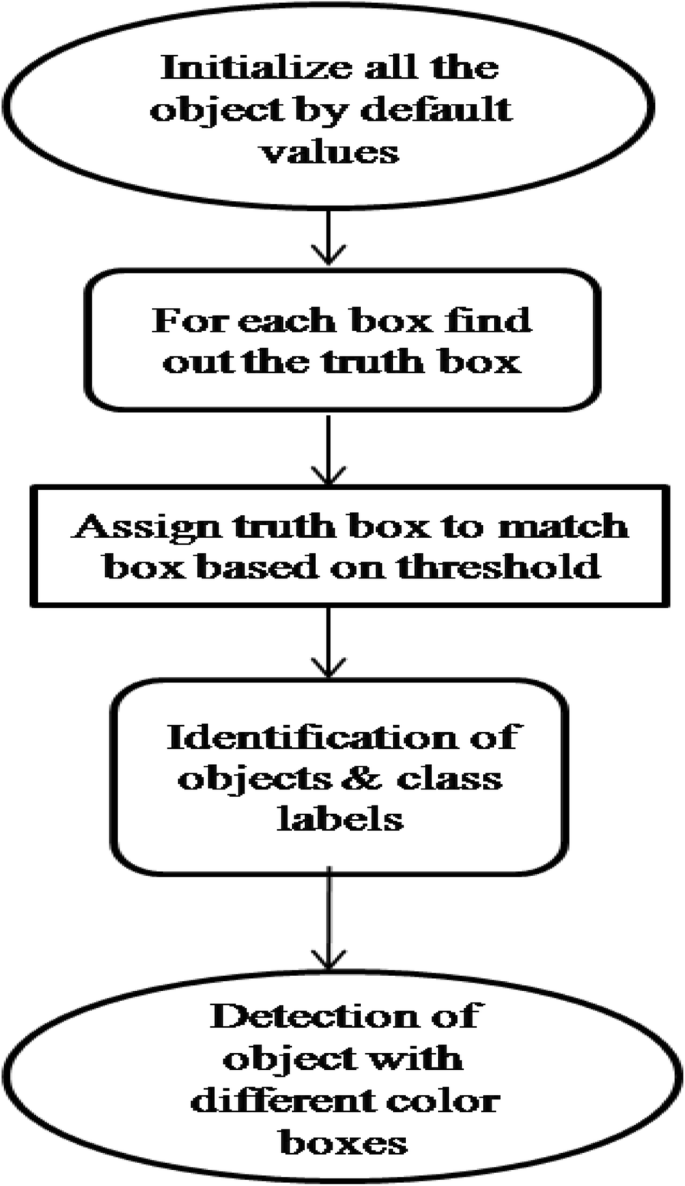

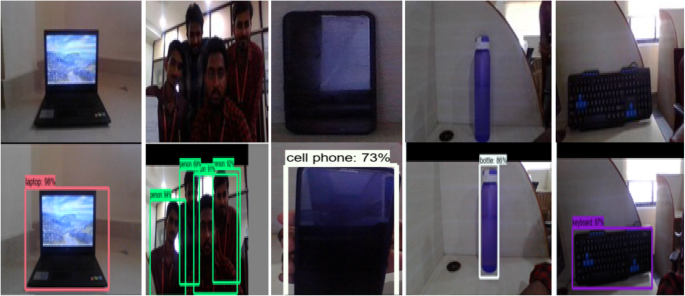

At present, the fastest algorithm that detects objects in an image using a single convolutional network is the single shot multi-box detector (SSD). This paper studies object detection techniques that detect objects in real time on any device running the proposed model, in any environment. We increase the classification accuracy of object detection by improving the SSD algorithm while keeping its speed constant. The improvements are made in the convolutional layers, which use depth-wise separable convolution together with spatially separable convolution; we refer to the resulting design as a multilayer convolutional neural network. The proposed method uses this multilayer convolutional neural network to build a system model that classifies the given objects into one of the defined classes. The scheme then takes multiple images and detects the objects in them, labeling each with its class label. To speed up computation, the proposed algorithm is applied together with the multilayer convolutional neural network, which uses a larger number of default boxes and yields more accurate detection. Detection performance is assessed with several measures, including the loss function, frames per second (FPS), mean average precision (mAP), and aspect ratio. Experimental results confirm that the proposed improved SSD algorithm achieves high accuracy.
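To see why depth-wise separable convolution is attractive for a speed-constrained detector, it helps to count weights. The sketch below compares a standard convolution with its depth-wise separable factorization (a per-channel spatial filter followed by a 1 × 1 pointwise convolution). It is a generic illustration rather than the layer configuration used in the paper: the function names and the example sizes (3 × 3 kernel, 256 input and output channels) are assumptions, and bias terms are ignored.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard kernel x kernel convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depth-wise conv followed by a 1x1 pointwise conv (bias ignored)."""
    depthwise = kernel * kernel * c_in   # one kernel x kernel filter per input channel
    pointwise = c_in * c_out             # 1x1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
kernel, c_in, c_out = 3, 256, 256

standard = standard_conv_params(kernel, c_in, c_out)
separable = depthwise_separable_params(kernel, c_in, c_out)
ratio = standard / separable

print(f"standard:  {standard:,} weights")
print(f"separable: {separable:,} weights")
print(f"reduction: {ratio:.2f}x")
```

For these sizes the factorized layer needs 67,840 weights instead of 589,824, a reduction of roughly 8.7 times; the saving grows with the kernel size and the number of output channels.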

1 Introduction

The information age has witnessed the rapid development of wireless network technology, which has attracted the attention of researchers and practitioners because of characteristics such as its flexible structure and efficiency. As wireless network technology continues to evolve, its technical capabilities have brought great convenience to people’s lives and work. Wireless networks have gradually become the mainstream of people’s online life. At the same time, the advent of 5G networks will enable further development and more advanced applications of wireless network technology. Future generations of wireless networks will provide strong support for related applications such as the Internet of Things (IoT) and virtual reality (VR). Many of these applications connect to each other and transmit information within networks based on the detection of specific target objects. To achieve comprehensive network connections between people and people, things and people, and things and things, one of the key tasks of future applications is to identify targets in real time in wireless networks [1].

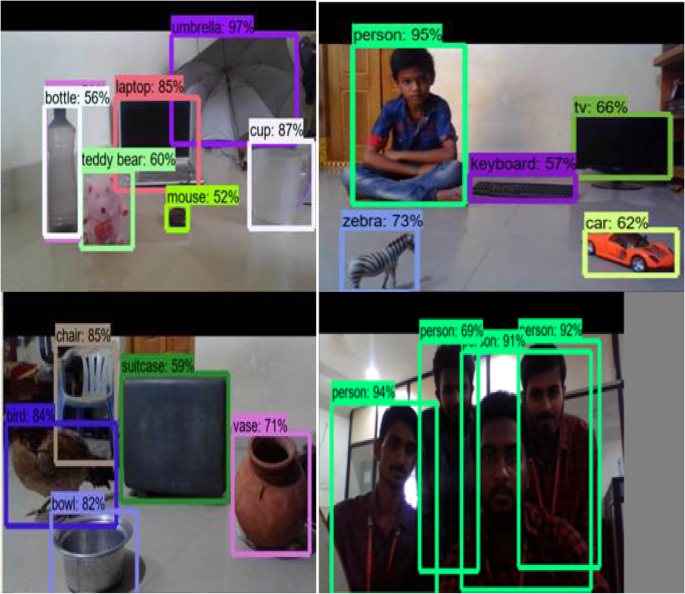

Object detection is the task of identifying each object in a picture or scene with the help of a computer. It is one of the most important problems in computer vision for wireless networks. It is the basis of complex vision tasks such as target tracking and scene understanding and is widely used in wireless networks. The task is to determine whether an image contains objects belonging to the specified categories and, if it does, to identify the category and location of each object. Traditional object detection algorithms are mainly devoted to detecting a few types of targets, as in pedestrian detection [2] and infrared target detection [3]. With recent advances in deep learning [4], especially the appearance of deep convolutional neural networks (CNNs), object detection algorithms have made breakthrough progress. Three methods widely adopted in this field are You Only Look Once (YOLO), the single shot multi-box detector (SSD), and the faster region-based CNN (F-RCNN) [5].

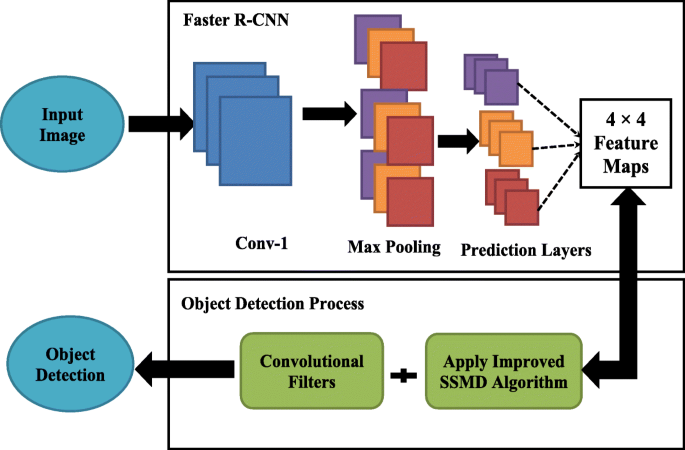

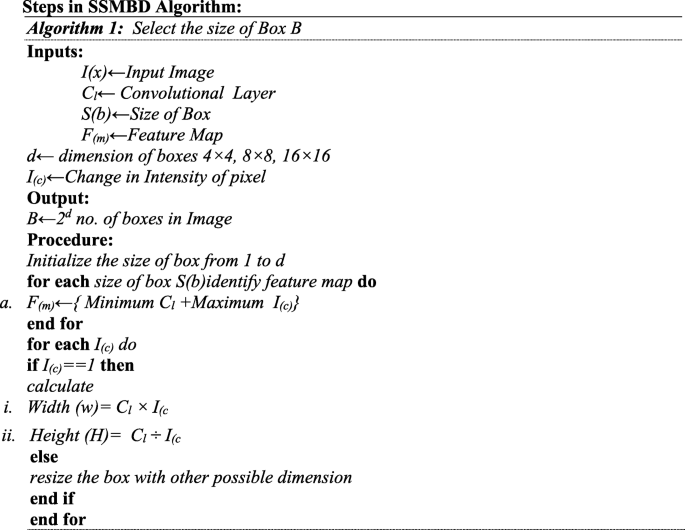

However, with the arrival of 5G, the characteristics of wireless networks, such as massive data, service evolution, data diversification, and uneven spatial-temporal distribution of data, pose severe challenges to object detection in a real-time environment. In addition, real-time object detection needs to run on any device and in any environment. To address these challenges, this paper proposes an object detection technique that detects objects in real time with a model that can be executed on any device in any environment. Specifically, the proposed method applies convolutional neural networks to develop a model consisting of multiple layers that classifies the given objects into several defined classes. Building on recent advances in deep learning for image processing, the proposed scheme then takes multiple images and detects the objects in them, labeling each with its class label. These images can come from videos fed into the model, and training continues until the error rate is reduced to an acceptable level. To speed up the computational performance of the object detection technique, we use an improved single shot multi-box detector (SSD) algorithm along with the faster region convolutional neural network. We also conduct experiments to check the accuracy of the proposed method using different measures, including the loss function, mean average precision (mAP), and frames per second. The experimental results demonstrate that the proposed model performs well in accurately detecting objects for real-time applications.
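Frames per second, one of the measures named above, is usually obtained by timing the detector over a sequence of frames after a short warmup. The following is a minimal sketch of such a measurement, not the evaluation code used in the paper: `measure_fps` and `dummy_detect` are hypothetical names, and the dummy detector simply sleeps for about a millisecond in place of a real model.

```python
import time
from typing import Callable, Sequence


def measure_fps(detect: Callable, frames: Sequence, warmup: int = 2) -> float:
    """Average frames per second of `detect` over `frames`.

    A few warmup calls are made first so that one-time setup costs
    (for example, lazy initialization) do not distort the timing.
    """
    for frame in frames[:warmup]:
        detect(frame)

    start = time.perf_counter()
    for frame in frames:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def dummy_detect(frame):
    """Hypothetical stand-in for a detector; returns no detections."""
    time.sleep(0.001)  # simulate roughly 1 ms of inference time
    return []


frames = list(range(20))  # placeholders for decoded video frames
fps = measure_fps(dummy_detect, frames)
print(f"{fps:.1f} FPS")
```

In practice the timed call should include every step that runs per frame (preprocessing, inference, and post-processing such as non-maximum suppression), since leaving any of them out overstates the achievable frame rate.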

Specifically, this research contributes to the existing literature by improving the accuracy of the SSD algorithm for detecting smaller objects. The SSD algorithm works well for large objects but is less accurate for smaller ones. Hence, we modify the SSD algorithm to achieve acceptable accuracy on smaller objects. The images or scenes are taken from web cameras, and we use the Pascal visual object classes (VOC) and common objects in context (COCO) datasets to carry out experiments. We also capture object detection (OD) datasets at our center for image processing lab. We use different libraries to build the network, together with TensorFlow-GPU 1.5. The experimental setup consists of the TensorFlow directory, the SSD MobilenetV1 FPN Feature Extractor, the TensorFlow Object Detection API, and an Anaconda virtual environment. This setup enables us to perform real-time object detection more effectively.

The rest of this paper is organized as follows. The next section summarizes related work with a focus on existing object detection techniques. The third section discusses the improved SSD algorithm. The fourth section presents the experimental results. The fifth section provides discussion and analysis, limitations, and future research directions. The final section concludes the paper.

2 Related work

2.1 Computer vision detection

In 2012, Krizhevsky et al. [6] used the deep CNN AlexNet to win the ILSVRC 2012 image classification task, outperforming traditional algorithms. Scholars then began to study the application of deep CNNs to object detection. They used AlexNet to construct algorithms such as R-CNN [7, 8, 9], YOLO [5], SSD [10], and others, which led to a surge of research on computer vision detection.

Girshick et al. [8] proposed R-CNN, which successfully combines region proposals with CNNs and improves mean average precision (mAP) by more than 30%. The next year, Girshick [11] introduced a new algorithm, Fast R-CNN, which builds on spatial pyramid pooling networks, but it had a bottleneck in region proposal computation. To overcome this disadvantage, Ren et al. [12] introduced the Region Proposal Network (RPN), which shares full-image convolutional features with the detection network. Cao et al. [13] proposed a rotation-invariant faster R-CNN target detection algorithm. By adding regularization constraints to the objective function of the model, it strengthens the rotation invariance of the CNN features of the target, and the model improved accuracy by an average of 2.4%. Dai et al. [14] proposed position-sensitive score maps to address the dilemma between translation invariance in image classification and translation variance in object detection, and their detector ran 2.5–20 times faster than its F-RCNN counterpart. Lin et al. [15] developed a top-down architecture with lateral connections, called the Feature Pyramid Network (FPN), for building high-level semantic feature maps at all scales. All these algorithms made substantial progress on object detection. However, they still have shortcomings in accuracy and speed for wireless network object detection applications.
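The top-down pathway with lateral connections described for FPN can be illustrated with a small NumPy sketch. It is a simplified illustration under stated assumptions, not the implementation from [15]: the 1 × 1 lateral convolution is written as a channel projection, the smoothing convolutions normally applied after merging are omitted, each backbone level is assumed to have exactly half the resolution of the previous one, and all function names, weights, and feature-map sizes are hypothetical.

```python
import numpy as np


def lateral_1x1(feature: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution as a channel projection: (C_in, H, W) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", weight, feature)


def upsample_nearest_2x(feature: np.ndarray) -> np.ndarray:
    """Nearest-neighbour upsampling by a factor of 2 along height and width."""
    return feature.repeat(2, axis=1).repeat(2, axis=2)


def fpn_top_down(backbone_features, lateral_weights):
    """Merge backbone features (ordered fine -> coarse) into a feature pyramid.

    The coarsest level is projected directly; every finer level is the sum of
    its own lateral projection and the upsampled level above it.
    """
    laterals = [lateral_1x1(f, w) for f, w in zip(backbone_features, lateral_weights)]
    outputs = [laterals[-1]]
    for lateral in reversed(laterals[:-1]):
        merged = lateral + upsample_nearest_2x(outputs[0])
        outputs.insert(0, merged)
    return outputs


# Hypothetical backbone outputs with 8, 16, and 32 channels.
rng = np.random.default_rng(0)
c3 = rng.standard_normal((8, 16, 16))
c4 = rng.standard_normal((16, 8, 8))
c5 = rng.standard_normal((32, 4, 4))

# Lateral projections map every level to a common width of 4 channels.
weights = [rng.standard_normal((4, c)) for c in (8, 16, 32)]

pyramid = fpn_top_down([c3, c4, c5], weights)
print([p.shape for p in pyramid])
```

Every output level ends up with the same channel width, so a single detection head can be applied at all scales, while the finer levels inherit semantic information from the coarser ones through the upsampled sum.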