Recent Advances in Robotics and Intelligent Robots Applications

List of Contributions

- Bai, X.W.; Kong, D.Y.; Wang, Q.; Yu, X.H.; Xie, X.X. Bionic Design of a Miniature Jumping Robot. Appl. Sci. 2023, 13, 4534. https://doi.org/10.3390/app13074534.

- Yang, M.Y.; Xu, L.; Tan, X.; Shen, H.H. A Method Based on Blackbody to Estimate Actual Radiation of Measured Cooperative Target Using an Infrared Thermal Imager. Appl. Sci. 2023, 13, 4832. https://doi.org/10.3390/app13084832.

- Dai, S.; Song, K.F.; Wang, Y.L.; Zhang, P.J. Two-Dimensional Space Turntable Pitch Axis Trajectory Prediction Method Based on Sun Vector and CNN-LSTM Model. Appl. Sci. 2023, 13, 4939. https://doi.org/10.3390/app13084939.

- Gao, L.Y.; Xiao, S.L.; Hu, C.H.; Yan, Y. Hyperspectral Image Classification Based on Fusion of Convolutional Neural Network and Graph Network. Appl. Sci. 2023, 13, 7143. https://doi.org/10.3390/app13127143.

- Yang, T.; Xu, F.; Zeng, S.; Zhao, S.J.; Liu, Y.W.; Wang, Y.B. A Novel Constant Damping and High Stiffness Control Method for Flexible Space Manipulators Using Luenberger State Observer. Appl. Sci. 2023, 13, 7954. https://doi.org/10.3390/app13137954.

- Ma, Z.L.; Zhao, Q.L.; Che, X.; Qi, X.D.; Li, W.X.; Wang, S.X. An Image Denoising Method for a Visible Light Camera in a Complex Sky-Based Background. Appl. Sci. 2023, 13, 8484. https://doi.org/10.3390/app13148484.

- Liu, L.D.; Long, Y.J.; Li, G.N.; Nie, T.; Zhang, C.C.; He, B. Fast and Accurate Visual Tracking with Group Convolution and Pixel-Level Correlation. Appl. Sci. 2023, 13, 9746. https://doi.org/10.3390/app13179746.

- Kee, E.; Chong, J.J.; Choong, Z.J.; Lau, M. Development of Smart and Lean Pick-and-Place System Using EfficientDet-Lite for Custom Dataset. Appl. Sci. 2023, 13, 11131. https://doi.org/10.3390/app132011131.

- Li, Y.F.; Wang, Q.H.; Liu, Q. Developing a Static Kinematic Model for Continuum Robots Using Dual Quaternions for Efficient Attitude and Trajectory Planning. Appl. Sci. 2023, 13, 11289. https://doi.org/10.3390/app132011289.

- Yu, J.; Zhang, Y.; Qi, B.; Bai, X.T.; Wu, W.; Liu, H.X. Analysis of the Slanted-Edge Measurement Method for the Modulation Transfer Function of Remote Sensing Cameras. Appl. Sci. 2023, 13, 13191. https://doi.org/10.3390/app132413191.

- Jia, L.F.; Zeng, S.; Feng, L.; Lv, B.H.; Yu, Z.Y.; Huang, Y.P. Global Time-Varying Path Planning Method Based on Tunable Bezier Curves. Appl. Sci. 2023, 13, 13334. https://doi.org/10.3390/app132413334.

- Lin, H.Y.; Quan, P.K.; Liang, Z.; Wei, D.B.; Di, S.C. Low-Cost Data-Driven Robot Collision Localization Using a Sparse Modular Point Matrix. Appl. Sci. 2024, 14, 2131. https://doi.org/10.3390/app14052131.

- Cai, R.G.; Li, X. Path Planning Method for Manipulators Based on Improved Twin Delayed Deep Deterministic Policy Gradient and RRT*. Appl. Sci. 2024, 14, 2765. https://doi.org/10.3390/app14072765.

- Muñoz-Barron, B.; Sandoval-Castro, X.Y.; Castillo-Castaneda, E.; Laribi, M.A. Characterization of a Rectangular-Cut Kirigami Pattern for Soft Material Tuning. Appl. Sci. 2024, 14, 3223. https://doi.org/10.3390/app14083223.

Song, Q.; Zhao, Q. Recent Advances in Robotics and Intelligent Robots Applications. Appl. Sci. 2024, 14, 4279. https://doi.org/10.3390/app14104279

IEEE/CAA Journal of Automatica Sinica

Y. Tong, H. Liu, and Z. Zhang, “Advancements in humanoid robots: A comprehensive review and future prospects,” IEEE/CAA J. Autom. Sinica, vol. 11, no. 2, pp. 301–328, Feb. 2024.

Advancements in Humanoid Robots: A Comprehensive Review and Future Prospects

DOI: 10.1109/jas.2023.124140

- Yuchuang Tong

- Haotian Liu

- Zhengtao Zhang

Yuchuang Tong (Member, IEEE) received the Ph.D. degree in mechatronic engineering from the State Key Laboratory of Robotics, Shenyang Institute of Automation (SIA), Chinese Academy of Sciences (CAS), in 2022. She is currently an Assistant Professor with the Institute of Automation, Chinese Academy of Sciences. Her research interests include humanoid robots, robot control, and human-robot interaction. Dr. Tong has authored more than ten publications in journals and conference proceedings in these areas. She received the Best Paper Award at the 2020 International Conference on Robotics and Rehabilitation Intelligence, the Dean’s Award for Excellence of CAS, and the CAS Outstanding Doctoral Dissertation.

Haotian Liu received the B.Sc. degree in traffic equipment and control engineering from Central South University in 2021. He is currently a Ph.D. candidate in control science and control engineering at the CAS Engineering Laboratory for Industrial Vision and Intelligent Equipment Technology, Institute of Automation, Chinese Academy of Sciences (IACAS), and the University of Chinese Academy of Sciences (UCAS). His research interests include robotics, intelligent control, and machine learning.

Zhengtao Zhang (Member, IEEE) received the B.Sc. degree in automation from the China University of Petroleum in 2004, the M.Sc. degree in detection technology and automatic equipment from the Beijing Institute of Technology in 2007, and the Ph.D. degree in control science and engineering from the Institute of Automation, Chinese Academy of Sciences, in 2010. He is currently a Professor with the CAS Engineering Laboratory for Industrial Vision and Intelligent Equipment Technology, IACAS. His research interests include industrial vision inspection and intelligent robotics.

- Corresponding authors: Yuchuang Tong; Zhengtao Zhang

- Accepted Date: 2023-11-26

This paper provides a comprehensive review of the current status, advancements, and future prospects of humanoid robots, highlighting their significance in driving the evolution of next-generation industries. It analyzes a range of research efforts and key technologies, spanning ontology (mechanical body) structure, control and decision-making, and perception and interaction, to present a holistic overview of the current state of humanoid robot research. It then identifies emerging challenges in the field. These include the need for a deeper understanding of biological motion mechanisms, improved structural design, better use of materials, advanced drive and control methods, and more efficient energy utilization. The integration of bionics, brain-inspired intelligence, mechanics, and control is highlighted as a promising direction for developing advanced humanoid robotic systems. The review is intended to guide researchers in the field and to support the continued development and application of humanoid robots across diverse domains.

Keywords:

- future trends and challenges

- humanoid robots

- human-robot interaction

- key technologies

- potential applications


| [361] | , vol. 90, pp. 308–314, Jan. 2019. doi: |

| [362] | , vol. 25, no. 10, p. 10LT01, Sept. 2016. doi: |

| [363] | , vol. 5, no. 3, pp. 4345–4351, Jul. 2020. doi: |

| [364] | , vol. 5, no. 7, pp. 2190–2208, Jul. 2022. doi: |

| [365] | , vol. 8, no. 8, pp. 5172–5179, Aug. 2023. doi: |

| [366] | , 2023. DOI: |

| [367] | , vol. 27, no. 3, pp. 401–410, Jun. 2011. doi: |

| [368] | , vol. 30, pp. 262–272, Jan. 2014. doi: |

| [369] | , vol. 48, pp. 56–66, May 2018. doi: |

| [370] | , , , vol. 24, no. 5, pp. 294–299, May 2021. doi: |


Corresponding author: Chen Bin, [email protected]

School of Materials Science and Engineering, Shenyang University of Chemical Technology, Shenyang 110142

Figures (7) / Tables (5)


- The current state, advancements and future prospects of humanoid robots are outlined

- Fundamental techniques including structure, control, learning and perception are investigated

- This paper highlights the potential applications of humanoid robots

- This paper outlines future trends and challenges in humanoid robot research

- Copyright © 2022 IEEE/CAA Journal of Automatica Sinica


- Figure 1. Historical progression of humanoid robots.

- Figure 2. The mapping knowledge domain of humanoid robots. (a) Co-citation analysis; (b) Country and institution analysis; (c) Cluster analysis of keywords.

- Figure 3. The number of papers varies with each year.

- Figure 4. Research status of humanoid robots.

- Figure 5. Comparison of child-size and adult-size humanoid robots.

- Figure 6. Potential applications of humanoid robots.

- Figure 7. Key technologies of humanoid robots.

Robotics and artificial intelligence

Intelligent machines could shape the future of science and society.

Updated 25 July 2024

Image credit: Peter Crowther

At the end of the twentieth century, computing was transformed from the preserve of laboratories and industry to a ubiquitous part of everyday life. We are now living through the early stages of a similarly rapid revolution in robotics and artificial intelligence — and the effect on society could be just as enormous.

This collection will be updated throughout 2024, with stories from journalists and research from across the Nature Portfolio journals. Check back throughout the year for the latest additions, or sign up to Nature Briefing: AI and Robotics to receive weekly email updates on this collection and other goings-on in AI and robotics.

Original journalism from Nature.

LATEST FEATURE

AI is vulnerable to attack. Can it ever be used safely?

The models that underpin artificial-intelligence systems such as ChatGPT can be subject to attacks that elicit harmful behaviour. Making them safe will not be easy. By Simon Makin

25 July 2024

Are robots the solution to the crisis in older-person care?

Social robots that promise companionship and stimulation for older people and those with dementia are attracting investment, but some question their benefits. By Tammy Worth

25 April 2024

Robot, repair thyself: laying the foundations for self-healing machines

Advances in materials science and sensing could deliver robots that can mend themselves and feel pain. By Simon Makin

29 February 2024

This cyborg cockroach could be the future of earthquake search and rescue

From drivable bionic animals to machines made from muscle, biohybrid robots are on their way to a variety of uses. By Liam Drew

7 December 2023

A test of artificial intelligence

With debate raging over the abilities of modern AI systems, scientists are struggling to effectively assess machine intelligence. By Michael Eisenstein

14 September 2023

How robots can learn to follow a moral code

Ethical artificial intelligence aims to impart human values on machine-learning systems. By Neil Savage

26 October 2023

Robots need better batteries

As mobile machines travel further from the grid, they'll need lightweight and efficient power sources. By Jeff Hecht

29 June 2023

Synthetic data could be better than real data

Machine-generated data sets could improve privacy and representation in artificial intelligence, if researchers can find the right balance between accuracy and fakery. By Neil Savage

27 April 2023

Why artificial intelligence needs to understand consequences

A machine with a grasp of cause and effect could learn more like a human, through imagination and regret. By Neil Savage

24 February 2023

Abandoned: The human cost of neurotechnology failure

When the makers of electronic implants abandon their projects, people who rely on the devices have everything to lose. By Liam Drew

6 December 2022

Bioinspired robots walk, swim, slither and fly

Engineers look to nature for ideas on how to make robots move through the world. By Neil Savage

29 September 2022

Learning over a lifetime

Artificial-intelligence researchers turn to lifelong learning in the hopes of making machine intelligence more adaptable. By Neil Savage

20 July 2022

Teaching robots to touch

Robots have become increasingly adept at interacting with the world around them. But to fulfil their potential, they also need a sense of touch. By Marcus Woo

26 May 2022

Miniature medical robots step out from sci-fi

Tiny machines that deliver therapeutic payloads to precise locations in the body are the stuff of science fiction. But some researchers are trying to turn them into a clinical reality. By Anthony King

29 March 2022

Breaking into the black box of artificial intelligence

Scientists are finding ways to explain the inner workings of complex machine-learning models. By Neil Savage


Research and reviews

Curated from the Nature Portfolio journals.

Nature is pleased to acknowledge financial support from FII Institute in producing this Outlook supplement. Nature maintains full independence in all editorial decisions related to the content. About this content.

The supporting organization retains sole responsibility for the following message:

FII Institute is a global non-profit foundation with an investment arm and one agenda: Impact on Humanity. Committed to ESG principles, we foster the brightest minds and transform ideas into real-world solutions in five focus areas: AI and Robotics, Education, Healthcare, and Sustainability.

We are in the right place at the right time – when decision makers, investors, and an engaged generation of youth come together in aspiration, energized and ready for change. We harness that energy into three pillars – THINK, XCHANGE, ACT – and invest in the innovations that make a difference globally.

Join us to own, co-create and actualize a brighter, more sustainable future for humanity.

Visit the FII Institute website.

SPONSOR FEATURES

Sponsor retains sole responsibility for the content of the below articles.

Will ChatGPT give us a lesson in education?

There might be a learning curve as AI tools grow in popularity, but this technology offers teachers opportunities to help pupils acquire new skills around formulating questions and in critical thinking.

The challenge of making moral machines

Artificial intelligence has the potential to improve industries, markets and lives – but only if we can trust the algorithms.


Robots in Healthcare: a Scoping Review

- Medical and Surgical Robotics (F Ernst, Section Editor)

- Open access

- Published: 22 October 2022

- Volume 3, pages 271–280 (2022)


- Ahmed Ashraf Morgan¹,

- Jordan Abdi¹,

- Mohammed A. Q. Syed²,

- Ghita El Kohen³,

- Phillip Barlow⁴ &

- Marcela P. Vizcaychipi¹


Purpose of Review

Robots are increasingly being adopted in healthcare to carry out various tasks that enhance patient care. This scoping review aims to establish the types of robots being used in healthcare and identify where they are deployed.

Recent Findings

Technological advancements have enabled robots to conduct increasingly varied and complex roles in healthcare, including precision tasks such as improving dexterity following stroke or assisting with percutaneous coronary intervention.

Summary

This review found that robots have played 10 main roles across a variety of clinical environments. The two predominant roles were surgery and rehabilitation and mobility. Although robots were mainly studied in the surgical theatre and rehabilitation unit, other settings ranged from the hospital ward to the inpatient pharmacy. Healthcare needs are constantly evolving, as demonstrated by COVID-19, and robots may assist in adapting to these changes. The future will involve increased telepresence, and infrastructure systems will have to improve to allow for this.


Introduction

Since the advent of the COVID-19 pandemic, the healthcare industry has been flooded with novel technologies to assist the delivery of care in unprecedented circumstances [1, 2]. Staff vacancy levels increased [3, 4], social restrictions curtailed many traditional means of care delivery [5••], and stringent infection control measures brought new challenges to human-delivered care [6]. Although many of the challenges that the pandemic brought to healthcare have subsided, staff burnout [7], an increasingly elderly population [8] and backlog strains caused by the pandemic [9, 10] mean that staff shortages persist in healthcare systems across the world.

Robotic systems have long been cited as a way to alleviate workforce pressures, not least in healthcare [11]. Such systems include remote presence robots for virtual consultations and transportation robots for automated delivery of equipment within hospitals. Beyond supporting hospital operations, robotic systems can support clinical practice in a variety of specialties. Examples include exoskeletons that help stroke patients mobilise and surgical robots that allow surgeons to perform operations remotely. Understanding the range of roles that robots have in healthcare is important to inform future research and development.

This scoping review aims to establish the types of robots being used in healthcare and to identify where they are deployed, through a qualitative analysis of the literature. From this, predictions can be made about the future of robotics in healthcare.

Methodology

This scoping review was conducted in accordance with the principles of the Cochrane Handbook for Systematic Reviews of Interventions [12].

Search Strategy

The following bibliographical databases were searched: CINAHL, Cochrane Library, Embase, MEDLINE and Scopus, using medical subject headings (MeSH or, where appropriate, the database-specific thesaurus equivalent) or text word terms. The database search query combined two search concepts: the intervention (robots) and the context (clinical setting). Free-text terms for the intervention included “service robot*”, “surgical robot*” and “socially assistive robot*”; their associated MeSH term was “Robotics”. The names of specific robot systems were also searched for. Free-text terms for the context included “Inpatient setting”, “outpatient setting”, “pharmacy”, “trauma centre”, “acute centre”, “rehabilitation hospital”, “geriatric hospital” and “field hospital”; their associated MeSH term was “Hospitals”. The asterisk (*) is a truncation symbol that treats the word before it as a prefix. For example, “elder*” matches “elderly” and “eldercare”, amongst others (Supplementary Material A). Additional studies were identified through a free search (Google Scholar) and from the reference lists of selected publications and relevant reviews. The search was conducted on 11th March 2022.
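The two-concept search described above can be pictured as a Boolean query. The Python sketch below assumes, as is conventional, that terms within a concept are combined with OR and the two concepts with AND; the generic `"term*"` syntax and the helper names `build_query` and `truncation_to_regex` are hypothetical, since each database (CINAHL, Embase, MEDLINE and so on) has its own field tags and truncation rules. The regex helper only mimics how a trailing asterisk expands to prefix matches; it is not how any of these databases implements search.

```python
import re


def or_block(terms: list[str]) -> str:
    """Quote each free-text term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(intervention: list[str], context: list[str]) -> str:
    """Combine the two search concepts: any intervention term AND any context term."""
    return f"{or_block(intervention)} AND {or_block(context)}"


def truncation_to_regex(term: str) -> re.Pattern:
    """Turn a term with '*' truncation into a case-insensitive whole-word regex.

    Each '*' becomes 'zero or more word characters', so "elder*" matches
    "elder", "elderly" and "eldercare" but not "welder".
    """
    pattern = re.escape(term).replace(r"\*", r"\w*")
    return re.compile(rf"\b{pattern}\b", re.IGNORECASE)


query = build_query(
    ["service robot*", "surgical robot*", "socially assistive robot*"],
    ["pharmacy", "rehabilitation hospital", "field hospital"],
)
print(query)
```

Keeping each concept in its own OR block means a publication must mention at least one robot term and at least one setting term to be retrieved.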

Study Selection

Two reviewers (AM and MS) independently screened the publications in a three-step process (title, abstract and full text), and selections were made according to the inclusion and exclusion criteria. Inclusion: physical robot used within a healthcare setting. Exclusion: review/meta-analysis, non-English, technical report, wrong setting, wrong intervention (e.g. artificial intelligence, no robot) or full manuscript not available. All publications collected during the database search, free search and reference list harvesting were scored on a 3-point scale (0, not relevant; 1, possibly relevant; 2, very relevant), and those with a combined score of 2 between the reviewers progressed to the next round of scoring. All publications with a total score of 0 were excluded. A combined score of 1 indicated a disagreement between the reviewers, which was resolved through discussion. At the end of the full-text screening round, a final set of publications for inclusion in the review was obtained. Cohen’s kappa coefficient was calculated to assess the agreement between the reviewers in the title, abstract and full-text screening phases.
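Cohen's kappa corrects raw percentage agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected from each reviewer's label frequencies. A minimal Python sketch follows, assuming each reviewer assigns one nominal label per publication in a given screening phase (for example, the 0/1/2 relevance score). The scores in the usage lines are invented for illustration and are not data from this review. For real analyses, scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given identical labels by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's label proportions, summed over all labels.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        # Both raters used one identical label for every item: kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Invented 0/1/2 relevance scores for eight publications (illustration only).
reviewer_1 = [2, 1, 0, 2, 0, 1, 2, 0]
reviewer_2 = [2, 1, 0, 1, 0, 1, 2, 2]
kappa = cohens_kappa(reviewer_1, reviewer_2)
```

Here the reviewers agree on 6 of 8 items (p_o = 0.75), but chance alone would produce p_e = 21/64, so kappa is 27/43, roughly 0.63, noticeably lower than the raw agreement.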

Data Extraction

The data extraction form was designed in line with the PICO approach (participants, intervention, comparator and outcomes). This process was conducted by 4 reviewers (JA, AM, GE and MPV) according to the same extraction pro forma. All clinical outcome measures reported in selected studies were extracted. Data extraction included, in addition to outcomes, the number of participants, participant age group, specific robot(s) used, study setting, study design, comparators and specialty.

Duplicate reports of the same study may appear in different journals, manuscripts or conference proceedings, and each may focus on different outcome measures or include a follow-up data point. Data were extracted from the most comprehensive report of a given study.
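The extraction form and the rule of keeping only the most comprehensive report of each study can be expressed as a small data structure. This Python sketch is hypothetical: the field names, the `study_id` key linking duplicate reports, and the completeness score (filled descriptive fields plus the number of outcomes) are assumptions for illustration, because the review does not say how comprehensiveness was judged.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ExtractionRecord:
    """One report of a study, with fields loosely following the extraction form."""
    study_id: str                       # hypothetical key linking duplicate reports of one study
    robot: str = "n/a"                  # "n/a" when the robot is not clearly named
    setting: Optional[str] = None
    design: Optional[str] = None
    specialty: Optional[str] = None
    participants: Optional[int] = None
    age_group: Optional[str] = None
    comparator: Optional[str] = None
    outcomes: list[str] = field(default_factory=list)

    def completeness(self) -> int:
        """Hypothetical score: number of filled descriptive fields plus number of outcomes."""
        descriptive = (self.setting, self.design, self.specialty,
                       self.participants, self.age_group, self.comparator)
        return sum(v is not None for v in descriptive) + len(self.outcomes)


def most_comprehensive(reports: list[ExtractionRecord]) -> dict[str, ExtractionRecord]:
    """Keep one report per study: the one with the highest completeness score (first wins ties)."""
    best: dict[str, ExtractionRecord] = {}
    for report in reports:
        current = best.get(report.study_id)
        if current is None or report.completeness() > current.completeness():
            best[report.study_id] = report
    return best
```

A conference abstract and a later full paper of the same study would share a `study_id`; the full paper, with more fields and outcomes filled in, would be the one retained.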

Data Synthesis and Analysis

The identified robots were grouped in this review by their predominant role. These groupings were created by the authors and are not explicitly referenced or defined by the studies in which the robots were identified. Data not clearly defined in the studies, such as the robot name, were labelled “n/a”.

Search Results

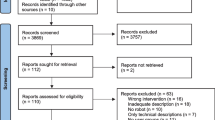

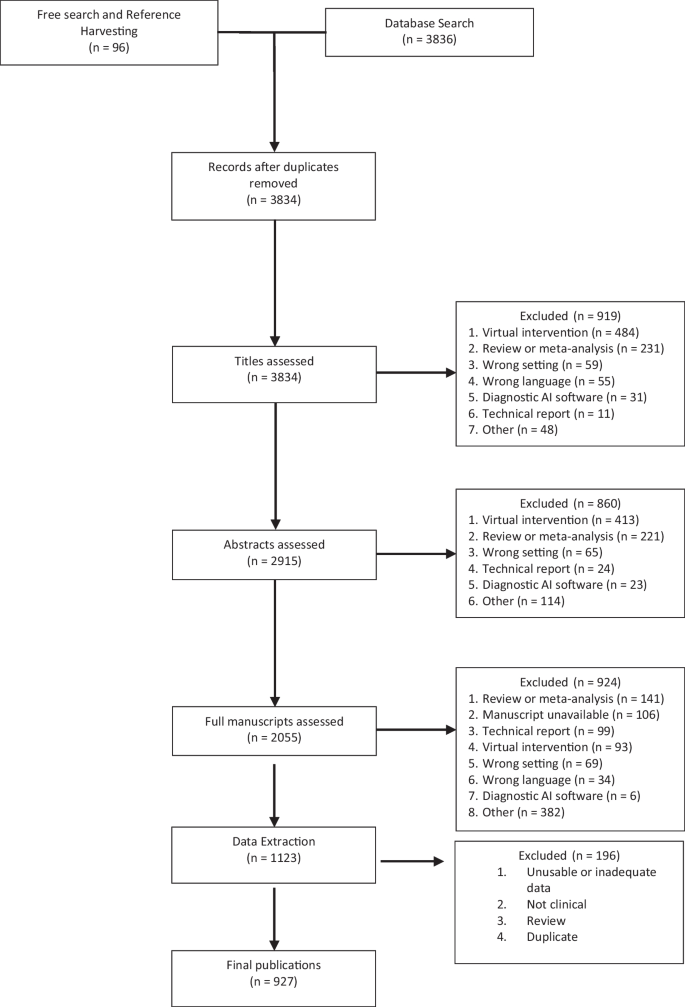

The database search yielded 3836 publications, and a further 96 were identified through reference harvesting and the free search. Duplicate publications were removed (n = 98), and following three screening phases, 1123 publications were eligible for inclusion in the review. During data extraction, a further 196 manuscripts were removed because of duplication, missing data, being reviews, non-clinical evaluation with healthy participants or insufficient appropriate data to extract, leaving a total of 927 original studies. The literature search is illustrated through the PRISMA flow diagram [13] in Fig. 1, which highlights the review process and reasons for exclusion.

Fig. 1 PRISMA diagram of selection process
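The screening counts reported above can be checked with simple arithmetic. This Python sketch assumes that duplicates were the only records removed before screening and that the 196 manuscripts dropped at extraction were the only later removals; under those assumptions, the number excluded during the three screening phases (2711) is implied by the totals rather than stated in the text. The function name `prisma_flow` is hypothetical.

```python
def prisma_flow(database_hits: int, other_sources: int, duplicates: int,
                eligible_after_screening: int, removed_at_extraction: int) -> dict[str, int]:
    """Recompute flow-diagram counts from the totals reported in the text."""
    identified = database_hits + other_sources
    screened = identified - duplicates
    return {
        "identified": identified,
        "screened": screened,
        # Implied by the totals, assuming no other removals during screening.
        "excluded_during_screening": screened - eligible_after_screening,
        "included": eligible_after_screening - removed_at_extraction,
    }


flow = prisma_flow(3836, 96, 98, 1123, 196)
```

The final count of 927 studies follows directly from 1123 eligible publications minus the 196 removed at extraction.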

The inter-rater agreement between the reviewers (Cohen’s kappa) was 0.23 for the title screen, 0.46 for the abstract screen and 0.53 for the full-text screen, indicating fair, moderate and moderate agreement, respectively [14].
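The descriptive labels attached to these kappa values match the widely used Landis and Koch (1977) bands. Assuming reference [14] uses those bands, the mapping can be written as a simple lookup; the function name is hypothetical, and other published interpretation scales would label the same values differently.

```python
def landis_koch_label(kappa: float) -> str:
    """Map a kappa value to the Landis and Koch descriptive bands."""
    if kappa < 0:
        return "poor"
    # Upper bound of each band, checked in ascending order.
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")):
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


labels = [landis_koch_label(k) for k in (0.23, 0.46, 0.53)]
```

Applied to the three screening phases, this yields "fair", "moderate" and "moderate", as reported.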

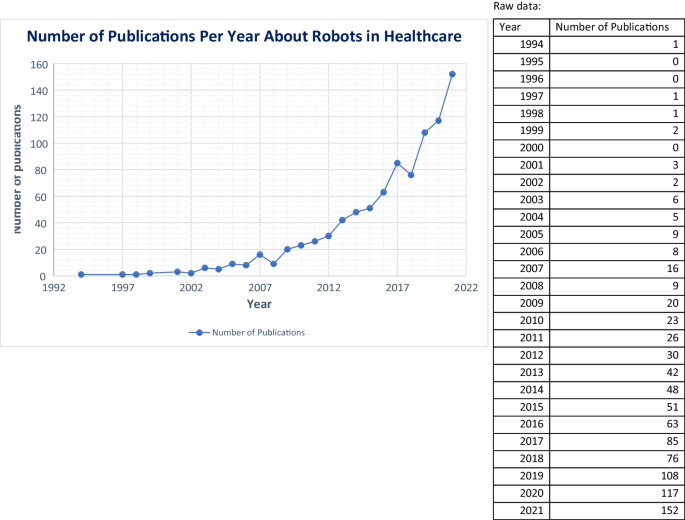

The included studies were published between 1994 and 2022, with between 0 and 152 publications per year. The median number of publications per year was 16 (IQR = 46). The number of publications peaked in 2021, when it was 585% higher than 10 years earlier. Publications per year are shown in Fig. 2. A full list of the final studies can be found in Supplementary Material B. Of the included studies, 65% were observational. The name of the evaluated robot was not clearly stated in 19% of publications; of these, 89% were surgical robots.

Fig. 2 Number of publications released per year about robots in healthcare
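Two statistics in the preceding paragraph benefit from a worked definition. The interquartile range (IQR) is Q3 - Q1 of the yearly counts, and "X% higher" means new = old × (1 + X/100), so a 585% increase corresponds to a count 6.85 times the earlier one. The yearly counts below are invented for illustration (the actual per-year data appear only in Fig. 2), and quartiles depend on the interpolation method; this sketch assumes Python's `statistics.quantiles` with `method="inclusive"`, which the review does not specify.

```python
import statistics


def median_and_iqr(counts: list[int]) -> tuple[float, float]:
    """Median and interquartile range (Q3 - Q1) of yearly publication counts."""
    q1, _, q3 = statistics.quantiles(counts, n=4, method="inclusive")
    return statistics.median(counts), q3 - q1


def percent_higher(new: float, old: float) -> float:
    """How much larger `new` is than `old`, as a percentage of `old`."""
    return (new - old) / old * 100


# Invented yearly counts, for illustration only.
med, iqr = median_and_iqr([1, 3, 5, 8, 12, 20])
```

With a skewed series like this one, the IQR can exceed the median, as it does in the review (median 16, IQR 46), because a few recent years contribute most of the publications.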

Participants and Settings

A total of 5,173,190 participants were included in the studies. Fifty-three percent of publications included fewer than 45 participants; the larger populations generally came from publications that analysed data from national databases. Eighty-nine percent of the manuscripts focused on adult populations, with only 7% solely including paediatric patients. The specialties with the most publications were stroke (n = 194, 21%), urology (n = 149, 16%) and general surgery (n = 137, 15%).

A range of clinical settings was used, but the two most common were the surgical theatre (n = 498) and the rehabilitation unit (n = 353), followed by catheterisation labs (n = 17), pharmacies (n = 16) and general wards (n = 10). The remaining 4% of publications covered settings including elderly care units (n = 7), outpatient clinics (n = 6) and pathology labs (n = 4). Table 1 provides a further breakdown of settings.
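The percentages above can be reproduced from the counts and the 927 included studies. This Python sketch assumes each study is assigned a single primary setting, since the review does not say whether multi-setting studies were counted more than once. Under that assumption, 33 studies (about 4%) fall outside the five largest settings; elderly care units, outpatient clinics and pathology labs account for 17 of them.

```python
TOTAL_STUDIES = 927  # included studies reported in the text

specialties = {"stroke": 194, "urology": 149, "general surgery": 137}
settings = {"surgical theatre": 498, "rehabilitation unit": 353,
            "catheterisation lab": 17, "pharmacy": 16, "general ward": 10}


def share(count: int, total: int = TOTAL_STUDIES) -> int:
    """Percentage of all included studies, rounded to the nearest whole percent."""
    return round(100 * count / total)


specialty_shares = {name: share(n) for name, n in specialties.items()}
# Studies outside the five largest settings, assuming one primary setting per study.
remaining = TOTAL_STUDIES - sum(settings.values())
remaining_share = share(remaining)
```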

Identified Robots and Their Roles in Healthcare

One hundred and seventy-one named robots were identified. The da Vinci Surgical System (Intuitive Surgical, USA) was the most frequently studied (n = 291), followed by the Lokomat® (Hocoma, Switzerland) (n = 72) and the Hybrid Assistive Limb (HAL) (Cyberdyne, Japan) (n = 46). A list of all identified and named robots can be found in Supplementary Material C.

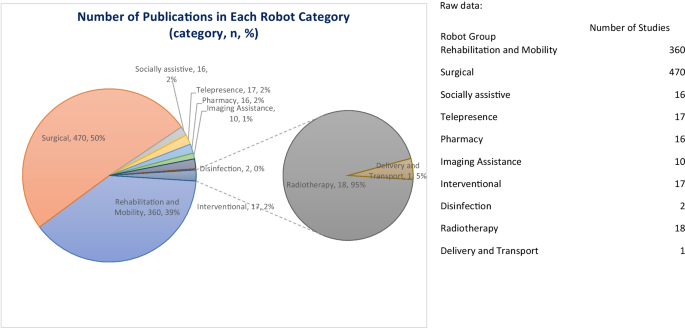

The identified robots were categorised by their role, leading to the formation of 10 different groups. These groups represent the 10 overarching roles that robots have been found to have within healthcare. Table 2 summarises the robot groups, the number of robots found in each and the most common robot(s). Figure 3 shows the number of publications within each robot group.

Fig. 3 Number of studies in each of the 10 robot groups
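Summaries such as Table 2 and Fig. 3 (studies per group, named robots per group, most common robot) amount to grouping study records by role and counting. A minimal Python sketch follows, assuming each study contributes one (role group, robot name) pair for its predominant role and that unnamed robots are recorded as "n/a", as described in the methods. The function name and the example records are hypothetical.

```python
from collections import Counter, defaultdict


def summarise_groups(studies: list[tuple[str, str]]) -> dict[str, dict]:
    """Summarise (role_group, robot_name) pairs into per-group counts.

    Robots recorded as "n/a" count towards the number of studies but not
    towards the named robots or the most common robot.
    """
    by_group: defaultdict[str, Counter] = defaultdict(Counter)
    for group, robot in studies:
        by_group[group][robot] += 1

    summary = {}
    for group, robots in by_group.items():
        named = Counter({r: n for r, n in robots.items() if r != "n/a"})
        summary[group] = {
            "studies": sum(robots.values()),
            "named_robots": len(named),
            "most_common": named.most_common(1)[0][0] if named else None,
        }
    return summary


example = summarise_groups([
    ("surgical", "da Vinci"), ("surgical", "da Vinci"), ("surgical", "n/a"),
    ("surgical", "ROSA"), ("radiotherapy", "CyberKnife"),
])
```

Because a robot can have several functions, a study could in principle be recorded under more than one group; this sketch deliberately keeps only the predominant role.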

Surgical

Surgical robots assist in performing surgical procedures. Their specific roles within surgery vary, ranging from instrument control to automated surgical table movement. This is a well-explored role, accounting for 51% of included studies, with 19 named robots identified. Most studies in this category (90%) are observational.

The da Vinci Surgical System is the predominant robot in use and therefore has the largest literature base. The system provides instruments that a surgeon controls through a console to perform minimally invasive surgery. It can be used in procedures including cholecystectomy, pancreatectomy and prostatectomy. For example, Jensen et al. [15] carried out a retrospective cohort study of 103 patients comparing robot-assisted anti-reflux surgery with the da Vinci Surgical System against conventional laparoscopy, and evaluated peri-operative outcomes. Other robotic systems that have been studied include the ROBODOC® Surgical System (Curexo Technology, USA), which was used in orthopaedics to plan and carry out total knee arthroplasties [16], and the Robotized Stereotactic Assistant (ROSA®) (Zimmer Biomet, France), which can assist with neurosurgical procedures such as intracranial electrode implantation [17].

Some of the identified robots can also assist with biopsy. For example, the iSR’obot™ Mona Lisa (Biobot Surgical, Singapore) can assist with visualisation and robotic needle guidance in prostate biopsy. One included publication studied this robot prospectively in 86 men undergoing prostate biopsy, primarily evaluating the detection of clinically significant prostate cancer [18].

Rehabilitation and Mobility

Rehabilitation and mobility robots physically assist or assess patients to help them achieve rehabilitation goals. They can improve dexterity, help patients reach rehabilitation targets or aid mobilisation. These robots may be used in the inpatient setting as well as in community rehabilitation centres. Rehabilitation is one of the major roles of robots in healthcare, accounting for 39% of reviewed manuscripts. This group also contributed the most interventional studies: 75% of all interventional studies in the review came from this group.

There are 102 named robots within this group, and they can be used for a variety of functions. Most are used for their ability to provide physical support to patients, assisting with rehabilitation. This can include single-joint or whole-body support. Others may be used for posture training through robotic tilt tables or for mobilisation through robotic wheelchairs.

The most common robot, the Lokomat®, is a gait orthosis robot that can be used for rehabilitation in disorders such as stroke. Its primary role is to increase lower limb strength and range of motion. For example, Husemann et al. [19] carried out a randomised controlled trial with 30 acute stroke patients, comparing conventional physiotherapy alone with conventional physiotherapy plus Lokomat therapy, and evaluated outcomes such as ambulation ability. The second most studied robot, HAL, is a powered exoskeleton with multiple variants, including a lower limb version and a single-joint version. Studies predominantly explore its use in neurological rehabilitation, but it has also been studied in areas such as post-operative rehabilitation.

Two studies used robots to evaluate patient parameters such as gait speed. Hunova (Movendo Technology, Italy) is a robot that can be used for trunk and lower limb rehabilitation and also for sensorimotor assessment, such as measuring limits of stability. For example, Cella et al. [20] used the robot to obtain patient parameters for a fall risk assessment model in the elderly community, on the premise that robotic assessment can augment clinical evaluation and provide more robust data.

Radiotherapy

Radiotherapy robots assist with the delivery of radiotherapy. This review identified one robot in this group: CyberKnife (Accuray, USA) (n = 18). This robot can assist with the application of radiotherapy and image guidance to manage conditions such as liver and orbital metastases. All publications were observational with no comparator groups. One such publication was from Staehler et al. [21], who carried out a prospective case–control trial in 40 patients with renal tumours and evaluated the safety and efficacy of CyberKnife.

Telepresence

The core feature of the telepresence group is that the robot gives an individual a remote presence. These robots may be used for activities such as remote ward rounds, remote surgical mentoring or remote assessment of histology slides. This group included 17 publications, and the most common robots were the remote presence (RP) robot (InTouch Technologies, USA) and Double (Double Robotics, USA). Double is a self-driving robot with two wheels and a video interface. Croghan et al. [22] used this robot for surgical ward rounds with a remote consultant surgeon and compared the experience with conventional ward rounds.

Interventional

Separate from their surgical counterparts, robots in this group assist with interventional procedures such as ablation in atrial fibrillation, percutaneous coronary intervention (PCI) and neuroendovascular intervention. Their function can range from catheter guidance to stent positioning. Seventeen included publications covered nine robots, the most common being the Niobe System (Stereotaxis, USA) and the Hansen Sensei Robotic Catheter System (Hansen Medical, USA), followed by the CorPath systems (GRX and 200) (Corindus, USA). The Niobe System uses robotically controlled magnets to steer the catheter. Arya et al. [23] carried out a case–control study comparing the Niobe System with conventional manual catheter navigation and evaluated effectiveness and safety in managing atrial fibrillation. The CorPath 200 system has been used with robotic catheter guidance for procedures such as PCI [24], and the GRX system has also been reported for neuroendovascular procedures [25•].

Socially Assistive

Socially assistive robots can take multiple forms, such as humanoid or animal-like, and provide support in roles traditionally filled by humans, such as companionship and service provision. Nine robots across 16 studies were included, the most popular being PARO (AIST, Japan), followed by Pepper (SoftBank Robotics, Japan) and NAO (SoftBank Robotics, Japan). PARO is a robotic seal that can move and make sounds in addition to responding to stimuli. Hung et al. [26] studied how patients with dementia on the hospital ward perceived PARO and its potential benefits. Pepper is a humanoid robot with a touch screen, capable of interacting with people through conversation. Boumans et al. [27] explored the use of Pepper in outpatient clinics in a randomised clinical trial, comparing human-led and Pepper-mediated patient interviews and evaluating patient perception afterwards.

Pharmacy

A group of robots has the specific role of assisting with the management and delivery of pharmacy services, including drug storage, dispensing and compounding. For example, a robot may assist in the preparation of cytotoxic drugs with the goal of reducing errors and minimising operator risk. Sixteen manuscripts covering 10 robots were included. BD Rowa™ Vmax (BD Rowa, Germany) and APOTECA Chemo (Loccioni Humancare, Italy) were the most frequently studied robots. The BD Rowa™ Vmax is an automated system that stores medication and dispenses it at the request of a user. Berdot et al. [28] used this system in a teaching hospital pharmacy and evaluated the return on investment, including the rate of dispensing errors. The APOTECA Chemo system can automate the production of chemotherapeutic treatment. Buning et al. [29] compared environmental contamination with APOTECA Chemo against conventional drug compounding.

Imaging Assistance

Robots in this group assist in carrying out imaging in different areas of medicine. Ten publications covering 8 robots were included. They predominantly include robotic camera holders in theatre but also robotic microscopes in neurosurgery and transcranial magnetic stimulation robots. Soloassist® (AKTORmed, Germany) and Freehand® (Freehand, UK), both robotic camera controllers, were the most common in the literature. Robotic camera holders may be controlled by various inputs, such as voice or a joystick. In one publication, Soloassist was compared with a human scope assistant in colorectal cancer surgery, and safety and feasibility were assessed [30].

Disinfection

Robots may be used to disinfect clinical areas such as the ward or outpatient clinic. This group included 2 studies that evaluated the robotic systems LightStrike™ (Xenex, USA) and Ultra Violet Disinfection Robot® (UVD-Robot) (Clean Room Solutions). Both systems use ultraviolet (UV) light to disinfect rooms, and the UVD-Robot can move autonomously. Astrid et al. [31] analysed the UVD-Robot’s ability to disinfect waiting rooms in hospital outpatient clinics and compared it with conventional manual disinfection.

Delivery and Transport

Robots also have a role in transferring items between areas. One included publication explored a delivery robot in the intensive care unit (ICU) [32]: the TUG Automated Delivery System (Aethon, USA), which, after being loaded by an operator, autonomously delivered drugs from the pharmacy department to the ICU.

Evaluation of Robots in Clinical Settings

There has been an explosion of publications about the use of robots in healthcare in the past few years. This coincides with the COVID-19 pandemic, which highlighted a need for robots to carry out roles in challenging environments. It can also be linked to the ongoing development of technologies and the promise of robots reducing healthcare workers’ burden and improving patient outcomes. The successful implementation of a robotic system is multifactorial, driven by social need, regulatory approval and the financial impact of deploying the system. Once a robot is introduced into healthcare, its durability and continued use are difficult to predict. Certain systems may go on to see long-term use, whilst others are underutilised or removed from practice. The outcome may depend on ease of use, perceived and objective benefit or the availability of a newer system. Following successful introduction, robotic systems are used in a variety of roles.

Ten overarching roles for robots in healthcare were identified in this review: surgical, rehabilitation and mobility, radiotherapy, socially assistive, telepresence, pharmacy, disinfection, delivery and transport, interventional and imaging assistance. Within each group, robots may have different sub-roles, such as upper limb or lower limb strengthening in the rehabilitation category, or drug compounding or dispensing in the pharmacy category. These 10 groups consolidate a variety of robots, but there is overlap between them because a robot may have multiple functions. For example, the low-intensity collimated ultrasound (LICU) system is categorised as an interventional robot whose primary role is ablation in conditions such as atrial fibrillation [33•]. However, it also involves automated ultrasound (US) imaging, which overlaps with the imaging assistance group. These roles allow robots to be used across a range of healthcare settings.

Certain robot groups have a well-defined area of use. For instance, the surgical group is, unsurprisingly, found predominantly in the operating theatre. Other robot groups are not restricted to such a well-circumscribed area; the pharmacy and socially assistive groups, for example, are found in both inpatient and outpatient settings. Although numerous environments were identified, most publications evaluated robots in only two: the theatre and the rehabilitation unit. Robots have been less well explored in other settings, such as the ED and ICU. This may be because these environments are more unpredictable, with fewer of the repetitive tasks that are well suited to a robot. The use of robots in more challenging and less controlled environments is a potential area for further research.

Whatever the setting or role, one benefit is shared by all robotic systems: they allow a task to be carried out with less direct human involvement. The socially assistive and telepresence groups are good examples of this. Robots can therefore be used in situations where services are needed but human presence is restricted. COVID-19 provides a clear example, as telepresence robots may be used to conduct remote ward rounds safely.

Quality of Selected Studies

This review did not exclude publications based on methodological quality. Most studies were observational; interventional designs were used mainly with rehabilitation and mobility robots. Many of the included studies are also descriptive, with retrospectively defined outcomes. This highlights a need for further high-quality interventional studies to establish the potential benefits of robots across a range of roles. Additionally, a large proportion of studies, other than those using national databases, have small sample sizes. Combined with the predominantly observational designs, this reduces the overall quality of the dataset.

Review Strengths and Limitations

Strengths of this review include the large number of publications analysed and the broad scope of the subject. This large dataset provides a comprehensive overview of robotics in healthcare and allows the data to be synthesised to establish the main roles of robots in practice. As no limit was placed on date of publication, trends over time can also be identified.

Given the broad area of exploration, there is a risk of missing relevant studies. Although many robots have been included, some robots used in clinical practice are likely to have been missed. However, given the substantial number of papers reviewed, it is unlikely that these missing robots would have a major impact on the 10 robot groups identified.

Several robots have multiple editions, but these were counted as single entities, precluding a more detailed analysis of each edition. Additionally, some publications did not specify the name of the robot used, so there may be unique robots that were not identified in this review. For the same reason, some robots may have been studied more often than this review indicates. However, given the large gap in the number of publications between the predominant robots and those below them, the overall picture is unlikely to change drastically. Finally, some patient populations may overlap, as some studies used similar datasets.

Future of Robotics

The future of robots in healthcare lies predominantly in remote presence and in the performance of tasks without direct human presence, for instance the safe disinfection of a clinical environment or ward rounds led by a specialist working from home. Robots will allow people to be present with increasing flexibility. This will help to provide consistent services that are resilient to change and easy to adapt. For instance, a well-established robotic system for remote surgery or telepresence ward rounds could allow care to continue to be provided consistently during a pandemic.

To fully realise a future of widespread robot adoption, the necessary infrastructure must be developed; the best robotic system may be foiled by a poor internet connection. Investment in the systems that allow robots to operate is therefore vital. Barriers to implementation also differ between robot groups, so some groups are more likely to be adopted than others. A socially assistive robot that moves on two wheels is likely much cheaper and easier to implement than a large drug-dispensing or surgical robot, especially in areas with fewer resources, so these more complex robots may struggle to see widespread use. It is important to focus on robots that are more likely to be used globally and to have far-reaching effects, especially where human resources are scarce. This is even more important during a crisis.

With ongoing technological advancements, robots may also be developed to carry out new functions. The roles described in this review arise from robots that have been used in current clinical settings, but there are robots in development or pre-clinical evaluation that may yet be introduced. Advances in artificial intelligence may lead to socially assistive robots that can function more independently and perform more complex tasks. Evolving technologies such as augmented reality with haptic feedback may also broaden the scope of telepresence, for example by enabling remote physical guidance during a complex procedure.

Generally, there is a need to further evaluate the financial and clinical impact of robots with high-quality studies, larger population groups and an interventional design where possible. A need also exists to evaluate the use of robots in different populations and settings.

The evidence base for the use of robots in healthcare is expanding, and robots are being used across a range of specialties and settings. Ten overall roles for robots were identified, with the best explored being surgical and rehabilitation roles. However, there is a need for further high-quality research, particularly with less well-established robot roles such as disinfection. The future of robots lies in remote presence and the ability to carry out tasks in challenging environments; this will depend on the development of robust infrastructure and network capabilities to allow for successful adoption.

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Budd J, Miller BS, Manning EM, Lampos V, Zhuang M, Edelstein M, et al. Digital technologies in the public-health response to COVID-19. Nat Med. 2020;26(8):1183–92.

Dunlap DR, Santos RS, Lilly CM, Teebagy S, Hafer NS, Buchholz BO, et al. COVID-19: a gray swan’s impact on the adoption of novel medical technologies. Humanit Soc Sci Commun. 2022;9(1):1–9.

Schmitt N, Mattern E, Cignacco E, Seliger G, König-Bachmann M, Striebich S, et al. Effects of the COVID-19 pandemic on maternity staff in 2020 – a scoping review. BMC Health Serv Res. 2021;21(1):1364.

White EM, Wetle TF, Reddy A, Baier RR. Front-line nursing home staff experiences during the COVID-19 pandemic. J Am Med Dir Assoc. 2021;22(1):199–203.

•• Chiesa V, Antony G, Wismar M, Rechel B. COVID-19 pandemic: health impact of staying at home, social distancing and ‘lockdown’ measures – a systematic review of systematic reviews. J Public Health. 2021;43(3):e462–81. https://doi.org/10.1093/pubmed/fdab102. This review highlights the impact of COVID-19 restrictions, including impaired healthcare delivery and the potential benefit of telemedicine.

Luciani LG, Mattevi D, Cai T, Giusti G, Proietti S, Malossini G. Teleurology in the time of COVID-19 pandemic: here to stay? Urology. 2020;140:4–6.

Lluch C, Galiana L, Doménech P, Sansó N. The impact of the COVID-19 pandemic on burnout, compassion fatigue, and compassion satisfaction in healthcare personnel: a systematic review of the literature published during the first year of the pandemic. Healthcare. 2022;10(2):364.

World Population Ageing 2020 Highlights | Population Division [Internet]. [cited 2022 Jul 3]. Available from: https://www.un.org/development/desa/pd/news/world-population-ageing-2020-highlights .

Duffy SW, Seedat F, Kearins O, Press M, Walton J, Myles J, et al. The projected impact of the COVID-19 lockdown on breast cancer deaths in England due to the cessation of population screening: a national estimation. Br J Cancer. 2022;126(9):1355–61.

Jin YP, Canizares M, El-Defrawy S, Buys YM. Predicted backlog in ophthalmic surgeries associated with the COVID-19 pandemic in Ontario in 2020: a time-series modelling analysis. Can J Ophthalmol. 2022. https://doi.org/10.1016/j.jcjo.2022.06.020 .

Abdi J, Al-Hindawi A, Ng T, Vizcaychipi MP. Scoping review on the use of socially assistive robot technology in elderly care. BMJ Open. 2018;8(2):e018815.

Cochrane handbook for systematic reviews of interventions [Internet]. [cited 2022 Aug 3]. Available from: https://training.cochrane.org/handbook/current .

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012;22(3):276–82.

Jensen JS, Antonsen HK, Durup J. Two years of experience with robot-assisted anti-reflux surgery: a retrospective cohort study. Int J Surg. 2017;39:260–6.

Stulberg BN, Zadzilka JD. Active robotic technologies for total knee arthroplasty. Arch Orthop Trauma Surg. 2021;141(12):2069–75.

De Benedictis A, Trezza A, Carai A, Genovese E, Procaccini E, Messina R, et al. Robot-assisted procedures in pediatric neurosurgery. Neurosurg Focus. 2017;42(5):E7.

Miah S, Servian P, Patel A, Lovegrove C, Skelton L, Shah TT, et al. A prospective analysis of robotic targeted MRI-US fusion prostate biopsy using the centroid targeting approach. J Robotic Surg. 2020;14(1):69–74.

Husemann B, Müller F, Krewer C, Heller S, Koenig E. Effects of locomotion training with assistance of a robot-driven gait orthosis in hemiparetic patients after stroke: a randomized controlled pilot study. Stroke. 2007;38(2):349–54.

Cella A, De Luca A, Squeri V, Parodi S, Vallone F, Giorgeschi A, et al. Development and validation of a robotic multifactorial fall-risk predictive model: a one-year prospective study in community-dwelling older adults. PLoS ONE. 2020;15(6):e0234904.

Staehler M, Bader M, Schlenker B, Casuscelli J, Karl A, Roosen A, et al. Single fraction radiosurgery for the treatment of renal tumors. J Urol. 2015;193(3):771–5.

Croghan SM, Carroll P, Ridgway PF, Gillis AE, Reade S. Robot-assisted surgical ward rounds: virtually always there. BMJ Health Care Inform. 2018;25(1):41–56. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7316263/ .

Arya A, Zaker-Shahrak R, Sommer P, Bollmann A, Wetzel U, Gaspar T, et al. Catheter ablation of atrial fibrillation using remote magnetic catheter navigation: a case-control study. Europace. 2011;13(1):45–50.

Kapur V, Smilowitz NR, Weisz G. Complex robotic-enhanced percutaneous coronary intervention: complex robotic-enhanced PCI. Cathet Cardiovasc Intervent. 2014;83(6):915–21.

• Mendes Pereira V, Cancelliere NM, Nicholson P, Radovanovic I, Drake KE, Sungur JM, et al. First-in-human, robotic-assisted neuroendovascular intervention. J NeuroIntervent Surg. 2020;12(4):338–40. The authors describe the first-in-human use of a robotic system for a neuroendovascular intervention, highlighting feasibility and potential benefits such as reduced operator radiation exposure.

Hung L, Gregorio M, Mann J, Wallsworth C, Horne N, Berndt A, et al. Exploring the perceptions of people with dementia about the social robot PARO in a hospital setting. Dementia. 2021;20(2):485–504.

Boumans R, van Meulen F, van Aalst W, Albers J, Janssen M, Peters-Kop M, et al. Quality of care perceived by older patients and caregivers in integrated care pathways with interviewing assistance from a social robot: noninferiority randomized controlled trial. J Med Internet Res. 2020;22(9):e18787.