JAMA Guide to Statistics and Methods

Explore this JAMA essay series that explains the basics of statistical techniques used in clinical research, to help clinicians interpret and critically appraise the medical literature.

This JAMA Guide to Statistics and Methods article explains the test-negative study design, an observational study design routinely used to estimate vaccine effectiveness, and examines its use in a study that estimated the performance of messenger RNA boosters against the Omicron variant.

This JAMA Guide to Statistics and Methods article discusses accounting for competing risks in clinical research.

This JAMA Guide to Statistics and Methods article explains effect score analyses, an approach for evaluating the heterogeneity of treatment effects, and examines its use in a study of oxygen-saturation targets in critically ill patients.

This JAMA Guide to Statistics and Methods explains the use of historical controls—persons who had received a specific control treatment in a previous study—when randomizing participants to that control treatment in a subsequent trial may not be practical or ethical.

This JAMA Guide to Statistics and Methods discusses the early stopping of clinical trials for futility due to lack of evidence supporting the desired benefit, evidence of harm, or practical issues that make successful completion unlikely.

This JAMA Guide to Statistics and Methods explains sequential, multiple assignment, randomized trial (SMART) study designs, in which some or all participants are randomized at 2 or more decision points depending on the participant’s response to prior treatment.

This JAMA Guide to Statistics and Methods article examines conditional power, calculated while a trial is ongoing and based on both the currently observed data and an assumed treatment effect for future patients.

This Guide to Statistics and Methods describes the use of target trial emulation to design an observational study so it preserves the advantages of a randomized clinical trial, points out the limitations of the method, and provides an example of its use.

This Guide to Statistics and Methods provides an overview of the use of adjustment for baseline characteristics in the analysis of randomized clinical trials and emphasizes several important considerations.

This Guide to Statistics and Methods provides an overview of regression models for ordinal outcomes, including an explanation of why they are used and their limitations.

This Guide to Statistics and Methods provides an overview of patient-reported outcome measures for clinical research, emphasizes several important considerations when using them, and points out their limitations.

This JAMA Guide to Statistics and Methods discusses instrumental variable analysis, a method designed to reduce or eliminate unobserved confounding in observational studies, with the goal of achieving unbiased estimation of treatment effects.

This JAMA Guide to Statistics and Methods describes collider bias, illustrates examples in directed acyclic graphs, and explains how it can threaten the internal validity of a study and the accurate estimation of causal relationships in randomized clinical trials and observational studies.

This JAMA Guide to Statistics and Methods discusses the CONSERVE guidelines, which address how to report extenuating circumstances that lead to a modification in trial design, conduct, or analysis.

This JAMA Guide to Statistics and Methods discusses the basics of causal directed acyclic graphs, which are useful tools for communicating researchers’ understanding of the potential interplay among variables and are commonly used for mediation analysis.

This JAMA Guide to Statistics and Methods discusses cardinality matching, a method for finding the largest possible number of matched pairs in an observational data set, with the goal of balanced and representative samples of study participants between groups.

This Guide to Statistics and Methods discusses the various approaches to estimating variability in treatment effects, including heterogeneity of treatment effect, which was used to assess the association between surgery to close patent foramen ovale and risk of recurrent stroke in patients who presented with a stroke in a related JAMA article.

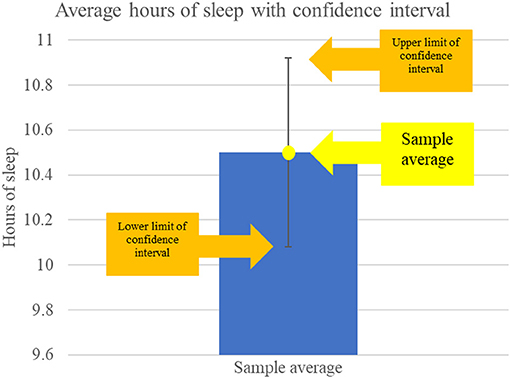

This Guide to Statistics and Methods describes how confidence intervals can be used to help in the interpretation of nonsignificant findings across all study designs.

This JAMA Guide to Statistics and Methods describes why interim analyses are performed during group sequential trials, provides examples of the limitations of interim analyses, and provides guidance on interpreting the results of interim analyses performed during group sequential trials.

This JAMA Guide to Statistics and Methods describes how ACC/AHA guidelines are formatted to rate class (denoting strength of a recommendation) and level (indicating the level of evidence on which a recommendation is based) and summarizes the strengths and benefits of this rating system in comparison with other commonly used ones.

Statistics articles within Scientific Reports

Article 26 August 2024 | Open Access

Quantification of the time-varying epidemic growth rate and of the delays between symptom onset and presenting to healthcare for the mpox epidemic in the UK in 2022

- Robert Hinch

- , Jasmina Panovska-Griffiths

- & Christophe Fraser

Investigating the causal relationship between wealth index and ICT skills: a mediation analysis approach

- Tarikul Islam

- & Nabil Ahmed Uthso

Article 24 August 2024 | Open Access

Statistical analysis of the effect of socio-political factors on individual life satisfaction

- , Isra Hasan

- & Ayman Alzaatreh

Article 23 August 2024 | Open Access

Improving the explainability of autoencoder factors for commodities through forecast-based Shapley values

- Roy Cerqueti

- , Antonio Iovanella

- & Saverio Storani

Article 20 August 2024 | Open Access

Defect detection of printed circuit board assembly based on YOLOv5

- Minghui Shen

- , Yujie Liu

- & Ye Jiang

Breaking the silence: leveraging social interaction data to identify high-risk suicide users online using network analysis and machine learning

- Damien Lekkas

- & Nicholas C. Jacobson

Stochastic image spectroscopy: a discriminative generative approach to hyperspectral image modelling and classification

- Alvaro F. Egaña

- , Alejandro Ehrenfeld

- & Jorge F. Silva

Article 15 August 2024 | Open Access

Data-driven risk analysis of nonlinear factor interactions in road safety using Bayesian networks

- Cinzia Carrodano

Article 13 August 2024 | Open Access

Momentum prediction models of tennis match based on CatBoost regression and random forest algorithms

- Xingchen Lv

- , Dingyu Gu

- & Yanfang li

Article 12 August 2024 | Open Access

Numerical and machine learning modeling of GFRP confined concrete-steel hollow elliptical columns

- Haytham F. Isleem

- , Tang Qiong

- & Ali Jahami

Experimental investigation of the distribution patterns of micro-scratches in abrasive waterjet cutting surface

- & Quan Wen

Article 07 August 2024 | Open Access

PMANet: a time series forecasting model for Chinese stock price prediction

- , Weisi Dai

- & Yunjing Zhao

Article 06 August 2024 | Open Access

Grasshopper platform-assisted design optimization of fujian rural earthen buildings considering low-carbon emissions reduction

- & Yang Ding

Article 03 August 2024 | Open Access

Effects of dietary fish to rapeseed oil ratio on steatosis symptoms in Atlantic salmon (Salmo salar L) of different sizes

- D. Siciliani

- & Å. Krogdahl

A model-free and distribution-free multi-omics integration approach for detecting novel lung adenocarcinoma genes

- Shaofei Zhao

- & Guifang Fu

Article 01 August 2024 | Open Access

Intrinsic dimension as a multi-scale summary statistics in network modeling

- Iuri Macocco

- , Antonietta Mira

- & Alessandro Laio

A new possibilistic-based clustering method for probability density functions and its application to detecting abnormal elements

- Hung Tran-Nam

- , Thao Nguyen-Trang

- & Ha Che-Ngoc

Article 30 July 2024 | Open Access

A dynamic customer segmentation approach by combining LRFMS and multivariate time series clustering

- Shuhai Wang

- , Linfu Sun

- & Yang Yu

Article 29 July 2024 | Open Access

Field evaluation of a volatile pyrethroid spatial repellent and etofenprox treated clothing for outdoor protection against forest malaria vectors in Cambodia

- Élodie A. Vajda

- , Amanda Ross

- & Neil F. Lobo

Study on crease recovery property of warp-knitted jacquard spacer shoe upper material

- & Shiyu Peng

Article 27 July 2024 | Open Access

Calibration estimation of population total using multi-auxiliary information in the presence of non-response

- , Anant Patel

- & Menakshi Pachori

Simulation-based prior knowledge elicitation for parametric Bayesian models

- Florence Bockting

- , Stefan T. Radev

- & Paul-Christian Bürkner

Article 26 July 2024 | Open Access

Modelling Salmonella Typhi in high-density urban Blantyre neighbourhood, Malawi, using point pattern methods

- Jessie J. Khaki

- , James E. Meiring

- & Emanuele Giorgi

Exogenous variable driven deep learning models for improved price forecasting of TOP crops in India

- G. H. Harish Nayak

- , Md Wasi Alam

- & Chandan Kumar Deb

Generalization of cut-in pre-crash scenarios for autonomous vehicles based on accident data

- , Xinyu Zhu

- & Chang Xu

Article 19 July 2024 | Open Access

Automated PD-L1 status prediction in lung cancer with multi-modal PET/CT fusion

- Ronrick Da-ano

- , Gustavo Andrade-Miranda

- & Catherine Cheze Le Rest

Article 17 July 2024 | Open Access

Optimizing decision-making with aggregation operators for generalized intuitionistic fuzzy sets and their applications in the tech industry

- Muhammad Wasim

- , Awais Yousaf

- & Hamiden Abd El-Wahed Khalifa

Article 15 July 2024 | Open Access

Putting ICAP to the test: how technology-enhanced learning activities are related to cognitive and affective-motivational learning outcomes in higher education

- Christina Wekerle

- , Martin Daumiller

- & Ingo Kollar

The impact of national savings on economic development: a focused study on the ten poorest countries in Sub-Saharan Africa

Article 13 July 2024 | Open Access

Regularized ensemble learning for prediction and risk factors assessment of students at risk in the post-COVID era

- Zardad Khan

- , Amjad Ali

- & Saeed Aldahmani

Article 12 July 2024 | Open Access

Eigen-entropy based time series signatures to support multivariate time series classification

- Abhidnya Patharkar

- , Jiajing Huang

- & Naomi Gades

Article 11 July 2024 | Open Access

Exploring usage pattern variation of free-floating bike-sharing from a night travel perspective

- , Xianke Han

- & Lili Li

Early mutational signatures and transmissibility of SARS-CoV-2 Gamma and Lambda variants in Chile

- Karen Y. Oróstica

- , Sebastian B. Mohr

- & Seba Contreras

Article 10 July 2024 | Open Access

Optimizing the location of vaccination sites to stop a zoonotic epidemic

- Ricardo Castillo-Neyra

- , Sherrie Xie

- & Michael Z. Levy

Article 08 July 2024 | Open Access

Integrating socio-psychological factors in the SEIR model optimized by a genetic algorithm for COVID-19 trend analysis

- Haonan Wang

- , Danhong Wu

- & Junhui Zhang

Article 05 July 2024 | Open Access

Research on bearing fault diagnosis based on improved genetic algorithm and BP neural network

- Zenghua Chen

- , Lingjian Zhu

- & Gang Xiong

Article 04 July 2024 | Open Access

Employees’ pro-environmental behavior in an organization: a case study in the UAE

- Nadin Alherimi

- , Zeki Marva

- & Ayman Alzaaterh

Article 03 July 2024 | Open Access

The predictive capability of several anthropometric indices for identifying the risk of metabolic syndrome and its components among industrial workers

- Ekaterina D. Konstantinova

- , Tatiana A. Maslakova

- & Svetlana Yu. Ogorodnikova

Article 02 July 2024 | Open Access

A bayesian spatio-temporal dynamic analysis of food security in Africa

- Adusei Bofa

- & Temesgen Zewotir

Research on the influencing factors of promoting flipped classroom teaching based on the integrated UTAUT model and learning engagement theory

- & Wang He

Article 28 June 2024 | Open Access

Peak response regularization for localization

- , Jinzhen Yao

- & Qintao Hu

Article 25 June 2024 | Open Access

Prediction and reliability analysis of shear strength of RC deep beams

- Khaled Megahed

Multistage time-to-event models improve survival inference by partitioning mortality processes of tracked organisms

- Suresh A. Sethi

- , Alex L. Koeberle

- & Kenneth Duren

Article 24 June 2024 | Open Access

Summarizing physical performance in professional soccer: development of a new composite index

- José M. Oliva-Lozano

- , Mattia Cefis

- & Ricardo Resta

Finding multifaceted communities in multiplex networks

- László Gadár

- & János Abonyi

Article 22 June 2024 | Open Access

Utilizing Bayesian inference in accelerated testing models under constant stress via ordered ranked set sampling and hybrid censoring with practical validation

- Atef F. Hashem

- , Naif Alotaibi

- & Alaa H. Abdel-Hamid

Predicting chronic wasting disease in white-tailed deer at the county scale using machine learning

- Md Sohel Ahmed

- , Brenda J. Hanley

- & Krysten L. Schuler

Article 21 June 2024 | Open Access

Properties, quantile regression, and application of bounded exponentiated Weibull distribution to COVID-19 data of mortality and survival rates

- Shakila Bashir

- , Bushra Masood

- & Iram Saleem

Article 20 June 2024 | Open Access

Cox proportional hazards regression in small studies of predictive biomarkers

- , V. H. Nguyen

- & M. Hauptmann

Article 17 June 2024 | Open Access

Multivariate testing and effect size measures for batch effect evaluation in radiomic features

- Hannah Horng

- , Christopher Scott

- & Russell T. Shinohara

Top 9 Statistical Tools Used in Research

Well-designed research requires a well-chosen study sample and a suitable choice of statistical tests. To plan an epidemiological study or a clinical trial, you’ll need a solid understanding of the data. Drawing improper inferences from it could lead to false conclusions and unethical behavior. And given the ocean of data available nowadays, it’s often a daunting task for researchers to gauge its credibility and analyze it statistically.

Fortunately, the many statistical tools on the market make such studies much more manageable. Statistical tools are used extensively in academia and research to study human, animal, and material behaviors and reactions.

Statistical tools aid in the interpretation and use of data. They can be used to evaluate and comprehend any form of data. Some statistical tools can help you see trends, forecast future sales, and create links between causes and effects. When you’re unsure where to go with your study, other tools can assist you in navigating through enormous amounts of data.

What is Statistics? And its Importance in Research

Statistics is the study of collecting, arranging, and interpreting data from samples and generalizing the results to the total population. Also known as the “Science of Data,” it allows us to derive conclusions from a data set. It may also help people in all industries answer research or business questions and forecast outcomes, such as what show you should watch next on your favorite video app.

Statistics is a technique that social scientists, such as psychologists, use to examine data and answer research questions. Scientists raise a wide range of questions that statistics can answer. Moreover, it lends credibility and legitimacy to research. If two research publications are presented, one without statistics and the other with statistical analysis supporting each assertion, readers are more likely to trust the latter.

Statistical Tools Used in Research

Researchers often cannot discern a simple truth from a set of data. They can only draw conclusions from data after statistical analysis. Performing a statistical analysis, however, is a difficult task. This is where statistical tools come into play. Researchers can use statistical tools to back up their claims, make sense of a vast set of data, graphically show complex data, or help clarify many things in a short period.

Let’s go through the top 9 best statistical tools used in research below:

SPSS first stores and organizes the data, then compiles the data set to generate appropriate output. SPSS is intended to work with a wide range of variable data formats.

R is a statistical computing and graphics programming language that you may use to clean, analyze and graph your data. It is frequently used to estimate and display results by researchers from various fields and lecturers of statistics and research methodologies. It’s free, making it an appealing option, but it relies upon programming code rather than drop-down menus or buttons.

Many big tech companies are using SAS due to its support and integration for vast teams. Setting up the tool might be a bit time-consuming initially, but once it’s up and running, it’ll surely streamline your statistical processes.

Moreover, MATLAB provides a multi-paradigm numerical computing environment, which means that the language may be used for both procedural and object-oriented programming. MATLAB is well suited to matrix manipulation, plotting of functions and data, algorithm implementation, and user interface design, among other things. Last but not least, MATLAB can also interface with programs written in other programming languages.

Tableau is a data visualization program that is among the most capable on the market. Data visualization is a commonly used approach in data analytics. In only a few minutes, you can use Tableau to produce a visualization of a large amount of data, which helps the data analyst make quick decisions. It connects to a large number of data sources, including online analytical processing cubes, cloud databases, and spreadsheets. It also provides users with a drag-and-drop interface, so the user only needs to drag and drop the data set sheet into Tableau and set the filters according to their needs.

Some of the highlights of Tableau are:

7. MS EXCEL:

Microsoft Excel is undoubtedly one of the best and most used statistical tools for beginners looking to do basic data analysis. It provides data analytics specialists with cutting-edge solutions and can be used for both data visualization and simple statistics. Furthermore, it is the most suitable statistical tool for individuals who wish to apply fundamental data analysis approaches to their data.

You can apply various formulas and functions to your data in Excel without prior knowledge of statistics. The learning curve is gentle, and even newcomers can achieve good results quickly since everything is just a click away. This makes Excel a great choice for amateurs and beginners alike.

8. RAPIDMINER:

RapidMiner is a valuable platform for data preparation, machine learning, and the deployment of predictive models. RapidMiner makes it simple to develop a data model from the beginning to the end. It comes with a complete data science suite. Machine learning, deep learning, text mining, and predictive analytics are all possible with it.

9. APACHE HADOOP:

So, if you have massive data on your hands and want something that doesn’t slow you down and works in a distributed way, Hadoop is the way to go.

Descriptive Statistics: Reporting the Answers to the 5 Basic Questions of Who, What, Why, When, Where, and a Sixth, So What?

Affiliation.

- 1 From the Department of Surgery and Perioperative Care, Dell Medical School at the University of Texas at Austin, Austin, Texas.

- PMID: 28891910

- DOI: 10.1213/ANE.0000000000002471

Descriptive statistics are specific methods basically used to calculate, describe, and summarize collected research data in a logical, meaningful, and efficient way. Descriptive statistics are reported numerically in the manuscript text and/or in its tables, or graphically in its figures. This basic statistical tutorial discusses a series of fundamental concepts about descriptive statistics and their reporting. The mean, median, and mode are 3 measures of the center or central tendency of a set of data. In addition to a measure of its central tendency (mean, median, or mode), another important characteristic of a research data set is its variability or dispersion (ie, spread). In simplest terms, variability is how much the individual recorded scores or observed values differ from one another. The range, standard deviation, and interquartile range are 3 measures of variability or dispersion. The standard deviation is typically reported for a mean, and the interquartile range for a median. Testing for statistical significance, along with calculating the observed treatment effect (or the strength of the association between an exposure and an outcome), and generating a corresponding confidence interval are 3 tools commonly used by researchers (and their collaborating biostatistician or epidemiologist) to validly make inferences and more generalized conclusions from their collected data and descriptive statistics. A number of journals, including Anesthesia & Analgesia, strongly encourage or require the reporting of pertinent confidence intervals. A confidence interval can be calculated for virtually any variable or outcome measure in an experimental, quasi-experimental, or observational research study design. Generally speaking, in a clinical trial, the confidence interval is the range of values within which the true treatment effect in the population likely resides. 
In an observational study, the confidence interval is the range of values within which the true strength of the association between the exposure and the outcome (eg, the risk ratio or odds ratio) in the population likely resides. There are many possible ways to graphically display or illustrate different types of data. While there is often latitude as to the choice of format, ultimately, the simplest and most comprehensible format is preferred. Common examples include a histogram, bar chart, line chart or line graph, pie chart, scatterplot, and box-and-whisker plot. Valid and reliable descriptive statistics can answer basic yet important questions about a research data set, namely: "Who, What, Why, When, Where, How, How Much?"
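
The measures named in the abstract above can be illustrated with a minimal Python sketch using only the standard library. The function name `describe`, the sample values, and the choice of a normal-approximation confidence interval for the mean are assumptions made for illustration; they do not come from the article. For small samples, a t-based interval would be somewhat wider than the one computed here.

```python
import statistics
from math import sqrt


def describe(values, confidence=0.95):
    """Summarize a numeric sample with common descriptive statistics.

    Hypothetical helper for illustration only.
    """
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)

    # Quartile cut points; the interquartile range is Q3 - Q1.
    q1, _, q3 = statistics.quantiles(values, n=4)

    # Normal-approximation confidence interval for the mean (an assumption;
    # a t-based interval is more appropriate for small samples).
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sd / sqrt(n)

    return {
        "n": n,
        "mean": mean,                          # central tendency
        "median": statistics.median(values),   # central tendency
        "mode": statistics.mode(values),       # central tendency
        "range": max(values) - min(values),    # variability
        "sd": sd,                              # variability, reported with the mean
        "iqr": q3 - q1,                        # variability, reported with the median
        "ci_mean": (mean - half_width, mean + half_width),
    }


# Made-up example data, e.g. pain scores on a 0-10 scale.
scores = [2, 3, 3, 4, 5, 5, 5, 6, 7, 10]
summary = describe(scores)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Pairing the standard deviation with the mean and the interquartile range with the median follows the reporting convention described in the abstract. Note that quartile definitions differ between software packages, so the interquartile range may not match other tools exactly.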

Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that sinking feeling you get when you are asked to analyze your data? Once you have collected the raw data, you need to test your hypothesis statistically. Presenting your numerical data with sound statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they are involved in every stage of it: planning, designing, collecting data, analyzing, drawing meaningful interpretations, and reporting research findings. Furthermore, the results acquired from a research project are meaningless raw data unless analyzed with statistical tools. Therefore, applying statistics in research is necessary to justify research findings. In this article, we discuss how statistical methods can help draw meaningful conclusions from biological studies.

Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization, and analysis of data, and with drawing inferences about a whole population from a sample. Moreover, it aids in designing a study more meticulously and provides logical reasoning for reaching a conclusion about the hypothesis. Biology focuses on the study of living organisms and their complex pathways, which are highly dynamic and cannot be explained by logical reasoning alone. Statistics defines and explains the patterns in a study based on the samples used. To be precise, statistics reveals the trends in the conducted study.

Biological researchers often disregard statistics during research planning and use statistical tools only at the end of their experiment. This gives rise to a complicated set of results that are not easily analyzed with statistical tools. Statistics in research can help a researcher approach the study in a stepwise manner, in which the statistical analysis follows these steps:

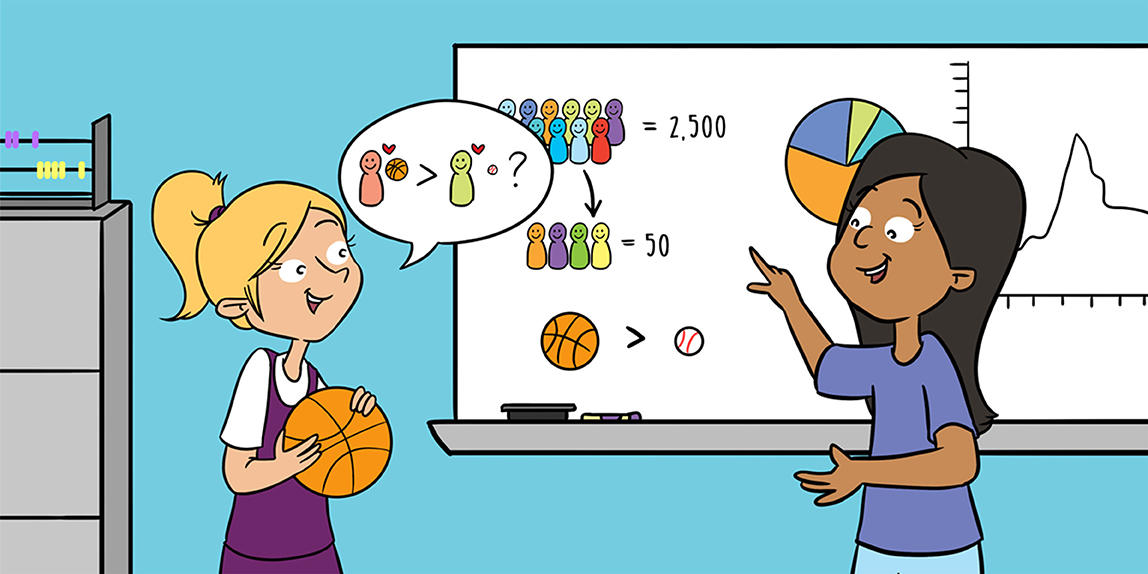

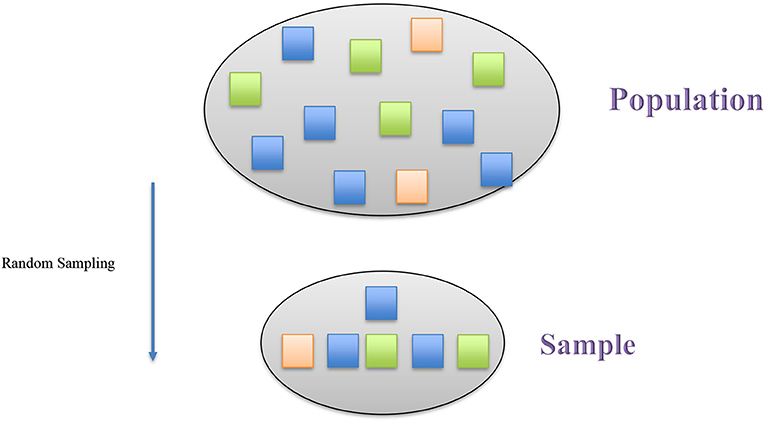

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and selecting the right number of replicate experiments. Basic statistical principles, such as randomness and the law of large numbers, guide this choice. Statistics teaches how drawing a sufficiently large random sample from the population helps extrapolate findings and reduce experimental bias and error.
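To make the idea of choosing a sample size concrete, here is a minimal sketch of one common textbook approach: the normal-approximation formula for comparing two group means. The function name, the effect size, and the standard deviation are illustrative assumptions, not values from this article, and dedicated power-analysis software may give slightly larger numbers because it uses the t distribution.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided comparison of two means.

    Normal-approximation formula:
        n = 2 * ((z_(1 - alpha/2) + z_power) * sd / effect) ** 2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * ((z_alpha + z_power) * sd / effect) ** 2)


# Hypothetical planning values: detect a 5-unit difference when sd is 10.
n = sample_size_per_group(effect=5.0, sd=10.0)
print(f"Samples needed per group: {n}")
```

Smaller expected effects or noisier measurements push the required sample size up quickly, which is why this calculation belongs in the planning stage rather than after data collection.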

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that a conclusion is statistically significant. To achieve this, a researcher must state a hypothesis before examining the distribution of the data. Furthermore, statistics in research helps interpret whether the data cluster near the mean or spread across the distribution. These trends help analyze the sample and test the hypothesis.
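As an illustration of hypothesis testing, the sketch below runs a two-sided permutation test on the difference between two group means. This is one of several possible tests and is chosen here only because it needs nothing beyond the Python standard library; the function name and the measurements are invented for the example.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=5000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    Under the null hypothesis the group labels are exchangeable, so we
    reshuffle the pooled values and count how often the reshuffled
    difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never zero.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical measurements from a control and a treated group.
control = [4.1, 3.8, 4.4, 4.0, 3.9, 4.2]
treated = [5.0, 5.3, 4.9, 5.4, 5.1, 5.2]
p_value = permutation_test(control, treated)
print(f"p = {p_value:.4f}")
```

A small p-value indicates that a difference this large would rarely arise from random relabeling alone; it does not by itself say how large or important the effect is.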

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics in research assists in data analysis. This helps researchers draw sound conclusions from their experiments and observations. Concluding a study manually or from visual observation may give erroneous results; a thorough statistical analysis takes into consideration the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. Researchers can thus produce detailed and meaningful data to support their conclusions.

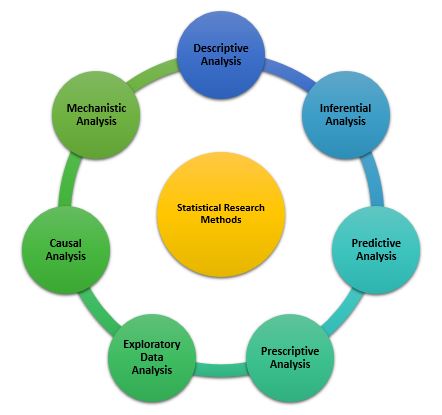

Types of Statistical Research Methods That Aid in Data Analysis

Statistical analysis is the process of finding patterns or trends in samples of data that help researchers anticipate situations and draw appropriate research conclusions. Based on the type of data, statistical analyses are of the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets into graphs and tables. It involves processes such as tabulation, measures of central tendency, measures of dispersion or variance, and skewness measurements.
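A short sketch of a descriptive summary, using Python's built-in `statistics` module, might look like the following. The variable name and the measurements are made up for illustration.

```python
from statistics import mean, median, mode, stdev

# Hypothetical seedling heights in centimetres.
heights_cm = [12.1, 13.4, 12.8, 13.4, 14.0, 12.5, 13.4, 15.2]

summary = {
    "n": len(heights_cm),
    "mean": mean(heights_cm),                      # central tendency
    "median": median(heights_cm),                  # central tendency
    "mode": mode(heights_cm),                      # most frequent value
    "range": (min(heights_cm), max(heights_cm)),   # dispersion
    "sample_sd": stdev(heights_cm),                # dispersion (n - 1 denominator)
}

for name, value in summary.items():
    print(f"{name}: {value}")
```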

2. Inferential Analysis

Inferential statistical analysis extrapolates the data acquired from a small sample to the complete population. This analysis helps draw conclusions and make decisions about the whole population on the basis of sample data. It is a highly recommended statistical method for research projects that work with a smaller sample size and aim to extrapolate conclusions to a larger population.
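One simple way to extrapolate from a sample to a population is a confidence interval for the mean. The sketch below uses a normal approximation, which is an assumption made to keep the example within the standard library; for small samples a t-based interval is usually preferred and will be somewhat wider. The function name and data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    n = len(sample)
    centre = mean(sample)
    standard_error = stdev(sample) / sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return centre - z * standard_error, centre + z * standard_error


# Hypothetical enzyme activity readings from 10 samples.
sample = [8.2, 7.9, 8.5, 8.1, 7.7, 8.4, 8.0, 8.3, 7.8, 8.6]
low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```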

3. Predictive Analysis

Predictive analysis is used to predict future events. It is used by marketing companies, insurance organizations, online service providers, and financial corporations.

4. Prescriptive Analysis

Prescriptive analysis examines data to find out what can be done next. It is widely used in business analysis for finding the best possible outcome for a situation. It is closely related to descriptive and predictive analysis. However, prescriptive analysis deals with recommending the most appropriate option among the available choices.

5. Exploratory Data Analysis

Exploratory data analysis (EDA) is generally the first step of the data analysis process and is conducted before any other statistical analysis technique. It focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect the collected data for missing values, and obtain maximum insight.

6. Causal Analysis

Causal analysis assists in understanding and determining the reasons why things happen the way they do. This analysis helps identify the root cause of failures or simply find the basic reason why something could happen. For example, causal analysis is used to understand what will happen to a given variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and the biological sciences. It aims to understand how individual changes in some variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Researchers in the biological field often find statistical analysis the scariest aspect of completing research. However, statistical tools can help researchers understand what to do with their data and how to interpret the results, making the process as easy as possible.

1. Statistical Package for the Social Sciences (SPSS)

It is a widely used software package for human behavior research. SPSS can compile descriptive statistics, as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analysis or carry out more advanced statistical processing.

2. R (R Foundation for Statistical Computing)

This software package is used in human behavior research and other fields. R is a powerful tool, but it has a steep learning curve and requires a certain level of coding. It comes with an active community that is engaged in building and enhancing the software and its associated packages.

3. MATLAB (The MathWorks)

It is an analytical platform and a programming language. Researchers and engineers use this software to write their own code to help answer their research questions. While MATLAB can be a difficult tool for novices, it offers flexibility in terms of what the researcher needs.

4. Microsoft Excel

MS Excel is not the best solution for statistical analysis in research, but it offers a wide variety of tools for data visualization and simple statistics. It is easy to generate summaries and customizable graphs and figures. MS Excel is the most accessible option for those wanting to start with statistics.

5. Statistical Analysis System (SAS)

It is a statistical platform used in business, healthcare, and human behavior research alike. It can carry out advanced analyses and produce publication-worthy figures, tables, and charts.

6. GraphPad Prism

It is a premium software package primarily used by biology researchers, but it offers a range of features that can be used in various other fields. Similar to SPSS, GraphPad provides a scripting option to automate analyses and carry out complex statistical calculations.

7. Minitab

This software offers basic as well as advanced statistical tools for data analysis. However, as with GraphPad and SPSS, automating analyses in Minitab requires some command of scripting.

Use of Statistical Tools In Research and Data Analysis

Statistical tools help manage large data sets. Many biological studies use large data sets to analyze trends and patterns. Using statistical tools therefore becomes essential, as they make data processing more convenient.

Following these steps will help biological researchers present the statistics in their research in detail, develop accurate hypotheses, and use the correct tools to test them.

There is a range of statistical tools that can help researchers manage their research data and improve the outcome of their research through better interpretation of data. Which tool you use depends on your research question, your knowledge of statistics, and your experience in coding.

Statistics for Research Students

(2 reviews)

Erich C Fein, Toowoomba, Australia

John Gilmour, Toowoomba, Australia

Tanya Machin, Toowoomba, Australia

Liam Hendry, Toowoomba, Australia

Copyright Year: 2022

ISBN 13: 9780645326109

Publisher: University of Southern Queensland

Language: English

Reviewed by Sojib Bin Zaman, Assistant Professor, James Madison University on 3/18/24

Comprehensiveness rating: 5

From exploring data in Chapter One to learning advanced methodologies such as moderation and mediation in Chapter Seven, the reader is guided through the entire process of statistical methodology. With each chapter covering a different statistical technique and methodology, students gain a comprehensive understanding of statistical research techniques.

Content Accuracy rating: 5

During my review of the textbook, I did not find any notable errors or omissions. In my opinion, the material was comprehensive, resulting in an enjoyable learning experience.

Relevance/Longevity rating: 5

A majority of the textbook's content is aligned with current trends, advancements, and enduring principles in the field of statistics. Several emerging methodologies and technologies are incorporated into this textbook to enhance students' statistical knowledge. It will be a valuable resource in the long run if students and researchers can properly utilize this textbook.

Clarity rating: 5

A clear explanation of complex statistical concepts such as moderation and mediation is provided in the writing style. Examples and problem sets are provided in the textbook in a comprehensive and well-explained manner.

Consistency rating: 5

Each chapter maintains consistent formatting and language, with resources organized consistently. Headings and subheadings worked well.

Modularity rating: 5

The textbook is well-structured, featuring cohesive chapters that flow smoothly from one to another. It is carefully crafted with a focus on defining terms clearly, facilitating understanding, and ensuring logical flow.

Organization/Structure/Flow rating: 5

From basic to advanced concepts, this book provides clarity of progression, logical arranging of sections and chapters, and effective headings and subheadings that guide readers. Further, the organization provides students with a lot of information on complex statistical methodologies.

Interface rating: 5

The available formats included PDFs, online access, and e-books. The e-book interface was particularly appealing to me, as it provided seamless navigation and viewing of content without compromising usability.

Grammatical Errors rating: 5

I found no significant errors in this document, and the overall quality of the writing was commendable. There was a high level of clarity and coherence in the text, which contributed to a positive reading experience.

Cultural Relevance rating: 5

The content of the book, as well as its accompanying examples, demonstrates a dedication to inclusivity by taking into account cultural diversity and a variety of perspectives. Furthermore, the material actively promotes cultural diversity, which enables readers to develop a deeper understanding of various cultural contexts and experiences.

In summary, this textbook provides a comprehensive resource tailored for advanced statistics courses, characterized by meticulous organization and practical supplementary materials. This book also provides valuable insights into the interpretation of computer output that enhance a greater understanding of each concept presented.

Reviewed by Zhuanzhuan Ma, Assistant Professor, University of Texas Rio Grande Valley on 3/7/24

The textbook covers all necessary areas and topics for students who want to conduct research in statistics. It includes foundational concepts, application methods, and advanced statistical techniques relevant to research methodologies.

The textbook presents statistical methods and data accurately, with up-to-date statistical practices and examples.

Relevance/Longevity rating: 4

The textbook's content is relevant to current research practices. The book includes contemporary examples and case studies that are currently prevalent in research communities. One small drawback is that the textbook does not include example code for conducting data analysis.

The textbook breaks down complex statistical methods into understandable segments. All the concepts are clearly explained. The authors use diagrams, examples, and a variety of explanations to facilitate learning for students with varying levels of background knowledge.

The terminology, framework, and presentation style (e.g. concepts, methodologies, and examples) seem consistent throughout the book.

The textbook is organized so that each chapter and section can be used independently without losing the context necessary for understanding. The modular structure also allows instructors and students to adapt the materials for different study plans.

The textbook is well-organized and progresses from basic concepts to more complex methods, making it easier for students to follow along. There is a logical flow of the content.

The digital format of the textbook has an interface that includes the design, layout, and navigational features. It is easy for readers to use.

The quality of writing is very high. The well-written text helps both instructors and students follow the ideas clearly.

The textbook does not perpetuate stereotypes or biases and is inclusive in its examples, language, and perspectives.

Table of Contents

- Acknowledgement of Country

- Accessibility Information

- About the Authors

- Introduction

- I. Chapter One - Exploring Your Data

- II. Chapter Two - Test Statistics, p Values, Confidence Intervals and Effect Sizes

- III. Chapter Three- Comparing Two Group Means

- IV. Chapter Four - Comparing Associations Between Two Variables

- V. Chapter Five- Comparing Associations Between Multiple Variables

- VI. Chapter Six- Comparing Three or More Group Means

- VII. Chapter Seven- Moderation and Mediation Analyses

- VIII. Chapter Eight- Factor Analysis and Scale Reliability

- IX. Chapter Nine- Nonparametric Statistics

About the Book

This book aims to help you understand and navigate statistical concepts and the main types of statistical analyses essential for research students.

About the Contributors

Dr Erich C. Fein is an Associate Professor at the University of Southern Queensland. He received substantial training in research methods and statistics during his PhD program at Ohio State University. He currently teaches four courses in research methods and statistics. His research involves leadership, occupational health, and motivation, as well as issues related to research methods, as in the article “Safeguarding Access and Safeguarding Meaning as Strategies for Achieving Confidentiality.”

Dr John Gilmour is a Lecturer at the University of Southern Queensland and a Postdoctoral Research Fellow at the University of Queensland. His research focuses on the locational and temporal analyses of crime, and the evaluation of police training and procedures. John has worked across many different sectors including PTSD, social media, criminology, and medicine.

Dr Tanya Machin is a Senior Lecturer and Associate Dean at the University of Southern Queensland. Her research focuses on social media and technology across the lifespan. Tanya has co-taught Honours research methods with Erich, and is also interested in ethics and qualitative research methods. Tanya has worked across many different sectors including primary schools, financial services, and mental health.

Dr Liam Hendry is a Lecturer at the University of Southern Queensland. His research interests focus on long-term and short-term memory, measurement of human memory, attention, learning & diverse aspects of cognitive psychology.

Introduction: Statistics as a Research Tool

- First Online: 24 February 2021

David Weisburd, Chester Britt, David B. Wilson & Alese Wooditch

Statistics seem intimidating because they are associated with complex mathematical formulas and computations. Although some knowledge of math is required, an understanding of the concepts is much more important than an in-depth understanding of the computations. The researcher’s aim in using statistics is to communicate findings in a clear and simple form. As a result, the researcher should always choose the simplest statistic appropriate for answering the research question. Statistics offer commonsense solutions to research problems. The following principles apply to all types of statistics: (1) in developing statistics, we seek to reduce the level of error as much as possible; (2) statistics based on more information are generally preferred over those based on less information; (3) outliers present a significant problem in choosing and interpreting statistics; and (4) the researcher must strive to systematize the procedures used in data collection and analysis. There are two principal uses of statistics discussed in this book. In descriptive statistics, the researcher summarizes large amounts of information in an efficient manner. Two types of descriptive statistics that go hand in hand are measures of central tendency, which describe the characteristics of the average case, and measures of dispersion, which tell us just how typical this average case is. We use inferential statistics to make statements about a population on the basis of a sample drawn from that population.

Author information

Authors and affiliations.

Department of Criminology, Law and Society, George Mason University, Fairfax, VA, USA

David Weisburd & David B. Wilson

Institute of Criminology, Faculty of Law, Hebrew University of Jerusalem, Jerusalem, Israel

David Weisburd

Iowa State University, Ames, IA, USA

Chester Britt

Department of Criminal Justice, Temple University, Philadelphia, PA, USA

Alese Wooditch

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Weisburd, D., Britt, C., Wilson, D.B., Wooditch, A. (2020). Introduction: Statistics as a Research Tool. In: Basic Statistics in Criminology and Criminal Justice. Springer, Cham. https://doi.org/10.1007/978-3-030-47967-1_1

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-47966-4

Online ISBN : 978-3-030-47967-1

Understanding and Using Statistical Methods

Statistics is a set of tools used to organize and analyze data. Data must either be numeric in origin or transformed by researchers into numbers. For instance, statistics could be used to analyze percentage scores English students receive on a grammar test: the percentage scores ranging from 0 to 100 are already in numeric form. Statistics could also be used to analyze grades on an essay by assigning numeric values to the letter grades, e.g., A=4, B=3, C=2, D=1, and F=0.

Employing statistics serves two purposes: (1) description and (2) prediction. Statistics are used to describe the characteristics of groups. These characteristics are referred to as variables. Data is gathered and recorded for each variable. Descriptive statistics can then be used to reveal the distribution of the data in each variable.

Statistics is also frequently used for purposes of prediction. Prediction is based on the concept of generalizability: if enough data is compiled about a particular context (e.g., students studying writing in a specific set of classrooms), the patterns revealed through analysis of the data collected about that context can be generalized to (or predicted to occur in) similar contexts. The prediction of what will happen in a similar context is probabilistic. That is, the researcher is not certain that the same things will happen in other contexts; instead, the researcher can only reasonably expect that the same things will happen.

Prediction is a method employed by individuals throughout daily life. For instance, if writing students begin class every day for the first half of the semester with a five-minute freewriting exercise, then they will likely come to class the first day of the second half of the semester prepared to again freewrite for the first five minutes of class. The students will have made a prediction about the class content based on their previous experiences in the class: Because they began all previous class sessions with freewriting, it would be probable that their next class session will begin the same way. Statistics is used to perform the same function; the difference is that precise probabilities are determined in terms of the percentage chance that an outcome will occur, complete with a range of error. Prediction is a primary goal of inferential statistics.

Revealing Patterns Using Descriptive Statistics

Descriptive statistics, not surprisingly, "describe" data that have been collected. Commonly used descriptive statistics include frequency counts, ranges (high and low scores or values), means, modes, median scores, and standard deviations. Two concepts are essential to understanding descriptive statistics: variables and distributions.

Statistics are used to explore numerical data (Levin, 1991). Numerical data are observations which are recorded in the form of numbers (Runyon, 1976). Numbers are variable in nature, which means that quantities vary according to certain factors. For example, when analyzing the grades on student essays, scores will vary for reasons such as the writing ability of the student, the student's knowledge of the subject, and so on. In statistics, these reasons are called variables. Variables are divided into three basic categories:

Nominal Variables

Nominal variables classify data into categories. This process involves labeling categories and then counting frequencies of occurrence (Runyon, 1991). A researcher might wish to compare essay grades between male and female students. Tabulations would be compiled using the categories "male" and "female." Sex would be a nominal variable. Note that the categories themselves are not quantified. Maleness and femaleness are not numerical in nature; rather, counting the frequency of each category yields quantified data, such as 11 males and 9 females.

Ordinal Variables

Ordinal variables order (or rank) data in terms of degree. Ordinal variables do not establish the numeric difference between data points. They indicate only that one data point is ranked higher or lower than another (Runyon, 1991). For instance, a researcher might want to analyze the letter grades given on student essays. An A would be ranked higher than a B, and a B higher than a C. However, the difference between these data points, the precise distance between an A and a B, is not defined. Letter grades are an example of an ordinal variable.

Interval Variables

Interval variables score data. Thus the order of data is known as well as the precise numeric distance between data points (Runyon, 1991). A researcher might analyze the actual percentage scores of the essays, assuming that percentage scores are given by the instructor. A score of 98 (A) ranks higher than a score of 87 (B), which ranks higher than a score of 72 (C). Not only is the order of these three data points known, but so is the exact distance between them -- 11 percentage points between the first two, 15 percentage points between the second two and 26 percentage points between the first and last data points.
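The three kinds of variables described above permit different operations, which a brief sketch can make explicit. The counts and the three percentage scores come from the examples in the text; the list of letter grades and the numeric ranks assigned to them are illustrative assumptions.

```python
from collections import Counter

# Nominal: categories can only be labeled and counted.
sexes = ["male"] * 11 + ["female"] * 9
counts = Counter(sexes)
print(counts["male"], counts["female"])

# Ordinal: categories can be ranked, but the distance between ranks
# is undefined, so only the ordering is used here.
grade_rank = {"F": 0, "D": 1, "C": 2, "B": 3, "A": 4}
letter_grades = ["B", "A", "C", "A", "D"]  # hypothetical essay grades
ranked = sorted(letter_grades, key=grade_rank.get, reverse=True)
print(ranked)

# Interval: the numeric distance between data points is meaningful.
scores = [98, 87, 72]
gaps = [scores[i] - scores[i + 1] for i in range(len(scores) - 1)]
total_gap = scores[0] - scores[-1]
print(gaps, total_gap)
```

Averaging the ordinal ranks would silently treat the step from A to B as equal to the step from B to C, which is exactly the assumption an ordinal variable does not support.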

Distributions

A distribution is a graphic representation of data. The line formed by connecting data points is called a frequency distribution. This line may take many shapes. The single most important shape is that of the bell-shaped curve, which characterizes the distribution as "normal." A perfectly normal distribution is only a theoretical ideal. This ideal, however, is an essential ingredient in statistical decision-making (Levin, 1991). A perfectly normal distribution is a mathematical construct which carries with it certain mathematical properties helpful in describing the attributes of the distribution. Although a frequency distribution based on actual data points seldom, if ever, completely matches a perfectly normal distribution, a frequency distribution often can approach such a normal curve.

The more closely a frequency distribution resembles a normal curve, the more probable it is that the distribution shares the mathematical properties of the normal curve. This is an important factor in describing the characteristics of a frequency distribution. As a frequency distribution approaches a normal curve, generalizations about the data set from which the distribution was derived can be made with greater certainty. And it is this notion of generalizability upon which statistics is founded. It is important to remember that not all frequency distributions approach a normal curve. Some are skewed. When a frequency distribution is skewed, the characteristics inherent to a normal curve no longer apply.
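One way to check whether a set of scores is skewed is to compute a skewness coefficient. The sketch below uses the population form (the mean of the cubed standardized deviations), which is one of several definitions in use; the function name and both data sets are invented for illustration.

```python
from statistics import mean, pstdev


def skewness(values):
    """Population skewness: the mean of cubed z-scores.

    Values near 0 suggest a symmetric distribution; positive values
    indicate a longer right tail, negative values a longer left tail.
    """
    m = mean(values)
    s = pstdev(values)
    return sum(((x - m) / s) ** 3 for x in values) / len(values)


symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 2, 2, 3, 10]

print(f"symmetric:    {skewness(symmetric):.3f}")
print(f"right-skewed: {skewness(right_skewed):.3f}")
```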

Making Predictions Using Inferential Statistics

Inferential statistics are used to draw conclusions and make predictions based on the descriptions of data. In this section, we explore inferential statistics by using an extended example of experimental studies. Key concepts used in our discussion are probability, populations, and sampling.

Experiments

A typical experimental study involves collecting data on the behaviors, attitudes, or actions of two or more groups and attempting to answer a research question (often called a hypothesis). Based on the analysis of the data, a researcher might then attempt to develop a causal model that can be generalized to populations.

A question that could be addressed through experimental research is "Does grammar-based writing instruction produce better writers than process-based writing instruction?" Because it would be impractical, if not impossible, to observe, interview, or survey all first-year writing students and instructors in classes using one or the other of these instructional approaches, a researcher would study a sample – or a subset – of a population. Sampling – or the creation of this subset of a population – is used by many researchers who desire to make sense of some phenomenon.

To analyze differences in the ability of student writers who are taught in each type of classroom, the researcher would compare the writing performance of the two groups of students.

Dependent Variables

In an experimental study, a variable whose score depends on (or is determined or caused by) another variable is called a dependent variable. For instance, an experiment might explore the extent to which the writing quality of final drafts of student papers is affected by the kind of instruction they received. In this case, the dependent variable would be writing quality of final drafts.

Independent Variables

In an experimental study, a variable that determines (or causes) the score of a dependent variable is called an independent variable. For instance, an experiment might explore the extent to which the writing quality of final drafts of student papers is affected by the kind of instruction they received. In this case, the independent variable would be the kind of instruction students received.

Probability

Beginning researchers most often use the word probability to express a subjective judgment about the likelihood, or degree of certainty, that a particular event will occur. People say such things as: "It will probably rain tomorrow." "It is unlikely that we will win the ball game." It is possible to assign a number to the event being predicted, a number between 0 and 1, which represents the degree of confidence that the event will occur. For example, a student might say that the likelihood an instructor will give an exam next week is about 90 percent, or .9. Where 100 percent, or 1.00, represents certainty, .9 would mean the student is almost certain the instructor will give an exam. If the student assigned the number .6, the likelihood of an exam would be just slightly greater than the likelihood of no exam. A rating of 0 would indicate complete certainty that no exam would be given (Shoeninger, 1971).

The probability of a particular outcome or set of outcomes is called a p-value. In our discussion, a p-value will be symbolized by a p followed by parentheses enclosing a symbol of the outcome or set of outcomes. For example, p(X) should be read, "the probability of a given X score" (Shoeninger, 1971). Thus p(exam) should be read, "the probability an instructor will give an exam next week."

A population is a group which is studied. In educational research, the population is usually a group of people. Researchers seldom are able to study every member of a population. Usually, they instead study a representative sample – or subset – of a population. Researchers then generalize their findings about the sample to the population as a whole.

Sampling is performed so that a population under study can be reduced to a manageable size. This can be accomplished via random sampling, discussed below, or via matching.

Random sampling is a procedure used by researchers in which all samples of a particular size have an equal chance to be chosen for an observation, experiment, etc. (Runyon and Haber, 1976). There is no predetermination as to which members are chosen for the sample. This type of sampling is done in order to minimize scientific biases, and it offers the greatest likelihood that a sample will indeed be representative of the larger population. Note that the more closely a sample distribution approximates the population distribution, the more generalizable the results of the sample study are to the population. Notions of probability apply here. Random sampling provides the greatest probability that the distribution of scores in a sample will closely approximate the distribution of scores in the overall population.
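The procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the population of 500 student ID numbers, the sample size of 25, and the seed value are all hypothetical and do not come from any study discussed here.

```python
import random

# Hypothetical population: ID numbers for 500 first-year writing students.
population = list(range(1, 501))

# A seeded generator makes the draw reproducible; the seed value is arbitrary.
rng = random.Random(2024)

# Simple random sample without replacement: every possible subset of
# 25 students has the same chance of being drawn.
sample = rng.sample(population, k=25)

print(sorted(sample))
```

Because nothing about an individual student influences whether that student is drawn, no member of the sample is predetermined.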

Matching is a method used by researchers to gain accurate and precise results of a study so that they may be applicable to a larger population. After a population has been examined and a sample has been chosen, a researcher must then consider variables, or extrinsic factors, that might affect the study. Matching methods apply when researchers are aware of extrinsic variables before conducting a study. Two methods used to match groups are:

Precision Matching

In precision matching, each member of an experimental group is matched one-to-one with a member of a control group. Both groups, in essence, have the same characteristics. Thus, the proposed causal relationship/model being examined allows for the probabilistic assumption that the result is generalizable.

Frequency Distribution

Frequency distribution is more manageable and efficient than precision matching. Instead of the one-to-one matching that precision matching requires, frequency distribution allows the comparison of an experimental and a control group through relevant variables. If three Communications majors and four English majors are chosen for the control group, then an equal proportion of three Communications majors and four English majors should be allotted to the experimental group. Of course, beyond their majors, the characteristics of the matched sets of participants may in fact be vastly different.

Although, in theory, matching tends to produce valid conclusions, a rather obvious difficulty arises in finding subjects which are compatible. Researchers may even believe that experimental and control groups are identical when, in fact, a number of variables have been overlooked. For these reasons, researchers tend to reject matching methods in favor of random sampling.

Statistics can be used to analyze individual variables, relationships among variables, and differences between groups. In this section, we explore a range of statistical methods for conducting these analyses.

Analyzing Individual Variables

The statistical procedures used to analyze a single variable describing a group (such as a population or representative sample) involve measures of central tendency and measures of variation. To explore these measures, a researcher first needs to consider the distribution, or range of values, of a particular variable in a population or sample. A normal distribution, when graphed, looks like a bell curve: it is symmetrical, and most of the scores cluster toward the middle. A skewed distribution simply means that the distribution of a population is not normal. The scores might cluster toward the right or the left side of the curve, for instance. Or there might be two or more clusters of scores, so that the distribution looks like a series of hills.

Once frequency distributions have been determined, researchers can calculate measures of central tendency and measures of variation. Measures of central tendency indicate averages of the distribution, and measures of variation indicate the spread, or range, of the distribution (Hinkle, Wiersma and Jurs 1988).

Measures of Central Tendency

Central tendency is measured in three ways: mean, median and mode. The mean is simply the average score of a distribution. The median is the center, or middle score within a distribution. The mode is the most frequent score within a distribution. In a normal distribution, the mean, median and mode are identical.

| Student | # of Crayons |
|---|---|
| A | 8 |
| B | 16 |
| C | 16 |
| D | 32 |
| E | 32 |
| F | 32 |
| G | 48 |
| H | 48 |
| J | 56 |
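As a minimal sketch, the three measures can be computed for the crayon counts in the table above with Python's standard `statistics` module. The variable names are illustrative only.

```python
import statistics

# Crayon counts for students A through J, taken from the table above.
crayons = [8, 16, 16, 32, 32, 32, 48, 48, 56]

mean = statistics.mean(crayons)      # sum of the scores divided by their number
median = statistics.median(crayons)  # middle score once the scores are sorted
mode = statistics.mode(crayons)      # most frequently occurring score

print("mean:", mean, "median:", median, "mode:", mode)
```

For this data set the scores sum to 288 across 9 students, so the mean is 32; the fifth of the nine sorted scores is 32; and 32 occurs three times, more often than any other value. All three measures coincide, as they would in a normal distribution.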

Measures of Variation

Measures of variation describe the spread of the distribution, relative to the measures of central tendency. Where the measures of central tendency are specific data points, measures of variation are distances between various points within the distribution. Variation is measured in terms of range, mean deviation, variance, and standard deviation (Hinkle, Wiersma and Jurs 1988).

The range is the distance between the lowest data point and the highest data point. Deviation scores are the distances between each data point and the mean.

Mean deviation is the average of the absolute values of the deviation scores; that is, mean deviation is the average distance between the mean and the data points. Closely related to the measure of mean deviation is the measure of variance.

Variance also indicates a relationship between the mean of a distribution and the data points; it is determined by averaging the squared deviations. Squaring the deviations instead of taking their absolute values allows for greater flexibility in further algebraic manipulations of the data. Another measure of variation is the standard deviation.

Standard deviation is the square root of the variance. This calculation is useful because it allows for the same flexibility as variance regarding further calculations and yet also expresses variation in the same units as the original measurements (Hinkle, Wiersma and Jurs 1988).
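The four measures can be illustrated with the crayon counts from the earlier table. The sketch below assumes the "averaging" described above divides by the number of data points (the population form); many textbooks instead divide by one less than that number when working with a sample.

```python
import statistics

# Crayon counts from the earlier table.
crayons = [8, 16, 16, 32, 32, 32, 48, 48, 56]
n = len(crayons)
mean = statistics.mean(crayons)

# Range: distance between the lowest and highest data points.
data_range = max(crayons) - min(crayons)

# Deviation scores: distance of each data point from the mean.
deviations = [x - mean for x in crayons]

# Mean deviation: average of the absolute deviation scores.
mean_deviation = sum(abs(d) for d in deviations) / n

# Variance: average of the squared deviation scores (dividing by n is an assumption).
variance = sum(d ** 2 for d in deviations) / n

# Standard deviation: square root of the variance, in the original units.
std_dev = variance ** 0.5

print(data_range, round(mean_deviation, 2), round(variance, 2), round(std_dev, 2))
```

The standard library's `statistics.pvariance` and `statistics.pstdev` compute the same population variance and standard deviation directly.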

Analyzing Differences Between Groups

Statistical tests can be used to analyze differences in the scores of two or more groups. The following statistical tests are commonly used to analyze differences between groups:

A t-test is used to determine if the scores of two groups differ on a single variable. A t-test is designed to test for the differences in mean scores. For instance, you could use a t-test to determine whether writing ability differs between students in two classrooms.

Note: A t-test is appropriate only when comparing two sets of scores. It is useful in analyzing scores of two groups of participants on a particular variable or in analyzing scores of a single group of participants on two variables.
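A comparison of mean scores between two separate groups might be sketched as follows. The essay scores for the two classrooms are invented for illustration, and the sketch assumes the classic pooled-variance form of the t statistic, which treats the two groups as having similar variability; the function name `pooled_t` is likewise hypothetical.

```python
import math
import statistics

# Hypothetical essay scores for students in two classrooms.
class_a = [78, 85, 90, 72, 88, 81]
class_b = [70, 75, 80, 68, 74, 77]

def pooled_t(x, y):
    """Two-sample t statistic using a pooled variance estimate."""
    nx, ny = len(x), len(y)
    # statistics.variance is the sample variance (divides by n - 1).
    pooled_var = ((nx - 1) * statistics.variance(x)
                  + (ny - 1) * statistics.variance(y)) / (nx + ny - 2)
    standard_error = math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return (statistics.mean(x) - statistics.mean(y)) / standard_error

t = pooled_t(class_a, class_b)
df = len(class_a) + len(class_b) - 2  # degrees of freedom

print("t =", round(t, 3), "with", df, "degrees of freedom")
```

The resulting t value would then be compared against a critical value for the given degrees of freedom to judge whether the difference in means is larger than chance alone would plausibly produce.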

Matched Pairs T-Test

This type of t-test could be used to determine if the scores of the same participants in a study differ under different conditions. For instance, this sort of t-test could be used to determine if people write better essays after taking a writing class than they did before taking the writing class.
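The before-and-after comparison described above can be sketched by working with the difference in each participant's two scores. The scores below are hypothetical, and the calculation shown is the standard paired form: the mean difference divided by its standard error.

```python
import math
import statistics

# Hypothetical essay scores for the same six participants,
# before and after taking a writing class.
before = [62, 70, 58, 75, 66, 71]
after = [68, 74, 63, 75, 72, 78]

# Each participant contributes one difference score.
diffs = [a - b for a, b in zip(after, before)]
n = len(diffs)

# Paired t statistic: mean difference over its standard error.
# statistics.stdev is the sample standard deviation (divides by n - 1).
t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
df = n - 1  # degrees of freedom

print("t =", round(t, 3), "with", df, "degrees of freedom")
```

Because each participant serves as his or her own comparison, differences between individuals drop out of the calculation, and only the change within each participant is tested.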

Analysis of Variance (ANOVA)