Statistical Papers

Statistical Papers is a forum for presentation and critical assessment of statistical methods encouraging the discussion of methodological foundations and potential applications.

- The Journal stresses statistical methods that have broad applications, giving special attention to those relevant to the economic and social sciences.

- Covers all topics of modern data science, such as frequentist and Bayesian design and inference as well as statistical learning.

- Contains original research papers (regular articles), survey articles, short communications, reports on statistical software, and book reviews.

- High author satisfaction with 90% likely to publish in the journal again.

- Werner G. Müller

- Carsten Jentsch

- Shuangzhe Liu

- Ulrike Schneider

Latest issue

Volume 65, Issue 6

Latest articles

Confidence intervals for overall response rate difference in the sequential parallel comparison design.

- Guogen Shan

- Samuel S. Wu

Bayesian and frequentist inference derived from the maximum entropy principle with applications to propagating uncertainty about statistical methods

- David R. Bickel

Reduced bias estimation of the log odds ratio

A critical note on the exponentiated EWMA chart

- William H. Woodall

Hadamard matrices, quaternions, and the Pearson chi-square statistic

- Abbas Alhakim

Journal updates

Write & submit: Overleaf LaTeX template

Journal information

- Australian Business Deans Council (ABDC) Journal Quality List

- Current Index to Statistics

- Google Scholar

- Japanese Science and Technology Agency (JST)

- Mathematical Reviews

- Norwegian Register for Scientific Journals and Series

- OCLC WorldCat Discovery Service

- Research Papers in Economics (RePEc)

- Science Citation Index Expanded (SCIE)

- TD Net Discovery Service

- UGC-CARE List (India)

© Springer-Verlag GmbH Germany, part of Springer Nature

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.


Comprehensive guidelines for appropriate statistical analysis methods in research

Affiliations.

- 1 Department of Anesthesiology and Pain Medicine, Daegu Catholic University School of Medicine, Daegu, Korea.

- 2 Department of Medical Statistics, Daegu Catholic University School of Medicine, Daegu, Korea.

- PMID: 39210669

- DOI: 10.4097/kja.24016

Background: The selection of statistical analysis methods in research is a critical and nuanced task that requires a scientific and rational approach. Aligning the chosen method with the specifics of the research design and hypothesis is paramount, as it can significantly impact the reliability and quality of the research outcomes.

Methods: This study explores a comprehensive guideline for systematically choosing appropriate statistical analysis methods, with a particular focus on the statistical hypothesis testing stage and categorization of variables. By providing a detailed examination of these aspects, this study aims to provide researchers with a solid foundation for informed methodological decision making. Moving beyond theoretical considerations, this study delves into the practical realm by examining the null and alternative hypotheses tailored to specific statistical methods of analysis. The dynamic relationship between these hypotheses and statistical methods is thoroughly explored, and a carefully crafted flowchart for selecting the statistical analysis method is proposed.

Results: Based on the flowchart, we examined whether exemplary research papers appropriately used statistical methods that align with the variables chosen and hypotheses built for the research. This iterative process ensures the adaptability and relevance of this flowchart across diverse research contexts, contributing to both theoretical insights and tangible tools for methodological decision-making.

Conclusions: This study emphasizes the importance of a scientific and rational approach for the selection of statistical analysis methods. By providing comprehensive guidelines, insights into the null and alternative hypotheses, and a practical flowchart, this study aims to empower researchers and enhance the overall quality and reliability of scientific studies.

Keywords: Algorithms; Biostatistics; Data analysis; Guideline; Statistical data interpretation; Statistical model.
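The abstract describes choosing a method from the variable categories and the hypothesis, but the flowchart itself is not reproduced here. As a rough illustration of that kind of decision logic, the sketch below encodes a common textbook mapping. It is not the authors' flowchart: the function name `suggest_test`, its parameters, and the returned labels are all assumptions made for this example.

```python
def suggest_test(outcome, predictor, groups=2, paired=False, normal=True):
    """Suggest a conventional test from variable types (textbook mapping, illustrative only).

    outcome / predictor: "continuous" or "categorical" (hypothetical labels).
    groups: number of groups defined by a categorical predictor.
    paired: True when the same subjects are measured repeatedly.
    normal: True when a normality assumption is considered reasonable.
    """
    if outcome == "categorical" and predictor == "categorical":
        return "chi-square test"
    if outcome == "categorical" and predictor == "continuous":
        return "logistic regression"
    if outcome == "continuous" and predictor == "continuous":
        return "Pearson correlation" if normal else "Spearman correlation"
    if outcome == "continuous" and predictor == "categorical":
        if groups == 2:
            if paired:
                return "paired t-test" if normal else "Wilcoxon signed-rank test"
            return "independent t-test" if normal else "Mann-Whitney U test"
        if paired:
            return "repeated-measures ANOVA" if normal else "Friedman test"
        return "one-way ANOVA" if normal else "Kruskal-Wallis test"
    raise ValueError("unrecognized variable types")


# Example: comparing a continuous outcome across three independent groups.
print(suggest_test("continuous", "categorical", groups=3))
```

A real selection procedure would also weigh sample size, the study design, and the exact null and alternative hypotheses, which this sketch leaves out.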


Statistical Analysis in Research: Meaning, Methods and Types


The scientific method is an empirical approach to acquiring new knowledge through skeptical observation and analysis that lead to a meaningful interpretation. It is the basis of research and the primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research involves datasets so large that it is difficult to observe or manipulate each data point. Statistical analysis then becomes the means of evaluating relationships and interconnections between variables, using tools and analytical techniques designed for large data. Researchers can also use statistical power analysis to estimate the probability of detecting an effect of a given size, which helps them judge how reliable a study’s findings are likely to be. Hence, statistical analysis in research simplifies analytical work by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate patterns, relationships, and trends in order to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition of statistical analysis in research implies that the primary focus is quantitative research. Notably, the investigator targets constructs developed from general concepts, so that hypotheses can be quantified and findings presented as simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. Quantitative data, on the other hand, assigns a level of importance, comparing the themes based on their criticality to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean shows the average value that respondents assign to a certain aspect, the variance indicates how widely the responses are spread around that mean. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers and dissertations.

Most Useful Statistical Analysis Methods in Research

Using statistical analysis methods in research is inevitable, especially in academic assignments, projects, and term papers. It’s always advisable to seek assistance from your professor, or you can try research paper writing by CustomWritings, before you start your academic project or write the statistical analysis in a research paper. Consulting an expert when developing a topic for your thesis or short mid-term assignment increases your chances of getting a better grade. Most importantly, it improves your understanding of research methods with insights on how to enhance the originality and quality of personalized essays. Professional writers can also help select the most suitable statistical analysis method for your thesis, which influences the choice of data and type of study.

Descriptive Statistics

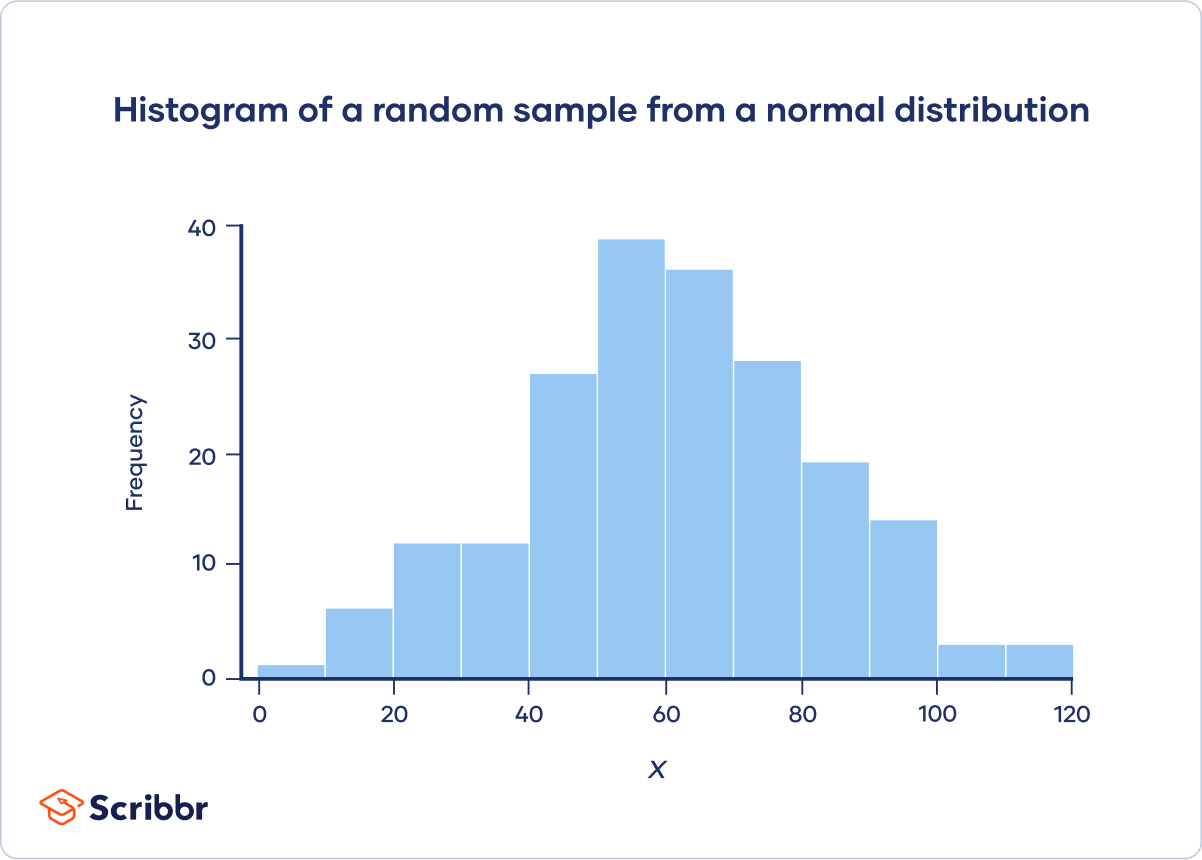

Descriptive statistics is a statistical method that summarizes quantitative figures to convey critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students individually, a researcher can identify the most common actions among them. Doing so is statistical analysis in research, specifically descriptive statistics.

- Measures of central tendency. Central tendency measures are the mean, median, and mode, the averages denoting specific data points. They assess the centrality of the probability distribution, hence the name. These measures describe the data in relation to the center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess the intervals between the data points. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. Sometimes researchers investigate how a score ranks relative to other scores. Measures of position, such as percentiles, quartiles, and ranks, demonstrate this relationship. They are often useful when comparing the data to normalized information.
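The four families of descriptive measures listed above can be computed with Python's standard library alone. The following is a minimal sketch; the `scores` data is made up for illustration and does not come from any study mentioned here.

```python
import statistics
from collections import Counter

# Hypothetical sample: exam scores for ten students (illustrative data only).
scores = [55, 60, 60, 65, 70, 70, 70, 80, 85, 95]

# Measures of central tendency
mean = statistics.mean(scores)      # arithmetic average
median = statistics.median(scores)  # middle value of the sorted data
mode = statistics.mode(scores)      # most frequent value

# Measures of frequency
counts = Counter(scores)                # how often each score occurs
share_of_70 = counts[70] / len(scores)  # proportion of students scoring 70

# Measures of dispersion/variation
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)  # sample variance (n - 1 denominator)
std_dev = statistics.stdev(scores)      # sample standard deviation

# Measures of position: the three cut points that split the data into quarters
q1, q2, q3 = statistics.quantiles(scores, n=4)

print(mean, median, mode, share_of_70, value_range, round(std_dev, 2), (q1, q2, q3))
```

Note that `statistics.variance` and `statistics.stdev` treat the data as a sample; `pvariance` and `pstdev` are the population counterparts.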

Inferential Statistics

Inferential statistics is critical to statistical analysis in quantitative research. This approach uses statistical tests on sample data to draw conclusions about the population. Examples of inferential tests include t-tests, F-tests, ANOVA, the Mann-Whitney U test, and the Wilcoxon W test; each yields a p-value that is used to judge statistical significance.
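To make one of these tests concrete, here is a minimal sketch of the Mann-Whitney U test written with the standard library only. It uses the large-sample normal approximation without tie or continuity corrections, so the p-value is only approximate for small samples; the function name `mann_whitney_u` and the two data lists are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def mann_whitney_u(x, y):
    """Return (U, approximate two-sided p-value) for two independent samples.

    Uses the normal approximation with no tie or continuity correction.
    """
    n1, n2 = len(x), len(y)
    # Count the pairs in which a value from x exceeds a value from y; ties count half.
    u_x = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    u = min(u_x, n1 * n2 - u_x)  # report the smaller of the two U statistics
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u      # z <= 0 because u is the smaller U
    p = min(1.0, 2 * NormalDist().cdf(z))
    return u, p


# Hypothetical measurements for two independent groups (illustrative data only).
control = [12, 15, 14, 10, 13, 11]
treated = [18, 20, 17, 16, 19, 21]

u_stat, p_value = mann_whitney_u(control, treated)
print(u_stat, p_value)
```

For real analyses, an established implementation with exact small-sample p-values and tie handling is preferable to this hand-rolled version.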

Common Statistical Analysis in Research Types

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.
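One simple way to turn past data into a forecast is to fit a straight-line trend by ordinary least squares and extend it one step. The sketch below assumes a linear trend, which real purchasing data may not follow; the `fit_line` helper and the monthly figures are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical repeat purchases per month (illustrative data only).
months = [1, 2, 3, 4, 5, 6]
purchases = [102, 108, 115, 119, 127, 131]

slope, intercept = fit_line(months, purchases)
forecast_month_7 = intercept + slope * 7  # extrapolate the trend one month ahead
print(round(slope, 2), round(forecast_month_7, 1))
```

Extrapolation of this kind is only as good as the assumption that the past trend continues, so forecasts far beyond the observed range should be treated with caution.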

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money by extracting only the information that is valuable for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for a COVID-19 vaccine. You can download a sample of such a document online to understand the significance such analyses have for the stakeholders. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits. Hence, statistical analysis in research is a helpful tool for understanding data.


Copyright © 2022 Statistics and Data

SPSS: An Imperative Quantitative Data Analysis Tool for Social Science Research

- October 2021

- V(X):300-302

- Begum Rokeya University, Rangpur



Statistics articles within Scientific Reports

Article 29 August 2024 | Open Access

Effective weight optimization strategy for precise deep learning forecasting models using EvoLearn approach

- , Ashima Anand

- & Rajender Parsad

Article 26 August 2024 | Open Access

Quantification of the time-varying epidemic growth rate and of the delays between symptom onset and presenting to healthcare for the mpox epidemic in the UK in 2022

- Robert Hinch

- , Jasmina Panovska-Griffiths

- & Christophe Fraser

Investigating the causal relationship between wealth index and ICT skills: a mediation analysis approach

- Tarikul Islam

- & Nabil Ahmed Uthso

Article 24 August 2024 | Open Access

Statistical analysis of the effect of socio-political factors on individual life satisfaction

- , Isra Hasan

- & Ayman Alzaatreh

Article 23 August 2024 | Open Access

Improving the explainability of autoencoder factors for commodities through forecast-based Shapley values

- Roy Cerqueti

- , Antonio Iovanella

- & Saverio Storani

Article 20 August 2024 | Open Access

Defect detection of printed circuit board assembly based on YOLOv5

- Minghui Shen

- , Yujie Liu

- & Ye Jiang

Breaking the silence: leveraging social interaction data to identify high-risk suicide users online using network analysis and machine learning

- Damien Lekkas

- & Nicholas C. Jacobson

Stochastic image spectroscopy: a discriminative generative approach to hyperspectral image modelling and classification

- Alvaro F. Egaña

- , Alejandro Ehrenfeld

- & Jorge F. Silva

Article 15 August 2024 | Open Access

Data-driven risk analysis of nonlinear factor interactions in road safety using Bayesian networks

- Cinzia Carrodano

Article 13 August 2024 | Open Access

Momentum prediction models of tennis match based on CatBoost regression and random forest algorithms

- Xingchen Lv

- , Dingyu Gu

- & Yanfang li

Article 12 August 2024 | Open Access

Numerical and machine learning modeling of GFRP confined concrete-steel hollow elliptical columns

- Haytham F. Isleem

- , Tang Qiong

- & Ali Jahami

Experimental investigation of the distribution patterns of micro-scratches in abrasive waterjet cutting surface

- & Quan Wen

Article 07 August 2024 | Open Access

PMANet: a time series forecasting model for Chinese stock price prediction

- , Weisi Dai

- & Yunjing Zhao

Article 06 August 2024 | Open Access

Grasshopper platform-assisted design optimization of Fujian rural earthen buildings considering low-carbon emissions reduction

- & Yang Ding

Article 03 August 2024 | Open Access

Effects of dietary fish to rapeseed oil ratio on steatosis symptoms in Atlantic salmon (Salmo salar L) of different sizes

- D. Siciliani

- & Å. Krogdahl

A model-free and distribution-free multi-omics integration approach for detecting novel lung adenocarcinoma genes

- Shaofei Zhao

- & Guifang Fu

Article 01 August 2024 | Open Access

Intrinsic dimension as a multi-scale summary statistics in network modeling

- Iuri Macocco

- , Antonietta Mira

- & Alessandro Laio

A new possibilistic-based clustering method for probability density functions and its application to detecting abnormal elements

- Hung Tran-Nam

- , Thao Nguyen-Trang

- & Ha Che-Ngoc

Article 30 July 2024 | Open Access

A dynamic customer segmentation approach by combining LRFMS and multivariate time series clustering

- Shuhai Wang

- , Linfu Sun

- & Yang Yu

Article 29 July 2024 | Open Access

Field evaluation of a volatile pyrethroid spatial repellent and etofenprox treated clothing for outdoor protection against forest malaria vectors in Cambodia

- Élodie A. Vajda

- , Amanda Ross

- & Neil F. Lobo

Study on crease recovery property of warp-knitted jacquard spacer shoe upper material

- & Shiyu Peng

Article 27 July 2024 | Open Access

Calibration estimation of population total using multi-auxiliary information in the presence of non-response

- , Anant Patel

- & Menakshi Pachori

Simulation-based prior knowledge elicitation for parametric Bayesian models

- Florence Bockting

- , Stefan T. Radev

- & Paul-Christian Bürkner

Article 26 July 2024 | Open Access

Modelling Salmonella Typhi in high-density urban Blantyre neighbourhood, Malawi, using point pattern methods

- Jessie J. Khaki

- , James E. Meiring

- & Emanuele Giorgi

Exogenous variable driven deep learning models for improved price forecasting of TOP crops in India

- G. H. Harish Nayak

- , Md Wasi Alam

- & Chandan Kumar Deb

Generalization of cut-in pre-crash scenarios for autonomous vehicles based on accident data

- , Xinyu Zhu

- & Chang Xu

Article 19 July 2024 | Open Access

Automated PD-L1 status prediction in lung cancer with multi-modal PET/CT fusion

- Ronrick Da-ano

- , Gustavo Andrade-Miranda

- & Catherine Cheze Le Rest

Article 17 July 2024 | Open Access

Optimizing decision-making with aggregation operators for generalized intuitionistic fuzzy sets and their applications in the tech industry

- Muhammad Wasim

- , Awais Yousaf

- & Hamiden Abd El-Wahed Khalifa

Article 15 July 2024 | Open Access

Putting ICAP to the test: how technology-enhanced learning activities are related to cognitive and affective-motivational learning outcomes in higher education

- Christina Wekerle

- , Martin Daumiller

- & Ingo Kollar

The impact of national savings on economic development: a focused study on the ten poorest countries in Sub-Saharan Africa

Article 13 July 2024 | Open Access

Regularized ensemble learning for prediction and risk factors assessment of students at risk in the post-COVID era

- Zardad Khan

- , Amjad Ali

- & Saeed Aldahmani

Article 12 July 2024 | Open Access

Eigen-entropy based time series signatures to support multivariate time series classification

- Abhidnya Patharkar

- , Jiajing Huang

- & Naomi Gades

Article 11 July 2024 | Open Access

Exploring usage pattern variation of free-floating bike-sharing from a night travel perspective

- , Xianke Han

- & Lili Li

Early mutational signatures and transmissibility of SARS-CoV-2 Gamma and Lambda variants in Chile

- Karen Y. Oróstica

- , Sebastian B. Mohr

- & Seba Contreras

Article 10 July 2024 | Open Access

Optimizing the location of vaccination sites to stop a zoonotic epidemic

- Ricardo Castillo-Neyra

- , Sherrie Xie

- & Michael Z. Levy

Article 08 July 2024 | Open Access

Integrating socio-psychological factors in the SEIR model optimized by a genetic algorithm for COVID-19 trend analysis

- Haonan Wang

- , Danhong Wu

- & Junhui Zhang

Article 05 July 2024 | Open Access

Research on bearing fault diagnosis based on improved genetic algorithm and BP neural network

- Zenghua Chen

- , Lingjian Zhu

- & Gang Xiong

Article 04 July 2024 | Open Access

Employees’ pro-environmental behavior in an organization: a case study in the UAE

- Nadin Alherimi

- , Zeki Marva

- & Ayman Alzaaterh

Article 03 July 2024 | Open Access

The predictive capability of several anthropometric indices for identifying the risk of metabolic syndrome and its components among industrial workers

- Ekaterina D. Konstantinova

- , Tatiana A. Maslakova

- & Svetlana Yu. Ogorodnikova

Article 02 July 2024 | Open Access

A Bayesian spatio-temporal dynamic analysis of food security in Africa

- Adusei Bofa

- & Temesgen Zewotir

Research on the influencing factors of promoting flipped classroom teaching based on the integrated UTAUT model and learning engagement theory

- & Wang He

Article 28 June 2024 | Open Access

Peak response regularization for localization

- , Jinzhen Yao

- & Qintao Hu

Article 25 June 2024 | Open Access

Prediction and reliability analysis of shear strength of RC deep beams

- Khaled Megahed

Multistage time-to-event models improve survival inference by partitioning mortality processes of tracked organisms

- Suresh A. Sethi

- , Alex L. Koeberle

- & Kenneth Duren

Article 24 June 2024 | Open Access

Summarizing physical performance in professional soccer: development of a new composite index

- José M. Oliva-Lozano

- , Mattia Cefis

- & Ricardo Resta

Finding multifaceted communities in multiplex networks

- László Gadár

- & János Abonyi

Article 22 June 2024 | Open Access

Utilizing Bayesian inference in accelerated testing models under constant stress via ordered ranked set sampling and hybrid censoring with practical validation

- Atef F. Hashem

- , Naif Alotaibi

- & Alaa H. Abdel-Hamid

Predicting chronic wasting disease in white-tailed deer at the county scale using machine learning

- Md Sohel Ahmed

- , Brenda J. Hanley

- & Krysten L. Schuler

Article 21 June 2024 | Open Access

Properties, quantile regression, and application of bounded exponentiated Weibull distribution to COVID-19 data of mortality and survival rates

- Shakila Bashir

- , Bushra Masood

- & Iram Saleem

Article 20 June 2024 | Open Access

Cox proportional hazards regression in small studies of predictive biomarkers

- , V. H. Nguyen

- & M. Hauptmann


- BMJ Open Access

How to write statistical analysis section in medical research

Alok Kumar Dwivedi

Department of Molecular and Translational Medicine, Division of Biostatistics and Epidemiology, Texas Tech University Health Sciences Center El Paso, El Paso, Texas, USA

Associated Data

jim-2022-002479supp001.pdf

Data sharing not applicable as no datasets generated and/or analyzed for this study.

Reporting of statistical analysis is essential in any clinical and translational research study. However, medical research studies sometimes report statistical analysis that is either inappropriate or insufficient to attest to the accuracy and validity of findings and conclusions. Published works involving inaccurate statistical analyses and insufficient reporting influence the conduct of future scientific studies, including meta-analyses and medical decisions. Although the biostatistical practice has been improved over the years due to the involvement of statistical reviewers and collaborators in research studies, there remain areas of improvement for transparent reporting of the statistical analysis section in a study. Evidence-based biostatistics practice throughout the research is useful for generating reliable data and translating meaningful data to meaningful interpretation and decisions in medical research. Most existing research reporting guidelines do not provide guidance for reporting methods in the statistical analysis section that helps in evaluating the quality of findings and data interpretation. In this report, we highlight the global and critical steps to be reported in the statistical analysis of grants and research articles. We provide clarity and the importance of understanding study objective types, data generation process, effect size use, evidence-based biostatistical methods use, and development of statistical models through several thematic frameworks. We also provide published examples of adherence or non-adherence to methodological standards related to each step in the statistical analysis and their implications. We believe the suggestions provided in this report can have far-reaching implications for education and strengthening the quality of statistical reporting and biostatistical practice in medical research.

Introduction

Biostatistics is the overall approach to how we realistically and feasibly execute a research idea to produce meaningful data and translate data to meaningful interpretation and decisions. In this era of evidence-based medicine and practice, basic biostatistical knowledge becomes essential for critically appraising research articles and implementing findings for better patient management, improving healthcare, and research planning. 1 However, it may not be sufficient for the proper execution and reporting of statistical analyses in studies. 2 3 Three things are required for statistical analyses, namely knowledge of the conceptual framework of variables, research design, and evidence-based applications of statistical analysis with statistical software. 4 5 The conceptual framework provides possible biological and clinical pathways between independent variables and outcomes with role specification of variables. The research design provides a protocol of study design and data generation process (DGP), whereas the evidence-based statistical analysis approach provides guidance for selecting and implementing approaches after evaluating data with the research design. 2 5 Ocaña-Riola 6 reported that a substantial percentage of articles from high-impact medical journals contained errors in statistical analysis or data interpretation. These errors in statistical analyses and interpretation of results not only affect the reliability of research findings but also influence medical decision-making and the planning and execution of other related studies. A survey of consulting biostatisticians in the USA reported that researchers frequently ask biostatisticians to perform inappropriate statistical analyses and to report data inappropriately. 7 This implies that there is a need to enforce standardized reporting of the statistical analysis section in medical research, which can also help reviewers and investigators to improve the methodological standards of the study.

Biostatistical practice in medicine has been improving over the years due to continuous efforts in promoting awareness and involving expert services in biostatistics, epidemiology, and research design in clinical and translational research. 8–11 Despite these efforts, the quality of reporting of statistical analysis in research studies has often been suboptimal. 12 13 We noticed that none of the methods reporting documents were developed using evidence-based biostatistics (EBB) theory and practice. EBB practice implies that the selection of statistical analysis methods and the steps of results reporting and interpretation should be grounded in the evidence generated in the scientific literature and should follow the study objective type and design. 5 Previous works have not properly elucidated the importance of understanding EBB concepts and related reporting in the write-up of statistical analyses. As a result, reviewers sometimes ask authors to present data or execute analyses that do not match the study objective type. 14 We summarize the statistical analysis steps to be reported in the statistical analysis section based on review and thematic frameworks.

We identified articles describing statistical reporting problems in medicine using different search terms ( online supplemental table 1 ). Based on these studies, we prioritized commonly reported statistical errors in analytical strategies and developed essential components to be reported in the statistical analysis section of research grants and studies. We also clarified the purpose and the overall implication of reporting each step in statistical analyses through various examples.


Although biostatistical inputs are critical for the entire research study ( online supplemental table 2 ), biostatistical consultations were mostly used for statistical analyses only, 15 even though the conduct of statistical analyses mismatched with the study objective and DGP was identified as the major problem in articles submitted to high-impact medical journals. 16 In addition, multivariable analyses were often inappropriately conducted and reported in published studies. 17 18 In light of these statistical errors, we describe the reporting of the following components in the statistical analysis section of the study.

Step 1: specify study objective type and outcomes (overall approach)

The study objective type provides the role of important variables for a specified outcome in statistical analyses and the overall approach of the model building and model reporting steps in a study. In the statistical framework, the problems are classified into descriptive and inferential/analytical/confirmatory objectives. In the epidemiological framework, the analytical and prognostic problems are broadly classified into association, explanatory, and predictive objectives. 19 These study objectives ( figure 1 ) may be classified into six categories: (1) exploratory, (2) association, (3) causal, (4) intervention, (5) prediction and (6) clinical decision models in medical research. 20

Comparative assessments of developing and reporting of study objective types and models. Association measures include odds ratio, risk ratio, or hazard ratio. AUC, area under the curve; C, confounder; CI, confidence interval; E, exposure; HbA1C, hemoglobin A1c; M, mediator; MFT, model fit test; MST, model specification test; PI, predictive interval; R², coefficient of determination; X, independent variable; Y, outcome.

The exploratory objective type is a specific type of determinant study and is commonly known as risk factors or correlates study in medical research. In an exploratory study, all covariates are considered equally important for the outcome of interest in the study. The goal of the exploratory study is to present the results of a model which gives higher accuracy after satisfying all model-related assumptions. In the association study, the investigator identifies predefined exposures of interest for the outcome, and variables other than exposures are also important for the interpretation and considered as covariates. The goal of an association study is to present the adjusted association of exposure with outcome. 20 In the causal objective study, the investigator is interested in determining the impact of exposure(s) on outcome using the conceptual framework. In this study objective, all variables should have a predefined role (exposures, confounders, mediators, covariates, and predictors) in a conceptual framework. A study with a causal objective is known as an explanatory or a confirmatory study in medical research. The goal is to present the direct or indirect effects of exposure(s) on an outcome after assessing the model’s fitness in the conceptual framework. 19 21 The objective of an interventional study is to determine the effect of an intervention on outcomes and is often known as randomized or non-randomized clinical trials in medical research. In the intervention objective model, all variables other than the intervention are treated as nuisance variables for primary analyses. The goal is to present the direct effect of the intervention on the outcomes by eliminating biases. 22–24 In the predictive study, the goal is to determine an optimum set of variables that can predict the outcome, particularly in external settings. 
The clinical decision models are a special case of prognostic models in which high dimensional data at various levels are used for risk stratification, classification, and prediction. In this model, all variables are considered input features. The goal is to present a decision tool that has high accuracy in training, testing, and validation data sets. 20 25 Biostatisticians or applied researchers should properly discuss the intention of the study objective type before proceeding with statistical analyses. In addition, it would be a good idea to prepare a conceptual model framework regardless of study objective type to understand study concepts.

A study 26 showed a favorable effect of the beta-blocker intervention on survival outcome in patients with advanced human epidermal growth factor receptor (HER2)-negative breast cancer without adjusting for all the potential confounding effects (age or menopausal status and Eastern Cooperative Oncology Performance Status) in primary analyses or validation analyses or using a propensity score-adjusted analysis, which is an EBB preferred method for analyzing non-randomized studies. 27 Similarly, another study had the goal of developing a predictive model for prediction of Alzheimer’s disease progression. 28 However, this study did not internally or externally validate the performance of the model as per the requirement of a predictive objective study. In another study, 29 investigators were interested in determining an association between metabolic syndrome and hepatitis C virus. However, the authors did not clearly specify the outcome in the analysis and produced conflicting associations with different analyses. 30 Thus, the outcome should be clearly specified as per the study objective type.

Step 2: specify effect size measure according to study design (interpretation and practical value)

The study design provides information on the selection of study participants and the process of data collection conditioned on either exposure or outcome ( figure 2 ). The appropriate use of effect size measure, tabular presentation of results, and the level of evidence are mostly determined by the study design. 31 32 In cohort or clinical trial study designs, the participants are selected based on exposure status and are followed up for the development of the outcome. These study designs can provide multiple outcomes, produce incidence or incidence density, and are preferred to be analyzed with risk ratio (RR) or hazards models. In a case–control study, the selection of participants is conditioned on outcome status. This type of study can have only one outcome and is preferred to be analyzed with an odds ratio (OR) model. In a cross-sectional study design, there is no selection restriction on outcomes or exposures. All data are collected simultaneously and can be analyzed with a prevalence ratio model, which is mathematically equivalent to the RR model. 33 The reporting of effect size measure also depends on the study objective type. For example, predictive models typically require reporting of regression coefficients or weight of variables in the model instead of association measures, which are required in other objective types. There are agreements and disagreements between OR and RR measures. Due to the constancy and symmetricity properties of OR, some researchers prefer to use OR in studies with common events. Similarly, the collapsibility and interpretability properties of RR make it more appealing to use in studies with common events. 34 To avoid variable practice and interpretation issues with OR, it is recommended to use RR models in all studies except for case–control and nested case–control studies, where OR approximates RR and thus OR models should be used. Otherwise, investigators may report sufficient data to compute any ratio measure. 
Biostatisticians should educate investigators on the proper interpretation of ratio measures in the light of study design and their reporting. 34 35

Effect size according to study design.

Investigators sometimes either inappropriately label their study design 36 37 or report effect size measures not aligned with the study design, 38 39 leading to difficulty in results interpretation and evaluation of the level of evidence. The proper labeling of study design and the appropriate use of effect size measure have substantial implications for results interpretation, including the conduct of systematic review and meta-analysis. 40 A study 31 reviewed the frequency of reporting OR instead of RR in cohort studies and randomized clinical trials (RCTs) and found that one-third of the cohort studies used an OR model, whereas 5% of RCTs used an OR model. The majority of estimated ORs from these studies had a 20% or higher deviation from the corresponding RR.
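The divergence between OR and RR described above can be illustrated with a minimal sketch. The 2x2 counts below are hypothetical and chosen only to contrast a rare outcome with a common one; the function names are illustrative and do not come from the cited studies.

```python
def risk_ratio(a, b, c, d):
    """Risk ratio from a 2x2 table.

    a, b = exposed participants with / without the event
    c, d = unexposed participants with / without the event
    """
    return (a / (a + b)) / (c / (c + d))


def odds_ratio(a, b, c, d):
    """Odds ratio (cross-product ratio) from the same 2x2 table."""
    return (a * d) / (b * c)


def relative_deviation(or_value, rr_value):
    """Relative deviation of the OR from the RR."""
    return abs(or_value - rr_value) / rr_value


# Hypothetical cohorts with 100 participants per arm (assumed data).
rare = (2, 98, 1, 99)      # risks of 2% vs 1%
common = (40, 60, 20, 80)  # risks of 40% vs 20%

for label, table in (("rare outcome", rare), ("common outcome", common)):
    rr = risk_ratio(*table)
    or_value = odds_ratio(*table)
    dev = relative_deviation(or_value, rr)
    print(f"{label}: RR={rr:.2f}, OR={or_value:.2f}, deviation={dev:.1%}")
```

In both hypothetical cohorts the RR is 2, but the OR stays close to the RR only when the outcome is rare; with the common outcome the OR overstates the RR by more than 20%.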

Step 3: specify study hypothesis, reporting of p values, and interval estimates (interpretation and decision)

The clinical hypothesis provides information for evaluating formal claims specified in the study objectives, while the statistical hypothesis provides information about the population parameters/statistics being used to test the formal claims. The inference about the study hypothesis is typically measured by p value and confidence interval (CI). A smaller p value indicates stronger evidence in the data against the null hypothesis. Since the p value is a probability computed under the assumption that the null hypothesis is true, it cannot by itself establish acceptance or rejection of the null hypothesis. Therefore, multiple alternative strategies to the conventional use of p values have been proposed to strengthen the credibility of conclusions. 41 42 Adoption of these alternative strategies is only needed in the explanatory objective studies. Although exact p values are recommended to be reported in research studies, p values do not provide any information about the effect size. Compared with p values, the CI provides a range of plausible values for the effect size: if the study were repeated many times, 95% of the 95% CIs so constructed would be expected to contain the true effect size. The CI can also be used to determine whether the results are statistically significant or not. 43 Both p value and 95% CI provide complementary information and thus need to be specified in the statistical analysis section. 24 44
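To show how a p value and a CI complement each other, the following sketch computes both for a risk ratio using the large-sample (Wald) normal approximation on the log scale. The counts are hypothetical, and the Wald approach is an assumption made here for simplicity; it is not a method prescribed by this article.

```python
import math
from statistics import NormalDist


def rr_wald_inference(events_exp, n_exp, events_unexp, n_unexp, level=0.95):
    """Risk ratio with a Wald CI and a two-sided p value (log scale)."""
    rr = (events_exp / n_exp) / (events_unexp / n_unexp)
    # Standard error of log(RR) for two independent binomial samples.
    se = math.sqrt(1 / events_exp - 1 / n_exp + 1 / events_unexp - 1 / n_unexp)
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)
    lower = math.exp(math.log(rr) - z_crit * se)
    upper = math.exp(math.log(rr) + z_crit * se)
    z = math.log(rr) / se  # test of the null hypothesis RR = 1
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return rr, lower, upper, p_two_sided


# Hypothetical cohort: 40/100 events in exposed, 20/100 in unexposed.
rr, lower, upper, p = rr_wald_inference(40, 100, 20, 100)
print(f"RR={rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}, two-sided p={p:.4f}")
```

The p value indicates only how compatible the data are with RR = 1, whereas the interval conveys the magnitude and precision of the estimate; the two agree on statistical significance at the matching level.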

Researchers often test one or more comparisons or hypotheses. Accordingly, the side and the level of significance for considering results to be statistically significant may change. Furthermore, studies may include more than one primary outcome that requires an adjustment in the level of significance for multiplicity. All studies should provide the interval estimate of the effect size/regression coefficient in the primary analyses. Since the interpretation of data analysis depends on the study hypothesis, researchers are required to specify the level of significance along with the side (one-sided or two-sided) of the p value in the test for considering statistically significant results, adjustment of the level of significance due to multiple comparisons or multiplicity, and reporting of interval estimates of the effect size in the statistical analysis section. 45

A study 46 showed a significant effect of fluoxetine on relapse rates in obsessive-compulsive disorder based on a one-sided p value of 0.04. Clearly, there was no reason for using a one-sided p value as opposed to a two-sided p value. A review of the appropriate use of multiple test correction methods in multiarm clinical trials published in major medical journals in 2012 found that over 50% of the articles did not perform multiple-testing correction. 47 Similar to controlling the familywise error rate due to multiple comparisons, adjustment of the false discovery rate is also critical in studies involving multiple related outcomes. A review of RCTs for depression between 2007 and 2008 from six journals reported that only 5.8% of studies accounted for multiplicity in the analyses due to multiple outcomes. 48
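The two kinds of multiplicity adjustment mentioned above can be sketched as follows. Holm's step-down procedure (familywise error rate) and the Benjamini-Hochberg procedure (false discovery rate) are used here as common examples; they are assumptions for illustration, not methods named by the cited reviews, and the p values are hypothetical.

```python
def holm_adjust(pvals):
    """Holm step-down adjusted p values (controls the familywise error rate)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # ascending p values
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * pvals[i])
        running_max = max(running_max, candidate)  # enforce monotonicity
        adjusted[i] = running_max
    return adjusted


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p values (controls the false discovery rate)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i], reverse=True)  # descending
    adjusted = [0.0] * m
    running_min = 1.0
    for k, i in enumerate(order):
        rank = m - k  # 1-based rank of this p value in ascending order
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical unadjusted p values for four outcomes.
raw = [0.01, 0.04, 0.03, 0.005]
print("Holm:", [round(p, 3) for p in holm_adjust(raw)])
print("BH:  ", [round(p, 3) for p in bh_adjust(raw)])
```

With these hypothetical values, all four results are below 0.05 before adjustment, but only two remain so after the Holm adjustment, which is why the chosen adjustment method needs to be stated in the statistical analysis section.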

Step 4: account for DGP in the statistical analysis (accuracy)

The study design also requires the specification of the selection of participants and outcome measurement processes in different design settings. We referred to this specific design feature as DGP. Understanding DGP helps in determining appropriate modeling of outcome distribution in statistical analyses and setting up model premises and units of analysis. 4 DGP ( figure 3 ) involves information on data generation and data measures, including the number of measurements after random selection, complex selection, consecutive selection, pragmatic selection, or systematic selection. Specifically, DGP depends on a sampling setting (participants are selected using survey sampling methods and one subject may represent multiple participants in the population), clustered setting (participants are clustered through a recruitment setting or hierarchical setting or multiple hospitals), pragmatic setting (participants are selected through mixed approaches), or systematic review setting (participants are selected from published studies). DGP also depends on the measurements of outcomes in an unpaired setting (measured on one occasion only in independent groups), paired setting (measured on more than one occasion or participants are matched on certain subject characteristics), or mixed setting (measured on more than one occasion but interested in comparing independent groups). It also involves information regarding outcomes or exposure generation processes using quantitative or categorical variables, quantitative values using labs or validated instruments, and self-reported or administered tests yielding a variety of data distributions, including individual distribution, mixed-type distribution, mixed distributions, and latent distributions. 
Due to different DGPs, study data may include messy or missing data, incomplete/partial measurements, time-varying measurements, surrogate measures, latent measures, imbalances, unknown confounders, instrument variables, correlated responses, various levels of clustering, qualitative data, or mixed data outcomes, competing events, individual and higher-level variables, etc. The performance of statistical analysis, appropriate estimation of standard errors of estimates and subsequently computation of p values, the generalizability of findings, and the graphical display of data rely on DGP. Accounting for DGP in the analyses requires proper communication between investigators and biostatisticians about each aspect of participant selection and data collection, including measurements, occasions of measurements, and instruments used in the research study.

Common features of the data generation process.

A study 49 compared the intake of fresh fruit and komatsuna juice with the intake of commercial vegetable juice on metabolic parameters in middle-aged men using an RCT. The study was criticized for many reasons, but primarily for incorrect statistical methods not aligned with the study DGP. 50 Similarly, another study 51 highlighted that 80% of published studies using the Korean National Health and Nutrition Examination Survey did not incorporate survey sampling structure in statistical analyses, producing biased estimates and inappropriate findings. Likewise, another study 52 highlighted the need for maintaining methodological standards while analyzing data from the National Inpatient Sample. A systematic review 53 identified that over 50% of studies did not specify whether a paired t-test or an unpaired t-test was performed in statistical analysis in the top 25% of physiology journals, indicating poor transparency in reporting of statistical analysis as per the data type. Another study 54 also highlighted the data displaying errors not aligned with DGP. As per DGP, delay in treatment initiation of patients with cancer defined from the onset of symptom to treatment initiation should be analyzed into three components: patient/primary delay, secondary delay, and tertiary delay. 55 Similarly, the number of cancerous nodes should be analyzed with count data models. 56 However, several studies did not analyze such data according to DGP. 57 58
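The importance of reporting whether a paired or an unpaired test was used can be seen in a small sketch. The blood pressure readings are hypothetical, and Welch's statistic is used as the unpaired comparison purely as an illustrative assumption.

```python
import math
from statistics import mean, stdev


def unpaired_t(x, y):
    """Welch's t statistic, which treats the two samples as independent."""
    se = math.sqrt(stdev(x) ** 2 / len(x) + stdev(y) ** 2 / len(y))
    return (mean(x) - mean(y)) / se


def paired_t(first, second):
    """Paired t statistic based on within-participant differences."""
    diffs = [a - b for a, b in zip(first, second)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


# Hypothetical systolic blood pressure (mm Hg) in the same six
# participants before and after an intervention.
before = [140, 152, 138, 160, 147, 155]
after = [135, 147, 134, 154, 143, 149]

print(f"unpaired t = {unpaired_t(before, after):.2f}")
print(f"paired t   = {paired_t(before, after):.2f}")
```

The same data give a t statistic of about 1.0 when the pairing is ignored and about 13.7 when it is respected, so an analysis that does not follow the DGP can reverse the conclusion.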

Step 5: apply EBB methods specific to study design features and DGP (efficiency and robustness)

The continuous growth in the development of robust statistical methods for dealing with a specific problem produced various methods to analyze specific data types. Since multiple methods are available for handling a specific problem yet with varying performances, heterogeneous practices among applied researchers have been noticed. Variable practices could also be due to a lack of consensus on statistical methods in literature, unawareness, and the unavailability of standardized statistical guidelines. 2 5 59 However, it becomes sometimes difficult to differentiate whether a specific method was used due to its robustness, lack of awareness, lack of accessibility of statistical software to apply an alternative appropriate method, intention to produce expected results, or ignorance of model diagnostics. To avoid heterogeneous practices, the selection of statistical methodology and their reporting at each stage of data analysis should be conducted using methods according to EBB practice. 5 Since it is hard for applied researchers to optimally select statistical methodology at each step, we encourage investigators to involve biostatisticians at the very early stage in basic, clinical, population, translational, and database research. We also appeal to biostatisticians to develop guidelines, checklists, and educational tools to promote the concept of EBB. As an effort, we developed the statistical analysis and methods in biomedical research (SAMBR) guidelines for applied researchers to use EBB methods for data analysis. 5 The EBB practice is essential for applying recent cutting-edge robust methodologies to yield accurate and unbiased results. The efficiency of statistical methodologies depends on the assumptions and DGP. Therefore, investigators may attempt to specify the choice of specific models in the primary analysis as per the EBB.

Although details of evidence-based preferred methods are provided in the SAMBR checklists for each study design/objective, 5 we have presented a simplified version of evidence-based preferred methods for common statistical analysis ( online supplemental table 3 ). Several examples are available in the literature where inefficient methods not according to EBB practice have been used. 31 57 60

Step 6: report variable selection method in the multivariable analysis according to study objective type (unbiased)

Multivariable analysis can be used for association, prediction or classification or risk stratification, adjustment, propensity score development, and effect size estimation. 61 Some biological, clinical, behavioral, and environmental factors may directly associate with or influence the relationship between exposure and outcome. Therefore, almost all health studies require multivariable analyses for accurate and unbiased interpretations of findings ( figure 1 ). Analysts should develop an adjusted model if the sample size permits. It is a misconception that the analysis of an RCT does not require adjusted analysis. Analysis of an RCT may require adjustment for prognostic variables. 23 The foremost step in model building is the entry of variables after finalizing the appropriate parametric or non-parametric regression model. In the exploratory model building process, because no exposure is preferred, a backward automated approach after including any variables that are significant at the 25% level in the unadjusted analysis can be used for variable selection. 62 63 In the association model, covariates should be selected manually based on their relevance and included in a fully adjusted model. 63 In a causal model, clinically guided methods should be used for variable selection and their adjustments. 20 In a non-randomized interventional model, efforts should be made to eliminate confounding effects through propensity score methods, and the final propensity score-adjusted multivariable model may adjust for any prognostic variables, while a randomized study should simply adjust for any prognostic variables. 27 Maintaining the event per variable (EVR) is important to avoid overfitting in any type of modeling; therefore, screening of variables may be required in some association and explanatory studies, which may be accomplished using a backward stepwise method that needs to be clarified in the statistical analyses. 10
In a predictive study, a model with an optimum set of variables producing the highest accuracy should be used. The optimum set of variables may be screened with the random forest method or bootstrap or machine learning methods. 64 65 Different methods of variable selection and adjustments may lead to different results. The screening process of variables and their adjustments in the final multivariable model should be clearly mentioned in the statistical analysis section.

A study 66 evaluating the effect of hydroxychloroquine (HDQ) showed unfavorable events (intubation or death) in patients who received HDQ compared with those who did not (hazard ratio (HR): 2.37, 95% CI 1.84 to 3.02) in an unadjusted analysis. However, the propensity score-adjusted analyses as appropriate with the interventional objective model showed no significant association between HDQ use and unfavorable events (HR: 1.04, 95% CI 0.82 to 1.32), which was also confirmed in multivariable and other propensity score-adjusted analyses. This study clearly suggests that results interpretation should be based on a multivariable analysis only in observational studies if feasible. A recent study 10 noted that approximately 6% of multivariable analyses based on either logistic or Cox regression used an inappropriate selection method of variables in medical research. This practice was more commonly noted in studies that did not involve an expert biostatistician. Another review 61 of 316 articles from high-impact Chinese medical journals revealed that 30.7% of articles did not report the selection of variables in multivariable models. Indeed, this inappropriate practice could have been identified more commonly if classified according to the study objective type. 18 In RCTs, it is uncommon to report an adjusted analysis based on prognostic variables, even though an adjusted analysis may produce an efficient estimate compared with an unadjusted analysis. A study assessing the effect of preemptive intervention on development outcomes showed a significant effect of an intervention on reducing autism spectrum disorder symptoms. 67 However, this study was criticized by Ware 68 for not reporting non-significant results in unadjusted analyses. If possible, unadjusted estimates should also be reported in any study, particularly in RCTs. 23 68

Step 7: provide evidence for exploring effect modifiers (applicability)

Any variable that modifies the effect of exposure on the outcome is called an effect modifier or modifier or an interacting variable. Exploring the effect modifiers in multivariable analyses helps in (1) determining the applicability/generalizability of findings in the overall or specific subpopulation, (2) generating ideas for new hypotheses, (3) explaining uninterpretable findings between unadjusted and adjusted analyses, (4) guiding to present combined or separate models for each specific subpopulation, and (5) explaining heterogeneity in treatment effect. Often, investigators present adjusted stratified results according to the presence or absence of an effect modifier. If the exposure interacts with multiple variables statistically or conceptually in the model, then the stratified findings (subgroup) according to each effect modifier may be presented. Otherwise, stratified analysis substantially reduces the power of the study due to the lower sample size in each stratum and may produce significant results by inflating type I error. 69 Therefore, a multivariable analysis involving an interaction term as opposed to a stratified analysis may be presented in the presence of an effect modifier. 70 Sometimes, a quantitative variable may emerge as a potential effect modifier for exposure and an outcome relationship. In such a situation, the quantitative variable should not be categorized unless a clinically meaningful threshold is available in the study. In fact, the practice of categorizing quantitative variables should be avoided in the analysis unless a clinically meaningful cut-off is available or a hypothesis requires it. 71 In an exploratory objective type, any possible interaction may be obtained in a study; however, the interpretation should be guided based on clinical implications. 
Similarly, some objective models may have more than one exposure or intervention and the association of each exposure according to the level of other exposure should be presented through adjusted analyses as suggested in the presence of interaction effects. 70

A review of 428 articles from MEDLINE on the quality of reporting from statistical analyses of three (linear, logistic, and Cox) commonly used regression models reported that only 18.5% of the published articles provided interaction analyses, 17 even though interaction analyses can provide a lot of useful information.
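As a minimal sketch of an interaction analysis, the code below compares stratum-specific odds ratios with a Wald test on the log scale. For a binary exposure and a binary modifier with no other covariates, this corresponds to testing the product term in a logistic model; the counts are hypothetical and the function names are illustrative assumptions.

```python
import math
from statistics import NormalDist


def log_or_and_se(a, b, c, d):
    """Log odds ratio and its standard error from one 2x2 table.

    a, b = exposed with / without the event
    c, d = unexposed with / without the event
    """
    return math.log((a * d) / (b * c)), math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)


def interaction_test(stratum_1, stratum_0):
    """Ratio of stratum-specific ORs with a two-sided Wald p value."""
    log_or_1, se_1 = log_or_and_se(*stratum_1)
    log_or_0, se_0 = log_or_and_se(*stratum_0)
    diff = log_or_1 - log_or_0
    se_diff = math.sqrt(se_1 ** 2 + se_0 ** 2)  # strata are independent
    z = diff / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return math.exp(diff), z, p


# Hypothetical 2x2 tables within two levels of a candidate effect modifier.
modifier_present = (30, 70, 10, 90)  # OR about 3.86
modifier_absent = (20, 80, 18, 82)   # OR about 1.14

ratio_of_ors, z, p = interaction_test(modifier_present, modifier_absent)
print(f"ratio of ORs={ratio_of_ors:.2f}, z={z:.2f}, two-sided p={p:.3f}")
```

A single test of the ratio of ORs uses the whole sample, whereas separate significance tests within each stratum lose power and multiply the number of comparisons.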

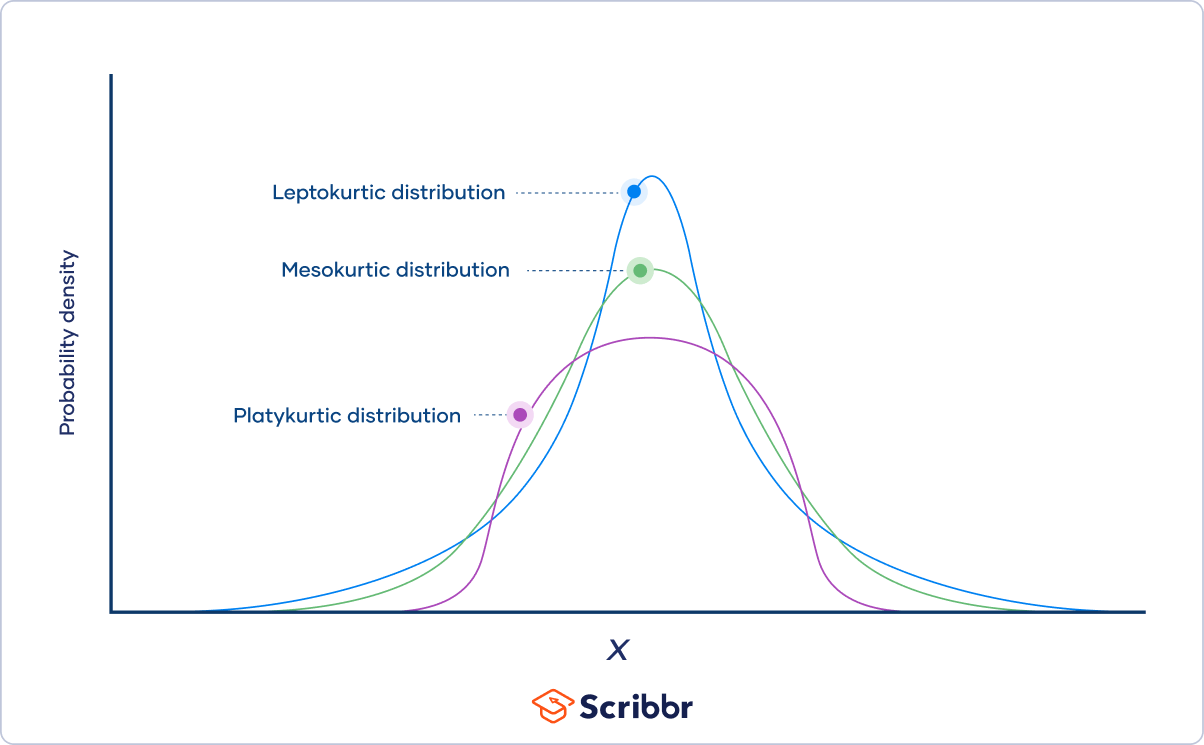

Step 8: assessment of assumptions, specifically the distribution of outcome, linearity, multicollinearity, sparsity, and overfitting (reliability)

The assessment and reporting of model diagnostics are important in assessing the efficiency, validity, and usefulness of the model. Model diagnostics include satisfying model-specific assumptions and the assessment of sparsity, linearity, distribution of outcome, multicollinearity, and overfitting. 61 72 Model-specific assumptions such as normal residuals, homoscedasticity and independence of errors in linear regression, proportionality in Cox regression, the proportional odds assumption in ordinal logistic regression, and distribution fit in other types of continuous and count models are required. In addition, sparsity should also be examined prior to selecting an appropriate model. Sparsity indicates many zero observations in the data set. 73 In the presence of sparsity, the effect size is difficult to interpret. Except for machine learning models, most of the parametric and semiparametric models require a linear relationship between independent variables and a functional form of an outcome. Linearity should be assessed using a multivariable polynomial in all model objectives. 62 Similarly, the appropriate choice of the distribution of outcome is required for model building in all study objective models. Multicollinearity assessment is also useful in all objective models. Assessment of EVR in multivariable analysis can be used to avoid the overfitting issue of a multivariable model. 18

Some review studies highlighted that 73.8%–92% of the articles published in MEDLINE had not assessed the model diagnostics of the multivariable regression models. 17 61 72 Contrary to the monotonically, linearly increasing relationship between systolic blood pressure (SBP) and mortality established using the Framingham’s study, 74 Port et al 75 reported a non-linear relationship between SBP and all-cause mortality or cardiovascular deaths by reanalysis of the Framingham’s study data set. This study identified a different threshold for treating hypertension, indicating the role of linearity assessment in multivariable models. Although a non-Gaussian distribution model may be required for modeling patient delay outcome data in cancer, 55 a study analyzed patient delay data using an ordinary linear regression model. 57 An investigation of the development of predictive models and their reporting in medical journals identified that 53% of the articles had fewer EVR than the recommended EVR, indicating over half of the published articles may have an overfitting model. 18 Another study 76 attempted to identify the anthropometric variables associated with non-insulin-dependent diabetes and found that none of the anthropometric variables were significant after adjusting for waist circumference, age, and sex, indicating the presence of collinearity. A study reported detailed sparse data problems in published studies and potential solutions. 73
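Two of these diagnostics can be checked with very little computation, as the sketch below suggests. The threshold of 10 events per variable and a variance inflation factor (VIF) above 10 are widely quoted rules of thumb that are assumed here; neither value is stated in this article, and the data are hypothetical.

```python
import math


def events_per_variable(n_events, n_nonevents, n_parameters):
    """EVR for a binary outcome: the smaller outcome group per model parameter."""
    return min(n_events, n_nonevents) / n_parameters


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF when a model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1 / (1 - r ** 2)


# Hypothetical study: 45 events among 500 participants, 9 model parameters.
evr = events_per_variable(45, 455, 9)
print(f"EVR = {evr:.1f} (rule-of-thumb minimum assumed here: 10)")

# Hypothetical anthropometric measurements for six participants.
waist_cm = [80, 85, 90, 95, 100, 105]
bmi = [22, 24, 25, 27, 29, 30]
print(f"VIF = {vif_two_predictors(waist_cm, bmi):.1f}")
```

An EVR of 5 and a VIF above 100 in these hypothetical data would both call for a remedy, such as reducing the number of candidate variables or retaining only one of the two collinear measures, and the remedy chosen should be reported.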

Step 9: report type of primary and sensitivity analyses (consistency)

Numerous considerations and assumptions are made throughout the research processes that require assessment, evaluation, and validation. Some assumptions, executions, and errors made at the beginning of the study data collection may not be fixable 13 ; however, additional information collected during the study and data processing, including data distribution obtained at the end of the study, may facilitate additional considerations that need to be verified in the statistical analyses. Consistencies in the research findings via modifications in the outcome or exposure definition, study population, accounting for missing data, model-related assumptions, variables and their forms, and accounting for adherence to protocol in the models can be evaluated and reported in research studies using sensitivity analyses. 77 The purpose and type of supporting analyses need to be specified clearly in the statistical analyses to differentiate the main findings from the supporting findings. Sensitivity analyses are different from secondary or interim or subgroup analyses. 78 Data analyses for secondary outcomes are often referred to as secondary analyses, while data analyses of an ongoing study are called interim analyses and data analyses according to groups based on patient characteristics are known as subgroup analyses.

Almost all studies require some form of sensitivity analysis to validate the findings under different conditions. However, sensitivity analyses are often underutilized in studies published in medical journals. Only 18%–20.3% of studies reported some form of sensitivity analysis. 77 78 A review of nutritional trials from high-quality journals found that 17% of the conclusions were reported inappropriately, using findings from sensitivity analyses rather than from the primary/main analyses. 77
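One simple form of sensitivity analysis for missing binary outcomes is to bound the result with best-case and worst-case imputations. The sketch below assumes an adverse outcome and hypothetical trial counts; it illustrates the idea only and is not a recommended replacement for principled missing data methods.

```python
def risk_difference(events_t, n_t, events_c, n_c):
    """Risk difference (treated minus control)."""
    return events_t / n_t - events_c / n_c


def missing_outcome_scenarios(observed_t, observed_c, missing_t, missing_c):
    """Risk difference under three assumptions about missing adverse outcomes.

    observed_t, observed_c = (events, participants with observed outcome)
    missing_t, missing_c   = participants with missing outcome in each arm
    """
    events_t, n_t = observed_t
    events_c, n_c = observed_c
    return {
        # Analyze only participants with an observed outcome.
        "complete_case": risk_difference(events_t, n_t, events_c, n_c),
        # Best case for treatment: missing treated had no event,
        # missing controls all had the event.
        "best_case": risk_difference(
            events_t, n_t + missing_t, events_c + missing_c, n_c + missing_c
        ),
        # Worst case for treatment: missing treated all had the event,
        # missing controls had no event.
        "worst_case": risk_difference(
            events_t + missing_t, n_t + missing_t, events_c, n_c + missing_c
        ),
    }


# Hypothetical trial: 100 randomized per arm, some outcomes missing.
scenarios = missing_outcome_scenarios((20, 90), (30, 92), 10, 8)
for name, value in scenarios.items():
    print(f"{name}: risk difference = {value:+.3f}")
```

Here the apparent benefit in the complete-case analysis disappears under the worst-case assumption, so the primary finding would need to be reported as sensitive to the handling of missing data.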

Step 10: provide methods for summarizing, displaying, and interpreting data (transparency and usability)

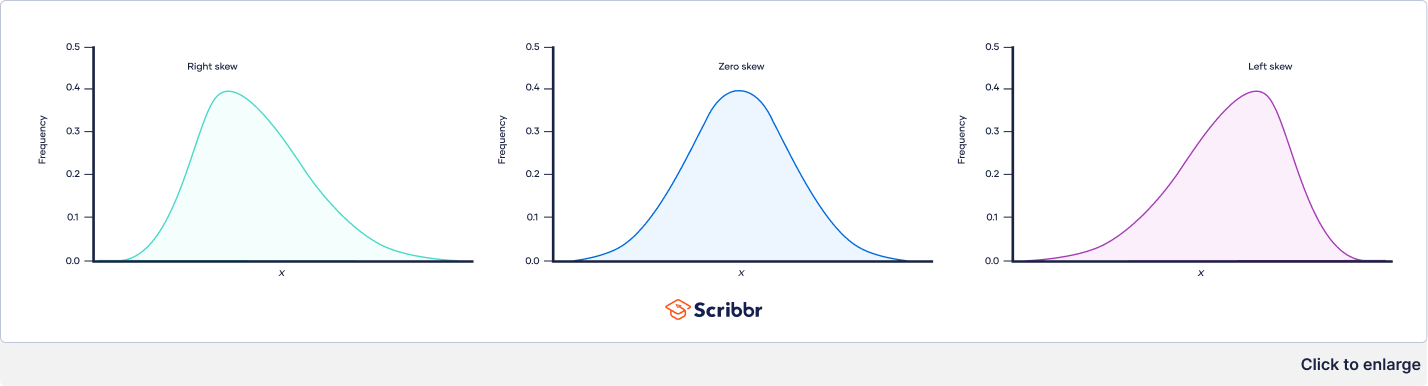

Data presentation includes data summary, data display, and data from statistical model analyses. The primary purpose of the data summary is to understand the distribution of outcome status and other characteristics in the total sample and by primary exposure status or outcome status. Column-wise data presentation should be preferred according to exposure status in all study designs, while row-wise data presentation for the outcome should be preferred in all study designs except for a case–control study. 24 32 Summary statistics should be used to provide maximum information on data distribution aligned with DGP and variable type. The purpose of results presentation primarily from regression analyses or statistical models is to convey results interpretation and implications of findings. The results should be presented according to the study objective type. Accordingly, the reporting of unadjusted and adjusted associations of each factor with the outcome may be preferred in the determinant objective model, while unadjusted and adjusted effects of primary exposure on the outcome may be preferred in the explanatory objective model. In prognostic models, the final predictive models may be presented in such a way that users can use models to predict an outcome. In the exploratory objective model, a final multivariable model should be reported with R² or area under the curve (AUC). In the association and interventional models, the assessment of internal validation is critically important through various sensitivity and validation analyses. A model with better fit indices (in terms of R² or AUC, Akaike information criterion, Bayesian information criterion, fit index, root mean square error) should be finalized and reported in the causal model objective study. In the predictive objective type, the model performance in terms of R² or AUC in training and validation data sets needs to be reported ( figure 1 ). 20 21
There are multiple purposes of data display, including data distribution using bar diagram or histogram or frequency polygons or box plots, comparisons using cluster bar diagram or scatter dot plot or stacked bar diagram or Kaplan-Meier plot, correlation or model assessment using scatter plot or scatter matrix, clustering or pattern using heatmap or line plots, the effect of predictors with fitted models using marginsplot, and comparative evaluation of effect sizes from regression models using forest plot. Although the key purpose of data display is to highlight critical issues or findings in the study, data display should essentially follow DGP and variable types and should be user-friendly. 54 79 Data interpretation heavily relies on the effect size measure along with study design and specified hypotheses. Sometimes, variables require standardization for descriptive comparison of effect sizes among exposures or interpreting small effect size, or centralization for interpreting intercept or avoiding collinearity due to interaction terms, or transformation for achieving model-related assumptions. 80 Appropriate methods of data reporting and interpretation aligned with study design, study hypothesis, and effect size measure should be specified in the statistical analysis section of research studies.

Published articles from reputed journals inappropriately summarized a categorized variable with mean and range, 81 summarized a highly skewed variable with mean and standard deviation, 57 and treated a categorized variable as a continuous variable in regression analyses. 82 Similarly, numerous examples from published studies reporting inappropriate graphical display or inappropriate interpretation of data not aligned with DGP or variable types are illustrated in a book published by Bland and Peacock. 83 84 A study used qualitative data on MRI but inappropriately presented them with a box-and-whisker plot. 81 Another study reported an unusually high OR for an association between high breast parenchymal enhancement and breast cancer in both premenopausal and postmenopausal women. 85 Such an estimate raises suspicion about the findings and may reflect sparse data bias. 86 A poor tabular presentation without proper scaling or standardization of a variable, missing CI for some variables, missing unit and sample size, and inconsistent reporting of decimal places could be easily noticed in table 4 of a published study. 29 Some published predictive models 87 do not report the intercept or baseline survival estimates that are needed to apply the models in clinical practice. Although a direct comparison of effect sizes obtained from the same model may be avoided if the units are different among variables, 35 a study had an objective to compare effect sizes across variables but the authors performed comparisons without standardization of variables or using statistical tests. 88
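The problem with summarizing a highly skewed variable by mean and standard deviation can be shown with a short sketch. The length-of-stay values are hypothetical, and the quartile definition (the "inclusive" method of Python's `statistics.quantiles`) is an arbitrary choice among several accepted definitions.

```python
from statistics import mean, median, quantiles, stdev


def summarize(values):
    """Return mean/SD and median/quartile summaries of a numeric variable."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return {
        "mean": mean(values),
        "sd": stdev(values),
        "median": median(values),
        "q1": q1,
        "q3": q3,
    }


# Hypothetical length of hospital stay (days) with one extreme value.
length_of_stay = [1, 2, 2, 3, 3, 4, 5, 6, 9, 45]

summary = summarize(length_of_stay)
print(f"mean (SD): {summary['mean']:.1f} ({summary['sd']:.1f})")
print(f"median (IQR): {summary['median']:.1f} "
      f"({summary['q1']:.2f} to {summary['q3']:.2f})")
```

In these hypothetical data the mean exceeds the third quartile and the standard deviation exceeds the mean, so the median with interquartile range describes the typical stay far better.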

A sample for writing statistical analysis section in medical journals/research studies

Our primary study objective type was to develop a (select from figure 1) model to assess the relationship of risk factors (list critical variables or exposures) with outcomes (specify type from continuous/discrete/count/binary/polytomous/time-to-event). To address this objective, we conducted a (select from figure 2 or any other) study design to test the hypotheses of (equality or superiority or non-inferiority or equivalence or futility) or to develop a prediction. Accordingly, the other variables were adjusted for or considered as (specify role of variables from confounders, covariates, or predictors or independent variables) as reflected in the conceptual framework. In the unadjusted or preliminary analyses, as per the (select from figure 3 or any other design features) DGP, (specify EBB preferred tests from online supplemental table 3 or any other appropriate tests) were used for (specify variables and types). According to the EBB practice for the outcome (specify type) and DGP of (select from figure 3 or any other), we used (select from online supplemental table 1 or specify a multivariable approach) as the primary model in the multivariable analysis. We used the (select from figure 1) variable selection method in the multivariable analysis and explored the interaction effects between (specify variables). The model diagnostics, including (list all applicable, including model-related assumptions, linearity, or multicollinearity or overfitting or distribution of outcome or sparsity), were also assessed using (specify appropriate methods), respectively. In this exploration, we identified (specify diagnostic issues if any), and therefore the multivariable models were developed using (specify potential methods used to handle diagnostic issues). The other outcomes were analyzed with (list names of multivariable approaches with respective outcomes). 
All the models used the same procedure (or specify from figure 1) for variable selection, exploration of interaction effects, and model diagnostics using (specify statistical approaches), depending on the statistical models. As per the study design, hypothesis, and multivariable analysis, the results were summarized with effect size (select as appropriate or from figure 2) along with (specify 95% CI or other interval estimates) and considered statistically significant using (specify the side of p value or alternatives) at (specify the level of significance) due to (provide reasons for choosing a significance level). We presented unadjusted and/or adjusted estimates of the primary outcome according to (list primary exposures or variables). Additional analyses were conducted for (specific reasons from step 9) using (specify methods) to validate findings obtained in the primary analyses. The data were summarized with (list summary measures and appropriate graphs from step 10), whereas the final multivariable model performance was summarized with (fit indices if applicable from step 10). We also used (list graphs) as appropriate with DGP (specify from figure 3) to present the critical findings or highlight (specify data issues) using (list graphs/methods) in the study. The exposures or variables were used in (specify the form of the variables), and therefore the effect or association of (list exposures or variables) on the outcome should be interpreted in terms of changes in (specify interpretation unit) of the exposures/variables. List all other additional analyses if performed (with full details of all models in a supplementary file along with statistical codes if possible).
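As one concrete instance of summarizing results with an effect size and an interval estimate, the sketch below computes an unadjusted odds ratio with a two-sided Wald 95% CI from a 2x2 table. The function name `odds_ratio_ci` and all cell counts are hypothetical, and the Wald interval is only one of several possible choices; it is used here because it is simple, not because the template prescribes it.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def odds_ratio_ci(a, b, c, d, level=0.95):
    """Odds ratio and two-sided Wald CI for a 2x2 table.

    a: exposed cases      b: exposed non-cases
    c: unexposed cases    d: unexposed non-cases
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("The Wald interval needs all four cells to be > 0.")
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts.
    se_log_or = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    lower = exp(log(odds_ratio) - z * se_log_or)
    upper = exp(log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical table with adequate cell counts.
or_1, lo_1, hi_1 = odds_ratio_ci(30, 70, 15, 85)
print(f"OR = {or_1:.2f} (95% CI {lo_1:.2f} to {hi_1:.2f})")

# Hypothetical sparse table: a large OR with a very wide interval.
or_2, lo_2, hi_2 = odds_ratio_ci(9, 1, 2, 8)
print(f"OR = {or_2:.2f} (95% CI {lo_2:.2f} to {hi_2:.2f})")
```

The second call shows how a few small cells can produce an unusually large OR with a very wide interval, which is the pattern associated with sparse data bias. Reporting the interval alongside the point estimate makes this visible to readers.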

Concluding remarks

We highlighted 10 essential steps to be reported in the statistical analysis section of any analytical study (figure 4). Adherence to minimum reporting of the steps specified in this report may prompt investigators to understand these concepts and to consult biostatisticians in a timely manner to apply them in their studies, improving the overall methodological quality of grant proposals and research studies. The order of reporting information in statistical analyses specified in this report is not mandatory; however, the analytical steps applicable to the specific study type should be clearly reported somewhere in the manuscript. Because the entire approach to statistical analysis depends on the study objective type and EBB practice, statistical education courses can teach the next generation of statisticians proper execution and reporting of statistical models organized by study objective type. In fact, some disciplines (figure 5) are strictly aligned with specific study objective types. Bioinformaticians are oriented toward studying determinant and prognostic models for precision medicine, while epidemiologists are oriented toward studying association and causal models, particularly in population-based observational and pragmatic settings. Data scientists are heavily involved in prediction and classification models in personalized medicine. Common to all disciplines is the use of biostatistical principles and computational tools to address any research question. Sometimes, an expert in one discipline performs the role of another. 89 We strongly recommend using a team science approach that includes an epidemiologist, biostatistician, data scientist, and bioinformatician, depending on the study objectives and needs. Clear reporting of data analyses as per the study objective type should be encouraged among all researchers to minimize heterogeneous practices and improve scientific quality and outcomes. 
In addition, we encourage investigators to strictly follow transparent reporting and quality assessment guidelines according to the study design ( https://www.equator-network.org/ ) to improve the overall quality of the study: STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) for observational studies, CONSORT (Consolidated Standards of Reporting Trials) for clinical trials, STARD (Standards for Reporting Diagnostic Accuracy Studies) for diagnostic studies, TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) for prediction modeling, and ARRIVE (Animal Research: Reporting of In Vivo Experiments) for preclinical studies. The steps provided in this document for writing the statistical analysis section are essentially different from other guidance documents, including SAMBR. 5 SAMBR provides guidance for selecting evidence-based preferred methods of statistical analysis according to different study designs, while this report suggests the global reporting of essential information in the statistical analysis section according to study objective type. In this guidance report, our suggestions pertain strictly to the reporting of methods in the statistical analysis section and their implications for the interpretation of results. Our document does not provide guidance on the reporting of sample size, results, or the statistical analysis section for meta-analysis. The examples and reviews reported in this study may be used to emphasize the concepts and related implications in medical research.

Figure 4. Summary of reporting steps, purpose, and evaluation measures in the statistical analysis section.

Figure 5. Role of interrelated disciplines according to study objective type.

Acknowledgments

The author would like to thank the reviewers for their careful review and insightful suggestions.

Contributors: AKD developed the concept and design and wrote the manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: AKD is a Journal of Investigative Medicine Editorial Board member. No other competing interests declared.

Provenance and peer review: Commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Ethics statements

Patient consent for publication

Not required.

Understanding Statistical Analysis: Techniques and Applications

Table of Contents

- Types of statistical analysis

- Importance of statistical analysis

- Benefits of statistical analysis

- Statistical analysis process

- Statistical analysis methods

- Statistical analysis software

- Statistical analysis examples

- Career in statistical analysis

- Choose the right program

- Become proficient in statistics today

Statistical analysis is the process of collecting and analyzing data in order to discern patterns and trends. It reduces bias in the evaluation of data by relying on numerical analysis. The technique is useful for interpreting research findings, developing statistical models, and planning surveys and studies.

In AI and ML, statistical analysis is a scientific tool for collecting and analyzing large amounts of data, identifying common patterns and trends, and converting them into meaningful information. Put simply, it is a data analysis tool that helps draw meaningful conclusions from raw and unstructured data.

The conclusions drawn from statistical analysis facilitate decision-making and help businesses predict the future on the basis of past trends. It can be defined as the science of collecting and analyzing data to identify trends and patterns and of presenting them. Statistical analysis involves working with numbers and is used by businesses and other institutions to derive meaningful information from data.

Given below are the 6 types of statistical analysis:

Descriptive Analysis

Descriptive statistical analysis involves collecting, interpreting, analyzing, and summarizing data and presenting them in the form of charts, graphs, and tables. Rather than drawing conclusions, it simply makes complex data easy to read and understand.

Inferential Analysis

Inferential statistical analysis focuses on drawing conclusions about a whole population from the sample data analyzed. It studies the relationships between variables or makes predictions for the whole population.
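A minimal sketch of inferential analysis is a permutation test for a difference in group means. The data and the function name `permutation_test` are hypothetical, and a permutation test is only one of many inferential methods; it is chosen here because it needs nothing beyond the standard library.

```python
import random
from statistics import mean


def permutation_test(x, y, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns the observed difference and an approximate p value.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = mean(x) - mean(y)
    pooled = list(x) + list(y)
    n_x = len(x)
    extreme = 0
    for _ in range(n_perm):
        # Under the null hypothesis the group labels are exchangeable.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_x]) - mean(pooled[n_x:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add one to numerator and denominator so the p value is never zero.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical measurements from two groups (assumed data).
treated = [5.1, 4.8, 6.0, 5.5, 5.9, 6.2, 5.4, 5.7]
control = [4.2, 4.0, 4.9, 4.4, 4.6, 3.9, 4.5, 4.3]

diff, p_value = permutation_test(treated, control)
print(f"difference in means = {diff:.3f}, p = {p_value:.4f}")
```

The test asks how often a difference at least as large as the observed one would arise if group membership were irrelevant, which is the step from describing a sample to drawing a conclusion about the population.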

Predictive Analysis

Predictive statistical analysis analyzes data to identify past trends and predict future events on the basis of those trends. It uses machine learning algorithms, data mining, data modelling, and artificial intelligence to conduct the statistical analysis of data.
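The simplest version of predicting from past trends is a straight-line fit that is extrapolated one step ahead. The sketch below uses ordinary least squares as a stand-in for the more elaborate methods named above; the monthly sales figures and the function name `fit_line` are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical past trend: monthly sales for six months (assumed data).
months = [1, 2, 3, 4, 5, 6]
sales = [102, 108, 115, 119, 127, 131]

slope, intercept = fit_line(months, sales)
forecast_month_7 = intercept + slope * 7

print(f"estimated growth per month: {slope:.2f}")
print(f"forecast for month 7: {forecast_month_7:.1f}")
```

A forecast like this assumes the past linear trend continues, which is a strong assumption; in practice the fitted model would be checked against held-out data before it is used for prediction.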

Prescriptive Analysis

Prescriptive analysis analyzes data and prescribes the best course of action based on the results. It is a type of statistical analysis that helps you make informed decisions.

Exploratory Data Analysis

Exploratory analysis is similar to inferential analysis, except that it explores previously unknown associations in the data. It analyzes potential relationships within the data.
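One common way to explore unknown associations is to screen every pair of variables by correlation and rank the pairs. The sketch below does this with Pearson correlation; the dataset and variable names are invented for illustration, and a ranking like this only suggests relationships to examine further.

```python
from itertools import combinations
from statistics import mean


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


# Hypothetical dataset with three variables measured on six people.
data = {
    "hours_studied": [2, 4, 5, 7, 8, 10],
    "exam_score": [52, 60, 63, 74, 79, 90],
    "shoe_size": [9, 7, 10, 8, 9, 8],
}

# Rank every pair of variables by the strength of their correlation.
pairs = sorted(
    ((abs(pearson(data[u], data[v])), u, v) for u, v in combinations(data, 2)),
    reverse=True,
)

for strength, u, v in pairs:
    print(f"{u} vs {v}: |r| = {strength:.2f}")
```

The strongest pair in this toy data is the one a reader would expect, while the pairs involving the unrelated variable rank lower. Any association found this way is a hypothesis to test with inferential methods, not a conclusion.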

Causal Analysis