Simple audio recognition: Recognizing keywords

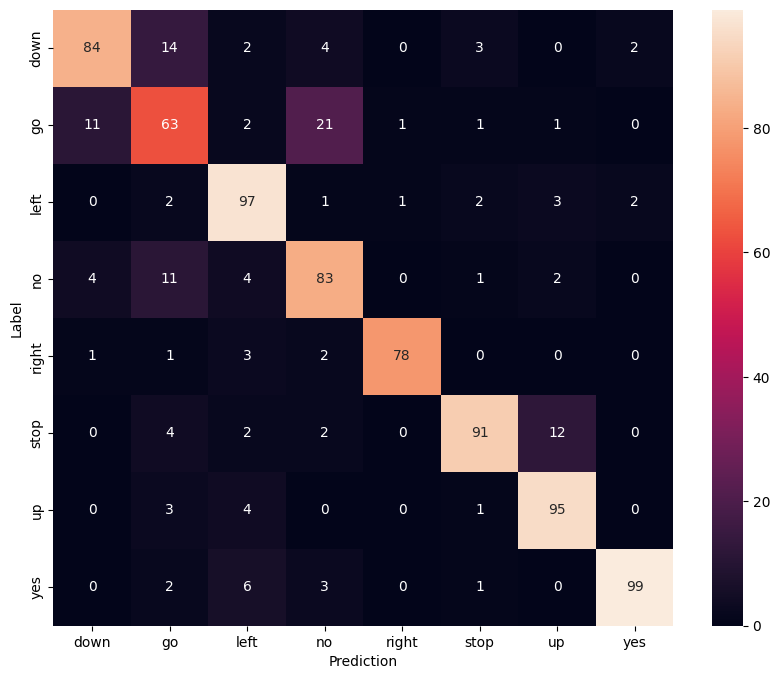

This tutorial demonstrates how to preprocess audio files in the WAV format and build and train a basic automatic speech recognition (ASR) model for recognizing eight different words. You will use a portion of the Speech Commands dataset (Warden, 2018), which contains short (one-second or less) audio clips of commands such as "down", "go", "left", "no", "right", "stop", "up" and "yes".

Real-world speech and audio recognition systems are complex. But, like image classification with the MNIST dataset, this tutorial should give you a basic understanding of the techniques involved.

Import necessary modules and dependencies. You'll be using tf.keras.utils.audio_dataset_from_directory (introduced in TensorFlow 2.10), which helps generate audio classification datasets from directories of .wav files. You'll also need seaborn for visualization in this tutorial.

Import the mini Speech Commands dataset

To save time with data loading, you will be working with a smaller version of the Speech Commands dataset. The original dataset consists of over 105,000 audio files in the WAV (Waveform) audio file format of people saying 35 different words. This data was collected by Google and released under a CC BY license.

Download and extract the mini_speech_commands.zip file containing the smaller Speech Commands dataset with tf.keras.utils.get_file:

The dataset's audio clips are stored in eight folders corresponding to each speech command: no, yes, down, go, left, up, right, and stop:

Because the clips are divided into directories this way, you can easily load the data using keras.utils.audio_dataset_from_directory.

The audio clips are 1 second or less at 16kHz. The output_sequence_length=16000 pads the short ones to exactly 1 second (and would trim longer ones) so that they can be easily batched.
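The effect of output_sequence_length can be pictured with plain Python lists. This is only a sketch of the padding and trimming behaviour described above, not the Keras implementation; the helper name pad_or_trim is made up for illustration, and padding at the end of the clip is an assumption.

```python
def pad_or_trim(samples, target_length=16000):
    """Return a copy of `samples` that is exactly `target_length` long."""
    if len(samples) >= target_length:
        # Longer clips are cut off at the target length.
        return list(samples[:target_length])
    # Shorter clips are padded with zeros (silence) at the end (assumed).
    return list(samples) + [0.0] * (target_length - len(samples))


short_clip = [0.1] * 12000  # 0.75 s at 16 kHz
long_clip = [0.1] * 20000   # 1.25 s at 16 kHz

print(len(pad_or_trim(short_clip)), len(pad_or_trim(long_clip)))  # prints 16000 16000
```

Once every clip has the same length, a batch is simply a rectangular array of shape (batch, 16000).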

The dataset now contains batches of audio clips and integer labels. The audio clips have a shape of (batch, samples, channels).

This dataset only contains single channel audio, so use the tf.squeeze function to drop the extra axis:

The utils.audio_dataset_from_directory function only returns up to two splits. It's a good idea to keep a test set separate from your validation set. Ideally you'd keep it in a separate directory, but in this case you can use Dataset.shard to split the validation set into two halves. Note that iterating over any shard will load all the data, and only keep its fraction.
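To see why sharding produces two disjoint halves, and why each shard still has to walk the whole dataset, here is a plain-Python sketch of the selection rule that Dataset.shard(num_shards, index) applies. The list of batch names is hypothetical stand-in data, and the helper is an illustration rather than the tf.data implementation.

```python
def shard(items, num_shards, index):
    """Keep every `num_shards`-th item, starting at position `index`."""
    # Every item is visited; only the matching fraction is kept.
    return [item for i, item in enumerate(items) if i % num_shards == index]


validation_batches = [f"batch{i}" for i in range(6)]  # hypothetical data

test_half = shard(validation_batches, num_shards=2, index=0)
val_half = shard(validation_batches, num_shards=2, index=1)

print(test_half)  # prints ['batch0', 'batch2', 'batch4']
print(val_half)   # prints ['batch1', 'batch3', 'batch5']
```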

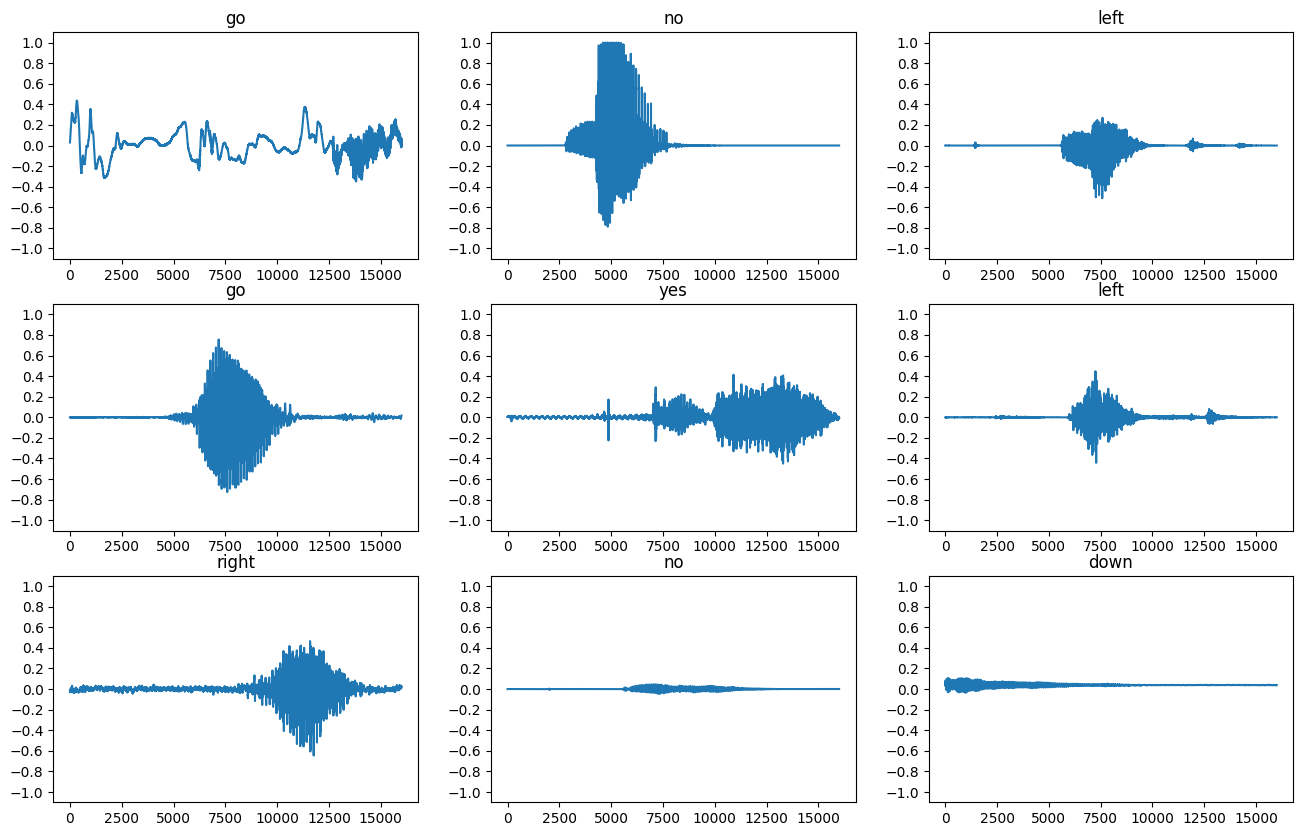

Let's plot a few audio waveforms:

Convert waveforms to spectrograms

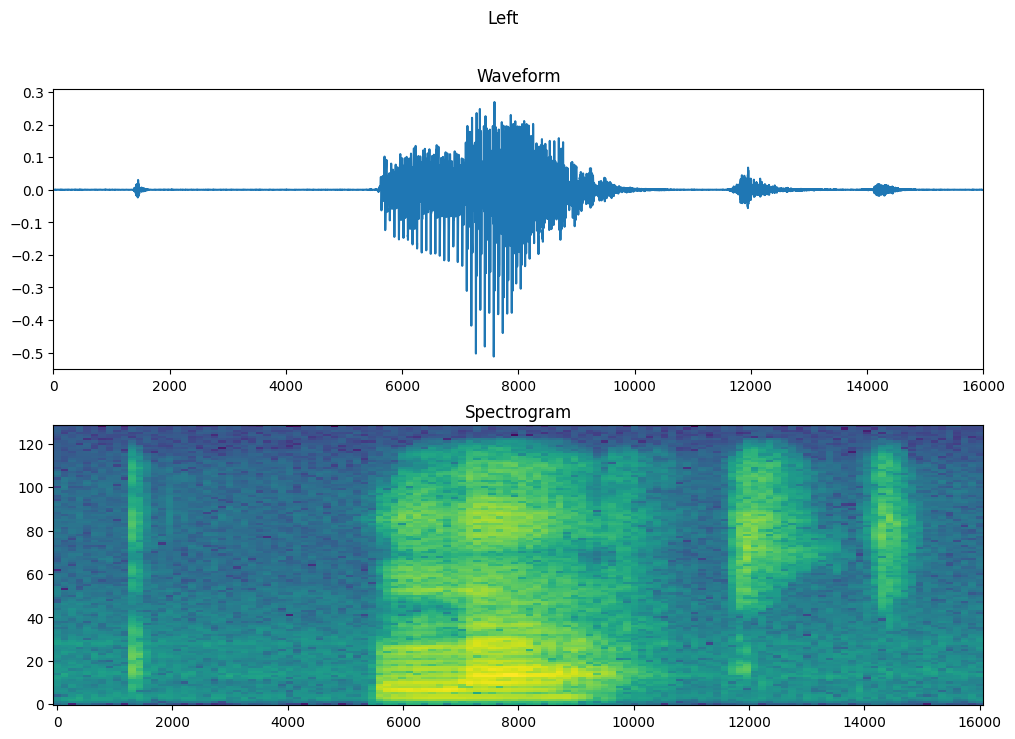

The waveforms in the dataset are represented in the time domain. Next, you'll transform the waveforms from time-domain signals into time-frequency-domain signals by computing the short-time Fourier transform (STFT). This converts the waveforms to spectrograms, which show frequency changes over time and can be represented as 2D images. You will feed the spectrogram images into your neural network to train the model.

A Fourier transform (tf.signal.fft) converts a signal to its component frequencies, but loses all time information. In comparison, the STFT (tf.signal.stft) splits the signal into windows of time and runs a Fourier transform on each window, preserving some time information, and returning a 2D tensor that you can run standard convolutions on.
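The difference can be made concrete with a toy signal whose pitch changes halfway through. The sketch below uses a naive DFT from the standard library with rectangular, non-overlapping windows; it is an illustration only (tf.signal.stft applies a Hann window by default and is far faster), and all function names are made up for this example.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of the non-negative frequency bins of a naive DFT."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def stft_magnitudes(signal, frame_length, frame_step):
    """Run the DFT on successive (rectangular) windows of the signal."""
    starts = range(0, len(signal) - frame_length + 1, frame_step)
    return [dft_magnitudes(signal[s:s + frame_length]) for s in starts]


def peak_bin(magnitudes):
    """Index of the strongest frequency bin."""
    return max(range(len(magnitudes)), key=magnitudes.__getitem__)


# Toy signal: 4 cycles per 32 samples in the first half, 8 in the second.
N = 32
signal = [math.sin(2 * math.pi * 4 * t / N) for t in range(N)]
signal += [math.sin(2 * math.pi * 8 * t / N) for t in range(N)]

whole = dft_magnitudes(signal)          # one spectrum: both tones, no timing
frames = stft_magnitudes(signal, N, N)  # one spectrum per window

print([peak_bin(f) for f in frames])    # prints [4, 8]
```

The whole-signal spectrum contains both tones (bins 8 and 16 of the 64-point DFT) but cannot say which came first, whereas the per-window peaks recover the order.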

Create a utility function for converting waveforms to spectrograms:

- The waveforms need to be of the same length, so that when you convert them to spectrograms, the results have similar dimensions. This can be done by simply zero-padding the audio clips that are shorter than one second (using tf.zeros ).

- When calling tf.signal.stft, choose the frame_length and frame_step parameters such that the generated spectrogram "image" is almost square. For more information on the choice of STFT parameters, refer to this Coursera video on audio signal processing and STFT.

- The STFT produces an array of complex numbers representing magnitude and phase. However, in this tutorial you'll only use the magnitude, which you can derive by applying tf.abs to the output of tf.signal.stft.
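A minimal sketch of such a utility function follows, together with a small helper that predicts the spectrogram's shape. The values frame_length=255 and frame_step=128 are illustrative assumptions, not settings given in the text above; they happen to produce a roughly square result for 16,000-sample clips. The helper assumes no end padding and an FFT length equal to the smallest power of two that fits the frame, and stft_output_shape is a name invented for this example.

```python
def stft_output_shape(num_samples, frame_length, frame_step):
    """Predict (num_frames, num_bins) for an STFT without end padding."""
    # Assumes the FFT length is the smallest power of two >= frame_length.
    fft_length = 1
    while fft_length < frame_length:
        fft_length *= 2
    num_frames = 1 + (num_samples - frame_length) // frame_step
    num_bins = fft_length // 2 + 1
    return num_frames, num_bins


def get_spectrogram(waveform, frame_length=255, frame_step=128):
    """Convert a waveform tensor to a magnitude spectrogram."""
    import tensorflow as tf  # deferred so the helper above runs without TensorFlow

    # The STFT returns complex values; keep only the magnitude.
    spectrogram = tf.abs(
        tf.signal.stft(waveform, frame_length=frame_length, frame_step=frame_step)
    )
    # Add a trailing channels axis so convolution layers can treat it as an image.
    return spectrogram[..., tf.newaxis]


print(stft_output_shape(16000, frame_length=255, frame_step=128))  # prints (124, 129)
```

With these assumed parameters, a one-second clip becomes a 124 x 129 "image" with a single channel.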

Next, start exploring the data. Print the shapes of one example's tensorized waveform and the corresponding spectrogram, and play the original audio:

Now, define a function for displaying a spectrogram:

Plot the example's waveform over time and the corresponding spectrogram (frequencies over time):

Now, create spectrogram datasets from the audio datasets:

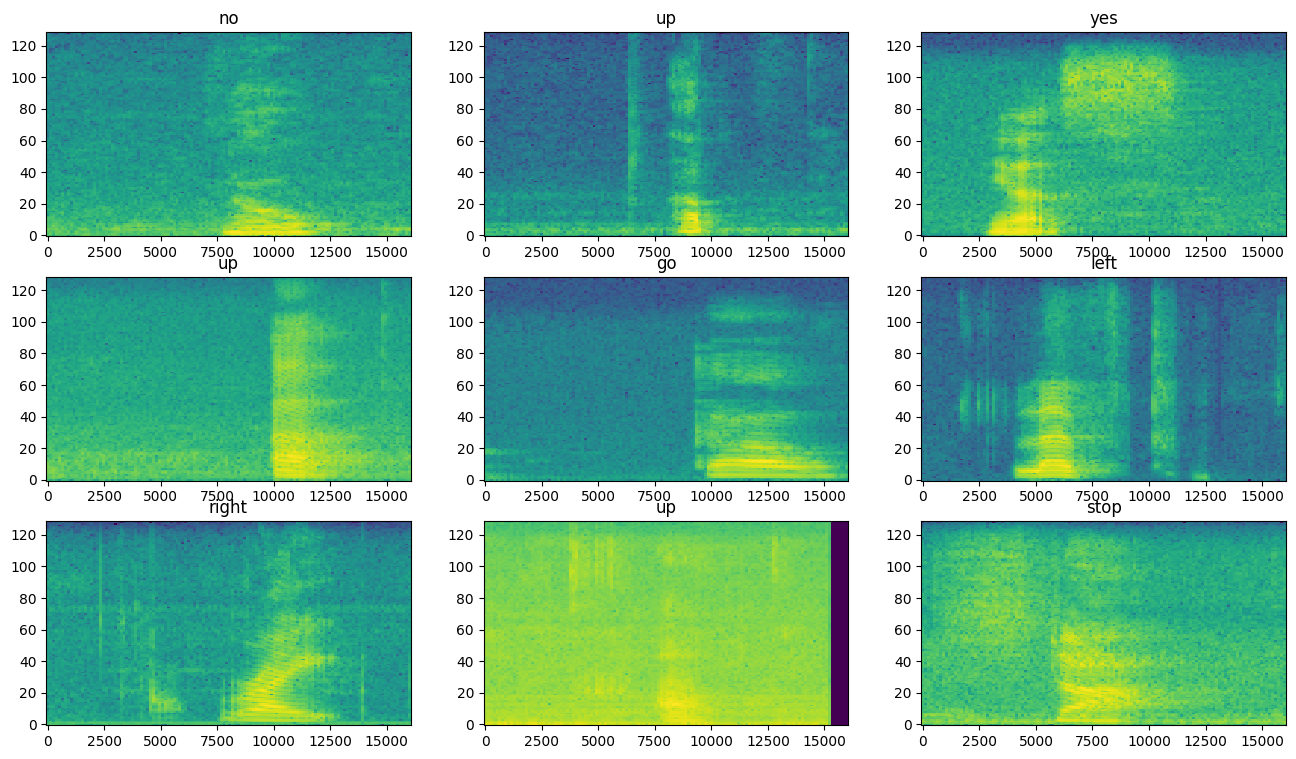

Examine the spectrograms for different examples of the dataset:

Build and train the model

Add Dataset.cache and Dataset.prefetch operations to reduce read latency while training the model:

For the model, you'll use a simple convolutional neural network (CNN), since you have transformed the audio files into spectrogram images.

Your tf.keras.Sequential model will use the following Keras preprocessing layers:

- tf.keras.layers.Resizing: to downsample the input to enable the model to train faster.

- tf.keras.layers.Normalization: to normalize each pixel in the image based on its mean and standard deviation.

For the Normalization layer, its adapt method must first be called on the training data in order to compute aggregate statistics (that is, the mean and the standard deviation).
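Conceptually, adapting and then normalizing amounts to the two steps below. This is a plain-Python sketch that treats all values as a single feature and uses the population variance; it is not the Keras layer, and the function names and pixel values are made up for illustration.

```python
import math
import statistics


def adapt(values):
    """Compute the aggregate statistics: mean and (population) variance."""
    return statistics.fmean(values), statistics.pvariance(values)


def normalize(values, mean, variance):
    """Shift to zero mean and scale to unit variance."""
    std = math.sqrt(variance)
    return [(v - mean) / std for v in values]


training_pixels = [0.0, 2.0, 4.0, 6.0, 8.0]  # hypothetical spectrogram values
mean, variance = adapt(training_pixels)
print(mean, variance)  # prints 4.0 8.0

# The statistics come from the training data and are reused on new inputs.
print(normalize([4.0, 8.0], mean, variance))
```

The key point is the order: statistics are computed once from the training set, then applied unchanged to validation and test inputs.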

Configure the Keras model with the Adam optimizer and the cross-entropy loss:

Train the model over 10 epochs for demonstration purposes:

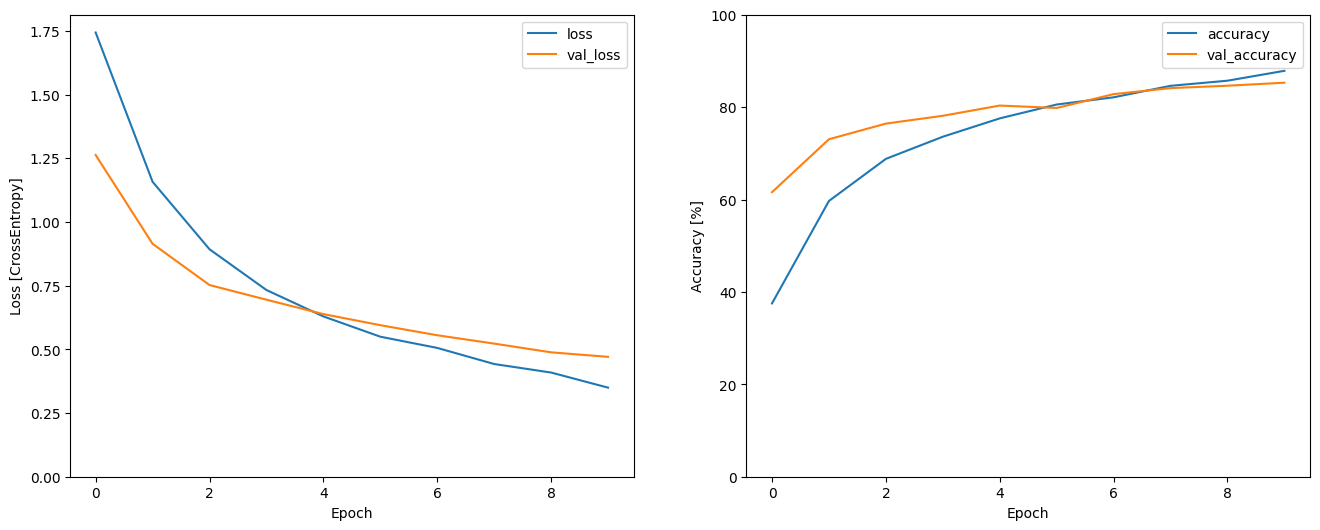

Let's plot the training and validation loss curves to check how your model has improved during training:

Evaluate the model performance

Run the model on the test set and check the model's performance:

Display a confusion matrix

Use a confusion matrix to check how well the model did classifying each of the commands in the test set:
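As a reminder of what such a matrix contains, here is a small plain-Python tally with rows indexing the true class and columns the predicted class. The three commands and the label lists are hypothetical data, not results from the model in this tutorial.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Rows index the true class, columns the predicted class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth, prediction in zip(true_labels, predicted_labels):
        matrix[truth][prediction] += 1
    return matrix


commands = ["down", "go", "no"]  # hypothetical three-class subset
y_true = [0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2, 1]

matrix = confusion_matrix(y_true, y_pred, len(commands))
for name, row in zip(commands, matrix):
    print(f"{name:>5}: {row}")
```

Correct predictions land on the diagonal; here the off-diagonal counts show "go" and "no" being confused with each other once in each direction.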

Run inference on an audio file

Finally, verify the model's prediction output using an input audio file of someone saying "no". How well does your model perform?

As the output suggests, your model should have recognized the audio command as "no".

Export the model with preprocessing

The model's not very easy to use if you have to apply those preprocessing steps before passing data to the model for inference. So build an end-to-end version:

Test run the "export" model:

Save and reload the model. The reloaded model gives identical output:

This tutorial demonstrated how to carry out simple audio classification/automatic speech recognition using a convolutional neural network with TensorFlow and Python. To learn more, consider the following resources:

- The Sound classification with YAMNet tutorial shows how to use transfer learning for audio classification.

- The notebooks from Kaggle's TensorFlow speech recognition challenge.

- The TensorFlow.js - Audio recognition using transfer learning codelab teaches how to build your own interactive web app for audio classification.

- A tutorial on deep learning for music information retrieval (Choi et al., 2017) on arXiv.

- TensorFlow also has additional support for audio data preparation and augmentation to help with your own audio-based projects.

- Consider using the librosa library for music and audio analysis.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2024-08-16 UTC.

Audio Sampling and Sample Rate

Audio sampling, or sampling, refers to the process of converting a continuous analog audio signal into a discrete digital signal. This is achieved by taking “snapshots”, i.e., samples, of the audio signal at regular intervals.

The sampling rate refers to the number of samples per second (or Hertz) taken from a continuous signal to make a discrete or digital signal. In simpler terms, it's how many times per second an audio signal is checked and its level recorded. The higher the sample rate, the more “snapshots” and the more detailed the digital representation of the sound wave.

Common Audio Sample Rates

There are several common sample rates used in digital audio. The choice of sample rate often depends on the intended use:

- 96 kHz and 192 kHz : These high-definition rates are reserved for professional music production and certain streaming services catering to audiophiles.

- 48 kHz : Adopted by the film and TV industry for clear audio syncing with video.

- 44.1 kHz : The de facto standard for music CDs and most digital audio players, balancing quality with file size.

- 16 kHz : Strikes a balance between quality and file size, used in voice commands and speech recognition technologies. (Yes, Picovoice engines require 16 kHz.)

- 8 kHz : This low rate is used when bandwidth is limited. It is typical for telecommunication systems like old-school phone calls.
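The file-size side of this tradeoff is easy to quantify for uncompressed audio. The sketch below assumes 16-bit mono PCM, which is an assumption made for illustration; compressed formats will be smaller.

```python
def pcm_bytes(sample_rate_hz, seconds, bit_depth=16, channels=1):
    """Size of raw (uncompressed) PCM audio in bytes."""
    return sample_rate_hz * seconds * channels * bit_depth // 8


for rate in (8_000, 16_000, 44_100, 48_000, 96_000):
    megabytes = pcm_bytes(rate, seconds=60) / 1_000_000
    print(f"{rate / 1000:>5.1f} kHz: {megabytes:.2f} MB per minute")
```

Under these assumptions, one minute of audio takes about 1.92 MB at 16 kHz and about 5.29 MB at 44.1 kHz.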

Upsampling and Downsampling

Upsampling and downsampling are the processes of changing an audio file’s sample rate:

Upsampling : As the name suggests, upsampling is the process of increasing the sample rate. While it doesn't improve the original audio quality beyond its initial recording, it can make the audio compatible with systems that require a higher sample rate or improve the performance of certain digital audio processing effects.

Downsampling : Downsampling refers to the process of decreasing the sampling rate. This is typically done to reduce the file size of an audio signal or to make it compatible with another system. Downsampling can result in a loss of audio quality if not done correctly, as it involves the removal of data. A low-pass filter is typically employed before downsampling to prevent aliasing, the distortion that occurs when frequencies above half the new sample rate fold back into the lower frequency range and become indistinguishable from genuine low-frequency content.
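Aliasing can be illustrated with a small calculation of where a pure tone ends up after sampling. This is a sketch for ideal sampling of a single sinusoid, and the function name is invented for this example.

```python
def alias_frequency(tone_hz, sample_rate_hz):
    """Apparent frequency of a pure tone after ideal sampling."""
    nearest_multiple = sample_rate_hz * round(tone_hz / sample_rate_hz)
    return abs(tone_hz - nearest_multiple)


print(alias_frequency(3_000, 8_000))   # prints 3000 (below 4 kHz, unchanged)
print(alias_frequency(5_000, 8_000))   # prints 3000 (folded down)
print(alias_frequency(7_000, 16_000))  # prints 7000 (representable at 16 kHz)
```

At 8 kHz, a 5 kHz tone is indistinguishable from a 3 kHz tone, which is why content above half the target rate is filtered out before downsampling.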

Why is 16 kHz Popular?

16 kHz is a popular sampling rate for several reasons. First and foremost, it strikes a balance between audio quality and file size. At 16 kHz, audio files are small enough to store and transmit while offering reasonable audio quality. Secondly, the human voice's most critical frequencies lie between 300 Hz and 3400 Hz. The Nyquist-Shannon sampling theorem states that a sampling rate of at least twice the highest frequency is required for accurate signal representation. 16 kHz is more than twice 3400 Hz and sufficient for processing the human voice. That’s why 16 kHz has become a standard in applications using human speech and voice.

Many telephone systems and Voice over Internet Protocol (VoIP) services use 16 kHz because it captures the essential range of human speech while minimizing data usage. Voice AI applications, such as virtual assistants and dictation software, often use 16 kHz as it provides sufficient quality for accurate speech analysis. Even some audiobook and podcast platforms use 16 kHz to reduce file sizes and make content more accessible to users with limited bandwidth or storage.

Although 16 kHz is the accepted industry standard for voice AI, Picovoice Consulting works with enterprise customers to optimize the engines or audio inputs when custom solutions are needed.
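The arithmetic behind that claim is short enough to write out. The helper names below are made up for illustration.

```python
def min_sample_rate(highest_frequency_hz):
    """Lowest sample rate that satisfies the Nyquist criterion."""
    return 2 * highest_frequency_hz


def nyquist_frequency(sample_rate_hz):
    """Highest frequency representable at a given sample rate."""
    return sample_rate_hz / 2


print(min_sample_rate(3_400))     # prints 6800
print(nyquist_frequency(16_000))  # prints 8000.0
```

A 16 kHz rate therefore covers content up to 8 kHz, comfortably above the 3400 Hz upper edge of the critical speech band.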

Detailed Guide on Sample Rate for ASR! [2023]

In our blog, we love exploring a bunch of cool AI stuff, like making computers understand speech, recognize things in pictures, create art, chat like humans, read handwriting, and much more!

Today, we're diving deep into a crucial aspect of training speech recognition models: the sample rate. We'll keep things simple and explain why it's a big deal.

By the end of this blog, you'll know exactly how to pick the perfect sample rate for your speech recognition project and why it matters so much! So, let's get started!

The Fundamentals of Automatic Speech Recognition (ASR)

Automatic Speech Recognition, or ASR for short, is a branch of artificial intelligence dedicated to the conversion of spoken words into written text. For an ASR model to effectively understand any language, it must undergo rigorous training using a substantial amount of spoken language data in that particular language.

This speech dataset comprises audio files recorded in the target language, along with their corresponding transcriptions. These audio files consist of recordings featuring human speech, and a crucial technical aspect of these files is the sample rate, along with bit depth, format, and so on. We will discuss the other technical features in a future post.

When training our ASR model, we have three main options: utilizing open-source datasets, utilizing off-the-shelf datasets, or creating our own custom training dataset. In the case of open-source or off-the-shelf datasets, it is essential to verify the sample rate at which the audio data was recorded. For custom speech dataset collection, it is equally vital to ensure that all audio data is recorded at the specified sample rate.

In summary, the selection of audio files with the required sample rate plays a pivotal role in the ASR training process. To gain a deeper understanding of sample rate, let's delve into its intricacies.

Sample Rate Explained

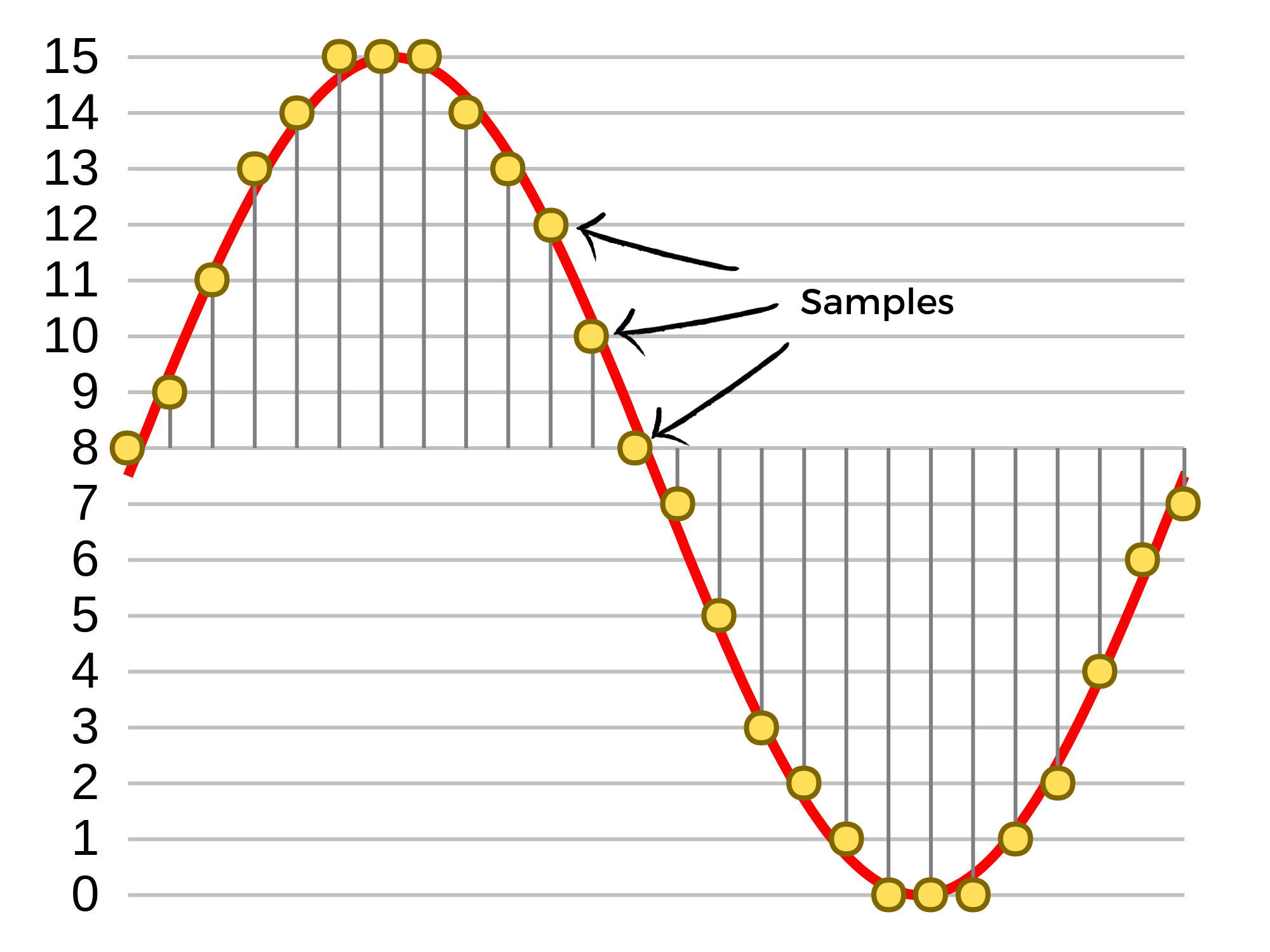

Let's dive into the concept of sample rate. In simple terms, sample rate refers to the number of audio samples captured in one second. You might also hear it called sampling frequency or sampling rate.

To measure the sample rate, we use Hertz (Hz) as the unit of measurement. Often, you'll see it expressed in kilohertz (kHz) in everyday discussions.

Now, let's visualize what the sample rate looks like on an audio graph.

The red line in the graph represents the sound signal, while the yellow dots scattered along it represent individual samples. Think of sample rate as a measure of how many of these samples are taken in a single second. For instance, if you have an audio file with an 8 kHz sample rate, it means that 8,000 samples are captured per second for that audio file.

Now, imagine you want to recreate the sound signal from these samples. Which scenario do you think would make it easier: having a high sample rate or a low one?

To clarify, think of the graph again. If you have more dots (samples), you can reconstruct the sound signal more accurately compared to having fewer dots. Essentially, a higher sample rate means a more detailed representation of the audio signal, allowing you to encode more information and ultimately resulting in better audio quality.

So, if you have two audio files, one with an 8 kHz sample rate and another with a 48 kHz sample rate, the 48 kHz file will generally sound much better.

Let's dive into why a higher sample rate allows for more information to be encoded.

Picture trying to capture images of a fast-moving car on a road. Your frequency of capturing images can be likened to the sample rate. If your capture frequency is too low, you'll miss important moments because the car is moving too quickly.

But if your capture frequency is high, you can capture each crucial moment, making it possible to faithfully reproduce the visual.

This same principle applies to audio. If your sample rate is low, meaning you're capturing fewer samples in a given time, you might miss subtle nuances in speech. Consequently, when you attempt to reproduce the audio, it won't match the original quality.

However, when you have a high enough sample rate, you capture all the nuances of speech, enabling accurate audio reproduction.

In fact, with a sufficiently high sample rate, you can reproduce audio so accurately that humans can't distinguish it from the original.

But what qualifies as a "high enough" sample rate? Does this mean that a higher sample rate is always better?

Not necessarily. Using the image analogy again, if your capture frequency is excessively high, you might end up with near-duplicate images. Similarly, in audio, an excessively high sample rate captures frequencies well above those that carry speech, which often amount to background noise and other irrelevant detail.

To determine the right sample rate, we turn to Nyquist's theorem . This theorem suggests that to avoid aliasing and accurately capture a signal, you should sample it at a rate at least twice the highest frequency you want to capture.

For humans, our ears are sensitive to frequencies between 20 Hz and 20 kHz. Following Nyquist, the minimum sample rate for us would be 40 kHz. This is why music CDs use 44.1 kHz and many other audio formats use 48 kHz: the additional 4 kHz to 8 kHz serves as a buffer that leaves room for the anti-aliasing filter applied during analog-to-digital conversion.

However, despite its high audio quality, a 48 kHz audio file may not be suitable for training Automatic Speech Recognition (ASR) models due to several reasons:

- High sample rates require more computational power, making them less practical for certain applications.

- Increased computational demands result in higher power consumption, leading to a larger carbon footprint.

- Audio files with higher sample rates have larger file sizes, necessitating more storage space.

- Larger file sizes also mean slower data transmission between modules.

- As discussed earlier, audio with a higher sample rate contains more information, which sometimes includes background noise that can then be amplified.

- Not all ASR systems or AI modules support high sample rates, which can limit interoperability.

So how do you choose the optimal sample rate for an ASR system?

It primarily depends on the use case and the frequency range of human speech. Human speech intelligibility typically falls within the range of 300 Hz to 3400 Hz. Doubling the upper limit according to Nyquist gives 6800 Hz, so a sample rate of 8000 Hz is sufficient to capture intelligible speech with some margin. This is why 8 kHz is commonly used in speech recognition systems, telecommunication channels, and codecs.

Along with adequate quality, 8 kHz brings the advantages of lower computational cost, lower power consumption, and less data to transfer. That doesn't mean 8 kHz gives the best quality; rather, it is a sweet spot in the tradeoff between quality and resource limits.

As mentioned earlier, choosing the right sample rate also depends on the use case. Many HD voice devices use 16 kHz because it captures high-frequency information more accurately than 8 kHz. If you have the computational power to train your AI model on it, you can choose 16 kHz in place of 8 kHz.

In most cases, ASR models for voice recognition tasks do not require sample rates exceeding 22 kHz. On the other hand, in scenarios where exceptional audio quality is essential, such as music and audio production, a sample rate of 44 kHz to 48 kHz is preferred.

For Text-to-Speech (TTS) applications, which require detailed acoustic characteristics, sample rates of 22.05 kHz, 32 kHz, 44.1 kHz, or 48 kHz are used to ensure accurate audio reproduction from text.

By now it is clear that choosing the optimal sample rate depends on your use case. Below are some common ASR use cases and the sample rates generally used for them.

Voice Assistants (e.g., Siri, Alexa, Google Assistant):

- Optimal Sample Rate: 16 kHz to 48 kHz - These applications prioritize high-quality audio for natural language understanding. Sample rates between 16 kHz and 48 kHz are often used to capture clear and detailed voice input.

Conversational AI, Telephony, and IVR Systems:

- Optimal Sample Rate: 8 kHz - Traditional telephone systems, Interactive Voice Response (IVR) systems, and call center ASR solutions typically use an 8 kHz sample rate to match telephony standards.

Transcription Services (e.g., Speech-to-Text):

- Optimal Sample Rate: 16 kHz to 48 kHz - When transcribing spoken content for applications like transcription services, podcasts, or video captions, higher sample rates in the range of 16 kHz to 48 kHz are often preferred for accuracy.

Medical Transcription and Dictation:

- Optimal Sample Rate: 16 kHz to 48 kHz - Medical transcription and dictation applications typically benefit from higher sample rates to capture medical professionals' detailed speech accurately.

Remember that the optimal sample rate can vary based on the specific requirements and constraints of each ASR use case. It's essential to conduct testing and evaluation to determine the best sample rate for your application while considering factors like audio quality, computational resources, and the intended environment.

FutureBeeAI is Here to Assist You!

We at FutureBeeAI assist AI organizations working on any ASR use cases with our extensive speech data offerings. With our pre-made datasets including general conversation, call center conversation or scripted monologue you can scale your AI model development. All of these datasets are diverse across 40+ languages and 6+ industries. You can check out all the published speech data here .

In addition, with our state-of-the-art mobile application and global crowd community, you can collect custom speech datasets tailored to your requirements. Our data collection mobile application Yugo allows you to record both scripted and conversational speech data with flexible technical features like sample rate, bit depth, file format, and audio channels. Check out our Yugo application here.

Feel free to reach out to us in case you need any help with training datasets for your ASR use cases. We would love to assist you!

A Complete Guide to Audio Datasets

Introduction

🤗 Datasets is an open-source library for downloading and preparing datasets from all domains. Its minimalistic API allows users to download and prepare datasets in just one line of Python code, with a suite of functions that enable efficient pre-processing. The number of datasets available is unparalleled, with all the most popular machine learning datasets available to download.

Not only this, but 🤗 Datasets comes prepared with multiple audio-specific features that make working with audio datasets easy for researchers and practitioners alike. In this blog, we'll demonstrate these features, showcasing why 🤗 Datasets is the go-to place for downloading and preparing audio datasets.

Contents

- The Hub

- Load an Audio Dataset

- Easy to Load, Easy to Process

- Streaming Mode: The Silver Bullet

- A Tour of Audio Datasets on the Hub

- Closing Remarks

The Hub

The Hugging Face Hub is a platform for hosting models, datasets and demos, all open source and publicly available. It is home to a growing collection of audio datasets that span a variety of domains, tasks and languages. Through tight integrations with 🤗 Datasets, all the datasets on the Hub can be downloaded in one line of code.

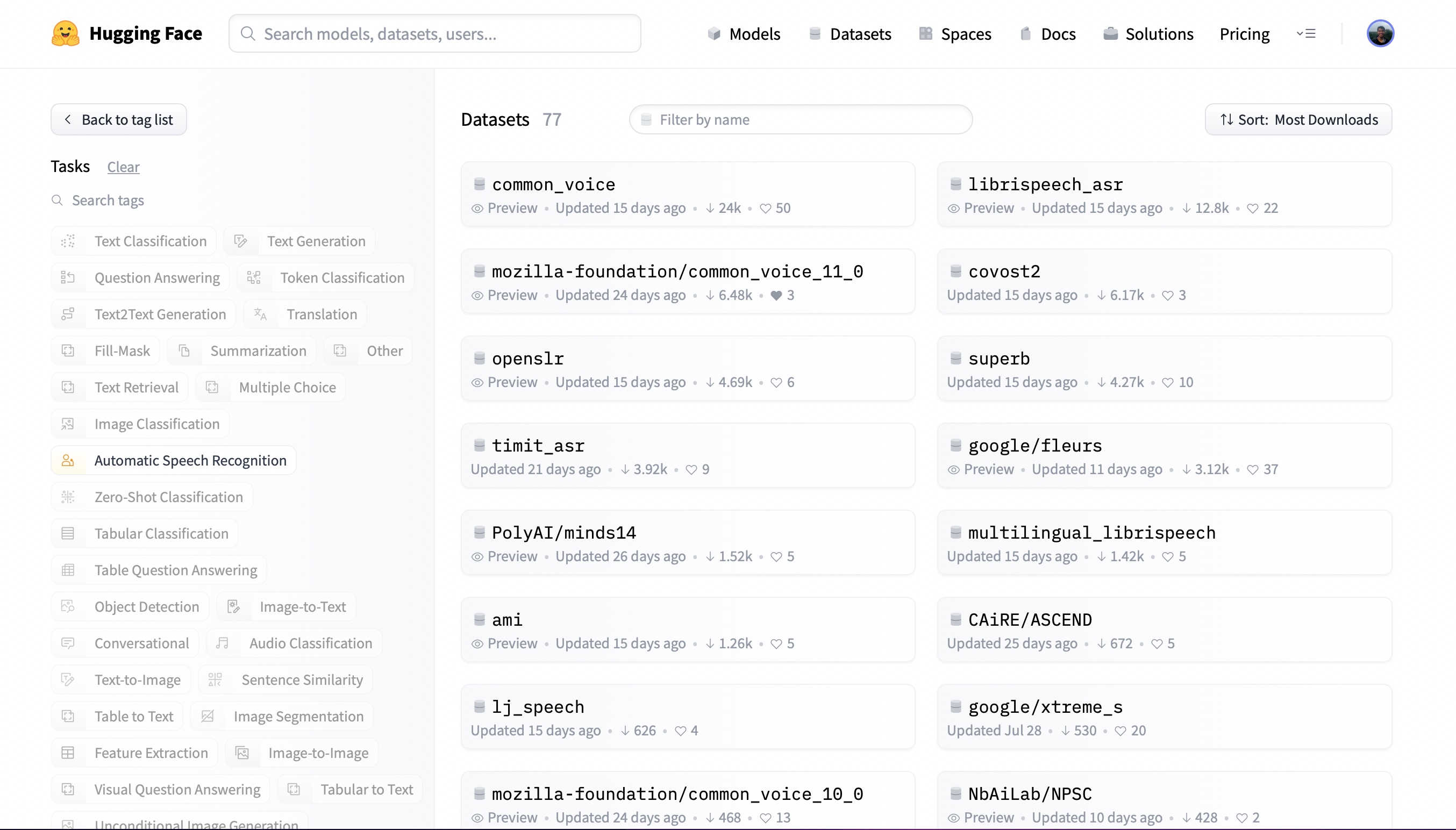

Let's head to the Hub and filter the datasets by task:

- Speech Recognition Datasets on the Hub

- Audio Classification Datasets on the Hub

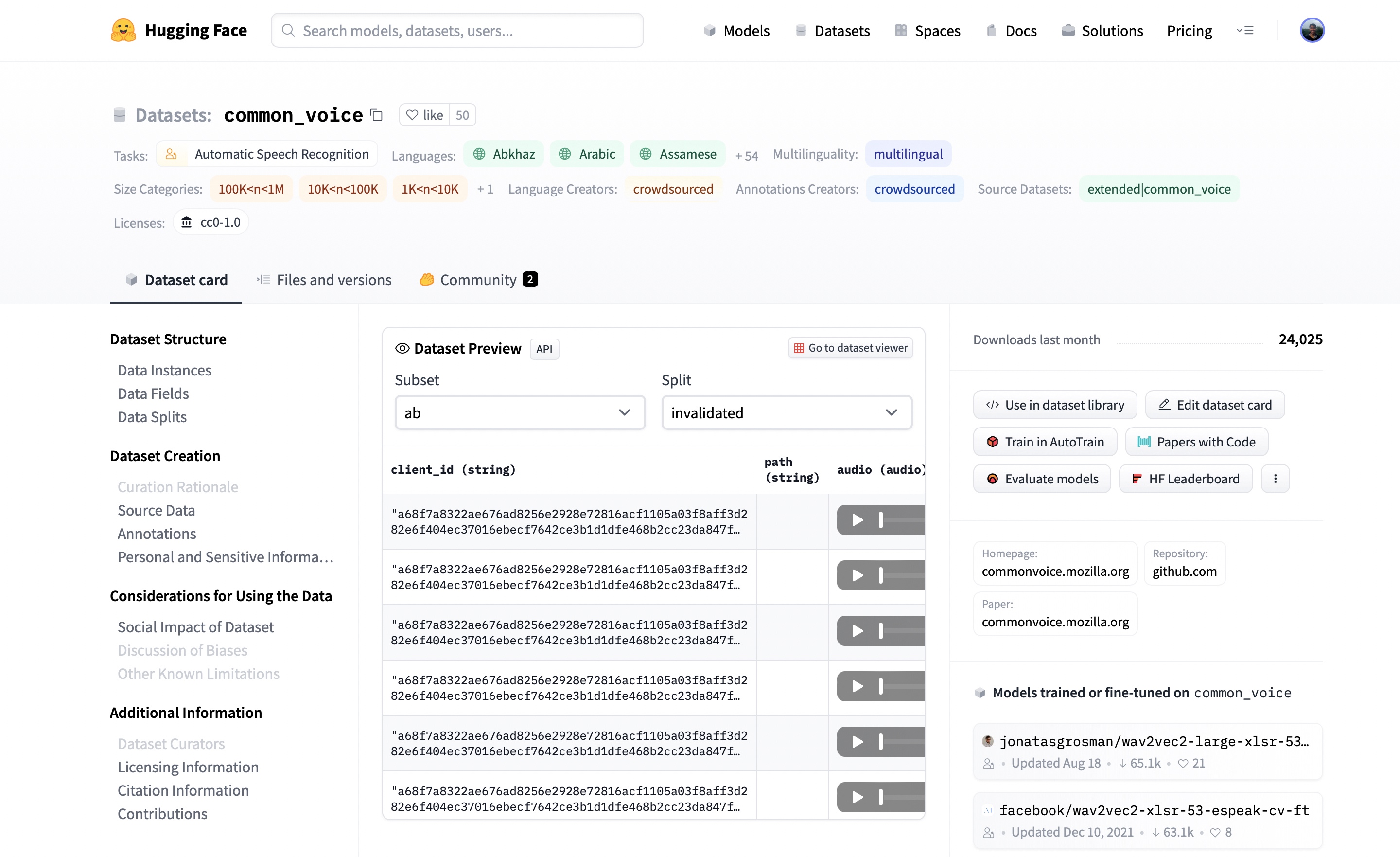

At the time of writing, there are 77 speech recognition datasets and 28 audio classification datasets on the Hub, with these numbers ever-increasing. You can select any one of these datasets to suit your needs. Let's check out the first speech recognition result. Clicking on common_voice brings up the dataset card:

Here, we can find additional information about the dataset, see what models are trained on the dataset and, most excitingly, listen to actual audio samples. The Dataset Preview is presented in the middle of the dataset card. It shows us the first 100 samples for each subset and split. What's more, it's loaded up the audio samples ready for us to listen to in real-time. If we hit the play button on the first sample, we can listen to the audio and see the corresponding text.

The Dataset Preview is a brilliant way of experiencing audio datasets before committing to using them. You can pick any dataset on the Hub, scroll through the samples and listen to the audio for the different subsets and splits, gauging whether it's the right dataset for your needs. Once you've selected a dataset, it's trivial to load the data so that you can start using it.

One of the key defining features of 🤗 Datasets is the ability to download and prepare a dataset in just one line of Python code. This is made possible through the load_dataset function. Conventionally, loading a dataset involves: i) downloading the raw data, ii) extracting it from its compressed format, and iii) preparing individual samples and splits. Using load_dataset , all of the heavy lifting is done under the hood.

Let's take the example of loading the GigaSpeech dataset from Speech Colab. GigaSpeech is a relatively recent speech recognition dataset for benchmarking academic speech systems and is one of many audio datasets available on the Hugging Face Hub.

To load the GigaSpeech dataset, we simply take the dataset's identifier on the Hub ( speechcolab/gigaspeech ) and specify it to the load_dataset function. GigaSpeech comes in five configurations of increasing size, ranging from xs (10 hours) to xl (10,000 hours). For the purpose of this tutorial, we'll load the smallest of these configurations. The dataset's identifier and the desired configuration are all that we require to download the dataset:

Print Output:

And just like that, we have the GigaSpeech dataset ready! There simply is no easier way of loading an audio dataset. We can see that we have the training, validation and test splits pre-partitioned, with the corresponding information for each.

The object gigaspeech returned by the load_dataset function is a DatasetDict . We can treat it in much the same way as an ordinary Python dictionary. To get the train split, we pass the corresponding key to the gigaspeech dictionary:

This returns a Dataset object, which contains the data for the training split. We can go one level deeper and get the first item of the split. Again, this is possible through standard Python indexing:

We can see that there are a number of features returned by the training split, including segment_id , speaker , text , audio and more. For speech recognition, we'll be concerned with the text and audio columns.

Using 🤗 Datasets' remove_columns method, we can remove the dataset features not required for speech recognition:

Let's check that we've successfully retained the text and audio columns:

Great! We can see that we've got the two required columns text and audio. The text is a string with the sample transcription and the audio a 1-dimensional array of amplitude values at a sampling rate of 16 kHz. That's our dataset loaded!

Loading a dataset with 🤗 Datasets is just half of the fun. We can now use the suite of tools available to efficiently pre-process our data ready for model training or inference. In this Section, we'll perform three stages of data pre-processing:

- Resampling the Audio Data

- Pre-Processing Function

- Filtering Function

1. Resampling the Audio Data

The load_dataset function prepares audio samples with the sampling rate that they were published with. This is not always the sampling rate expected by our model. In this case, we need to resample the audio to the correct sampling rate.

We can set the audio inputs to our desired sampling rate using 🤗 Datasets' cast_column method. This operation does not change the audio in-place, but rather signals to datasets to resample the audio samples on the fly when they are loaded. The following code cell will set the sampling rate to 8kHz:
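A minimal sketch of that call is shown below, wrapped in a function so that the 🤗 Datasets import only happens when it is used. The wrapper name set_sampling_rate is made up for this example, and resampled_length is a rough, hypothetical estimate of how many amplitude values remain after resampling; actual resamplers may differ by a sample or so.

```python
def set_sampling_rate(dataset, sampling_rate_hz):
    """Ask 🤗 Datasets to resample the audio column on the fly."""
    from datasets import Audio  # deferred: requires the `datasets` package

    # Nothing is resampled here; decoding happens lazily when samples are read.
    return dataset.cast_column("audio", Audio(sampling_rate=sampling_rate_hz))


def resampled_length(num_samples, original_rate_hz, target_rate_hz):
    """Approximate number of samples after resampling (illustrative only)."""
    return round(num_samples * target_rate_hz / original_rate_hz)


# A 3.5 s clip at 16 kHz keeps roughly half its values at 8 kHz.
print(resampled_length(56_000, 16_000, 8_000))  # prints 28000
```

With the dataset loaded earlier, usage would look like gigaspeech = set_sampling_rate(gigaspeech, 8000).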

Re-loading the first audio sample in the GigaSpeech dataset will resample it to the desired sampling rate:

We can see that the audio has been downsampled to 8kHz. The array values are also different, as we've now only got approximately one amplitude value for every two that we had before. Let's set the dataset sampling rate back to 16kHz, the sampling rate expected by most speech recognition models:

Easy! cast_column provides a straightforward mechanism for resampling audio datasets as and when required.

2. Pre-Processing Function

One of the most challenging aspects of working with audio datasets is preparing the data in the right format for our model. Using 🤗 Datasets' map method, we can write a function to pre-process a single sample of the dataset, and then apply it to every sample without any code changes.

First, let's load a processor object from 🤗 Transformers. This processor pre-processes the audio to input features and tokenises the target text to labels. The AutoProcessor class is used to load a processor from a given model checkpoint. In the example, we load the processor from OpenAI's Whisper medium.en checkpoint, but you can change this to any model identifier on the Hugging Face Hub:

Great! Now we can write a function that takes a single training sample and passes it through the processor to prepare it for our model. We'll also compute the input length of each audio sample, information that we'll need for the next data preparation step:
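The input-length part of such a function can be sketched on its own. The example below works on a plain dictionary shaped like a 🤗 Datasets audio sample and deliberately leaves out the processor call; the function name add_input_length and the sample contents are hypothetical.

```python
def add_input_length(sample):
    """Attach the clip duration in seconds to a single sample."""
    audio = sample["audio"]
    # Duration = number of amplitude values / samples per second.
    sample["input_length"] = len(audio["array"]) / audio["sampling_rate"]
    return sample


# Hypothetical sample: 32,000 amplitude values at 16 kHz.
sample = {
    "text": "hello world",
    "audio": {"array": [0.0] * 32_000, "sampling_rate": 16_000},
}

print(add_input_length(sample)["input_length"])  # prints 2.0
```

In a full data preparation function, the same dictionary would also receive the processor's input features and labels before being returned.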

We can apply the data preparation function to all of our training examples using 🤗 Datasets' map method. Here, we also remove the text and audio columns, since we have pre-processed the audio to input features and tokenised the text to labels:

3. Filtering Function

Prior to training, we might have a heuristic for filtering our training data. For instance, we might want to filter any audio samples longer than 30s to prevent truncating the audio samples or risking out-of-memory errors. We can do this in much the same way that we prepared the data for our model in the previous step.

We start by writing a function that indicates which samples to keep and which to discard. This function, is_audio_length_in_range , returns a boolean: samples that are shorter than 30s return True, and those that are longer False.
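A minimal sketch of such a function is shown below, assuming the input length is expressed in seconds. The constant name `MAX_INPUT_LENGTH` and the example durations are assumptions made for illustration.

```python
MAX_INPUT_LENGTH = 30.0  # seconds; assumed threshold taken from the 30s heuristic


def is_audio_length_in_range(input_length: float) -> bool:
    """Return True for samples shorter than the threshold, False otherwise."""
    return input_length < MAX_INPUT_LENGTH


# Hypothetical clip durations in seconds.
durations = [4.2, 31.0, 29.9, 45.5]
kept = [d for d in durations if is_audio_length_in_range(d)]
print(kept)
```

Because the function returns a plain boolean per sample, it can be handed to any filtering mechanism that expects a predicate.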

We can apply this filtering function to all of our training examples using 🤗 Datasets' filter method, keeping all samples that are shorter than 30s (True) and discarding those that are longer (False):

And with that, we have the GigaSpeech dataset fully prepared for our model! In total, this process required 13 lines of Python code, right from loading the dataset to the final filtering step.

Keeping the notebook as general as possible, we only performed the fundamental data preparation steps. However, there is no restriction to the functions you can apply to your audio dataset. You can extend the function prepare_dataset to perform much more involved operations, such as data augmentation, voice activity detection or noise reduction. With 🤗 Datasets, if you can write it in a Python function, you can apply it to your dataset!

One of the biggest challenges faced with audio datasets is their sheer size. The xs configuration of GigaSpeech contained just 10 hours of training data, but required over 13GB of storage space for download and preparation. So what happens when we want to train on a larger split? The full xl configuration contains 10,000 hours of training data, requiring over 1TB of storage space. For most speech researchers, this well exceeds the capacity of a typical hard disk drive. Do we need to fork out and buy additional storage? Or is there a way we can train on these datasets with no disk space constraints?

🤗 Datasets allows us to do just this. It is made possible through the use of streaming mode, depicted graphically in Figure 1. Streaming allows us to load the data progressively as we iterate over the dataset. Rather than downloading the whole dataset at once, we load the dataset sample by sample. We iterate over the dataset, loading and preparing samples on the fly when they are needed. This way, we only ever load the samples that we're using, and not the ones that we're not! Once we're done with a sample, we continue iterating over the dataset and load the next one.

This is analogous to downloading a TV show versus streaming it. When we download a TV show, we download the entire video offline and save it to our disk. We have to wait for the entire video to download before we can watch it, and we require as much disk space as the size of the video file. Compare this to streaming a TV show. Here, we don't download any part of the video to disk, but rather iterate over the remote video file and load each part in real-time as required. We don't have to wait for the full video to buffer before we can start watching; we can start as soon as the first portion of the video is ready! This is the same streaming principle that we apply to loading datasets.

Streaming mode has three primary advantages over downloading the entire dataset at once:

- Disk space: samples are loaded to memory one-by-one as we iterate over the dataset. Since the data is not downloaded locally, there are no disk space requirements, so you can use datasets of arbitrary size.

- Download and processing time: audio datasets are large and need a significant amount of time to download and process. With streaming, loading and processing is done on the fly, meaning you can start using the dataset as soon as the first sample is ready.

- Easy experimentation: you can experiment on a handful of samples to check that your script works without having to download the entire dataset.

There is one caveat to streaming mode. When downloading a dataset, both the raw data and processed data are saved locally to disk. If we want to re-use this dataset, we can directly load the processed data from disk, skipping the download and processing steps. Consequently, we only have to perform the downloading and processing operations once, after which we can re-use the prepared data. With streaming mode, the data is not downloaded to disk. Thus, neither the downloaded nor pre-processed data are cached. If we want to re-use the dataset, the streaming steps must be repeated, with the audio files loaded and processed on the fly again. For this reason, it is advised to download datasets that you are likely to use multiple times.

How can you enable streaming mode? Easy! Just set streaming=True when you load your dataset. The rest will be taken care of for you:

All the steps covered so far in this tutorial can be applied to the streaming dataset without any code changes. The only change is that you can no longer access individual samples using Python indexing (i.e. gigaspeech["train"][sample_idx] ). Instead, you have to iterate over the dataset, using a for loop for example.
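The iteration pattern can be illustrated with a plain Python generator. This is only an analogy under stated assumptions: `stream_samples` is a hypothetical stand-in for a streamed dataset, and the `loaded` list simply records which samples were actually produced.

```python
from itertools import islice

loaded = []  # records which samples were actually produced


def stream_samples(num_samples: int):
    """Yield samples one at a time, standing in for a streamed dataset."""
    for idx in range(num_samples):
        loaded.append(idx)  # stand-in for "fetch and decode sample idx"
        yield {"id": idx, "text": f"utterance {idx}"}


# Even with a million samples available, only the ones we ask for are produced.
stream = stream_samples(1_000_000)
first_three = list(islice(stream, 3))

print([sample["id"] for sample in first_three], len(loaded))
```

As with a streamed dataset, the generator cannot be indexed directly; samples are only reachable by iterating.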

Streaming mode can take your research to the next level: not only are the biggest datasets accessible to you, but you can easily evaluate systems over multiple datasets in one go without worrying about your disk space. Compared to evaluating on a single dataset, multi-dataset evaluation gives a better measure of the generalisation abilities of a speech recognition system (cf. End-to-end Speech Benchmark (ESB)). The accompanying Google Colab provides an example for evaluating the Whisper model on eight English speech recognition datasets in one script using streaming mode.

A Tour of Audio Datasets on The Hub

This section serves as a reference guide for the most popular speech recognition, speech translation and audio classification datasets on the Hugging Face Hub. We can apply everything that we've covered for the GigaSpeech dataset to any of the datasets on the Hub. All we have to do is switch the dataset identifier in the load_dataset function. It's that easy!

English Speech Recognition


Speech recognition, or speech-to-text, is the task of mapping from spoken speech to written text, where both the speech and text are in the same language. We provide a summary of the most popular English speech recognition datasets on the Hub:

| Dataset | Domain | Speaking Style | Train Hours | Casing | Punctuation | License | Recommended Use |
|---|---|---|---|---|---|---|---|
| LibriSpeech | Audiobook | Narrated | 960 | ❌ | ❌ | CC-BY-4.0 | Academic benchmarks |
| Common Voice 11 | Wikipedia | Narrated | 2300 | ✅ | ✅ | CC0-1.0 | Non-native speakers |
| VoxPopuli | European Parliament | Oratory | 540 | ❌ | ✅ | CC0 | Non-native speakers |
| TED-LIUM | TED talks | Oratory | 450 | ❌ | ❌ | CC-BY-NC-ND 3.0 | Technical topics |
| GigaSpeech | Audiobook, podcast, YouTube | Narrated, spontaneous | 10000 | ❌ | ✅ | apache-2.0 | Robustness over multiple domains |
| SPGISpeech | Financial meetings | Oratory, spontaneous | 5000 | ✅ | ✅ | User Agreement | Fully formatted transcriptions |
| Earnings-22 | Financial meetings | Oratory, spontaneous | 119 | ✅ | ✅ | CC-BY-SA-4.0 | Diversity of accents |
| AMI | Meetings | Spontaneous | 100 | ✅ | ✅ | CC-BY-4.0 | Noisy speech conditions |

Refer to the Google Colab for a guide on evaluating a system on all eight English speech recognition datasets in one script.

The following dataset descriptions are largely taken from the ESB Benchmark paper.

LibriSpeech ASR

LibriSpeech is a standard large-scale dataset for evaluating ASR systems. It consists of approximately 1,000 hours of narrated audiobooks collected from the LibriVox project. LibriSpeech has been instrumental in facilitating researchers to leverage a large body of pre-existing transcribed speech data. As such, it has become one of the most popular datasets for benchmarking academic speech systems.

Common Voice

Common Voice is a series of crowd-sourced open-licensed speech datasets where speakers record text from Wikipedia in various languages. Since anyone can contribute recordings, there is significant variation in both audio quality and speakers. The audio conditions are challenging, with recording artefacts, accented speech, hesitations, and the presence of foreign words. The transcriptions are both cased and punctuated. The English subset of version 11.0 contains approximately 2,300 hours of validated data. Use of the dataset requires you to agree to the Common Voice terms of use, which can be found on the Hugging Face Hub: mozilla-foundation/common_voice_11_0 . Once you have agreed to the terms of use, you will be granted access to the dataset. You will then need to provide an authentication token from the Hub when you load the dataset.

VoxPopuli

VoxPopuli is a large-scale multilingual speech corpus consisting of data sourced from 2009-2020 European Parliament event recordings. Consequently, it occupies the unique domain of oratory, political speech, largely sourced from non-native speakers. The English subset contains approximately 550 hours of labelled speech.

TED-LIUM

TED-LIUM is a dataset based on English-language TED Talk conference videos. The speaking style is oratory educational talks. The transcribed talks cover a range of different cultural, political, and academic topics, resulting in a technical vocabulary. The Release 3 (latest) edition of the dataset contains approximately 450 hours of training data. The validation and test data are from the legacy set, consistent with earlier releases.

GigaSpeech

GigaSpeech is a multi-domain English speech recognition corpus curated from audiobooks, podcasts and YouTube. It covers both narrated and spontaneous speech over a variety of topics, such as arts, science and sports. It contains training splits ranging from 10 hours to 10,000 hours, along with standardised validation and test splits.

SPGISpeech

SPGISpeech is an English speech recognition corpus composed of company earnings calls that have been manually transcribed by S&P Global, Inc. The transcriptions are fully-formatted according to a professional style guide for oratory and spontaneous speech. It contains training splits ranging from 200 hours to 5,000 hours, with canonical validation and test splits.

Earnings-22

Earnings-22 is a 119-hour corpus of English-language earnings calls collected from global companies. The dataset was developed with the goal of aggregating a broad range of speakers and accents covering a range of real-world financial topics. There is large diversity in the speakers and accents, with speakers taken from seven different language regions. Earnings-22 was published primarily as a test-only dataset. The Hub contains a version of the dataset that has been partitioned into train-validation-test splits.

AMI

AMI comprises 100 hours of meeting recordings captured using different recording streams. The corpus contains manually annotated orthographic transcriptions of the meetings aligned at the word level. Individual samples of the AMI dataset contain very large audio files (between 10 and 60 minutes), which are segmented to lengths feasible for training most speech recognition systems. AMI contains two splits: IHM and SDM. IHM (individual headset microphone) contains easier near-field speech, and SDM (single distant microphone) harder far-field speech.

Multilingual Speech Recognition

Multilingual speech recognition refers to speech recognition (speech-to-text) for all languages except English.

Multilingual LibriSpeech

Multilingual LibriSpeech is the multilingual equivalent of the LibriSpeech ASR corpus. It comprises a large corpus of read audiobooks taken from the LibriVox project, making it a suitable dataset for academic research. It contains data split into eight high-resource languages - English, German, Dutch, Spanish, French, Italian, Portuguese and Polish.

Common Voice

Common Voice is a series of crowd-sourced open-licensed speech datasets where speakers record text from Wikipedia in various languages. Since anyone can contribute recordings, there is significant variation in both audio quality and speakers. The audio conditions are challenging, with recording artefacts, accented speech, hesitations, and the presence of foreign words. The transcriptions are both cased and punctuated. As of version 11, there are over 100 languages available, both low and high-resource.

VoxPopuli

VoxPopuli is a large-scale multilingual speech corpus consisting of data sourced from 2009-2020 European Parliament event recordings. Consequently, it occupies the unique domain of oratory, political speech, largely sourced from non-native speakers. It contains labelled audio-transcription data for 15 European languages.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as 'low-resource'. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native language. The recorded audio data is paired with the sentence transcriptions to yield multilingual speech recognition over all 101 languages. The training sets contain approximately 10 hours of supervised audio-transcription data per language.

Speech Translation

Speech translation is the task of mapping from spoken speech to written text, where the speech and text are in different languages (e.g. English speech to French text).

CoVoST 2

CoVoST 2 is a large-scale multilingual speech translation corpus covering translations from 21 languages into English and from English into 15 languages. The dataset is created using Mozilla's open-source Common Voice database of crowd-sourced voice recordings. There are 2,900 hours of speech represented in the corpus.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as 'low-resource'. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native languages. An n-way parallel corpus of speech translation data is constructed by pairing the recorded audio data with the sentence transcriptions for each of the 101 languages. The training sets contain approximately 10 hours of supervised audio-transcription data per source-target language combination.

Audio Classification

Audio classification is the task of mapping a raw audio input to a class label output. Practical applications of audio classification include keyword spotting, speaker intent and language identification.

SpeechCommands

SpeechCommands is a dataset comprised of one-second audio files, each containing either a single spoken word in English or background noise. The words are taken from a small set of commands and are spoken by a number of different speakers. The dataset is designed to help train and evaluate small on-device keyword spotting systems.

Multilingual Spoken Words

Multilingual Spoken Words is a large-scale corpus of one-second audio samples, each containing a single spoken word. The dataset consists of 50 languages and more than 340,000 keywords, totalling 23.4 million one-second spoken examples or over 6,000 hours of audio. The audio-transcription data is sourced from the Mozilla Common Voice project. Time stamps are generated for every utterance on the word-level and used to extract individual spoken words and their corresponding transcriptions, thus forming a new corpus of single spoken words. The dataset's intended use is academic research and commercial applications in multilingual keyword spotting and spoken term search.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as 'low-resource'. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native languages. The recorded audio data is paired with a label for the language in which it is spoken. The dataset can be used as an audio classification dataset for language identification: systems are trained to predict the language of each utterance in the corpus.

In this blog post, we explored the Hugging Face Hub and experienced the Dataset Preview, an effective means of listening to audio datasets before downloading them. We loaded an audio dataset with one line of Python code and performed a series of generic pre-processing steps to prepare it for a machine learning model. In total, this required just 13 lines of code, relying on simple Python functions to perform the necessary operations. We introduced streaming mode, a method for loading and preparing samples of audio data on the fly. We concluded by summarising the most popular speech recognition, speech translation and audio classification datasets on the Hub.

Having read this blog, we hope you agree that 🤗 Datasets is the number one place for downloading and preparing audio datasets. 🤗 Datasets is made possible through the work of the community. If you would like to contribute a dataset, refer to the Guide for Adding a New Dataset.

Thank you to the following individuals who helped contribute to the blog post: Vaibhav Srivastav, Polina Kazakova, Patrick von Platen, Omar Sanseviero and Quentin Lhoest.


Speech Recognition with Wav2Vec2

Author: Moto Hira

This tutorial shows how to perform speech recognition using pre-trained models from wav2vec 2.0 [paper].

The process of speech recognition looks like the following.

1. Extract the acoustic features from the audio waveform

2. Estimate the class of the acoustic features frame-by-frame

3. Generate a hypothesis from the sequence of class probabilities

Torchaudio provides easy access to the pre-trained weights and associated information, such as the expected sample rate and class labels. They are bundled together and available under the torchaudio.pipelines module.

Preparation

Creating a pipeline

First, we will create a Wav2Vec2 model that performs the feature extraction and the classification.

There are two types of Wav2Vec2 pre-trained weights available in torchaudio: those fine-tuned for the ASR task, and those not fine-tuned.

Wav2Vec2 (and HuBERT) models are trained in a self-supervised manner. They are first trained with audio only for representation learning, then fine-tuned for a specific task with additional labels.

The pre-trained weights without fine-tuning can be fine-tuned for other downstream tasks as well, but this tutorial does not cover that.

We will use torchaudio.pipelines.WAV2VEC2_ASR_BASE_960H here.

There are multiple pre-trained models available in torchaudio.pipelines. Please check the documentation for details on how they are trained.

The bundle object provides the interface to instantiate the model and other information. The sampling rate and the class labels are found as follows.

The model can be constructed as follows. This process will automatically fetch the pre-trained weights and load them into the model.

Loading data

We will use the speech data from the VOiCES dataset, which is licensed under Creative Commons BY 4.0.

To load data, we use torchaudio.load().

If the sampling rate is different from what the pipeline expects, then we can use torchaudio.functional.resample() for resampling.

torchaudio.functional.resample() works on CUDA tensors as well.

When performing resampling multiple times on the same set of sample rates, using torchaudio.transforms.Resample might improve the performance.

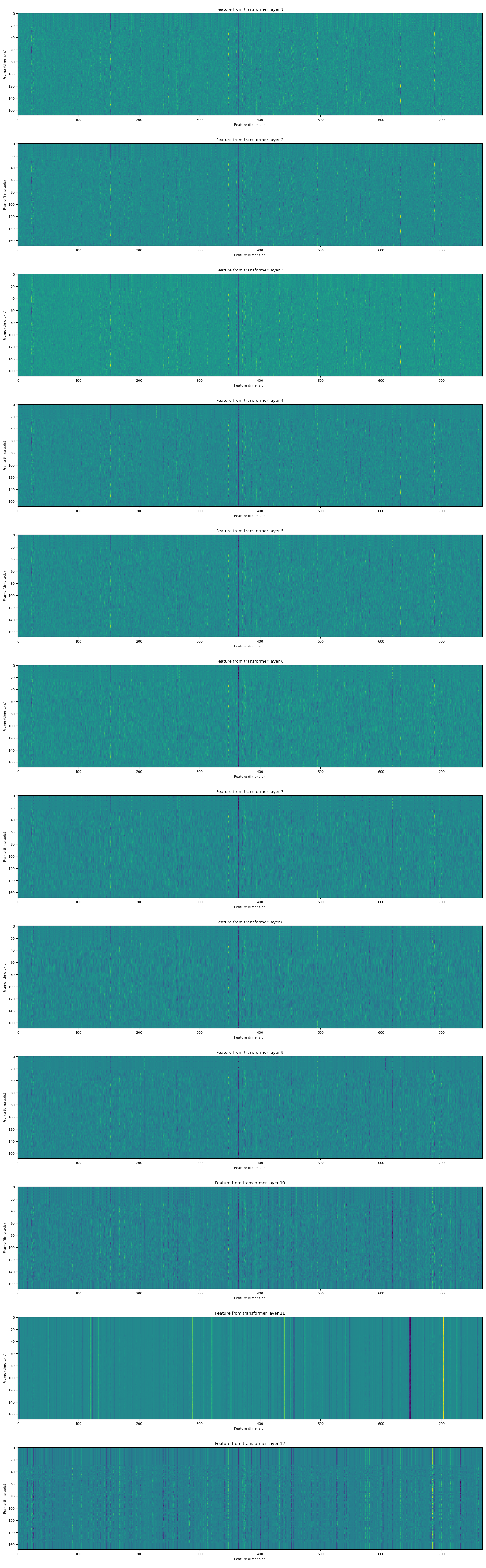

Extracting acoustic features

The next step is to extract acoustic features from the audio.

Wav2Vec2 models fine-tuned for the ASR task can perform feature extraction and classification in one step, but for the sake of the tutorial, we also show how to perform feature extraction here.

The returned features are a list of tensors. Each tensor is the output of a transformer layer.

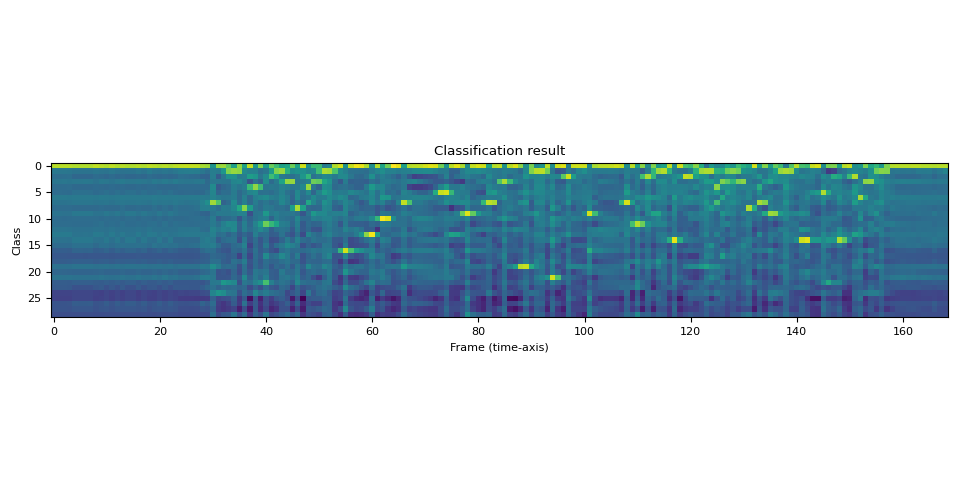

Feature classification

Once the acoustic features are extracted, the next step is to classify them into a set of categories.

The Wav2Vec2 model provides a method to perform the feature extraction and classification in one step.

The output is in the form of logits. It is not in the form of probability.

Let’s visualize this.

We can see that there are strong indications of certain labels across the timeline.
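Since the model output is logits rather than probabilities, a softmax over the label dimension converts each frame into a probability distribution. The following is a minimal pure-Python sketch for a single frame; the logit values are made up for illustration, and in practice a tensor library would do this over the whole emission at once.

```python
import math


def softmax(logits):
    """Convert one frame of logits into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical logits for a single frame over three labels.
frame_logits = [2.0, 0.5, -1.0]
probs = softmax(frame_logits)
print(probs)
```

The ordering of labels is unchanged by softmax, so picking the most likely label per frame gives the same result on logits and on probabilities.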

Generating transcripts

From the sequence of label probabilities, we now want to generate transcripts. The process of generating hypotheses is often called “decoding”.

Decoding is more elaborate than simple classification because decoding at a certain time step can be affected by surrounding observations.

For example, take words like night and knight. Even if their prior probability distributions are different (in typical conversations, night would occur far more often than knight), to accurately generate transcripts with knight, such as a knight with a sword, the decoding process has to postpone the final decision until it sees enough context.

Many decoding techniques have been proposed, and they require external resources, such as a word dictionary and language models.

In this tutorial, for the sake of simplicity, we will perform greedy decoding, which does not depend on such external components and simply picks the best hypothesis at each time step. Therefore, context information is not used, and only one transcript can be generated.

We start by defining the greedy decoding algorithm.
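The idea can be sketched in plain Python, without tensors. This is an illustrative version rather than the tutorial's original code: it assumes the emission is a list of per-frame score lists, that index 0 is the blank label, and the labels and scores below are made up.

```python
class GreedyCTCDecoder:
    """Greedy CTC decoding over a list of per-frame scores (illustrative sketch)."""

    def __init__(self, labels, blank=0):
        self.labels = labels
        self.blank = blank  # assumed index of the blank label

    def __call__(self, emission):
        # 1. Pick the best-scoring label index at every time step.
        best_path = [max(range(len(frame)), key=frame.__getitem__) for frame in emission]
        # 2. Collapse consecutive repeats of the same index.
        collapsed = [
            idx for i, idx in enumerate(best_path) if i == 0 or idx != best_path[i - 1]
        ]
        # 3. Drop blanks and map the remaining indices to labels.
        return "".join(self.labels[idx] for idx in collapsed if idx != self.blank)


# Hypothetical label set: "-" is the blank.
labels = ("-", "H", "I")
emission = [
    [0.1, 2.0, 0.3],  # H
    [0.2, 1.5, 0.1],  # H (repeat, collapsed)
    [3.0, 0.1, 0.2],  # blank
    [0.1, 0.2, 2.5],  # I
    [0.0, 0.1, 1.9],  # I (repeat, collapsed)
]

decoder = GreedyCTCDecoder(labels)
transcript = decoder(emission)
print(transcript)
```

Repeats are collapsed before blanks are removed, so a blank between two identical labels keeps both of them in the output.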

Now create the decoder object and decode the transcript.

Let’s check the result and listen again to the audio.

The ASR model is fine-tuned using a loss function called Connectionist Temporal Classification (CTC). The detail of CTC loss is explained here. In CTC, a blank token (ϵ) is a special token that represents the absence of a label at a frame; it also separates genuine repetitions of the same symbol. In decoding, consecutive repeated labels are collapsed and the blank tokens are then removed.

Conclusion

In this tutorial, we looked at how to use Wav2Vec2ASRBundle to perform acoustic feature extraction and speech recognition. Constructing a model and getting the emission is as short as two lines.


What is the best sample rate for Google Speech API? Any Google employee or expert to comment on?

So far I have tested on a very small audio file at 16 kHz and 48 kHz. I would love to conduct much bigger tests, but that costs money, as you know.

The 48 kHz sample rate provided better results. However, the documentation says 16 kHz is best.

So I am a bit confused.

Here are the 16 kHz and 48 kHz FLAC files I used to test with the Google Speech-to-Text API:

16 kHz : https://drive.google.com/file/d/1MbiW3t86W68ZqENtDqD4XdNmEV7QZbZA/view?usp=sharing

48 kHz : https://drive.google.com/file/d/1aLN1ptMJBwuYc6FdAk6CxcK1Ex4jI3vh/view?usp=sharing

And here the produced transcripts

Original sample rate of the video is 48 kHz

So any expert or employee can comment on this?

These are the 16 kHz and 48 kHz commands I used with ffmpeg to compose the flac file


2 Answers

16 kHz is just the recommended sample rate for transcribing with Speech-to-Text. As the documentation puts it:

We recommend a sample rate of at least 16 kHz in the audio files that you use for transcription with Speech-to-Text. Sample rates found in audio files are typically 16 kHz, 32 kHz, 44.1 kHz, and 48 kHz. Because intelligibility is greatly affected by the frequency range, especially in the higher frequencies, a sample rate of less than 16 kHz results in an audio file that has little or no information above 8 kHz. This can prevent Speech-to-Text from correctly transcribing spoken audio. Speech intelligibility requires information throughout the 2 kHz to 4 kHz range, although the harmonics (multiples) of those frequencies in the higher range are also important for preserving speech intelligibility. Therefore, keeping the sample rate to a minimum of 16 kHz is a good practice.

16k is the MINIMUM. Downsampling loses data. So, if your original is 48k - it's best to keep it.
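The link between sample rate and the 8 kHz figure quoted above follows from the Nyquist limit: a signal sampled at a given rate can only represent frequencies up to half that rate. A small sketch of the arithmetic, with `nyquist_frequency` as a hypothetical helper name:

```python
def nyquist_frequency(sample_rate_hz: float) -> float:
    """Highest frequency representable at a given sample rate."""
    return sample_rate_hz / 2


# Compare the ceiling for a few common sample rates.
for rate in (8_000, 16_000, 48_000):
    print(f"{rate} Hz sampling -> content up to {nyquist_frequency(rate):.0f} Hz")
```

So 16 kHz audio carries content up to 8 kHz, while downsampling a 48 kHz original to 16 kHz discards everything between 8 kHz and 24 kHz.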


DeepSpeech for Dummies - A Tutorial and Overview

What is DeepSpeech and how does it work? This post shows basic examples of how to use DeepSpeech for asynchronous and real time transcription.


What is DeepSpeech?

DeepSpeech is a neural network architecture first published by a research team at Baidu. In 2017, Mozilla created an open source implementation of this paper, dubbed “Mozilla DeepSpeech”.

The original DeepSpeech paper from Baidu popularized the concept of “end-to-end” speech recognition models. “End-to-end” means that the model takes in audio, and directly outputs characters or words. This is compared to traditional speech recognition models, like those built with popular open source libraries such as Kaldi or CMU Sphinx, that predict phonemes, and then convert those phonemes to words in a later, downstream process.

The goal of “end-to-end” models, like DeepSpeech, was to simplify the speech recognition pipeline into a single model. In addition, the theory introduced by the Baidu research paper was that training large deep learning models, on large amounts of data, would yield better performance than classical speech recognition models.

Today, the Mozilla DeepSpeech library offers pre-trained speech recognition models that you can build with, as well as tools to train your own DeepSpeech models. Another cool feature is the ability to contribute to DeepSpeech’s public training dataset through the Common Voice project.

In the below tutorial, we’re going to walk you through installing and transcribing audio files with the Mozilla DeepSpeech library (which we’ll just refer to as DeepSpeech going forward).

Basic DeepSpeech Example

DeepSpeech is easy to get started with. As discussed in our overview of Python Speech Recognition in 2021, you can download and get started with DeepSpeech using Python's built-in package installer, pip. If you have cURL installed, you can download DeepSpeech's pre-trained English model files from the DeepSpeech GitHub repo as well. Notice that the files we're downloading below are the ‘.scorer’ and ‘.pbmm’ files.

A quick heads up - when using DeepSpeech, it is important to consider that only 16 kilohertz (kHz) .wav files are supported as of late September 2021.

Let’s go through some example code on how to asynchronously transcribe speech with DeepSpeech. If you’re using a Unix distribution, you’ll need to install Sound eXchange (sox). Sox can be installed by using either ‘apt’ for Ubuntu/Debian or ‘dnf’ for Fedora as shown below.

Now let’s also install the Python libraries we’ll need to get this to work. We’re going to need the DeepSpeech library, webrtcvad for voice activity detection, and pyqt5 for accessing multimedia (sound) capabilities on desktop systems. We already installed DeepSpeech earlier, so we only need to install the other two libraries with pip like so:

Now that we have all of our dependencies, let’s create a transcriber. When we’re finished, we will be able to transcribe any ‘.wav’ audio file just like the example shown below.

Before we get started on building our transcriber, make sure the model files we downloaded earlier are saved in the ‘./models’ directory of the working directory. The first thing we’re going to do is create a voice activity detection (VAD) function and use that to extract the parts of the audio file that have voice activity.

How can we create a VAD function? We’re going to need a function to read in the ‘.wav’ file, a way to generate frames of audio, and a way to create a buffer to collect the parts of the audio that have voice activity. Frames of audio are objects that we construct that contain the byte data of the audio, the timestamp in the total audio, and the duration of the frame. Let’s start by creating our wav file reader function.

All we need to do is open the file given, assert that the channels, sample width, sample rate are what we need, and finally get the frames and return the data as PCM data along with the sample rate and duration. We’ll use ‘contextlib’ to open, read, and close the wav file.

We’re expecting audio files with 1 channel, a sample width of 2, and a sample rate of either 8000, 16000, or 32000. We calculate duration as the number of frames divided by the sample rate.
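A minimal sketch of such a reader, using only the standard library, might look like the following. The function name read_wave and the assertion messages are assumptions made for illustration; the checks mirror the constraints described above.

```python
import contextlib
import wave


def read_wave(path):
    """Read a mono, 16-bit PCM .wav file.

    Returns a tuple of (pcm_data, sample_rate, duration_in_seconds).
    """
    with contextlib.closing(wave.open(path, "rb")) as wf:
        # Reject anything that is not mono, 16-bit audio at a supported rate.
        assert wf.getnchannels() == 1, "expected 1 channel"
        assert wf.getsampwidth() == 2, "expected a sample width of 2 bytes"
        sample_rate = wf.getframerate()
        assert sample_rate in (8000, 16000, 32000), "unsupported sample rate"

        num_frames = wf.getnframes()
        pcm_data = wf.readframes(num_frames)
        # Duration is the number of frames divided by the sample rate.
        duration = num_frames / sample_rate
    return pcm_data, sample_rate, duration
```

Calling read_wave on a one-second, 16 kHz mono file should return 32,000 bytes of PCM data, since each sample takes two bytes.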

Now that we have a way to read in the wav file, let’s create a frame generator to generate individual frames containing the byte data, timestamp, and duration of a frame. We’re going to generate frames in order to ensure that our audio is processed in reasonably sized clips and to separate out segments with and without speech.

The generator function below takes the frame duration in milliseconds, the PCM audio data, and the sample rate as inputs. It uses that data to create an offset starting at 0, a frame size, and a duration. While we have not yet produced enough frames to cover the entire audio file, the function will continue to yield frames and add to our timestamp and offset.
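As a rough sketch of that generator, assuming 16-bit (2-byte) samples and a hypothetical Frame namedtuple to hold the bytes, timestamp, and duration:

```python
from collections import namedtuple

# Hypothetical container for one frame of audio.
Frame = namedtuple("Frame", ["bytes", "timestamp", "duration"])


def frame_generator(frame_duration_ms, audio, sample_rate):
    """Yield fixed-size Frames from 16-bit PCM audio bytes."""
    samples_per_frame = sample_rate * frame_duration_ms // 1000
    frame_size = samples_per_frame * 2  # 2 bytes per 16-bit sample
    duration = samples_per_frame / sample_rate  # seconds per frame
    offset = 0
    timestamp = 0.0
    # Any trailing audio shorter than a full frame is dropped.
    while offset + frame_size <= len(audio):
        yield Frame(audio[offset:offset + frame_size], timestamp, duration)
        timestamp += duration
        offset += frame_size
```

With 20 ms frames at 16 kHz, each frame holds 320 samples (640 bytes), so one second of audio yields 50 frames.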

After being able to generate frames of audio, we’ll create a function called vad_collector to separate out the parts of audio with and without speech. This function requires an input of the sample rate, the frame duration in milliseconds, the padding duration in milliseconds, a webrtcvad.Vad object, and a collection of audio frames. This function, although not explicitly called as such, is also a generator function that generates a series of PCM audio data.

The first thing we’re going to do in this function is get the number of padding frames and create a ring buffer with a deque (a double-ended queue with a fixed maximum length). Ring buffers are most commonly used for buffering data streams.

We’ll have two states, triggered and not triggered, to indicate whether or not the VAD collector function should be adding frames to the list of voiced frames or yielding that list in bytes.

Starting with an empty list of voiced frames and a not triggered state, we loop through each frame. If we are not in a triggered state, and the frame is decided to be speech, then we add it to the buffer. If after this addition of the new frame to the buffer more than 90% of the buffer is decided to be speech, we enter the triggered state, appending the buffered frames to voiced frames and clearing the buffer.

If the function is already in a triggered state when we process a frame, then we append that frame to the voiced frames list regardless of whether it is speech or not. We then append it, and the truth value for whether it is speech or not, to the buffer. After appending to the buffer, if the buffer is more than 90% non-speech, then we change our state to not-triggered, yield voiced frames as bytes, and clear both the voiced frames list and the ring buffer. If, by the end of the frames, there are still frames in voiced frames, yield them as bytes.
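The state machine described above can be sketched as follows. This is an illustration under a few assumptions, not necessarily the exact code used in this tutorial: the vad argument is anything with an is_speech(frame_bytes, sample_rate) method (which is how webrtcvad.Vad is called), Frame is a hypothetical namedtuple, and every frame is stored in the ring buffer together with its speech flag so that the 90% thresholds can be computed.

```python
import collections

# Hypothetical container for one frame of audio.
Frame = collections.namedtuple("Frame", ["bytes", "timestamp", "duration"])


def vad_collector(sample_rate, frame_duration_ms, padding_duration_ms, vad, frames):
    """Yield byte strings of PCM audio that contain voice activity."""
    num_padding_frames = padding_duration_ms // frame_duration_ms
    ring_buffer = collections.deque(maxlen=num_padding_frames)
    triggered = False
    voiced_frames = []

    for frame in frames:
        is_speech = vad.is_speech(frame.bytes, sample_rate)
        ring_buffer.append((frame, is_speech))
        if not triggered:
            num_voiced = sum(1 for _, speech in ring_buffer if speech)
            # Enter the triggered state once the buffer is >90% speech.
            if num_voiced > 0.9 * ring_buffer.maxlen:
                triggered = True
                voiced_frames.extend(f for f, _ in ring_buffer)
                ring_buffer.clear()
        else:
            # While triggered, keep every frame, speech or not.
            voiced_frames.append(frame)
            num_unvoiced = sum(1 for _, speech in ring_buffer if not speech)
            # Leave the triggered state once the buffer is >90% non-speech.
            if num_unvoiced > 0.9 * ring_buffer.maxlen:
                triggered = False
                yield b"".join(f.bytes for f in voiced_frames)
                ring_buffer.clear()
                voiced_frames = []

    # Flush whatever is left at the end of the audio.
    if voiced_frames:
        yield b"".join(f.bytes for f in voiced_frames)
```

Because the buffer must fill with non-speech before the state flips back, each yielded segment ends with a short tail of trailing silence roughly as long as the padding duration.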

That’s all we need to do to make sure that we can read in our wav file and use it to generate clips of PCM audio with voice activity detection. Now let’s create a segment generator that will return more than just the segment of byte data for the audio, but also the metadata needed to transcribe it. This function requires only one parameter, the ‘.wav’ file. It is meant to filter out all the audio frames that it does not detect voice on, and return the parts of the audio file with voice. The function returns a tuple of the segments, the sample rate of the audio file, and the length of the audio file.

Now that we’ve handled the wav file and have created all the functions necessary to turn a wav file into segments of voiced PCM audio data that DeepSpeech can process, let’s create a way to load and resolve our models.

We’ll create two functions called load_model and resolve_models. As the name suggests, the load_model function loads a model, returning the DeepSpeech object, the model load time, and the scorer load time. It requires a model and a scorer, and it measures the time it takes to load each of them with Python’s timer() function. It also creates a DeepSpeech ‘Model’ object from the ‘model’ parameter passed in.

The resolve_models function takes a directory name indicating which directory the models are in. Then it grabs the first file ending in ‘.pbmm’ and the first file ending in ‘.scorer’ and loads them as the models.
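Here is one way these two functions could be sketched. It assumes timer() refers to timeit.default_timer and that the deepspeech package is installed when load_model is called. The sorting and the error message in resolve_models are additions for predictability and are not part of the description above.

```python
import glob
import os
from timeit import default_timer as timer


def load_model(model_path, scorer_path):
    """Load a DeepSpeech model and scorer, timing each step."""
    # Imported here so that resolve_models works without deepspeech installed.
    from deepspeech import Model

    start = timer()
    ds = Model(model_path)
    model_load_time = timer() - start

    start = timer()
    ds.enableExternalScorer(scorer_path)
    scorer_load_time = timer() - start

    return ds, model_load_time, scorer_load_time


def resolve_models(dir_name):
    """Return the paths of the first .pbmm and .scorer files in dir_name."""
    models = sorted(glob.glob(os.path.join(dir_name, "*.pbmm")))
    scorers = sorted(glob.glob(os.path.join(dir_name, "*.scorer")))
    if not models or not scorers:
        raise FileNotFoundError(f"no .pbmm or .scorer file found in {dir_name}")
    return models[0], scorers[0]
```

A typical call would be load_model(*resolve_models("./models")), using the directory where the model files were saved earlier.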

Being able to segment out the speech from our wav file, and load up our models, is all the preprocessing we need leading up to doing the actual Speech-to-Text conversion.

Let’s now create a function that will allow us to transcribe our speech segments. This function will have three parameters: the DeepSpeech object (returned from load_model), the audio file, and fs, the sampling rate of the audio file. All it does, other than keep track of processing time, is call the DeepSpeech object’s stt function on the audio.
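A sketch of that wrapper is shown below. The name transcribe_segment and the returned tuple are assumptions for illustration. With the real library, the audio passed to stt is expected to be 16-bit samples (typically a NumPy int16 array), but the wrapper itself only needs an object with an stt method.

```python
from timeit import default_timer as timer


def transcribe_segment(ds, audio, fs):
    """Run speech-to-text on one segment and time the inference.

    ds    -- object exposing stt(audio), e.g. a DeepSpeech Model
    audio -- sequence of audio samples for one segment
    fs    -- sampling rate of the audio in Hz
    """
    audio_length = len(audio) / fs  # segment length in seconds
    start = timer()
    text = ds.stt(audio)
    inference_time = timer() - start
    return text, inference_time, audio_length
```

Returning the audio length alongside the inference time makes it easy to compare how long transcription took against how long the segment actually is.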

Alright, all our support functions are ready to go. Let’s do the actual Speech-to-Text conversion.

In our “main” function below we’ll go ahead and directly provide a path to the models we downloaded and moved to the ‘./models’ directory of our working directory at the beginning of this tutorial.

We can ask the user for the level of aggressiveness for filtering out non-voice, or just automatically set it to 1 (from a scale of 0-3). We’ll also need to know where the audio file is located.

After that, all we have to do is use the functions we made earlier to load and resolve our models, load up the audio file, and run the Speech-to-Text inference on each segment of audio. The rest of the code below is just for debugging purposes to show you the filename, the duration of the file, how long it took to run inference on a segment, and the load times for the model and the scorer.

The function will save your transcript to a ‘.txt’ file, as well as output the transcription in the terminal.

That’s it! That’s all we have to do to use DeepSpeech to do Speech Recognition on an audio file. That’s a surprisingly large amount of code. A while ago, I also wrote an article on how to do this in much less code with the AssemblyAI Speech-to-Text API. You can read about how to do Speech Recognition in Python in under 25 lines of code if you don’t want to go through all of this code to use DeepSpeech.

Basic DeepSpeech Real-Time Speech Recognition Example

Now that we’ve seen how we can do asynchronous Speech Recognition with DeepSpeech, let’s also build a real time Speech Recognition example. Just like before, we’ll start with installing the right requirements. Similar to the asynchronous example above, we’ll need webrtcvad, but we’ll also need pyaudio, halo, numpy, and scipy.

Halo provides an indicator that the program is streaming, while numpy and scipy are used for resampling our audio to the right sampling rate.

How will we build a real time Speech Recognition program with DeepSpeech? Just as we did in the example above, we’ll need to separate out voice activity detected segments of audio from segments with no voice activity. If the audio frame has voice activity, then we’ll feed it into the DeepSpeech model to be transcribed.

Let’s make an object for our voice activity detected audio frames. We’ll call it VADAudio (voice activity detection audio). To start, we’ll define the format, the rate, the number of channels, and the number of blocks per second for our class.

We’ll start with the class’s __init__ function. The __init__ function for our VADAudio class, defined below, will take in four parameters: a callback, a device, an input rate, and a file. Everything but the input_rate will default to None if not passed at creation.

The input rate defaults to the processing sample rate we defined on the class above. When we initialize our class, we will also create a function called proxy_callback. It calls the callback function first and then returns a tuple of None and the PyAudio signal to continue, hence the name proxy_callback.

Upon initialization, the first thing we do is set ‘callback’ to a function that puts the data into the buffer queue belonging to the object instance. We initialize an empty queue for the instance’s buffer queue. We set the device and input rate to the values passed in, and the sample rate to the class’s sample rate. Then, we derive two values: the block size, which is the class’s sample rate divided by the number of blocks per second, and the block size input, which is the input rate divided by the number of blocks per second. Blocks are the discrete segments of audio data that we will work with.

Next, we create a PyAudio object and declare a set of keyword arguments: format, set to the VADAudio class’s format value we declared earlier; channels, set to the class’s channel value; rate, set to the input rate; input, set to True; frames_per_buffer, set to the block size input calculated earlier; and stream_callback, set to the proxy_callback function we created earlier. We’ll also set the aggressiveness of the background noise filtering to the value passed in, which defaults to 3, the most aggressive setting. We set the chunk size to None for now.

If a device is passed into the initialization of the object, we set a new keyword argument, input_device_index, to the device. The device is the input device used, but what we actually pass through is the index of the device as defined by PyAudio. This is only necessary if you want to use an input device that is not the default input device of your computer. If no device was passed in and we passed in a file object, we change the chunk size to 320 and open the file to read it as bytes.

Finally, we open and start a PyAudio stream with the keyword arguments dictionary we made.

Our VADAudio class will have six functions: resample, read_resampled, read, write_wav, a frame generator, and a voice activity detected segment collector. Let’s start by making the resample function. Not all microphones support DeepSpeech’s native processing sampling rate, so this function takes in audio data and an input sample rate, and returns a byte string of the data resampled to 16 kHz.

Next, we’ll make the read and read_resampled functions together because they do basically the same thing. The read function “reads” the audio data, and the read_resampled function will read the resampled audio data. The read_resampled function will be used to read audio that wasn’t sampled at the right sampling rate initially.

The write_wav function takes a filename and data. It opens a file with the filename and allows writing of bytes with a sample width of 2 and a frame rate equal to the instance’s sample rate, and writes the data as the frames before closing the wave file.
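As a standalone sketch (written as a plain function rather than a method, with the sample rate and channel count passed in as assumed default arguments), write_wav could look like this:

```python
import wave


def write_wav(filename, data, sample_rate=16000, channels=1):
    """Write raw 16-bit PCM bytes to a .wav file."""
    with wave.open(filename, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(2)  # 2 bytes per sample, i.e. 16-bit audio
        wf.setframerate(sample_rate)
        wf.writeframes(data)
    # The with-block closes the wave file once the frames are written.
```

Saving the detected segments this way is handy for debugging, since you can listen to exactly what was passed to the model.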

Before we create our frame generator, we’ll set a property for the frame duration in milliseconds using the block size and sample rate of the instance.
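To make the arithmetic concrete, here is a small sketch of how the block sizes and the frame duration relate. The class name BlockTiming is hypothetical, and the value of 50 blocks per second is an assumption chosen because it yields the chunk size of 320 mentioned earlier at a 16 kHz processing rate.

```python
class BlockTiming:
    """Illustrates the block-size and frame-duration arithmetic only."""

    RATE_PROCESS = 16000     # DeepSpeech's processing sample rate in Hz
    BLOCKS_PER_SECOND = 50   # assumed value for illustration

    def __init__(self, input_rate=RATE_PROCESS):
        self.input_rate = input_rate
        self.sample_rate = self.RATE_PROCESS
        # Samples per block at the processing rate and at the input rate.
        self.block_size = self.sample_rate // self.BLOCKS_PER_SECOND
        self.block_size_input = self.input_rate // self.BLOCKS_PER_SECOND

    @property
    def frame_duration_ms(self):
        # Samples per block divided by samples per millisecond.
        return 1000 * self.block_size // self.sample_rate


timing = BlockTiming(input_rate=44100)
print(timing.block_size, timing.block_size_input, timing.frame_duration_ms)
```

Under these assumptions, a 44.1 kHz microphone delivers 882 samples per block, which are resampled down to 320 samples, and each block covers 20 ms of audio.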

Now, let’s create our frame generator. The frame generator will either yield the raw data from the microphone/file, or the resampled data using the read and read_resampled functions from the Audio class. If the input rate is equal to the default rate, then it will simply read in the raw data, else it will return the resampled data.

The final function we’ll need in our VADAudio is a way to collect our audio frames. This function takes a padding in milliseconds, a ratio that controls when the function “triggers” similar to the one in the basic async example above, and a set of frames that defaults to None.

The default value for padding_ms is 300, and the default for the ratio is 0.75. The padding is for padding the audio segments, and a ratio of 0.75 here means that once more than 75% of the frames in the buffer are speech, we will enter the triggered state. If there are no frames passed in, we’ll call the frame generator function we created earlier. We’ll define the number of padding frames as the padding in milliseconds divided by the frame duration in milliseconds that we derived earlier.
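The padding and ratio calculations can be illustrated in isolation. The helper names below are hypothetical, and the sketch assumes the ring buffer stores (frame, is_speech) pairs and that the threshold is a strict "greater than" comparison against the buffer’s maximum length.

```python
import collections


def num_padding_frames(padding_ms=300, frame_duration_ms=20):
    """Number of frames the ring buffer holds for the given padding."""
    return padding_ms // frame_duration_ms


def should_trigger(ring_buffer, ratio=0.75):
    """True when more than `ratio` of the buffer's capacity is speech."""
    num_voiced = sum(1 for _, is_speech in ring_buffer if is_speech)
    return num_voiced > ratio * ring_buffer.maxlen


# With 300 ms of padding and 20 ms frames, the buffer holds 15 frames.
ring_buffer = collections.deque(maxlen=num_padding_frames())
for i in range(15):
    ring_buffer.append((b"", i < 12))  # 12 speech frames, 3 non-speech
print(should_trigger(ring_buffer))
```

With 15 slots and a ratio of 0.75, the threshold is 11.25, so at least 12 frames in the buffer must be speech before the collector starts gathering a segment.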