Short Speech on Computer in English for Students and Children

3-minute speech on computer for school and college students.

Respected Principal, teachers and my dear classmates, a wonderful morning to all of you. As we have gathered here today to celebrate this day, I would like to speak a few words on “Computer”.

Offices, schools, hospitals, governmental organizations, and non-governmental organizations are all incomplete without it. Our work, whether it is school homework, office work or our mother’s household work, depends on the computer.

Computers have also contributed to one of the most important sectors of a country, i.e., defense. They help the country’s security agencies detect threats that could be harmful in the future. The defense industry also uses them for surveillance of our enemies.

Computers bring threats of their own too, such as viruses, spam and bugs. They have also become an addiction for many people. Even students spend a lot of their time sitting in front of a computer screen playing games and watching movies.

We need to remind ourselves that an excess of anything is bad. We should try to limit our use of computers, as excessive use can harm our eyes, back and brain and can lead to various other problems.

Speech on Technology for Students and Children

3-minute speech on technology.

We live in the 21st century, where we do all our work with the help of technology. We know technology by the name “technological know-how”.

Also, it implies the modern practical knowledge that we require to do things in an effective and efficient manner. Moreover, technological advancements have made life easier and more convenient.

We use this technology on a daily basis to fulfill our interests and particular duties. From morning till evening we use this technology, as it helps us in numerous ways.

Also, it benefits people of all age groups, as long as they know how to access it. However, one must never forget that anything that comes to us has its share of pros and cons.

Benefits of Technology

In our day-to-day life, technology is very useful and important. Furthermore, it has made communication much easier than ever before. The introduction of modified and advanced phones and their applications has made connecting with people much easier.

Moreover, technology has not only transformed our professional world but has also changed household life to a great extent. In addition, most of the technology that we use today is generally automatic in comparison to what our parents and grandparents had in their days.

Due to technology, the entertainment industry has more techniques to provide us with a more realistic, real-time experience.

Drawbacks of Technology

On the one hand, technology provides users with benefits or advantages, while on the other hand, it has some drawbacks too. These drawbacks or disadvantages negatively affect the importance of technology. One of the biggest problems, which everyone can easily observe, is unemployment.

In so many sectors, due to the overuse and heavy involvement of technology, machines have replaced human labor, leading to unemployment.

Moreover, certain psychological research teams have also demonstrated its disadvantages. Because of social media applications like Facebook, WhatsApp, Twitter, Instagram, etc., actual isolation has increased manifold. Ultimately, this leads to more cases of loneliness and depression among youngsters.

Dependence on technology has also deteriorated the intelligence and creativity of children. Moreover, technology is very important in today’s world, but if people use it negatively, its negative effects arise.

However, one thing that we need to keep in mind is that innovations are made to help us, not to make us victims of this technology.

How to use Technology?

Today we have technology that can transform lives. We have quick and vast access to the reservoir of knowledge through the Internet. So, we should make good use of it to solve the problems that we have around the world.

In the past, people used to send money orders, personal letters, or greeting cards by post, and these took many days to reach their destination, but now we can send them much more easily within a few minutes.

Nowadays, we can easily transfer money online through our mobile phone and can send greetings through e-mail within a matter of minutes.

Besides, we cannot simply sum up the advantages and usefulness of technology at our fingertips.

In conclusion, I would say that it depends on each person to what degree she or he wants to be dependent on technology. Moreover, nothing in the world comes easy, and it’s up to our conscience to decide what we want to learn from the things that are provided to us.

Technology is not just a boon but a curse too. On the one hand, it can save lives; on the other hand, it can destroy them too.

Technology in This Generation

We live in a generation in which technology surrounds us on all sides. Our everyday life runs on technology, be it in the form of an alarm clock or a table lamp. Technology has become an important part of our daily lives, so it is important for students to be familiar with the term. We have therefore provided a long speech on technology for students of all age groups. There is also a short speech and a 10-line speech in this article.

Long Speech on Technology

A warm welcome to everyone gathered here today. I am here to deliver a speech on technology, which plays a tremendous role in our day-to-day life. We all live in a generation where everything is dependent on technology. Let’s understand what technology is through the lens of science.

Technology comes in tangible and intangible forms, and it is created by exerting physical and mental effort to achieve something that adds value. For example, a mobile phone is tangible, and the network connection used by the phone is intangible. Technology has become indispensable, resulting in economic benefits, better health care, time savings, and a better lifestyle.

Due to technology, we have a significant amount of knowledge to improve our lives and solve problems. We can get our work done efficiently and effectively. As long as people know how to access technology, it can greatly benefit those of all ages. Technology is constantly being modified and upgraded with every passing year.

The evolution of technology has made it possible to achieve a lot in less time. Technology has given us tools and machines to solve problems around the world. There has been a complete transformation in the way we do things because of contributions from scientific technology. We can complete more tasks while saving time, and hence we are in a better place than previous generations.

Right from the ringing of the morning alarm to switching off the fan, everything runs on technology. Even the microphone that I am using is a product of technology, and so the list continues. With the invention of several hi-tech products, our daily needs are available on a screen at our fingertips. These innovations and technologies have made our lives a lot easier. Everything can be done from the comfort of your home within a couple of hours or so. These technologies have not only helped us on digital platforms but have also given us innovations in the medical, educational, industrial and agricultural sectors. In older generations, it would take days to get anything solved, and there were not many treatments for several diseases.

But today, with the innovations of technology, many diseases can be diagnosed and treated within a shorter period of time. The relationship between humans and technology has continued for ages and has given rise to many innovations. It has made it easier for us to handle our daily chores at home, in the office, at school and in the kitchen. It has made basic necessities and safer living spaces available. We can sit at home comfortably and make transactions through online banking. Online shopping, video calling, and attending video lectures on the phone have all become possible due to the invention of the internet.

People in the past would write letters to communicate with one another, and today due to technology, traditional letters have been replaced by emails and mobile phones. These features are the essential gifts of technology. Everything is just at our fingertips, right from turning on the lights to doing our laundry. The whole world runs on technology and hence, we are solely dependent on it. But everything has its pros and cons. While the benefits of technology are immense, it also comes with some negative effects and possibly irreversible damages to humanity and our planet.

We have become so dependent on technology that we often avoid doing things on our own. As a result, it makes us lazy and physically inactive. This has also led to several health issues such as obesity and heart disease. We prefer booking a cab online rather than walking a few kilometres. Technology has increased screen time, and thus children are no longer used to playing in playgrounds but are rather found spending hours on their phones playing video games. This has eroded children’s creativity, intelligence, and memory. No doubt, technology is an essential part of our life, but we should not be totally dependent on it. We should practise being more fit and do regular activities on our own to maintain a healthy lifestyle.

Another aspect that has badly affected us is unemployment, since technology has replaced human involvement. Social media platforms like Instagram, Facebook, Twitter, etc., were meant to connect people and widen our community circle. Still, they have made people all the more lonely, with cases of depression on the rise among the youth.

There are several controversies around the way world leaders have used technology in defence and industrialisation under the banner of development and advancement. The side effects of technology have included pollution, climate change, forest fires, extreme storms, cyclones, impure air, global warming, shrinking land area and the depletion of natural resources. It’s time we changed our outlook from selfish technology to responsible technology. Every nation needs to set aside budgets for sustainable technological development.

As students, we should develop creative problem solving using critical thinking to bring clean technology into our world. As we improve our nation, we must think of our future for a greener and cleaner tomorrow. You would be glad to know that several initiatives have been launched to raise awareness among children and youth and encourage them to invent cleaner technology.

For example, 15-year-old Vinisha Umashankar invented a solar ironing cart, was named a finalist for the Earthshot Prize, founded by the Royal Foundation of the Duke and Duchess of Cambridge, and was invited to speak at the COP26 climate change conference in Glasgow, Scotland. Her invention should be an inspiration to each one of us to pursue clean technology.

Japan, America, Germany, China and South Korea are often counted among the most technologically advanced countries. We Indians will make our mark on this list someday. Technology has a vital role in our lives, but let us be mindful that we control technology and that technology doesn’t control us. Technology is a tool to elevate humanity and is not meant to be a self-destroying mechanism under the pretext of economic development. Lastly, I would like to conclude my speech by saying that technology is a boon for our society, but we should use it in a productive way.

A Short Speech on Technology

A warm greeting to everyone present here. Today I am here to talk about technology and how it has gifted us with various innovations. Technology, as we know it, is the application of scientific ideas to develop a machine or device that serves human needs. We human beings are completely dependent on technology in our daily lives. We use technology in every aspect of life, from household needs, schools and offices to communication and entertainment. Our lives have become more comfortable because of it, and we are in a much better and more comfortable position than older generations. This is possible because of the various contributions and innovations made in the field of technology. Everything has been made easily accessible at our fingertips, from buying something online to making a banking transaction. Technology has also led to the invention of the internet, which lets us search for any information on Google. But there are also some disadvantages. Relying too much on technology has made us physically lazy and unhealthy due to the lack of physical activity. Children have become more drawn to video games and social media, which has led to obesity and depression. Since they are no longer used to playing outside and socialising, they often feel isolated. Therefore, we must not be totally dependent on technology and should try to use it in a productive way.

10 Lines Speech on Technology

Technology has taken an important place in our lives and is considered an asset for our daily needs.

The world around us depends entirely on technology, which makes our lives easier.

The innovation of phones, televisions and laptops has digitally served the purpose of entertainment today.

Technology has not only helped us digitally but has also led to various innovations in the field of medical science.

Earlier it took years to diagnose and treat a particular disease, but today technology has made the early diagnosis of several diseases possible.

We, in this generation, like to do things from our own comfort within a short period of time. Technology has made this possible.

All our daily activities such as banking, shopping, entertainment, learning and communication can be done on a digital platform just by a click on our phone screen.

Although all these gifts of technology really are making our lives faster and easier, technology also has several disadvantages.

Since we are all highly dependent on technology, it has reduced our daily physical activity. We no longer put in the effort to do anything on our own, as everything is available at a click.

Children nowadays are more drawn to online video games than to playing outside in the playground. These habits make them more physically inactive.

FAQs on Speech on Technology

1. Which kind of technology is the most widely used nowadays?

Artificial Intelligence (AI) is among the fields of technology used most widely nowadays and is expected to grow even more in the future. With AI being adopted in numerous sectors and industries, and with research on it continuing, it will not be long before we see more forms of AI in our daily lives.

2. What is the biggest area of concern with using technology nowadays?

Protection of the data you have online is the biggest area of concern. With hacking and cyberattacks being so common, it is important for everyone to ensure they do not post sensitive data online and be cautious when sharing information with others.

3 Minute Speech On Information Technology

Speech On Information Technology: Information Technology is a way or method of sending, receiving and storing information using electronic equipment like computers, smartphones and many others. We use it knowingly or unknowingly. In the speech below we will understand this technology.

Short Speech On Information Technology [3 Minutes]

Before I deliver my speech, I would like to extend my best wishes to you all. I also want to thank you for giving me a chance to share my views on Information Technology.

Information Technology refers to the use of electronic gadgets like computers, smartphones and tablets to send, receive and store information. It has transformed the way of living of the whole of humanity. Thanks to I.T. (Information Technology), information is now available to everyone.

With the help of Information Technology, today anyone can gain knowledge of almost every type from various sources. Information Technology has not only transformed the lives of ordinary people but has also assisted offices and organisations.

The Internet, which has brought a drastic change in the way people acquire knowledge, is also a gift of Information Technology. Today, we are just one click away from any kind of information and knowledge with the use of the Internet.

As discussed above, the Internet has impacted the lives of common people by making information accessible and affordable. Work from home is made possible by Information Technology. Students can attend classes from their homes with the help of it.

If we talk about the advantages of Information Technology, it has multiple benefits for all. In fact, we cannot give a complete count because it keeps evolving and presenting us with new benefits. You may not even be aware of many of the benefits you are taking advantage of. For example, ATM cards, Aadhaar cards, smart cards, UPI, FASTag, etc. are some of the latest applications of Information Technology.

Here are some advantages of Information Technology:

- Access to Information

- Saves natural resources

- Ease of mobility

- Better communication

- Cost efficient

- Offers new jobs

- Improved Banking

- Globalization

- Learning made easy

- Reduced distance

- Entertainment

Nothing in this world exists without disadvantages, and Information Technology has several of its own. On the one hand, I.T. offers new job opportunities; on the other hand, it has taken away the jobs of many people. As for security, Information Technology is not yet secure enough.

To sum it up, we can say that Information Technology is both beneficial and harmful at the same time. It is obvious that Information Technology offers us manifold benefits, but data privacy is always at stake. It totally depends on the user how carefully and intelligently he or she is using this technology.

There is a lot more to say, but time is limited and we should respect it, so I will stop here.

Thank you all for listening to my words. I hope you liked my speech.

Speech on Technology In Education

Technology is transforming how you learn at school. It’s like a new friend that makes studying interesting and fun. Imagine how cool it is when your homework comes alive on your computer screen. That’s the magic of technology in education!

1-minute Speech on Technology In Education

First off, we know technology has changed many aspects of our lives, and it’s doing the same for education. You see, students no longer rely only on books. Now, they use laptops, tablets, and even their phones to learn. This helps them to access knowledge from all around the world.

Also, with technology, learning is becoming more personalized. Apps and online platforms can adjust to your speed and style of learning. If you find math tricky, the app can provide extra practice in that area. It’s like having a personal tutor right in your pocket!

Let’s not forget teachers. Technology also helps them. They can use digital tools to prepare classes, track student progress, and even communicate with parents. So, it makes their job easier and more effective.

Lastly, technology teaches us important skills for the future. By using technology, students learn how to solve problems, think critically, and work in teams. These are valuable skills in the digital age.

2-minute Speech on Technology In Education

Good day, everyone!

First, let’s look at how technology makes learning more exciting and interactive. Think about the computer or tablet you use at school or home. Now, imagine learning about the solar system by exploring it in a 3D model on your device. It’s like taking a trip to outer space without leaving your room! Cool, right? That’s what technology does. It makes learning fun and brings our textbooks to life.

Next, technology also helps us learn at our own pace. Have you ever felt stuck on a math problem while doing your homework? With technology, you can now use learning apps to practice and understand these problems better. You can learn whenever you want, wherever you want, and at your own speed. That’s the beauty of tech in education.

Let’s not forget how technology helps teachers. With tech tools, teachers can design interesting lessons and track our progress better. They can quickly identify where we need help and provide us with more support. It’s like having a personal assistant that helps teachers guide us better.

Finally, technology helps us stay connected. We can collaborate on projects with classmates, even when we can’t meet them. We can share ideas, give feedback, and learn from each other. This way, technology makes learning a team sport.

In conclusion, technology in education is like a friend who makes learning fun, easy, and accessible. It’s a tool that opens up new worlds and helps us become better learners. So, next time you pick up your tablet or log into your online class, remember, you’re not just using a device – you’re stepping into a world of endless possibilities.

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text, is a capability that enables a program to process human speech into a written format.

While speech recognition is commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one whereas voice recognition just seeks to identify an individual user’s voice.

IBM has had a prominent role within speech recognition since its inception, releasing “Shoebox” in 1962. This machine had the ability to recognize 16 different words, advancing the initial work from Bell Labs in the 1950s. However, IBM didn’t stop there, but continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. This speech recognition software had a 42,000-word vocabulary, supported English and Spanish, and included a spelling dictionary of 100,000 words.

While speech technology had a limited vocabulary in the early days, it is utilized in a wide number of industries today, such as automotive, technology, and healthcare. Its adoption has only continued to accelerate in recent years due to advancements in deep learning and big data. Research shows that this market is expected to be worth USD 24.9 billion by 2025.

Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go, evolving responses with each interaction.

The best kind of systems also allow organizations to customize and adapt the technology to their specific requirements — everything from language and nuances of speech to brand recognition. For example:

- Language weighting: Improve precision by weighting specific words that are spoken frequently (such as product names or industry jargon), beyond terms already in the base vocabulary.

- Speaker labeling: Output a transcription that cites or tags each speaker’s contributions to a multi-participant conversation.

- Acoustics training: Attend to the acoustical side of the business. Train the system to adapt to an acoustic environment (like the ambient noise in a call center) and speaker styles (like voice pitch, volume and pace).

- Profanity filtering: Use filters to identify certain words or phrases and sanitize speech output.
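The profanity filtering item above can be illustrated with a minimal Python sketch. It is not how any particular product implements the feature; the function name `sanitize_transcript` and the block list `BLOCKED_TERMS` are hypothetical, and the approach shown (masking whole words found in a list) is only one simple way to sanitize transcribed text.

```python
import re

# Hypothetical block list for illustration; a real deployment supplies its own.
BLOCKED_TERMS = {"darn", "heck"}


def sanitize_transcript(text, blocked=BLOCKED_TERMS, mask_char="*"):
    """Replace each blocked word in a transcript with a same-length mask."""

    def mask(match):
        word = match.group(0)
        # Compare case-insensitively so "HECK" and "heck" are both masked.
        if word.lower() in blocked:
            return mask_char * len(word)
        return word

    # Visit every run of letters/apostrophes; punctuation is left untouched.
    return re.sub(r"[A-Za-z']+", mask, text)


if __name__ == "__main__":
    print(sanitize_transcript("Well, darn it, what the HECK!"))
```

Matching whole words rather than substrings avoids masking harmless words that merely contain a blocked term.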

Meanwhile, speech recognition continues to advance. Companies, like IBM, are making inroads in several areas, the better to improve human and machine interaction.

The vagaries of human speech have made development challenging. It’s considered to be one of the most complex areas of computer science – involving linguistics, mathematics and statistics. Speech recognizers are made up of a few components, such as the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output.

Speech recognition technology is evaluated on its accuracy, measured as word error rate (WER), and on its speed. A number of factors can affect word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Research from Lippmann estimates the human word error rate to be around 4 percent, but it’s been difficult to replicate the results from this paper.
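Word error rate is commonly defined as the number of word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of words in the reference. The sketch below computes it with a word-level edit distance. The function name `word_error_rate` is an assumption made for illustration, and real evaluations usually normalize casing and punctuation first, which this sketch does not do.

```python
def word_error_rate(reference, hypothesis):
    """Return (substitutions + deletions + insertions) / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of four reference words.
    print(word_error_rate("please order the pizza", "please order a pizza"))
```

Because insertions are counted, the value can exceed 1.0 when the hypothesis is much longer than the reference.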

Various algorithms and computation techniques are used to recognize speech into text and improve the accuracy of transcription. Below are brief explanations of some of the most commonly used methods:

- Natural language processing (NLP): While NLP isn’t necessarily a specific algorithm used in speech recognition, it is the area of artificial intelligence which focuses on the interaction between humans and machines through language, in both speech and text. Many mobile devices incorporate speech recognition into their systems to conduct voice search (e.g. Siri) or provide more accessibility around texting.

- Hidden Markov models (HMM): Hidden Markov models build on the Markov chain model, which stipulates that the probability of the next state hinges only on the current state, not on the states before it. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are utilized as sequence models within speech recognition, assigning labels to each unit (words, syllables, sentences, etc.) in the sequence. These labels create a mapping with the provided input, allowing the model to determine the most appropriate label sequence.

- N-grams: This is the simplest type of language model (LM), which assigns probabilities to sentences or phrases. An N-gram is a sequence of N words. For example, “order the pizza” is a trigram or 3-gram and “please order the pizza” is a 4-gram. Grammar and the probability of certain word sequences are used to improve recognition and accuracy.

- Neural networks: Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes. Each node is made up of inputs, weights, a bias (or threshold) and an output. If that output value exceeds a given threshold, it “fires” or activates the node, passing data to the next layer in the network. Neural networks learn this mapping function through supervised learning, adjusting based on the loss function through the process of gradient descent. While neural networks tend to be more accurate and can accept more data, this comes at a performance efficiency cost as they tend to be slower to train compared to traditional language models.

- Speaker Diarization (SD): Speaker diarization algorithms identify and segment speech by speaker identity. This helps programs better distinguish individuals in a conversation and is frequently applied at call centers distinguishing customers and sales agents.
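To make the N-gram item in the list above concrete, here is a small Python sketch that extracts N-grams from a word list and estimates a bigram probability by simple counting. The helper names `ngrams` and `bigram_probability` and the three-sentence toy corpus are assumptions made for illustration; production language models are trained on far larger corpora and apply smoothing, which is omitted here.

```python
from collections import Counter


def ngrams(words, n):
    """Return every run of n consecutive words as a tuple."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def bigram_probability(sentences, prev_word, word):
    """Estimate P(word | prev_word) from raw counts (no smoothing)."""
    context_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # Every word except the last one in a sentence starts a bigram.
        context_counts.update(words[:-1])
        bigram_counts.update(ngrams(words, 2))
    if context_counts[prev_word] == 0:
        return 0.0
    return bigram_counts[(prev_word, word)] / context_counts[prev_word]


if __name__ == "__main__":
    # Toy corpus, invented for this example.
    corpus = ["please order the pizza", "order the pasta", "order the pizza now"]
    print(ngrams("order the pizza".split(), 3))
    print(bigram_probability(corpus, "the", "pizza"))
```

In this toy corpus "the" is followed by "pizza" twice and "pasta" once, so a recognizer using these counts would prefer "pizza" when the audio after "order the" is ambiguous.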

A wide number of industries are utilizing different applications of speech technology today, helping businesses and consumers save time and even lives. Some examples include:

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios.

Technology: Virtual agents are increasingly becoming integrated within our daily lives, particularly on our mobile devices. We use voice commands to access them through our smartphones, such as through Google Assistant or Apple’s Siri, for tasks, such as voice search, or through our speakers, via Amazon’s Alexa or Microsoft’s Cortana, to play music. They’ll only continue to integrate into the everyday products that we use, fueling the “Internet of Things” movement.

Healthcare: Doctors and nurses leverage dictation applications to capture and log patient diagnoses and treatment notes.

Sales: Speech recognition technology has a couple of applications in sales. It can help a call center transcribe thousands of phone calls between customers and agents to identify common call patterns and issues. AI chatbots can also talk to people via a webpage, answering common queries and solving basic requests without needing to wait for a contact center agent to be available. In both instances, speech recognition systems help reduce time to resolution for consumer issues.

Security: As technology integrates into our daily lives, security protocols are an increasing priority. Voice-based authentication adds a viable level of security.


Speech on Computer (Short & Long Speech) For Students

Speech on computer, introduction.

A very good morning to one and all present here. Respected guests and my dear friends, today I will be giving a speech on the topic “COMPUTER”.


Voice technology for the rest of the world

Project aims to build a dataset with 1,000 words in 1,000 different languages to bring voice technology to hundreds of millions of speakers around the world.

Voice-enabled technologies like Siri have gone from a novelty to a routine way to interact with technology in the past decade. In the coming years, our devices will only get chattier as the market for voice-enabled apps, technologies and services continues to expand.

But the growth of voice-enabled technology is not universal. For much of the world, technology remains frustratingly silent.

“Speech is a natural way for people to interact with devices, but we haven’t realized the full potential of that yet because so much of the world is shut out from these technologies,” said Mark Mazumder, a Ph.D. student at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and the Graduate School of Arts and Sciences.

The challenge is data. Voice assistants like Apple’s Siri or Amazon’s Alexa need thousands to millions of unique examples to recognize individual keywords like “light” or “off”. Building those enormous datasets is incredibly expensive and time-consuming, prohibiting all but the biggest companies from developing voice recognition interfaces.

Even companies like Apple and Google only train their models on a handful of languages, shutting out hundreds of millions of people from interacting with their devices via voice. Want to build a voice-enabled app for the nearly 50 million Hausa speakers across West Africa? Forget it. Neither Siri, Alexa nor Google Home currently support a single African language.

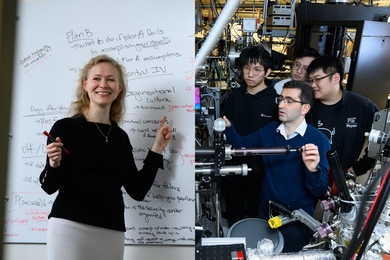

But Mazumder and a team of SEAS researchers, in collaboration with researchers from the University of Michigan, Intel, NVIDIA, Landing AI, Google, MLCommons and Coqui, are building a solution to bring voice technology to the rest of the world.

At the Neural Information Processing Systems conference last week, the team presented a diverse, multilingual speech dataset that spans languages spoken by over 5 billion people. Dubbed the Multilingual Spoken Words Corpus, the dataset has more than 340,000 keywords in 50 languages with upwards of 23.4 million audio examples so far.

“We have built a dataset automation pipeline that can automatically identify and extract keywords and synthesize them into a dataset,” said Vijay Janapa Reddi, Associate Professor of Electrical Engineering at SEAS and senior author of the study. “The Multilingual Spoken Words Corpus advances the research and development of voice-enabled applications for a broad global audience.”


“Speech technology can empower billions of people across the planet, but there’s a real need for large, open, and diverse datasets to catalyze innovation,” said David Kanter, MLCommons co-founder and executive director and co-author of the study. “The Multilingual Spoken Words Corpus offers a tremendous breadth of languages. I’m excited for these datasets to improve everyday experiences like voice-enabled consumer devices and speech recognition.”

To build the dataset, the team used recordings from Mozilla Common Voice, a massive global project that collects donated voice recordings in a wide variety of spoken languages, including languages with a smaller population of speakers. Through the Common Voice website, volunteer speakers are given a sentence to read aloud in their chosen language. Another group of volunteers listens to the recorded sentences and verifies their accuracy.

The researchers applied a machine learning algorithm that can recognize and pull keywords from recorded sentences in Common Voice.

For example, one sentence prompt from Common Voice reads: “He played college football at Texas and Rice.”

First, the algorithm uses a common machine learning technique called forced alignment — specifically a tool called the Montreal Forced Aligner — to match the spoken words with text. Then the algorithm filters and extracts words with three or more characters (or two characters in Chinese). From the above sentence, the algorithm would pull “played” “college” “football” “Texas” “and” and “Rice.” To add the word to the dataset, the algorithm needs to find at least five examples of the word, which ensures all words have multiple pronunciation examples.
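The filtering step described above can be sketched as follows. This is a simplified illustration rather than the team's pipeline: it assumes the forced aligner has already produced `(word, start, end)` tuples for each clip, and the function name, the lowercasing and the punctuation stripping are assumptions made for the example.

```python
from collections import defaultdict

def extract_keywords(aligned_clips, min_chars=3, min_examples=5):
    """Collect keyword examples from forced-alignment output.

    aligned_clips: list of clips, each a list of (word, start_sec, end_sec)
    tuples (a hypothetical format for the aligner's output).
    Returns {keyword: [(clip_index, start_sec, end_sec), ...]} for every word
    that is long enough and has at least `min_examples` occurrences.
    """
    examples = defaultdict(list)
    for clip_index, words in enumerate(aligned_clips):
        for word, start, end in words:
            token = word.strip(".,!?\"'").lower()  # assumed normalization
            if len(token) >= min_chars:
                examples[token].append((clip_index, start, end))
    return {w: ex for w, ex in examples.items() if len(ex) >= min_examples}
```

With the default thresholds, five aligned recordings of the example sentence would yield "played", "college", "football", "texas", "and" and "rice", while "he" and "at" are dropped for being too short.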

The algorithm also optimizes for gender balance and minimal speaker overlap between the samples used for training and evaluating keyword spotting models.
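One simple way to keep speakers from overlapping between training and evaluation data is to assign whole speakers, not individual clips, to each split. The sketch below is an assumption about how that could look, not the researchers' method: the `speaker` and `gender` keys, the 20% evaluation fraction and the alphabetical tie-breaking are all hypothetical.

```python
def split_by_speaker(samples, eval_fraction=0.2):
    """Split samples so that no speaker appears in both train and evaluation sets.

    samples: list of dicts with (hypothetical) 'speaker' and 'gender' keys.
    Evaluation speakers are drawn separately from each gender group so both
    splits stay roughly balanced; the choice is deterministic (sorted order).
    """
    speakers_by_gender = {}
    for sample in samples:
        speakers_by_gender.setdefault(sample["gender"], set()).add(sample["speaker"])

    eval_speakers = set()
    for speakers in speakers_by_gender.values():
        if len(speakers) < 2:
            continue  # keep a group's only speaker in the training set
        n_eval = max(1, round(len(speakers) * eval_fraction))
        eval_speakers.update(sorted(speakers)[:n_eval])

    train = [s for s in samples if s["speaker"] not in eval_speakers]
    evaluation = [s for s in samples if s["speaker"] in eval_speakers]
    return train, evaluation
```

Splitting on speakers means evaluation accuracy reflects how well a keyword spotting model handles voices it has never heard.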

“Our goal was to create a large corpus of very common words,” said Mazumder, who is the first author of the study. “So, if you want to train a model for smart lights in Tamil, for example, you would probably use our dataset to pull the keywords ‘light’, ‘on’, ‘off’ and ‘dim’ and be able to find enough examples to train the model.”

“We want to build the voice equivalent of Google search for text and images,” said Reddi. “A dataset search engine that can go and find what you want, when you want it on the fly, rather than rely on static datasets that are costly and tedious to create.”

When the researchers compared the accuracy of models trained on their dataset against models trained on a Google dataset that was manually constructed by carefully sourcing individual and specific words, the team found only a small accuracy gap between the two.

For most of the 50 languages, the Multilingual Spoken Words Corpus is the first available keyword dataset that is free for commercial use. For several languages, such as Mongolian, Sakha, and Hakha Chin, it is the first keyword spotting dataset in the language.

“This is just the beginning,” said Reddi. “Our goal is to build a dataset with 1,000 words in 1,000 different languages.”

“Whether it’s on Common Voice or YouTube, Wikicommons, archive.org, or any other creative commons site, there is so much more data out there that we can scrape to build this dataset and expand the diversity of the languages for voice-based interfaces,” said Mazumder. “Voice interfaces can make technology more accessible for users with visual or physical impairments, or for lower literacy users. We hope free datasets like ours will help assistive technology developers to meet these needs.”

The corpus is available on MLCommons, a not-for-profit, open engineering consortium dedicated to improving machine learning for everyone. Reddi is Vice President and a board member of MLCommons.

The paper was co-authored by Sharad Chitlangia, Colby Banbury, Yiping Kang, Juan Manuel Ciro, Keith Achorn, Daniel Galvez, Mark Sabini, Peter Mattson, Greg Diamos, Pete Warden and Josh Meyer.

The research was sponsored in part by the Semiconductor Research Corporation (SRC) and Google.


- ADVERTISEMENT FEATURE Advertiser retains sole responsibility for the content of this article

A blueprint for AI-powered smart speech technology


Speech recognition technology is becoming increasingly crucial to our daily lives, and iFLYTEK, based in Hefei, China, has been working on new ways of using this smart technology since the company was founded in 1999.

But, as speech recognition begins to cope with new needs — such as conversion from a complex mess of overlapping speech to text in noisy environments, and human-computer interaction in the era of the Internet of Things — there are technical hurdles which need to be rapidly overcome.

In recent years, the award-winning company has transformed the application of speech recognition from simple scenarios such as voice input and smart call centres, to more complex uses, such as detecting and transcribing speech when there are multiple speakers or multiple languages.

iFLYTEK’s Cong Liu introduces children to some of the firm’s technology. Credit: iFLYTEK

Complex speech scenarios

Speech recognition needs to be better at coping in complex scenarios, such as noisy environments, or when multiple speakers speak over one another.

“What we are trying to tackle is the long-standing ‘cocktail party’ problem. It’s very difficult to achieve with existing speech recognition technology,” says Cong Liu, executive director of the iFLYTEK Research Institute.

To address the problem of complex speech scenarios, Liu and his team developed a new speech-recognition framework which encompasses two algorithms, Spatial-and-Speaker-Aware Iterative Mask Estimation (SSA-IME) and Spatial-and-Speaker-Aware Acoustic Model (SSA-AM). The SSA-IME combines traditional signal-processing and deep-learning based methods to identify multiple speakers. The SSA-AM then integrates the data extracted by the SSA-IME to recognise each speaker’s voice. Furthermore, the estimated text can provide useful information for the SSA-IME to extract more robust spatial- and speaker-aware features. The framework iteratively reduces background noise and interference to speakers’ voices, resulting in more accurate speech recognition.

Liu’s team won the 6th CHiME speech separation and recognition challenge in 2020 (CHiME-6), with a result of 70% accuracy, but their goal is to improve that rate to 95%.

“The day we accomplish that, we can call the mission completed,” he says. “But it will take another three to five years.”

Multilingual speech recognition

The accuracy of a speech recognition system depends on a large amount of labelled data. Such data sources are rich for widely spoken languages such as Chinese and English, but the problem is harder for other languages. However, with growing globalization, it is urgent to build accurate speech recognition systems for so-called low-resource languages.

In order to achieve this, Liu and his team have developed a framework called Unified Spatial Representation Semi-supervised Automatic Speech Recognition (USRS-ASR), which allows unlabelled data from less commonly spoken languages to be widely used.

Liu’s team has used this framework to create an automatic speech recognition (ASR) system baseline with powerful acoustic modelling capabilities, and in 2021, it won the OpenASR Challenge, a major international low-resource ASR contest organized by the U.S. National Institute of Standards and Technology, coming first in 22 categories of 15 languages.

Moving into new areas, Liu and his team have now built a new paradigm of human-computer interaction based on multi-modal information. For example, through a deep understanding of a driver’s intent — with the aid of visual perception technology and multi-modal interaction — AI can focus on the target person’s voice in noisy settings.

iFLYTEK has adopted its cutting-edge speech technologies in designing many of its products. These include: the smart voice recorder, with an eight-channel microphone array; the smart notebook ‘AI Note’, specially designed for conference audio recording and audio-text transcription; and the input tools which recognise speech in English, Mandarin Chinese and 25 Chinese dialects without switching between them.

www.iflytek.com

This advertisement appears in Nature Index 2022 AI and robotics, an editorially independent supplement. Advertisers have no influence over the content.


How Did Stephen Hawking’s Communication System Work?


Stephen Hawking used a speech-generating device (SGD) or a voice output communication aid to talk through ‘the computer’. This is a special device that either supplements or replaces speech/writing for people who have problems communicating in a traditional way.

Stephen Hawking is a name that is impossible to ignore, especially if you are a human being on Earth. However, even aliens might know a thing or two about him.

To give you a quick background, he was a British cosmologist and physicist, best known for his remarkable scientific contributions to the theoretical prediction of radiation emission from black holes (Hawking radiation), Penrose-Hawking theorems, the general theory of relativity, and quantum mechanics. He also authored A Brief History of Time, a popular bestseller discussing the Big Bang and black holes.

In this article, I will explain how he talked using a special device.


Apart from these accomplishments, there’s one more and rather unfortunate thing that he was commonly known for: he had amyotrophic lateral sclerosis (ALS). Also referred to as motor neuron disease in some countries, it involves the death of motor neurons in a patient’s brain and spinal cord. It results in muscle twitching and gradually deteriorating muscles, leading to difficulty swallowing, speaking, and eventually breathing.

Stephen Hawking used various gadgets to give lectures and communicate with people, as he could not speak like most people due to his medical condition. You might have seen him in pictures or videos, sitting in a wheelchair with several machines attached.

Let’s take a closer look at these machines and how they helped Hawking share his knowledge and ideas with the world through his words.


Stephen Hawking used a speech-generating device (SGD) or a voice output communication aid to communicate. This device is designed to supplement or replace speech and writing for people who have difficulty communicating in a traditional way.

Since 1997, Hawking had been using a computer-based communication system made by Intel Corporation. The entire computer system was replaced every two years to accommodate his gradual loss of muscle control over time. Hawking also wrote a short post titled ‘The Computer,’ briefly discussing the tools that helped him communicate.

Intel has released Hawking’s speech system, the Assistive Context-Aware Toolkit (ACAT), as open-source code so that the general public can customize and improve it, making it suitable for a wider range of communication disabilities.

Feeding The Information Into The Machine

Stephen Hawking’s communication system was made up of three major components. The most challenging in his case was input: his lack of muscle control made it impossible to type out words or click buttons as he had done earlier in the course of his condition. As a result, he needed a more sophisticated input method to feed information into the computer.

To solve this problem, an infrared switch was mounted on his spectacles, which could detect the slightest twitches or movements in his cheek. In the past, when his condition was better, he input information by pressing a clicker with his thumb.

However, as he eventually lost control of the nerves that controlled his thumb muscles, he had to use other input methods, hence the infrared switch that traced movements in his cheek.

The next part involved forming words using the input from the infrared switch. This interface was a program called EZ Keys, developed by Words Plus Inc. It provided a software keyboard displayed on a tablet computer, which was mounted on one arm of his wheelchair and powered by the wheelchair’s batteries.

The software moved a cursor across the keyboard, scanning by rows or by columns. When it reached the desired letter, Hawking could stop it with a twitch of his cheek. Individual letters were selected in this way to form words and then sentences. EZ Keys also let him move the pointer on the Windows computer that he used.
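To make the scanning idea concrete, here is a small simulation of row-then-column scanning driven by a single switch. It is only a sketch of the general technique, not the EZ Keys implementation: the keyboard layout, the function names and the way switch activations are represented are all assumptions.

```python
# Hypothetical on-screen layout; the real keyboard layout is not given in the article.
KEYBOARD = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ .,?",
]

def select_by_scanning(switch_ticks, grid=KEYBOARD):
    """Simulate selecting one character with a single switch.

    switch_ticks: iterable of booleans, one per scan step. True means the
    switch (for example, a cheek twitch) fired while the current row or
    column was highlighted. Rows are scanned first, then columns.
    Returns the chosen character, or None if the ticks run out first.
    """
    ticks = iter(switch_ticks)
    row = None
    for step, fired in enumerate(ticks):
        if fired:
            row = step % len(grid)  # the highlight wraps around the rows
            break
    if row is None:
        return None
    for step, fired in enumerate(ticks):
        if fired:
            return grid[row][step % len(grid[row])]
    return None

def ticks_for(char, grid=KEYBOARD):
    """Build the tick sequence an ideal user would produce to pick `char`."""
    for r, row in enumerate(grid):
        c = row.find(char)
        if c != -1:
            return [False] * r + [True] + [False] * c + [True]
    raise ValueError(f"{char!r} is not on the keyboard")
```

For example, `select_by_scanning(ticks_for("H"))` waits one step for the second row, fires, waits one step for the second column, and fires again.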

To make things easier still, the software also included an auto-complete feature – very similar to what we have in smartphones and tablets – that predicts the word without requiring Hawking to complete the spelling of the entire word.
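A word-completion feature of this kind can be approximated with a prefix lookup over a frequency-ranked vocabulary. The sketch below is illustrative only; the vocabulary, its counts and the function name are made up for the example and do not reflect how the actual software ranked its suggestions.

```python
def predict_words(prefix, vocabulary, limit=3):
    """Suggest up to `limit` words that start with `prefix`.

    vocabulary: dict mapping word -> usage count (hypothetical data).
    Suggestions are ordered by count, highest first, then alphabetically.
    """
    prefix = prefix.lower()
    matches = [(word, count) for word, count in vocabulary.items()
               if word.startswith(prefix)]
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [word for word, _ in matches[:limit]]

# Example usage with a toy vocabulary:
vocabulary = {"black": 50, "blackboard": 5, "hole": 40,
              "holes": 30, "history": 20, "time": 60}
suggestions = predict_words("ho", vocabulary)
```

Each accepted suggestion saves the user from scanning through the remaining letters of the word, which matters a great deal when every letter costs several scan steps.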

Output: Talking Out Loud

The final step, probably the easiest, involved speaking the entire sentence out loud. To accomplish this, Hawking used a speech synthesizer developed by Speech+ that would speak the sentence once it was approved or completed. However, it had a recognizable accent that was described as American, Scottish, or Scandinavian.

The machine allowed him to speak and perform various other tasks, such as checking his email, browsing the internet, making notes, and using Skype to chat with his friends. Intel had a dedicated team of engineers working on improving his communication system and expanding the range of tasks he could perform. Hawking could give lectures and interact with people with ease using this communication system.


Last Updated By: Ashish Tiwari


Ashish is a Science graduate (Bachelor of Science) from Punjabi University (India). He spearheads the content and editorial wing of ScienceABC and manages its official YouTube channel. He’s a Harry Potter fan and tries, in vain, to use spells and charms (Accio! [insert object name]) in real life to get things done. He totally gets why JRR Tolkien would create, from scratch, a language spoken by elves, and tries to bring the same passion in everything he does. A big admirer of Richard Feynman and Nikola Tesla, he obsesses over how thoroughly science dictates every aspect of life… in this universe, at least.



AI drives new speech technology trends and use cases


The next generation of AI-based speech technology tools will have much broader, organization-wide impacts. No longer can these tools be viewed in a vacuum.

Speech technology -- a broad field that has existed for decades -- is evolving quickly, thanks largely to the advent of AI.

No longer is the field primarily about speech recognition and the accuracy of speech-to-text transcription. Underpinned by AI, speech-to-text today has been automated to the point where real-time transcription is good enough for most business use cases. Speech-to-text will never be 100% accurate, but it's on par with human-based transcription, and it can be done much faster and at a fraction of the cost.
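Transcription accuracy is commonly reported as word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the transcript into the reference, divided by the number of reference words. The article does not say which metric it has in mind, so treat the following as one conventional way to measure it; the function name and the lowercase, whitespace-based tokenization are assumptions.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Levenshtein distance over words, keeping only the previous row.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)
```

A WER of 0.0 means the transcript matches the reference word for word; dropping one word and mishearing another in a four-word sentence gives 0.5.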

For some, that might be the only AI-based speech technology use case of interest, but within the workplace communication and collaboration field, it's really just the beginning. For the past six years, I have been presenting an annual update on this topic at Enterprise Connect. Let's explore three main speech technology trends discussed at this year's conference that IT leaders should consider.

1. AI builds on speech technology

AI has now gone well beyond basic transcription. Many AI-driven applications have become standard features of all the leading unified communications as a service (UCaaS) offerings, among them real-time transcription, real-time translation, meeting summaries and post-meeting action items. Note that some use cases apply solely to speech, but others are voice-based activities that tie into other applications, such as calendaring.

More recent applications rely on generative AI, which can automatically create cohesive email responses, memos and blog posts from either voice or text prompts (most workers will likely prefer using their voices).

The current state of play builds on conventional forms of speech technology. But with AI, the use cases are broader and are integrated across workflows, as opposed to just being used for speech recognition.

IT leaders should expect these capabilities to be table stakes as they evaluate potential UCaaS offerings or as they consider how to stay current within their existing deployments. All of these AI-based applications are still works in progress and should keep improving -- both in terms of speech accuracy and how well they integrate with other workplace and productivity tools.

2. Emerging applications

Even as IT leaders assess these new capabilities, they mustn't lose sight of the bigger picture. These applications mainly apply to the way people work today and they tend to be viewed as point products, which do a specific set of tasks very well. However, AI moves on a faster track than anything before. While many of these tasks are largely mastered now, the next wave of innovation based on AI speech technology operates on a higher, organization-wide scale.

A case in point is conversational AI, which enables chatbots to be more conversational and human-like, making them much more palatable options for self-service in the contact center. Today's chatbots are far from perfect, but they are gaining much wider adoption now, including in the enterprise where workers now use them as digital assistants.

Large language models (LLMs) are the next big phase for AI. The main idea here is that enterprises are seeing value in capturing all forms of digital communication to help make AI applications more effective. Although text and video have long been digitized, many forms of speech have not. With the majority of everyday communications being voice-based, there is a growing interest in capturing this information, otherwise known as dark data, as it represents a valuable set of data inputs for AI.

LLM development and management is evolving quickly, not just due to the nature of AI, but also because C-suite executives now see the potential of LLMs as a competitive differentiator. (There are, in fact, many types of language models for AI, so the reference here to LLMs is an oversimplification. Most IT leaders are not data scientists, so this is an area where outside expertise would be of value.) With speech being so central to this trend, IT leaders need to take a more strategic view of speech technology.


3. Strategic implications for IT

Clearly, IT needs to move past the legacy model of speech technology, especially as AI drives much of the innovation around voice and other communications. As such, speech technology trends can no longer be viewed in a vacuum, where the metric of success is transcription accuracy.

More important is recognizing how AI now ties speech applications to everything else, integrating with workflows, project management, personal productivity and team-based outcomes. Everyday conversations, wherever they take place, still have inherent value, but with AI, their worth as digital streams that blend with other digital streams is poised to become even greater.

This is what makes speech technology in the enterprise so strategic. These applications will continue playing a key role in helping workers communicate and collaborate more effectively -- mainly with UCaaS -- but the bigger picture is pinpointing where AI's business value really lies.

Data is the oxygen that gives AI life, and the more data your model has, the greater the benefit. Most organizations are only capturing a small portion of their dark data, and this is where speech technology really comes into play when considering your plans for AI.

Jon Arnold is principal of J Arnold & Associates, an independent analyst providing thought leadership and go-to-market counsel with a focus on the business-level effect of communications technology on digital transformation.



MIT News | Massachusetts Institute of Technology


Computer system transcribes words users “speak silently”


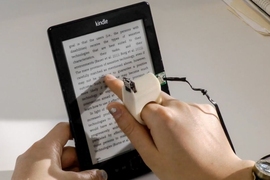

MIT researchers have developed a computer interface that can transcribe words that the user verbalizes internally but does not actually speak aloud.

The system consists of a wearable device and an associated computing system. Electrodes in the device pick up neuromuscular signals in the jaw and face that are triggered by internal verbalizations — saying words “in your head” — but are undetectable to the human eye. The signals are fed to a machine-learning system that has been trained to correlate particular signals with particular words.

The device also includes a pair of bone-conduction headphones, which transmit vibrations through the bones of the face to the inner ear. Because they don’t obstruct the ear canal, the headphones enable the system to convey information to the user without interrupting conversation or otherwise interfering with the user’s auditory experience.

The device is thus part of a complete silent-computing system that lets the user undetectably pose and receive answers to difficult computational problems. In one of the researchers’ experiments, for instance, subjects used the system to silently report opponents’ moves in a chess game and just as silently receive computer-recommended responses.

“The motivation for this was to build an IA device — an intelligence-augmentation device,” says Arnav Kapur, a graduate student at the MIT Media Lab, who led the development of the new system. “Our idea was: Could we have a computing platform that’s more internal, that melds human and machine in some ways and that feels like an internal extension of our own cognition?”

“We basically can’t live without our cellphones, our digital devices,” says Pattie Maes, a professor of media arts and sciences and Kapur’s thesis advisor. “But at the moment, the use of those devices is very disruptive. If I want to look something up that’s relevant to a conversation I’m having, I have to find my phone and type in the passcode and open an app and type in some search keyword, and the whole thing requires that I completely shift attention from my environment and the people that I’m with to the phone itself. So, my students and I have for a very long time been experimenting with new form factors and new types of experience that enable people to still benefit from all the wonderful knowledge and services that these devices give us, but do it in a way that lets them remain in the present.”

The researchers describe their device in a paper they presented at the Association for Computing Machinery’s ACM Intelligent User Interface conference. Kapur is first author on the paper, Maes is the senior author, and they’re joined by Shreyas Kapur, an undergraduate major in electrical engineering and computer science.

Subtle signals

The idea that internal verbalizations have physical correlates has been around since the 19th century, and it was seriously investigated in the 1950s. One of the goals of the speed-reading movement of the 1960s was to eliminate internal verbalization, or “subvocalization,” as it’s known.

But subvocalization as a computer interface is largely unexplored. The researchers’ first step was to determine which locations on the face are the sources of the most reliable neuromuscular signals. So they conducted experiments in which the same subjects were asked to subvocalize the same series of words four times, with an array of 16 electrodes at different facial locations each time.

The researchers wrote code to analyze the resulting data and found that signals from seven particular electrode locations were consistently able to distinguish subvocalized words. In the conference paper, the researchers report a prototype of a wearable silent-speech interface, which wraps around the back of the neck like a telephone headset and has tentacle-like curved appendages that touch the face at seven locations on either side of the mouth and along the jaws.

But in current experiments, the researchers are getting comparable results using only four electrodes along one jaw, which should lead to a less obtrusive wearable device.

Once they had selected the electrode locations, the researchers began collecting data on a few computational tasks with limited vocabularies — about 20 words each. One was arithmetic, in which the user would subvocalize large addition or multiplication problems; another was the chess application, in which the user would report moves using the standard chess numbering system.

Then, for each application, they used a neural network to find correlations between particular neuromuscular signals and particular words. Like most neural networks, the one the researchers used is arranged into layers of simple processing nodes, each of which is connected to several nodes in the layers above and below. Data are fed into the bottom layer, whose nodes process them and pass them to the next layer, whose nodes process them and pass them to the next layer, and so on. The output of the final layer is the result of some classification task.
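The layer-by-layer flow described here can be illustrated with a minimal sketch. It is not the researchers' network: the layer sizes, the hand-set weights, the three-word vocabulary, and the function names (`dense`, `forward`, `classify`) are all assumptions chosen to keep the example small.

```python
import math

def relu(values):
    # Simple nonlinearity applied by the nodes of each hidden layer.
    return [max(0.0, v) for v in values]

def softmax(values):
    # Turn the final layer's scores into probabilities that sum to 1.
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    # Each row of `weights` belongs to one node in this layer.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(features, layers):
    # Feed data into the bottom layer and pass each layer's output upward.
    activations = features
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return softmax(activations)

def classify(features, layers, vocabulary):
    # The output of the final layer is the classification: the likeliest word.
    probabilities = forward(features, layers)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return vocabulary[best], probabilities[best]

# Hypothetical toy setup: four signal features, one hidden layer, three words.
toy_layers = [
    ([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0]),
]
toy_vocabulary = ["one", "two", "three"]
word, confidence = classify([0.2, 0.9, 0.1, 0.0], toy_layers, toy_vocabulary)
print(word)  # prints "two"
```

In a real system the weights would be learned from recorded signals rather than set by hand.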

The basic configuration of the researchers’ system includes a neural network trained to identify subvocalized words from neuromuscular signals, but it can be customized to a particular user through a process that retrains just the last two layers.
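One common way to retrain only the final layers of a network is to freeze the earlier layers and apply weight updates to the rest. The sketch below illustrates that idea under stated assumptions: the `Layer` class, `freeze_all_but_last`, `sgd_step`, and the placeholder gradients are hypothetical and are not taken from the researchers' paper or code.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    weights: list          # flat list of floats, kept simple for illustration
    trainable: bool = True

def freeze_all_but_last(layers, n_trainable=2):
    # Mark only the last `n_trainable` layers as open to retraining.
    cutoff = len(layers) - n_trainable
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff

def sgd_step(layers, gradients, learning_rate=0.1):
    # Apply one gradient-descent update, skipping frozen layers.
    for layer, gradient in zip(layers, gradients):
        if not layer.trainable:
            continue
        layer.weights = [w - learning_rate * g
                         for w, g in zip(layer.weights, gradient)]

# Hypothetical four-layer network with placeholder weights and gradients.
network = [Layer(f"layer{i}", [1.0, 1.0]) for i in range(4)]
placeholder_gradients = [[1.0, 1.0] for _ in network]

freeze_all_but_last(network, n_trainable=2)
sgd_step(network, placeholder_gradients)

for layer in network:
    print(layer.name, layer.trainable, layer.weights)
```

Because the early layers keep their general-purpose weights, customizing the model to a new user needs far less data and time than training from scratch, which is consistent with the short per-user calibration described in the article.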

Practical matters

Using the prototype wearable interface, the researchers conducted a usability study in which 10 subjects spent about 15 minutes each customizing the arithmetic application to their own neurophysiology, then spent another 90 minutes using it to execute computations. In that study, the system had an average transcription accuracy of about 92 percent.
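The article does not spell out how transcription accuracy was measured. A minimal sketch, assuming it is simply the fraction of subvocalized words the system labels correctly, could look like this (the function name and the sample word lists are hypothetical, not study data):

```python
def transcription_accuracy(predicted, intended):
    # Fraction of positions where the predicted word matches the intended one.
    if len(predicted) != len(intended):
        raise ValueError("predicted and intended must have the same length")
    if not intended:
        raise ValueError("at least one word is required")
    correct = sum(p == t for p, t in zip(predicted, intended))
    return correct / len(intended)

# Made-up example: 25 intended words, 2 of them transcribed incorrectly.
intended_words = ["seven", "plus", "three"] * 8 + ["equals"]
predicted_words = list(intended_words)
predicted_words[0] = "eleven"
predicted_words[5] = "two"

print(f"{transcription_accuracy(predicted_words, intended_words):.0%}")  # prints "92%"
```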

But, Kapur says, the system’s performance should improve with more training data, which could be collected during its ordinary use. Although he hasn’t crunched the numbers, he estimates that the better-trained system he uses for demonstrations has an accuracy rate higher than that reported in the usability study.

In ongoing work, the researchers are collecting a wealth of data on more elaborate conversations, in the hope of building applications with much more expansive vocabularies. “We’re in the middle of collecting data, and the results look nice,” Kapur says. “I think we’ll achieve full conversation some day.”

“I think that they’re a little underselling what I think is a real potential for the work,” says Thad Starner, a professor in Georgia Tech’s College of Computing. “Like, say, controlling the airplanes on the tarmac at Hartsfield Airport here in Atlanta. You’ve got jet noise all around you, you’re wearing these big ear-protection things. Wouldn’t it be great to communicate with voice in an environment where you normally wouldn’t be able to? You can imagine all these situations where you have a high-noise environment, like the flight deck of an aircraft carrier, or even places with a lot of machinery, like a power plant or a printing press. This is a system that would make sense, especially because oftentimes in these types of situations people are already wearing protective gear. For instance, if you’re a fighter pilot, or if you’re a firefighter, you’re already wearing these masks.”

“The other thing where this is extremely useful is special ops,” Starner adds. “There’s a lot of places where it’s not a noisy environment but a silent environment. A lot of time, special-ops folks have hand gestures, but you can’t always see those. Wouldn’t it be great to have silent speech for communication between these folks? The last one is people who have disabilities where they can’t vocalize normally. For example, Roger Ebert did not have the ability to speak anymore because he lost his jaw to cancer. Could he do this sort of silent speech and then have a synthesizer that would speak the words?”

Press mentions

Smithsonian Magazine

Smithsonian reporter Emily Matchar spotlights AlterEgo, a device developed by MIT researchers to help people with speech pathologies communicate. “A lot of people with all sorts of speech pathologies are deprived of the ability to communicate with other people,” says graduate student Arnav Kapur. “This could restore the ability to speak for people who can’t.”

WCVB-TV’s Mike Wankum visits the Media Lab to learn more about a new wearable device that allows users to communicate with a computer without speaking by measuring tiny electrical impulses sent by the brain to the jaw and face. Graduate student Arnav Kapur explains that the device is aimed at exploring, “how do we marry AI and human intelligence in a way that’s symbiotic.”

Fast Company

Fast Company reporter Eillie Anzilotti highlights how MIT researchers have developed an AI-enabled headset device that can translate silent thoughts into speech. Anzilotti explains that one of the factors that is motivating graduate student Arnav Kapur to develop the device is “to return control and ease of verbal communication to people who struggle with it.”

Quartz reporter Anne Quito spotlights how graduate student Arnav Kapur has developed a wearable device that allows users to access the internet without speech or text and could help people who have lost the ability to speak vocalize their thoughts. Kapur explains that the device is aimed at augmenting ability.

Axios reporter Ina Fried spotlights how graduate student Arnav Kapur has developed a system that can detect speech signals. “The technology could allow those who have lost the ability to speak to regain a voice while also opening up possibilities of new interfaces for general purpose computing,” Fried explains.

After several years of experimentation, graduate student Arnav Kapur developed AlterEgo, a device to interpret subvocalization that can be used to control digital applications. Describing the implications as “exciting,” Katharine Schwab at Co.Design writes, “The technology would enable a new way of thinking about how we interact with computers, one that doesn’t require a screen but that still preserves the privacy of our thoughts.”

The Guardian

AlterEgo, a device developed by Media Lab graduate student Arnav Kapur, “can transcribe words that wearers verbalise internally but do not say out loud, using electrodes attached to the skin,” writes Samuel Gibbs of The Guardian. “Kapur and team are currently working on collecting data to improve recognition and widen the number of words AlterEgo can detect.”

Popular Science

Researchers at the Media Lab have developed a device, known as “AlterEgo,” which allows an individual to discreetly query the internet and control devices by using a headset “where a handful of electrodes pick up the miniscule electrical signals generated by the subtle internal muscle motions that occur when you silently talk to yourself,” writes Rob Verger for Popular Science.

New Scientist

A new headset developed by graduate student Arnav Kapur reads the small muscle movements in the face that occur when the wearer thinks about speaking, and then uses “artificial intelligence algorithms to decipher their meaning,” writes Chelsea Whyte for New Scientist. Known as AlterEgo, the device “is directly linked to a program that can query Google and then speak the answers.”

Related Links

- Paper: “AlterEgo: A personalized wearable silent speech interface”

Computer technology

European Commission

Exponential improvement

Speech Details

computer technology, to fold in two, exponential growth, electronical engineers, British Railways system, tracks, equilibrium, computer chips, silicon chips, to evacuate heat, laser beam, optical fibre

Speech Technology: Theory and Applications

- © 2010

Editors:

- Fang Chen, Department of Computing Science & Engineering, Chalmers University of Technology, Göteborg, Sweden

- Kristiina Jokinen, Department of Speech Sciences, University of Helsinki, Helsinki, Finland

- Reviews new automatic speech recognition technology and spoken dialogue systems;

- Includes applications on in-vehicle information systems, military applications, and other industrial speech applications;

- Focuses on human factors in automatic speech-based systems

- Includes supplementary material: sn.pub/extras

About this book

- Affective Computing

- Information

- User Experience

- communication

- in-vehicle information system

- speech processing

- speech recognition

- speech synthesis

- spoken dialogue system

Table of contents (16 chapters)

History and Development of Speech Recognition

- Sadaoki Furui

Challenges in Speech Synthesis

- David Suendermann, Harald Höge, Alan Black

Spoken Language Dialogue Models

- Kristiina Jokinen

The Industry of Spoken-Dialog Systems and the Third Generation of Interactive Applications

- Roberto Pieraccini

Deceptive Speech: Clues from Spoken Language

- Julia Hirschberg

Cognitive Approaches to Spoken Language Technology

- Roger K. Moore

Expressive Speech Processing and Prosody Engineering: An Illustrated Essay on the Fragmented Nature of Real Interactive Speech

- Nick Campbell

Interacting with Embodied Conversational Agents

- Elisabeth André, Catherine Pelachaud