How to Turn Audio to Text using OpenAI Whisper

Do you know what OpenAI Whisper is? It’s the latest AI model from OpenAI that helps you to automatically convert speech to text.

Transforming audio into text is now simpler and more accurate, thanks to OpenAI’s Whisper.

This article will guide you through using Whisper to convert spoken words into written form, providing a straightforward approach for anyone looking to leverage AI for efficient transcription.

Introduction to OpenAI Whisper

OpenAI Whisper is an automatic speech recognition (ASR) model designed to understand spoken language and convert it into written text.

Its capabilities have opened up a wide array of use cases across various industries. Whether you’re a developer, a content creator, or just someone fascinated by AI, Whisper has something for you.

Let's go over some of its key features:

1. Transcription services: Whisper can transcribe audio and video content in real time or from recordings, making it useful for generating accurate meeting notes, interviews, lectures, and any spoken content that needs to be documented in text form.

2. Subtitling and closed captioning: It can automatically generate subtitles and closed captions for videos, improving accessibility for the deaf and hard-of-hearing community, as well as for viewers who prefer to watch videos with text.

3. Language learning and translation: Whisper's ability to transcribe in multiple languages supports language learning applications, where it can help in pronunciation practice and listening comprehension. Combined with translation models, it can also facilitate real-time cross-lingual communication.

4. Accessibility tools: Beyond subtitling, Whisper can be integrated into assistive technologies to help individuals with speech impairments or those who rely on text-based communication. It can convert spoken commands or queries into text for further processing, enhancing the usability of devices and software for everyone.

5. Content searchability: By transcribing audio and video content into text, Whisper makes it possible to search through vast amounts of multimedia data. This capability is crucial for media companies, educational institutions, and legal professionals who need to find specific information efficiently.

6. Voice-controlled applications: Whisper can serve as the backbone for developing voice-controlled applications and devices. It enables users to interact with technology through natural speech. This includes everything from smart home devices to complex industrial machinery.

7. Customer support automation: In customer service, Whisper can transcribe calls in real time. It allows for immediate analysis and response from automated systems. This can improve response times, accuracy in handling queries, and overall customer satisfaction.

8. Podcasting and journalism: For podcasters and journalists, Whisper offers a fast way to transcribe interviews and audio content for articles, blogs, and social media posts, streamlining content creation and making it accessible to a wider audience.

OpenAI's Whisper represents a significant advancement in speech recognition technology.

With its use cases spanning across enhancing accessibility, streamlining workflows, and fostering innovative applications in technology, it's a powerful tool for building modern applications.

How to Work with Whisper

Now let’s look at a simple code example to convert an audio file into text using OpenAI’s Whisper. I would recommend using a Google Colab notebook.

Before we dive into the code, you need two things:

- OpenAI API Key

- Sample audio file

First, install the OpenAI library by running pip install openai (prefix the command with ! only if you are installing it in a notebook cell).

Now let’s write the code to transcribe a sample speech file to text:
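A minimal sketch of such a script, assuming version 1.x of the openai Python package, an API key stored in the OPENAI_API_KEY environment variable, and the hosted model name whisper-1. The helper name transcribe_with_api and the file name in the usage note are hypothetical.

```python
from pathlib import Path


def transcribe_with_api(audio_path, client=None, model="whisper-1"):
    """Send one audio file to the hosted Whisper endpoint and return its text."""
    if client is None:
        # Imported lazily so the helper can also be exercised with a stand-in client.
        from openai import OpenAI  # assumes `pip install openai` (1.x)

        client = OpenAI()  # reads the OPENAI_API_KEY environment variable

    path = Path(audio_path)
    if not path.is_file():
        raise FileNotFoundError(f"No audio file at {path}")

    # The endpoint expects an open binary file handle, not a file name.
    with path.open("rb") as audio_file:
        response = client.audio.transcriptions.create(model=model, file=audio_file)
    return response.text
```

Calling print(transcribe_with_api("sample.mp3")) would then print the transcript of a local file named sample.mp3, if one exists.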

This script showcases a straightforward way to use OpenAI Whisper for transcribing audio files. By running this script with Python, you’ll see the transcription of your specified audio file printed to the console.

Feel free to experiment with different audio files and explore additional options provided by the Whisper Library to customize the transcription process to your needs.

Tips for Better Transcriptions

Whisper is powerful, but there are ways to get even better results from it. Here are some tips:

- Clear audio: The clearer your audio file, the better the transcription. Try to use files with minimal background noise.

- Language selection: Whisper supports multiple languages. If your audio isn’t in English, make sure to specify the language for better accuracy.

- Customize output: Whisper offers options to customize the output. You can ask it to include timestamps, confidence scores, and more. Explore the documentation to see what’s possible.
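As a rough illustration of the language and output tips, the sketch below builds keyword arguments for the hosted transcription endpoint. It assumes the openai 1.x client and its language, response_format, and timestamp_granularities request parameters; the helper name build_transcription_options is hypothetical.

```python
def build_transcription_options(language=None, timestamps=False):
    """Return keyword arguments for client.audio.transcriptions.create()."""
    options = {"model": "whisper-1"}
    if language:
        # ISO-639-1 code of the spoken language, for example "es" or "de".
        options["language"] = language
    if timestamps:
        # Segment timestamps are only returned with the verbose JSON format.
        options["response_format"] = "verbose_json"
        options["timestamp_granularities"] = ["segment"]
    return options
```

The result can be unpacked into the request, for example client.audio.transcriptions.create(file=audio_file, **build_transcription_options("es", timestamps=True)).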

Advanced Features

Whisper isn’t just for simple transcriptions. It has features that cater to more advanced needs:

- Real-time transcription: You can set up Whisper to transcribe the audio in real time. This is great for live events or streaming.

- Multi-language support: Whisper can handle multiple languages in the same audio file. It’s perfect for multilingual meetings or interviews.

- Fine-tuning: If you have specific needs, you can fine-tune Whisper’s models to suit your audio better. This requires more technical skill but can significantly improve results.

Working with OpenAI Whisper opens up a world of possibilities. It’s not just about transcribing audio – it’s about making information more accessible and processes more efficient.

Whether you’re transcribing interviews for a research project, making your podcast more accessible with transcripts, or exploring new ways to interact with technology, Whisper has you covered.

Hope you enjoyed this article. Visit turingtalks.ai for daily byte-sized AI tutorials.

Cybersecurity & Machine Learning Engineer. Loves building useful software and teaching people how to do it. More at manishmshiva.com


What is the Whisper model?


The Whisper model is a speech to text model from OpenAI that you can use to transcribe audio files. The model is trained on a large dataset of English audio and text. The model is optimized for transcribing audio files that contain speech in English. The model can also be used to transcribe audio files that contain speech in other languages. The output of the model is English text.

Whisper models are available via the Azure OpenAI Service or via Azure AI Speech. The features differ for those offerings. In Azure AI Speech, Whisper is just one of several speech to text models that you can use.

You might ask:

Is the Whisper Model a good choice for my scenario, or is an Azure AI Speech model better? What are the API comparisons between the two types of models?

If I want to use the Whisper Model, should I use it via the Azure OpenAI Service or via Azure AI Speech? What are the scenarios that guide me to use one or the other?

Whisper model or Azure AI Speech models

Either the Whisper model or the Azure AI Speech models are appropriate depending on your scenarios. If you decide to use Azure AI Speech, you can choose from several models, including the Whisper model. The following table compares options with recommendations about where to start.

| Scenario | Whisper model | Azure AI Speech models |
|---|---|---|
| Real-time transcriptions, captions, and subtitles for audio and video. | Not available | Recommended |
| Transcriptions, captions, and subtitles for prerecorded audio and video. | The Whisper model via Azure OpenAI is recommended for fast processing of individual audio files. The Whisper model via Azure AI Speech is recommended for batch processing of large files. For more information, see "Whisper model via Azure AI Speech or via Azure OpenAI Service?" | Recommended for batch processing of large files, diarization, and word level timestamps. |
| Transcript of phone call recordings and analytics such as call summary, sentiment, key topics, and custom insights. | Available | Recommended |
| Real-time transcription and analytics to assist call center agents with customer questions. | Not available | Recommended |
| Transcript of meeting recordings and analytics such as meeting summary, meeting chapters, and action item extraction. | Available | Recommended |
| Real-time text entry and document generation through voice dictation. | Not available | Recommended |
| Contact center voice agent: Call routing and interactive voice response for call centers. | Available | Recommended |
| Voice assistant: Application specific voice assistant for a set-top box, mobile app, in-car, and other scenarios. | Available | Recommended |
| Pronunciation assessment: Assess the pronunciation of a speaker's voice. | Not available | Recommended |
| Translate live audio from one language to another. | Not available | Recommended |
| Translate prerecorded audio from other languages into English. | Recommended | Available |
| Translate prerecorded audio into languages other than English. | Not available | Recommended |

Whisper model via Azure AI Speech or via Azure OpenAI Service?

If you decide to use the Whisper model, you have two options. You can choose whether to use the Whisper Model via Azure OpenAI or via Azure AI Speech. In either case, the readability of the transcribed text is the same. You can input mixed language audio and the output is in English.

Whisper Model via Azure OpenAI Service might be best for:

- Quickly transcribing audio files one at a time

- Translating audio from other languages into English

- Providing a prompt to the model to guide the output

- Supported file formats: mp3, mp4, mpeg, mpga, m4a, wav, and webm

Whisper Model via Azure AI Speech might be best for:

- Transcribing files larger than 25MB (up to 1GB). The file size limit for the Azure OpenAI Whisper model is 25 MB.

- Transcribing large batches of audio files

- Diarization to distinguish between the different speakers participating in the conversation. The Speech service provides information about which speaker was speaking a particular part of transcribed speech. The Whisper model via Azure OpenAI doesn't support diarization.

- Word-level timestamps

- Supported file formats: mp3, wav, and ogg

- Customization of the Whisper base model to improve accuracy for your scenario (coming soon)
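The size, format, and feature criteria in the two lists above can be sketched as a small decision helper. This is only an illustration of the stated limits, not an official tool; the function name is hypothetical, and reading "25 MB" and "1GB" as binary megabytes and gigabytes is an assumption.

```python
from pathlib import Path

AZURE_OPENAI_MAX_BYTES = 25 * 1024 * 1024  # 25 MB limit (binary units assumed)
AZURE_SPEECH_MAX_BYTES = 1024 ** 3  # "up to 1GB" (binary units assumed)
AZURE_OPENAI_FORMATS = {"mp3", "mp4", "mpeg", "mpga", "m4a", "wav", "webm"}
AZURE_SPEECH_FORMATS = {"mp3", "wav", "ogg"}


def suggest_whisper_offering(file_name, size_bytes,
                             need_diarization=False, need_word_timestamps=False):
    """Return the offering that fits the file, or None if neither does."""
    extension = Path(file_name).suffix.lstrip(".").lower()
    # Diarization, word-level timestamps, and large files point to Azure AI Speech.
    speech_only = (need_diarization or need_word_timestamps
                   or size_bytes > AZURE_OPENAI_MAX_BYTES)
    if not speech_only and extension in AZURE_OPENAI_FORMATS:
        return "Azure OpenAI Service"
    if extension in AZURE_SPEECH_FORMATS and size_bytes <= AZURE_SPEECH_MAX_BYTES:
        return "Azure AI Speech"
    return None
```

A result of None means the file would need converting or splitting before either offering accepts it.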

Regional support is another consideration.

- The Whisper model via Azure OpenAI Service is available in the following regions: East US 2, India South, North Central US, Norway East, Sweden Central, and West Europe.

- The Whisper model via Azure AI Speech is available in the following regions: Australia East, East US, North Central US, South Central US, Southeast Asia, UK South, and West Europe.

- Use Whisper models via the Azure AI Speech batch transcription API

- Try the speech to text quickstart for Whisper via Azure OpenAI

- Try the real-time speech to text quickstart via Azure AI Speech


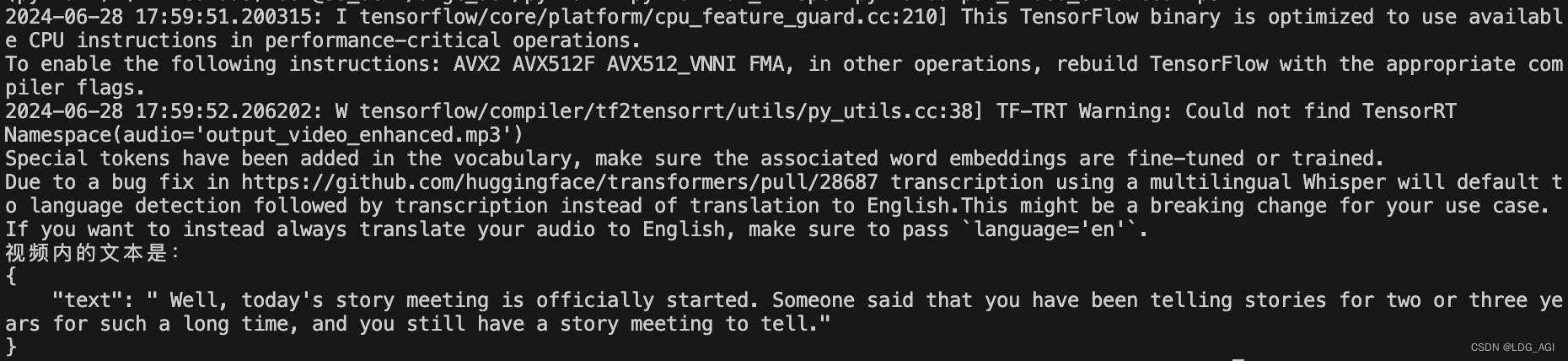

OpenAI Whisper – Converting Speech to Text

In the digital era, the demand for precise and efficient transcription of audio content is everywhere, spanning across professions and purposes. Whether you’re creating subtitles, conducting research, or pursuing various other tasks, the conversion of audio and video to text is a common requirement. While numerous modules exist for this purpose, the goal of 100% accuracy remains a challenge for machine learning models.

Enter Whisper, a speech recognition model available as a Python library, which stands out for its high accuracy in speech-to-text conversion. This article offers a guide to using it for audio transcription in Python without external APIs. The transcription described here does not involve sharing data with cloud providers or sending it to APIs; everything is done locally, so your audio stays on your machine.

Note: In this article, we will not be using any API service or sending the data to a server for processing. Instead, everything is done locally on your computer for free. Speed and accuracy depend on the model you choose and on your machine.

About OpenAI Whisper

Whisper is a general-purpose speech recognition model made by OpenAI. It is a multitasking model that can perform multilingual speech recognition, speech translation, and language identification. It was trained on 680,000 hours of multilingual and multitask audio data collected from the web.

Whisper is a transformer-based encoder-decoder model, also referred to as a sequence-to-sequence model, an architecture widely used in machine learning, specifically natural language processing.

Architecture and Working

Working of Whisper Model

The Whisper architecture is a standard encoder-decoder transformer with a stack of transformer blocks in both the encoder and the decoder; the number of blocks depends on the model size (for example, 12 in each for the small model). Each transformer block includes a self-attention layer and a feed-forward layer.

To establish connectivity between the encoder and decoder, a cross-attention layer is utilized. This layer allows the decoder to focus on the encoder output, aiding in the generation of text tokens that align with the audio signal. To develop its transcription capabilities, Whisper is trained on a vast dataset containing multilingual audio and text data. This extensive training data equips Whisper with the ability to transcribe speech proficiently in different languages and accents, even in environments plagued by noise.

Whisper employs a two-step process when processing audio input. Initially, it divides the input into 30-second segments. Next, each segment is converted into a log-Mel spectrogram, a time-frequency representation of the audio signal. These spectrograms are then sent to the encoder, which is responsible for learning to extract features from the audio signal.

The output of the encoder is subsequently forwarded to the decoder. The decoder’s role is to learn how to produce text captions using the audio features. Throughout this process, the decoder employs an attention mechanism to focus on various sections of the encoder output as it generates the text captions.
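The 30-second segmentation step described above can be sketched in plain Python. This is a simplified illustration rather than the library's own loop (which, as far as I know, advances its window based on predicted timestamps); the function name is hypothetical, and the 16 kHz default reflects the sample rate Whisper resamples audio to.

```python
SAMPLE_RATE = 16_000  # Whisper works on 16 kHz mono audio
WINDOW_SECONDS = 30


def split_into_windows(samples, sample_rate=SAMPLE_RATE, window_seconds=WINDOW_SECONDS):
    """Split audio samples into fixed-length windows, zero-padding the last one."""
    window_size = sample_rate * window_seconds
    windows = []
    for start in range(0, len(samples), window_size):
        window = list(samples[start:start + window_size])
        # Pad a short final window with silence so every window has equal length.
        window.extend([0.0] * (window_size - len(window)))
        windows.append(window)
    return windows
```

Each window would then be turned into a spectrogram before it reaches the encoder.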

Utilizing the Whisper for Audio Transcription

Tools required.

- A text editor (VS Code is recommended)

- The latest version of Python

- Alternatively, Google Colab

Steps to follow

If you are working on your desktop, then create a new folder, open VS Code, and create the file ‘app.py’ in the folder you have just created.

Installing Package

To import Whisper and use it to transcribe, we first need to install it on our local machine. Run the following command in the terminal to install the package with pip: pip install -U openai-whisper. An internet connection is required while installing the package.

Installing FFmpeg (For Windows)

Install and set ffmpeg in your machine’s environment variables. This step is only needed in Windows to get rid of the File Not Found error. If you are working in Google Colab, you can skip it.

Refer to this post to install ffmpeg on Windows.
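Before transcribing, it can help to confirm that ffmpeg is actually reachable from Python. A minimal check using only the standard library (the function name is hypothetical):

```python
import shutil


def ffmpeg_available():
    """Return True if an ffmpeg executable can be found on the PATH."""
    return shutil.which("ffmpeg") is not None


if not ffmpeg_available():
    print("ffmpeg was not found on the PATH; install it before transcribing.")
```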

Importing Package

Import the package using the following code:

Loading Model

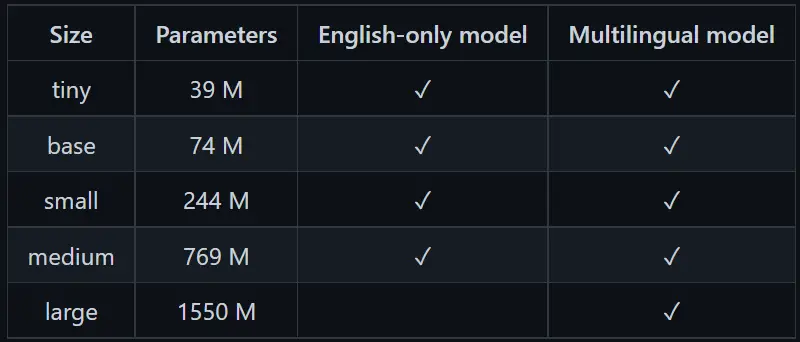

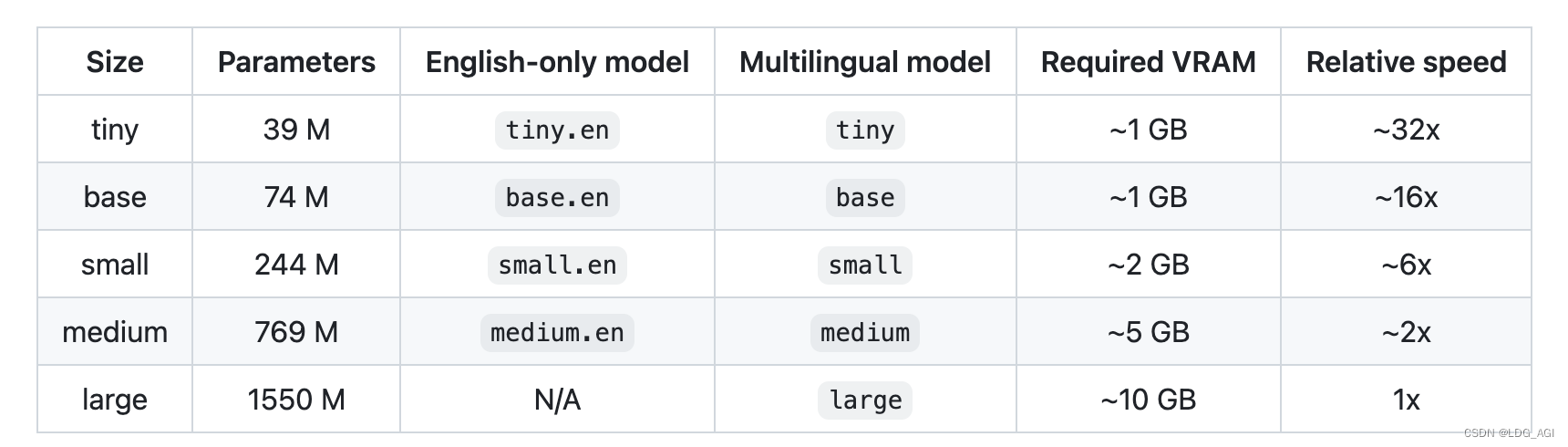

You can load the model to process the audio using the load_model function with model size as the parameter. Below is the code for it:
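A minimal sketch of the import and loading steps, assuming the openai-whisper package is installed. The wrapper function and the KNOWN_SIZES tuple are illustrative; whisper.load_model is the call that does the work, and the first call for a given size downloads the model weights.

```python
# Core size names; the package also ships variants (for example English-only models).
KNOWN_SIZES = ("tiny", "base", "small", "medium", "large")


def load_whisper_model(size="base"):
    """Load a Whisper model of the given size."""
    if size not in KNOWN_SIZES:
        raise ValueError(f"Unknown model size {size!r}; expected one of {KNOWN_SIZES}")
    # Imported here so that a typo in the size fails fast, before the heavy import.
    import whisper  # provided by the openai-whisper package

    return whisper.load_model(size)
```

Without the wrapper, the same step is simply import whisper followed by model = whisper.load_model("base").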

Note: Here we are using the “base” model, which is the second-smallest model. Larger models take more disk space and memory and run more slowly, but are generally more accurate. If you want the best accuracy and have sufficient resources (RAM, CPU, and ideally a GPU), go for the “large” model; otherwise, “base” and “medium” provide decent results. The base model size is 139 MB.

Transcribing Audio

After loading the model, you can use the transcribe function to convert the input audio into text. Its one required parameter is the path to the audio file you want to transcribe.

Here is the code for it.

Note: If the audio file is not in the current working directory, give the absolute path to it.
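The transcription call, together with the path handling mentioned in the note, might look like the sketch below. It assumes model is an object returned by whisper.load_model; the helper name transcribe_file is hypothetical.

```python
from pathlib import Path


def transcribe_file(model, audio_path):
    """Resolve the audio path and run the model's transcribe() on it."""
    path = Path(audio_path).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"Audio file not found: {path}")
    # transcribe() accepts a path string and returns a dict with keys
    # such as "text", "segments", and "language".
    return model.transcribe(str(path))
```

Resolving the path first means a relative name such as audio.mp3 is looked up in the current working directory and a clear error is raised if it is missing.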

Optional Parameters

Some of the additional useful parameters we can use while transcribing audio are:

- word_timestamps: When set to True, the output includes each word along with the time at which it was spoken in the audio.

- initial_prompt: This is very handy when we want to steer the style of the transcription. For example, if the output doesn’t contain punctuation, we can supply a starting sentence like “Hello, include punctuation!” to nudge the model to punctuate the transcription.

- temperature: This controls the randomness of decoding; lower values give more deterministic output.
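As a sketch of how these three parameters fit together, the helper below passes them to transcribe and collects the per-word timings. It assumes model comes from whisper.load_model and that, with word_timestamps=True, each segment in the result carries a "words" list with "word", "start", and "end" entries; the helper name is hypothetical.

```python
def transcribe_with_word_times(model, audio_path, prompt=None):
    """Transcribe audio and return a list of (word, start_seconds, end_seconds)."""
    result = model.transcribe(
        audio_path,
        word_timestamps=True,   # attach timing information to each word
        initial_prompt=prompt,  # optional text that steers style and punctuation
        temperature=0.0,        # 0.0 requests the most deterministic decoding
    )
    words = []
    for segment in result.get("segments", []):
        for word in segment.get("words", []):
            words.append((word["word"].strip(), word["start"], word["end"]))
    return words
```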

Getting Result

After processing is done, the result is stored in the transcription variable, which contains text and metadata for transcription. We can extract the final text using the below code:

Complete Code

Here is the complete code for transcribing audio into text. You can download the audio file from here.
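Putting the steps together, a compact end-to-end sketch could look like this. It assumes the openai-whisper package and ffmpeg are installed; the function name and the optional model argument (useful for reusing an already loaded model) are illustrative choices.

```python
def transcribe_to_text(audio_path, model_size="base", model=None):
    """Load a Whisper model if needed, transcribe the file, and return the text."""
    if model is None:
        import whisper  # provided by the openai-whisper package

        model = whisper.load_model(model_size)
    transcription = model.transcribe(audio_path)
    # The result is a dict; the full transcript lives under the "text" key.
    return transcription["text"].strip()
```

In app.py you would then call print(transcribe_to_text("audio.mp3")), replacing audio.mp3 with the name of your own file.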

Applications

Whisper has a range of applications, such as:

- Speech Recognition: Whisper enables the conversion of audio recordings into written text. This functionality proves valuable in generating transcripts for various contexts like meetings, lectures, and other audio recordings.

- Speech Translation: Whisper can translate speech from other languages into English text. This capability is particularly helpful when working with recordings of people who speak different languages.

- Language Detection: Whisper can be utilized to identify the language present in an audio recording. This feature is beneficial in determining the language used in a video or audio clip.
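Translation and language detection can both be reached through the same transcribe call. The sketch below assumes model comes from whisper.load_model, that task="translate" requests English output, and that the result dict reports the detected language under the "language" key; the helper name is hypothetical.

```python
def translate_to_english(model, audio_path):
    """Return (detected_language_code, english_text) for an audio file."""
    # task="translate" asks Whisper for English text instead of a
    # transcript in the spoken language.
    result = model.transcribe(audio_path, task="translate")
    return result["language"], result["text"].strip()
```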

Overall, the model can be used in many ways.


WhisperSpeech

An Open Source text-to-speech system built by inverting Whisper. Previously known as spear-tts-pytorch.

We want this model to be like Stable Diffusion but for speech – both powerful and easily customizable.

We are working only with properly licensed speech recordings and all the code is Open Source so the model will be always safe to use for commercial applications.

Currently the models are trained on the English LibriLight dataset. In the next release we want to target multiple languages (Whisper and EnCodec are both multilanguage).

Progress update [2024-01-18]

We spent the last week optimizing inference performance. We integrated torch.compile, added kv-caching and tuned some of the layers – we are now working over 12x faster than real-time on a consumer 4090!

We also added an easy way to test voice-cloning. Here is a sample voice cloned from a famous speech by Winston Churchill:

https://github.com/collabora/WhisperSpeech/assets/107984/bd28110b-31fb-4d61-83f6-c997f560bc26

We can also mix languages in a single sentence (here the highlighted English project names are seamlessly mixed into Polish speech):

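To jest pierwszy test wielojęzycznego Whisper Speech modelu zamieniającego tekst na mowę, który Collabora i Laion nauczyli na superkomputerze Jewels. (English: “This is the first test of a multilingual Whisper Speech model converting text to speech, which Collabora and Laion trained on the Jewels supercomputer.”)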

https://github.com/collabora/WhisperSpeech/assets/107984/d7092ef1-9df7-40e3-a07e-fdc7a090ae9e

You can test all of these on Colab. A Huggingface Space is coming soon.

Progress update [2024-01-10]

We’ve pushed a new SD S2A model that is a lot faster while still generating high-quality speech. We’ve also added an example of voice cloning based on a reference audio file.

As always, you can check out our Colab to try it yourself!

Progress update [2023-12-10]

Another trio of models, this time they support multiple languages (English and Polish). Here are two new samples for a sneak peek. You can check out our Colab to try it yourself!

English speech, female voice (transferred from a Polish language dataset):

https://github.com/collabora/WhisperSpeech/assets/107984/aa5a1e7e-dc94-481f-8863-b022c7fd7434

A Polish sample, male voice:

https://github.com/collabora/WhisperSpeech/assets/107984/4da14b03-33f9-4e2d-be42-f0fcf1d4a6ec

Older progress updates are archived here

We encourage you to start with the Google Colab link above or run the provided notebook locally. If you want to download manually or train the models from scratch then both the WhisperSpeech pre-trained models as well as the converted datasets are available on HuggingFace.

- Gather a bigger emotive speech dataset

- Figure out a way to condition the generation on emotions and prosody

- Create a community effort to gather freely licensed speech in multiple languages

- Train final multi-language models

Architecture

The general architecture is similar to AudioLM, SPEAR TTS from Google and MusicGen from Meta. We avoided the NIH syndrome and built it on top of powerful Open Source models: Whisper from OpenAI to generate semantic tokens and perform transcription, EnCodec from Meta for acoustic modeling and Vocos from Charactr Inc as the high-quality vocoder.

Whisper for modeling semantic tokens

We utilize the OpenAI Whisper encoder block to generate embeddings which we then quantize to get semantic tokens.

If the language is already supported by Whisper then this process requires only audio files (without ground truth transcriptions).
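To illustrate what quantizing embeddings into tokens means, here is a generic nearest-neighbour vector quantization sketch in plain Python. It is not WhisperSpeech's actual quantizer, which is learned during training; the function name and the tiny codebook in the usage note are made up for the example.

```python
def quantize(embeddings, codebook):
    """Map each embedding vector to the index of its nearest codebook vector."""
    tokens = []
    for vector in embeddings:
        # Squared Euclidean distance from this vector to every codebook entry.
        distances = [
            sum((a - b) ** 2 for a, b in zip(vector, code))
            for code in codebook
        ]
        tokens.append(distances.index(min(distances)))
    return tokens
```

With a codebook of [[0, 0], [1, 1], [5, 5]], the embeddings [[0.1, -0.1], [0.9, 1.2], [4, 6]] map to the token indices 0, 1, and 2 respectively.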

EnCodec for modeling acoustic tokens

We use EnCodec to model the audio waveform. Out of the box it delivers reasonable quality at 1.5kbps and we can bring this to high-quality by using Vocos – a vocoder pretrained on EnCodec tokens.
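The 1.5kbps figure can be sanity-checked with a little arithmetic. The sketch assumes the published configuration of EnCodec's 24 kHz model: 75 token frames per second and codebooks with 1024 entries (10 bits per token). Both constants are assumptions taken from the EnCodec release, not from this README.

```python
import math

FRAMES_PER_SECOND = 75  # assumed frame rate of the 24 kHz EnCodec model
CODEBOOK_SIZE = 1024    # assumed number of entries per codebook


def bitrate_bps(num_codebooks):
    """Bits per second needed to transmit the given number of token streams."""
    bits_per_token = int(math.log2(CODEBOOK_SIZE))  # 10 bits for 1024 entries
    return num_codebooks * bits_per_token * FRAMES_PER_SECOND
```

Under these assumptions two codebooks give 2 x 10 x 75 = 1500 bits per second, which matches the 1.5kbps setting.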

Appreciation

This work would not be possible without the generous sponsorships from:

- Collabora – code development and model training

- LAION – community building and datasets (special thanks to

- Jülich Supercomputing Centre - JUWELS Booster supercomputer

We gratefully acknowledge the Gauss Centre for Supercomputing e.V. (www.gauss-centre.eu) for funding part of this work by providing computing time through the John von Neumann Institute for Computing (NIC) on the GCS Supercomputer JUWELS Booster at Jülich Supercomputing Centre (JSC), with access to compute provided via LAION cooperation on foundation models research.

We’d like to also thank individual contributors for their great help in building this model:

- inevitable-2031 ( qwerty_qwer on Discord) for dataset curation

We rely on many amazing Open Source projects and research papers:


OpenAI debuts Whisper API for speech-to-text transcription and translation

To coincide with the rollout of the ChatGPT API, OpenAI today launched the Whisper API, a hosted version of the open source Whisper speech-to-text model that the company released in September 2022.

Priced at $0.006 per minute, Whisper is an automatic speech recognition system that OpenAI claims enables “robust” transcription in multiple languages as well as translation from those languages into English. It takes files in a variety of formats, including M4A, MP3, MP4, MPEG, MPGA, WAV and WEBM.
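For a sense of scale, the quoted per-minute price can be turned into a quick estimate. The sketch uses only the $0.006 figure above and does not model how OpenAI rounds durations when billing; the function name is hypothetical.

```python
from decimal import Decimal

PRICE_PER_MINUTE_USD = Decimal("0.006")  # launch price quoted above


def estimate_cost_usd(duration_seconds):
    """Estimate the transcription cost in US dollars for audio of this length."""
    minutes = Decimal(duration_seconds) / Decimal(60)
    # Round to a hundredth of a cent to keep the estimate readable.
    return (minutes * PRICE_PER_MINUTE_USD).quantize(Decimal("0.0001"))
```

At this rate a one-hour recording (3600 seconds) comes to $0.36.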

Countless organizations have developed highly capable speech recognition systems, which sit at the core of software and services from tech giants like Google, Amazon and Meta. But what makes Whisper different is that it was trained on 680,000 hours of multilingual and “multitask” data collected from the web, according to OpenAI president and chairman Greg Brockman, which led to improved recognition of unique accents, background noise and technical jargon.

“We released a model, but that actually was not enough to cause the whole developer ecosystem to build around it,” Brockman said in a video call with TechCrunch yesterday afternoon. “The Whisper API is the same large model that you can get open source, but we’ve optimized to the extreme. It’s much, much faster and extremely convenient.”

To Brockman’s point, there’s plenty in the way of barriers when it comes to enterprises adopting voice transcription technology. According to a 2020 Statista survey, companies cite accuracy, accent- or dialect-related recognition issues and cost as the top reasons they haven’t embraced tech like speech-to-text.

Whisper has its limitations, though — particularly in the area of “next-word” prediction. Because the system was trained on a large amount of noisy data, OpenAI cautions that Whisper might include words in its transcriptions that weren’t actually spoken — possibly because it’s both trying to predict the next word in audio and transcribe the audio recording itself. Moreover, Whisper doesn’t perform equally well across languages, suffering from a higher error rate when it comes to speakers of languages that aren’t well-represented in the training data.

That last bit is nothing new to the world of speech recognition, unfortunately. Biases have long plagued even the best systems, with a 2020 Stanford study finding systems from Amazon, Apple, Google, IBM and Microsoft made far fewer errors — about 19% — with users who are white than with users who are Black.

Despite this, OpenAI sees Whisper’s transcription capabilities being used to improve existing apps, services, products and tools. Already, AI-powered language learning app Speak is using the Whisper API to power a new in-app virtual speaking companion.

If OpenAI can break into the speech-to-text market in a major way, it could be quite profitable for the Microsoft-backed company. According to one report, the segment could be worth $5.4 billion by 2026, up from $2.2 billion in 2021.

“Our picture is that we really want to be this universal intelligence,” Brockman said. “We really want to, very flexibly, be able to take in whatever kind of data you have, whatever kind of task you want to accomplish, and be a force multiplier on that attention.”


How to Use Whisper: A Free Speech-to-Text AI Tool by OpenAI

- September 21, 2022


Whisper is an automatic speech recognition (ASR) system that can understand multiple languages. It has been trained on 680,000 hours of supervised data collected from the web.

Whisper is developed by OpenAI. It’s free and open source.

Speech processing is a critical component of many modern applications, from voice-activated assistants to automated customer service systems. This tool will make it easier than ever to transcribe and translate speech, making it more accessible to a wider audience. OpenAI hopes that by open-sourcing their models and code, others will be able to build upon their work to create even more powerful applications.

Whisper can handle transcription in multiple languages, and it can also translate those languages into English.

It will also be used by commercial software developers who want to add speech recognition capabilities to their products. This will help them save a lot of money since they won’t have to pay for a commercial speech recognition tool.

I think this tool is going to be very popular, and I think it has a lot of potential.

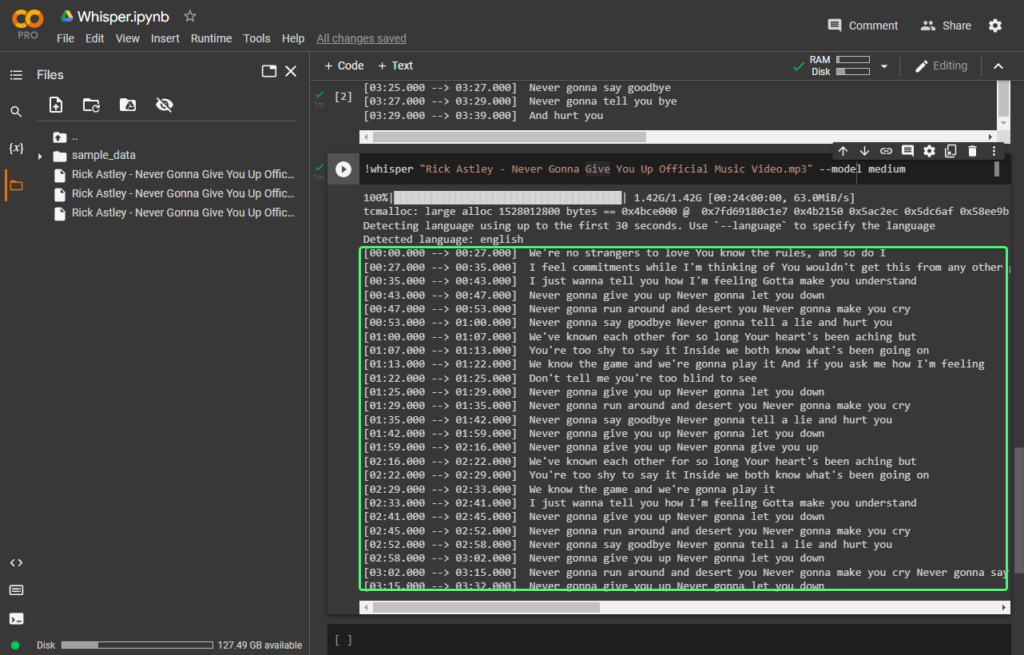

In this tutorial, we’ll get started using Whisper in Google Colab. We’ll quickly install it, and then we’ll run it with one line to transcribe an mp3 file. We won’t go in-depth, and we want to just test it out to see what it can do.

Table of Contents

- Quick video demo

- Open a Google Colab notebook

- Install Whisper

- Upload an audio file

- Run Whisper to transcribe speech to text

- Using Whisper models

- Whisper command-line options

- Useful resources & acknowledgements

This is a short demo showing how we’ll use Whisper in this tutorial.


Using Whisper For Speech Recognition Using Google Colab

Google Colab is a cloud-based service that allows users to write and execute code in a web browser. Essentially, Google Colab is like Google Docs, but for coding in Python.

You can use Google Colab on any device, and you don’t have to download anything. For a quick beginner-friendly intro, feel free to check out our tutorial on Google Colab to get comfortable with it.

If you don’t have a powerful computer or don’t have experience with Python, using Whisper on Google Colab will be much faster and hassle-free. For example, on my computer (CPU i7-7700K, GPU 1660 SUPER) transcribing 30 seconds of audio takes a few minutes, whereas on Google Colab it takes a few seconds.

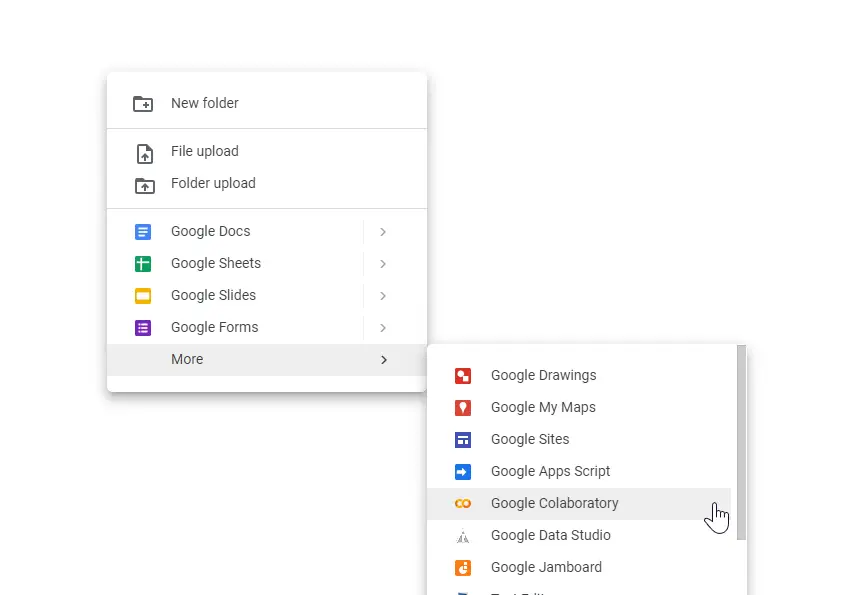

First, we’ll need to open a Colab Notebook. To do that, you can just visit this link https://colab.research.google.com/#create=true and Google will generate a new Colab notebook for you.

Alternatively, you can go anywhere in your Google Drive > Right Click (in an empty space, as if you wanted to create a new file) > More > Google Colaboratory. A new tab will open with your new notebook. It’s called Untitled.ipynb but you can rename it anything you want.

Next, we want to make sure our notebook is using a GPU. Google often allocates us a GPU by default, but not always.

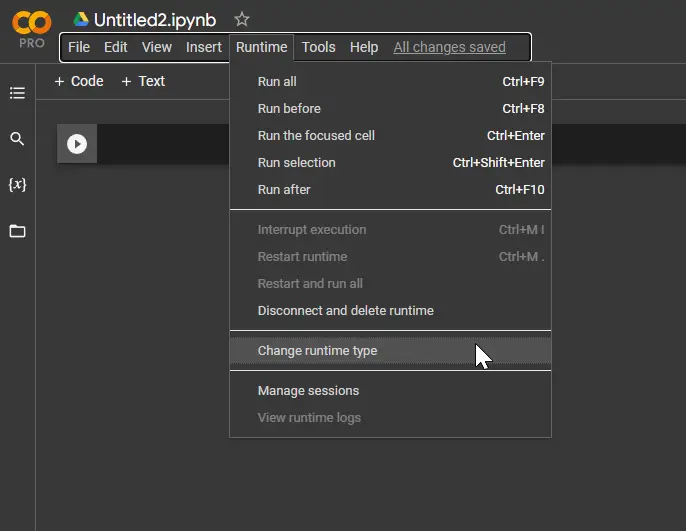

To do this, in our Google Colab menu, go to Runtime > Change runtime type.

Next, a small window will pop up. Under Hardware accelerator there’s a dropdown. Make sure GPU is selected and click Save.

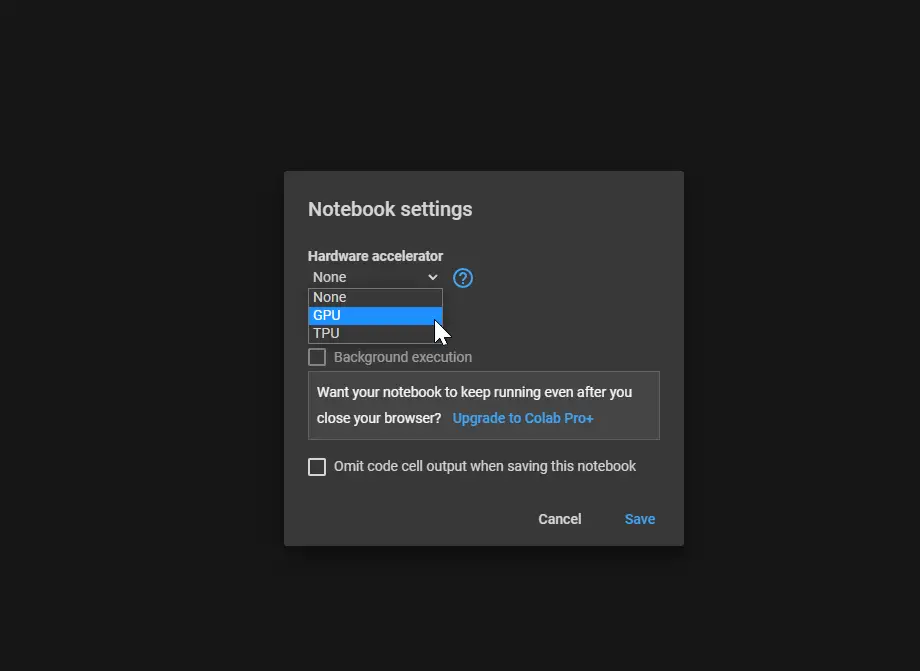

Now we can install Whisper. (You can also check the install instructions in the official GitHub repository.)

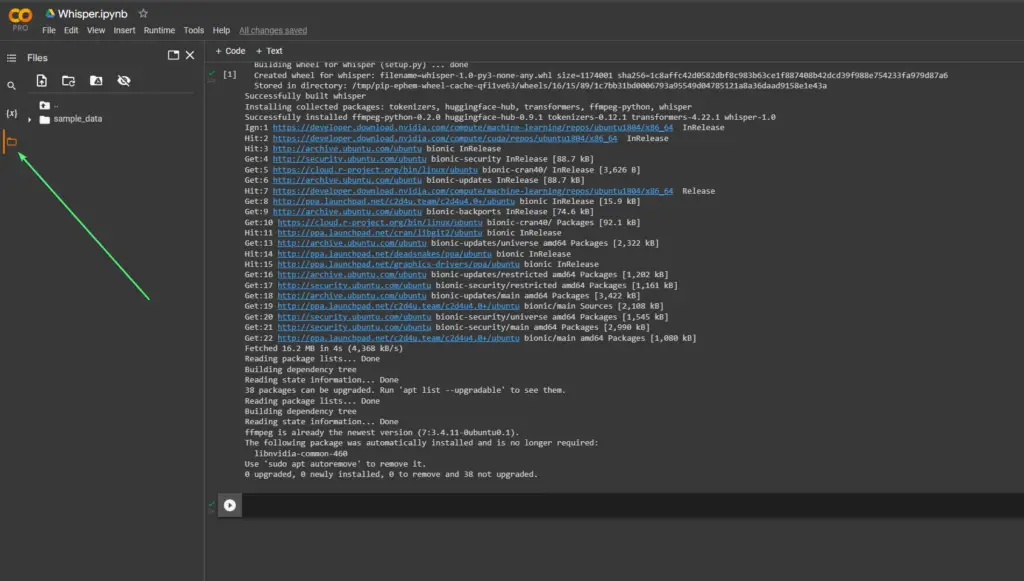

To install it, just paste the following lines in a cell. To run the commands, click the play button at the left of the cell or press Ctrl + Enter. The install process should take 1-2 minutes.

Note: We’re prefixing every command with ! because that’s how Google Colab runs shell commands instead of Python. If you’re using Whisper on your computer, in a terminal, then don’t use the ! at the beginning of the line.
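The install step can also be sketched in plain Python. This is a minimal sketch, not the tutorial's original cell: it assumes the `openai-whisper` package from PyPI and a Debian/Ubuntu machine (such as a Colab runtime) where `apt-get` can be run as root. The helper names `install_commands` and `run_install` are made up for illustration. In a Colab cell, the rough equivalent would be `!pip install -U openai-whisper` followed by `!apt-get install -y ffmpeg`.

```python
import subprocess
import sys
from typing import List


def install_commands() -> List[List[str]]:
    """Commands that install Whisper and ffmpeg (assumes a Debian/Ubuntu system)."""
    return [
        # Install the Whisper package into the current Python environment.
        [sys.executable, "-m", "pip", "install", "-U", "openai-whisper"],
        # Whisper relies on ffmpeg to decode audio files such as mp3.
        ["apt-get", "install", "-y", "ffmpeg"],
    ]


def run_install() -> None:
    """Run each install command in order, stopping at the first failure."""
    for cmd in install_commands():
        subprocess.run(cmd, check=True)
```

Calling `run_install()` performs the installation; nothing is installed just by defining the functions.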

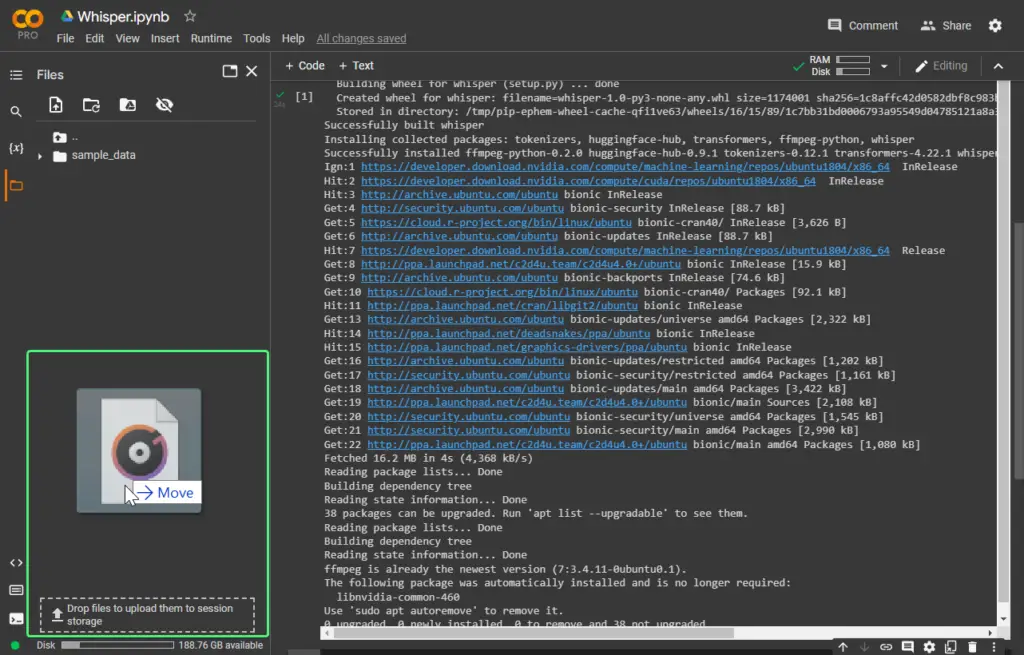

Now, we can upload a file to transcribe it. To do this, open the File Browser at the left of the notebook by pressing the folder icon.

Now you can press the upload file button at the top of the file browser, or just drag and drop a file from your computer and wait for it to finish uploading.

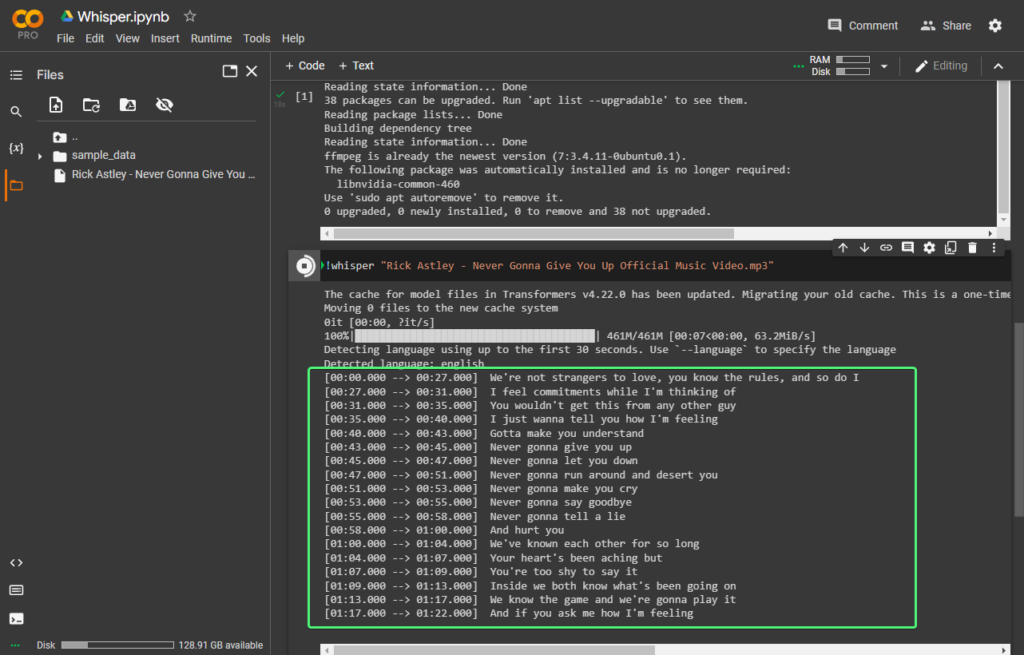

Next, we can simply run Whisper to transcribe the audio file using the following command. If this is the first time you’re running Whisper, it will first download some dependencies.

In less than a minute, it should start transcribing.
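As a rough illustration of the transcription step, the sketch below builds and runs the `whisper` command-line call from Python. The file name `my recording.mp3` and the helper names are placeholders, and the sketch assumes the `whisper` executable is already installed and on the PATH.

```python
import shlex
import subprocess
from typing import List


def whisper_command(audio_path: str) -> List[str]:
    """Build the basic command line: whisper <audio file>."""
    return ["whisper", audio_path]


def transcribe(audio_path: str) -> None:
    """Run Whisper on one file; the transcript is printed as it is produced."""
    subprocess.run(whisper_command(audio_path), check=True)


# Show the equivalent Colab cell content (note the leading "!").
print("!" + shlex.join(whisper_command("my recording.mp3")))
```

Building the command as a list keeps file names that contain spaces intact, which is easy to get wrong when typing the command by hand.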

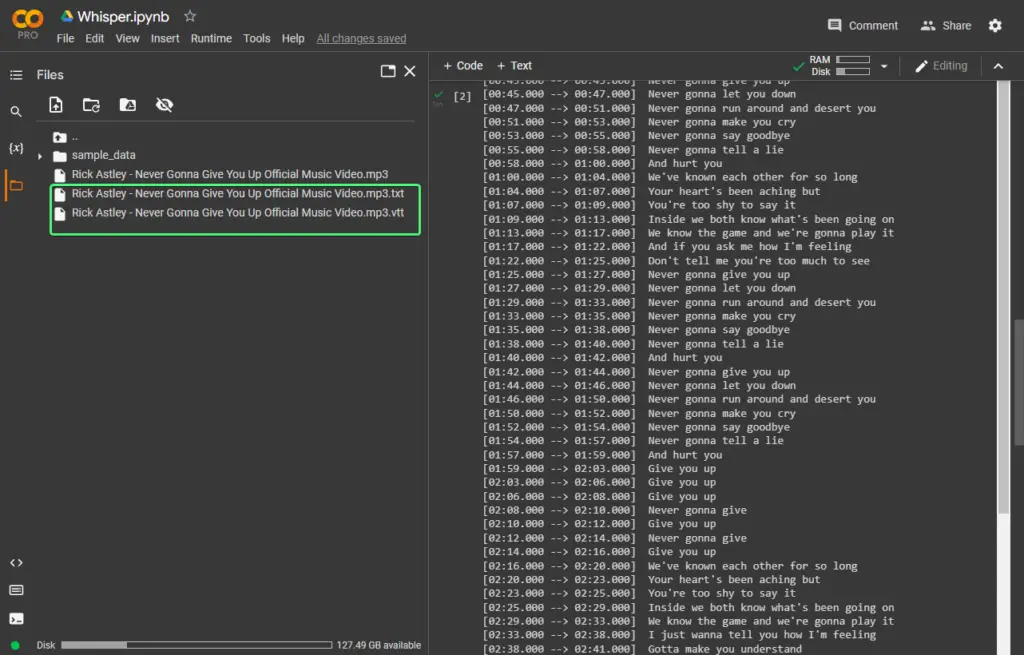

When it’s finished, you can find the transcription files in the same directory in the file browser:

Whisper comes with multiple models. You can read more about Whisper’s models here.

By default, it uses the small model. It’s faster but not as accurate as a larger model. For example, let’s use the medium model.

We can do this by running the command:

In my case:

The result is more accurate when using the medium model than the small one.
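A minimal sketch of selecting a model, assuming the `--model` option of the `whisper` command-line tool. The list of sizes below covers only the basic names and the file name is a placeholder; `whisper_command_with_model` is a hypothetical helper.

```python
from typing import List

# Basic model sizes, smallest to largest (language-specific variants are omitted).
MODEL_SIZES = ("tiny", "base", "small", "medium", "large")


def whisper_command_with_model(audio_path: str, model: str = "small") -> List[str]:
    """Build a whisper command line that selects a specific model size."""
    if model not in MODEL_SIZES:
        raise ValueError(f"unknown model size: {model!r}")
    return ["whisper", audio_path, "--model", model]


# Equivalent Colab cell: !whisper audio.mp3 --model medium
print(" ".join(whisper_command_with_model("audio.mp3", model="medium")))
```

Larger models take longer to download and run, so it can be worth trying the default first and moving up only if the transcript has too many errors.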

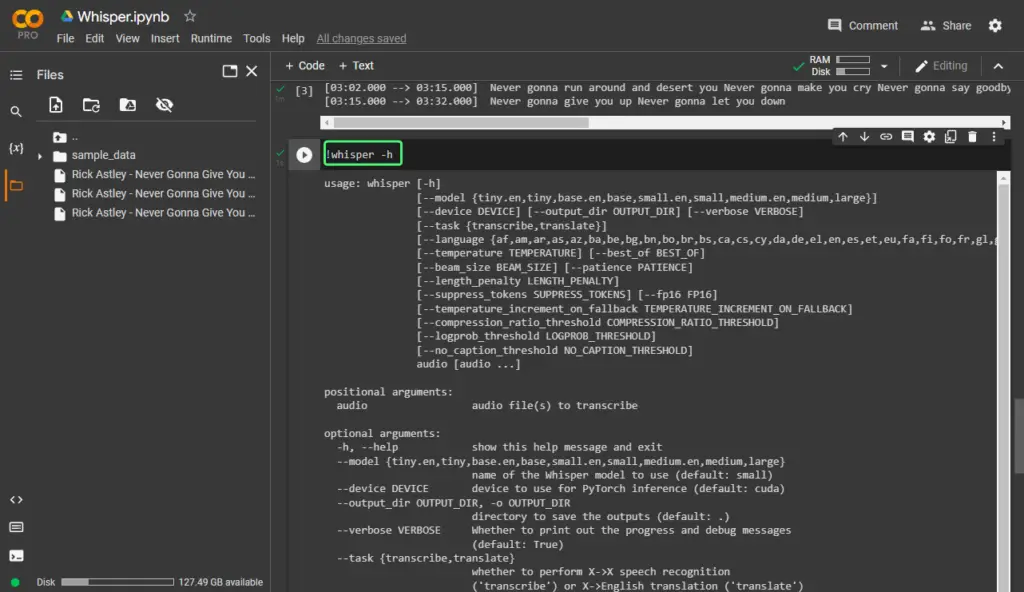

You can check out all the options you can use in the command-line for Whisper by running !whisper -h in Google Colab:

In this tutorial, we covered the basic usage of Whisper by running it via the command-line in Google Colab. This tutorial was meant just to get us started and see how OpenAI’s Whisper performs.

You can easily use Whisper from the command-line or in Python, as you’ve probably seen from the GitHub repository. We’ll most likely see some amazing apps pop up that use Whisper under the hood in the near future.
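For the Python route, a minimal sketch might look like the following. It assumes the `openai-whisper` package is installed; `whisper.load_model` and `model.transcribe` are the package's documented entry points, while `transcribe_file` and `format_segments` are hypothetical helpers written for this example.

```python
from typing import Any, Dict


def transcribe_file(audio_path: str, model_name: str = "small") -> Dict[str, Any]:
    """Transcribe one audio file and return Whisper's result dictionary."""
    import whisper  # imported here so the sketch loads even without the package

    model = whisper.load_model(model_name)
    return model.transcribe(audio_path)


def format_segments(result: Dict[str, Any]) -> str:
    """Render each transcribed segment as '[start - end] text', one per line."""
    lines = []
    for seg in result["segments"]:
        lines.append(f"[{seg['start']:.2f}s - {seg['end']:.2f}s] {seg['text'].strip()}")
    return "\n".join(lines)
```

A call such as `print(format_segments(transcribe_file("audio.mp3")))` would print a timestamped transcript, and the full text is available under the `"text"` key of the result.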

- The GitHub Repository for Whisper – https://github.com/openai/whisper . It has useful information on Whisper, as well as some nice examples of using Whisper from the command-line, or in Python.

- OpenAI Whisper – MultiLingual AI Speech Recognition Live App Tutorial – https://www.youtube.com/watch?v=ywIyc8l1K1Q . A very useful intro to Whisper, as well as a great demo on how to use it with a simple Web UI using Gradio.

- Hacker News Thread – https://news.ycombinator.com/item?id=32927360 . You can find some great insights in the comments.

worked great – THANK YOU !!

I’m using this to transcribe voice audio files from clients… super helpful.

Hi! Thanks for commenting! Glad to help! I’m happy you found it useful!

Great tip to use it on Colab instead of locally. WAY faster.

Hi! Thank you! Glad to be of service!

What’s the best way to use it for long transcriptions? Say 1-2 hours?

I’m using it to do 2-3 hour files and its working great.

Thank you!! Very helpful for my 8-mins talk.

Hi! Glad to help! Also thanks for the feedback. It is very much appreciated!

I tried several files and they kept erroring out and follow this to a t. channel element 0.0 is not allocated

Does not work errors everywhere dvck all geeks.

Hi, Ally. Thanks for commenting. Would you mind sharing a screenshot with the errors you’re getting? I just tried it now to make sure there haven’t been any updates on their end to cause errors.

Don’t know what she’s talking about. Still works great. This is fantastic.

Nice! Thanks for commenting! I’m happy to hear!

what is the progress bar indicating? when I use it on linux machine I get “FP16 is not supported on cpu using FP32 instead” what does this mean? is there a way to speed up the transcription? Thank you.

Hi, thanks for commenting. I believe it needs a GPU to speed up the transcription, and because it wasn’t able to use one it used your CPU, which is slower.

The message “FP16 is not supported on CPU, using FP32 instead” means that the hardware isn’t capable of performing the quicker, but less precise, FP16 computations, so it’s defaulting to the slower, but more precise, FP32 calculations. This could slow down the transcription.

For it to be faster you’d need a good Nvidia GPU with the CUDA toolkit installed, which is software that allows you to use your GPU for this type of task.

Hope that helps. Let me know if there’s anything I can help with. Thank you!

Omg, this way faster then doing it locally. It helped me a lot, and it’s very easy to use. Thank you very much!!!

Hi, Mustafa. Glad it helped and thanks for commenting and letting us know! It’s very much appreciated!

I have material in m3u8 format. How can I make transcriptions? This guide doesn’t work

Hi Parker. I believe m3u8 files only contain the location of the actual videos. So they’re like files containing a playlist.

I’m not sure where you got it from, but if it’s from a website where there was an embedded video you can use https://www.downloadhelper.net/ to reliably download the actual videos.

Hope this helps. I’m aware it may be confusing. I was confused the first time when dealing with m3u8 files.

this comes from the chrome extension Twitch vod downloader sample m3u8: https://dgeft87wbj63p.cloudfront.net/668f26476ac0d6233d08_demonzz1_40330491285_1703955163/chunked/index-dvr.m3u8

I am looking for a program that will quickly transcribe broadcast recordings from Twitch and I will not have to download them due to the slow Internet. I am looking for a program that will transcribe 4 hours of vod up to a maximum of 30 minutes. Do you have any idea?

I haven’t tried it out but I’m thinking this might work https://github.com/collabora/WhisperLive

It seems it also comes with a Chrome or Firefox extension which captures the audio from your current tab and transcribes it.

Unlike traditional speech recognition systems that rely on continuous audio streaming, we use voice activity detection (VAD) to detect the presence of speech and only send the audio data to whisper when speech is detected. This helps to reduce the amount of data sent to the whisper model and improves the accuracy of the transcription output.

It looks pretty cool.

I don’t know if I’ll be able to try it out myself anytime soon, however, due to other work I am involved in at the moment.

I hope it helps.

Hi. This tutorial always worked perfect for me since I found it and recommended it to everyone. I am a journalist and in my profession it is a wonderful thing. But since some time ago there was an update that makes a lot of nVidia dependencies to load, and the two minutes that Whisper took before to be ready went to almost twenty… Cheers!

Hearing this made my day. I’m really happy it helps! Thanks so much for the kind words, Marcelo. I very much appreciate it!

Thanks to you! In fact, I adapted what you wrote and made a tutorial in Spanish for my co-workers.



collabora/WhisperSpeech


An Open Source text-to-speech system built by inverting Whisper. Previously known as spear-tts-pytorch .

We want this model to be like Stable Diffusion but for speech – both powerful and easily customizable.

We are working only with properly licensed speech recordings and all the code is Open Source so the model will be always safe to use for commercial applications.

Currently the models are trained on the English LibreLight dataset. In the next release we want to target multiple languages (Whisper and EnCodec are both multilanguage).

Sample of the synthesized voice:

Progress update [2024-01-29]

We successfully trained a tiny S2A model on an en+pl+fr dataset and it can do voice cloning in French:

We were able to do this with frozen semantic tokens that were only trained on English and Polish. This supports the idea that we will be able to train a single semantic token model to support all the languages in the world. Quite likely even ones that are not currently well supported by the Whisper model. Stay tuned for more updates on this front. :)

Progress update [2024-01-18]

We spent the last week optimizing inference performance. We integrated torch.compile, added kv-caching and tuned some of the layers – we are now working over 12x faster than real-time on a consumer 4090!

We can mix languages in a single sentence (here the highlighted English project names are seamlessly mixed into Polish speech):

To jest pierwszy test wielojęzycznego Whisper Speech modelu zamieniającego tekst na mowę, który Collabora i Laion nauczyli na superkomputerze Jewels .

We also added an easy way to test voice-cloning. Here is a sample voice cloned from a famous speech by Winston Churchill (the radio static is a feature, not a bug ;) – it is part of the reference recording):

You can test all of these on Colab (we optimized the dependencies so now it takes less than 30 seconds to install). A Huggingface Space is coming soon.

Progress update [2024-01-10]

We’ve pushed a new SD S2A model that is a lot faster while still generating high-quality speech. We’ve also added an example of voice cloning based on a reference audio file.

As always, you can check out our Colab to try it yourself!

Progress update [2023-12-10]

Another trio of models, this time they support multiple languages (English and Polish). Here are two new samples for a sneak peek. You can check out our Colab to try it yourself!

English speech, female voice (transferred from a Polish language dataset):

A Polish sample, male voice:

Older progress updates are archived here

We encourage you to start with the Google Colab link above or run the provided notebook locally. If you want to download manually or train the models from scratch then both the WhisperSpeech pre-trained models as well as the converted datasets are available on HuggingFace.

- Gather a bigger emotive speech dataset

- Figure out a way to condition the generation on emotions and prosody

- Create a community effort to gather freely licensed speech in multiple languages

- Train final multi-language models

Architecture

The general architecture is similar to AudioLM, SPEAR TTS from Google and MusicGen from Meta. We avoided the NIH syndrome and built it on top of powerful Open Source models: Whisper from OpenAI to generate semantic tokens and perform transcription, EnCodec from Meta for acoustic modeling and Vocos from Charactr Inc as the high-quality vocoder.

We gave two presentations diving deeper into WhisperSpeech. The first one talks about the challenges of large scale training:

Tricks Learned from Scaling WhisperSpeech Models to 80k+ Hours of Speech - video recording by Jakub Cłapa, Collabora

The other one goes a bit more into the architectural choices we made:

Open Source Text-To-Speech Projects: WhisperSpeech - In Depth Discussion

Whisper for modeling semantic tokens

We utilize the OpenAI Whisper encoder block to generate embeddings which we then quantize to get semantic tokens.

If the language is already supported by Whisper then this process requires only audio files (without ground truth transcriptions).
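To make the "quantize embeddings into tokens" idea concrete, here is a toy sketch. The README does not say how the quantization is done, so this example assumes plain nearest-neighbour vector quantization against a fixed codebook. The two-dimensional vectors and the codebook are invented for illustration and have nothing to do with the real model's dimensions.

```python
from typing import List, Sequence

Vector = Sequence[float]


def nearest_code(embedding: Vector, codebook: Sequence[Vector]) -> int:
    """Return the index of the codebook entry closest to the embedding."""
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(codebook)), key=lambda i: sq_dist(embedding, codebook[i]))


def quantize(embeddings: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each embedding (one per audio frame) to a discrete token id."""
    return [nearest_code(e, codebook) for e in embeddings]


# Hypothetical 3-entry codebook and three frame embeddings.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
frames = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.8]]
print(quantize(frames, codebook))  # [0, 1, 2]
```

Each continuous frame embedding collapses to a single integer, which is what lets a downstream model treat speech content as a sequence of discrete tokens.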

EnCodec for modeling acoustic tokens

We use EnCodec to model the audio waveform. Out of the box it delivers reasonable quality at 1.5kbps and we can bring this to high-quality by using Vocos – a vocoder pretrained on EnCodec tokens.

Appreciation

This work would not be possible without the generous sponsorships from:

- Collabora – code development and model training

- LAION – community building and datasets (special thanks to

- Jülich Supercomputing Centre - JUWELS Booster supercomputer

We gratefully acknowledge the Gauss Centre for Supercomputing e.V. (www.gauss-centre.eu) for funding part of this work by providing computing time through the John von Neumann Institute for Computing (NIC) on the GCS Supercomputer JUWELS Booster at Jülich Supercomputing Centre (JSC), with access to compute provided via LAION cooperation on foundation models research.

We’d like to also thank individual contributors for their great help in building this model:

- inevitable-2031 ( qwerty_qwer on Discord) for dataset curation

We rely on many amazing Open Source projects and research papers:


Instantly transcribe voice messages to text on your iPhone with Whisper AI

I've made a Shortcut that uses Whisper AI API to convert audio to text from the iPhone.

It’s a million times better than iPhone’s native speech-to-text 😅

You can use it with:

Existing audio notes (like in WhatsApp, Telegram or voice memos)

Or by recording a new voice note with the Shortcut directly

Here you have the guide on how to set it up and the link to the shortcut ;) ↓

https://giacomomelzi.com/transcribe-audio-messages-iphone-ai/

What do you think? 😄

P.S. It works on the Mac as well


How to Turn Your Voice to Text in Real Time With Whisper Desktop


The very same people behind ChatGPT have created another AI-based tool you can use today to boost your productivity. We're referring to Whisper, a voice-to-text solution that eclipsed all similar solutions that came before it.

You can use Whisper in your programs or the command line. And yet, that defeats its very purpose: typing without a keyboard. If you need to type to use it, why use it to avoid typing? Thankfully, you can now use Whisper through a desktop GUI. Even better, it can also transcribe your voice almost in real time. Let's see how you can type with your voice using Whisper Desktop.

What Is OpenAI's Whisper?

OpenAI's Whisper is an Automatic Speech Recognition system (ASR for short) or, to put it simply, a solution for converting spoken language into text.

However, unlike older dictation and transcription systems, Whisper is an AI solution trained on over 680,000 hours of speech in various languages. Whisper offers unparalleled accuracy and, quite impressively, not only is it multilingual, but it can also translate between languages.

More importantly, it's free and available as open source. Thanks to that, many developers have forked its code into their own projects or created apps that rely on it, like Whisper Desktop.

If you'd prefer the "vanilla" version of Whisper and the versatility of the terminal instead of clunky GUIs, check our article on how to turn your voice into text with OpenAI's Whisper for Windows.

Are Whisper and Whisper Desktop the Same?

Despite its official-sounding name, Whisper Desktop is a third-party GUI for Whisper, made for everyone who'd prefer to click buttons instead of typing commands.

Whisper Desktop is a standalone solution that doesn't rely on an existing Whisper installation. As a bonus, it uses an alternative, optimized version of Whisper, so it should perform better than the standalone version.

Are you at the other end of the spectrum, looking for ways to build Whisper into your own solutions rather than for an easier way to use it than the terminal? Rejoice, for OpenAI has opened access to ChatGPT and Whisper APIs.

Download & Install Whisper Desktop

Although Whisper Desktop is easier to use than the standalone Whisper, its installation is more convoluted than repeatedly clicking Next in a wizard.

Transcribing With Whisper Desktop

Transcribing with Whisper Desktop is easy, but you may still need one or two clicks to use the app.

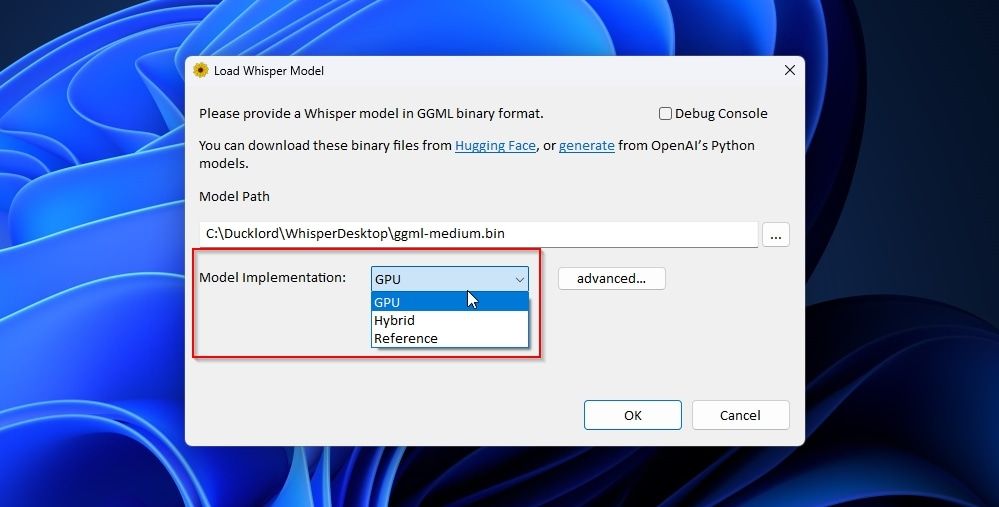

Rerun Whisper Desktop. Does it (still) miss the correct path to your downloaded language model? Click on the button with the three dots on the right of the field and manually select the file you downloaded from Hugging Face.

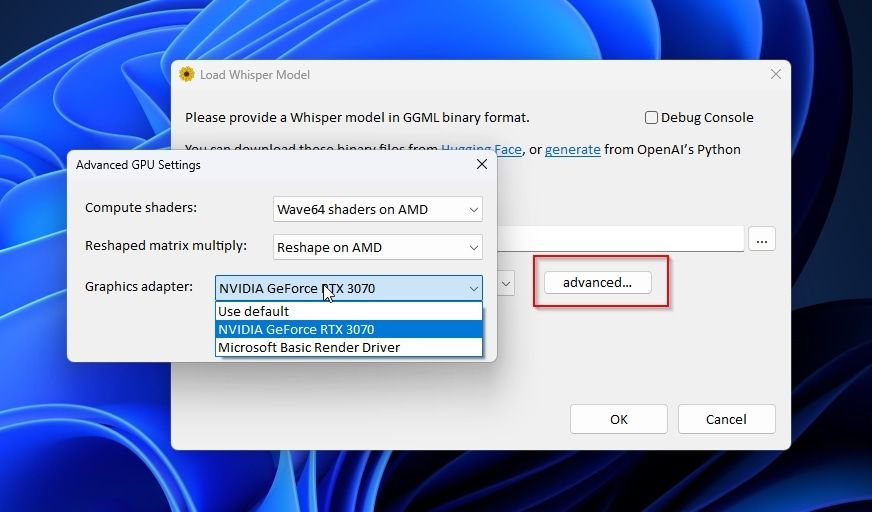

From this spot, you can also use the drop-down menu next to Model Implementation to choose if you want to run Whisper on your GPU (GPU), on both the CPU and GPU (Hybrid), or only on the CPU (Reference).

The Advanced button leads to more options that affect how Whisper will run on your hardware. However, since the button clearly states they are advanced, we suggest you only tweak them if you are troubleshooting or know what you are doing. Setting the wrong option values here can impose a performance penalty or render the app unusable.

Click on OK to move to the app's main interface.

If you already have a recording of your voice you want to turn into written text, click on Transcribe File and select it. Still, we will use Whisper Desktop for live transcription for this article.

The options offered are straightforward. You can select the language Whisper will use, choose if you want to translate between languages, and enable the app's Debug Console.

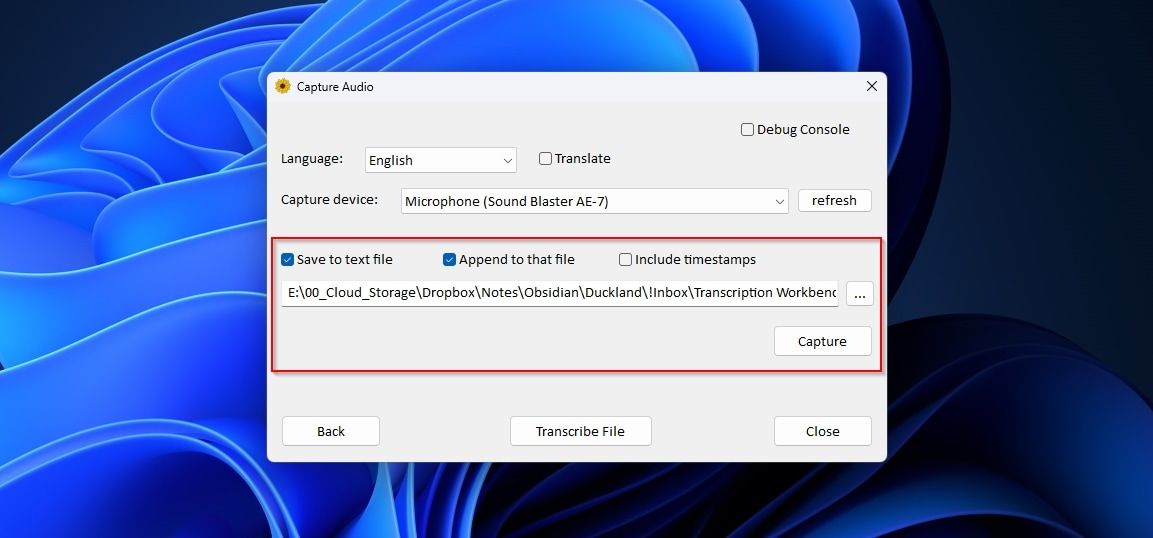

Most English-speaking users can safely skip those options and only ensure the correct audio input is selected from the pull-down menu next to Capture Device.

Make sure Save to text file and Append to that file are enabled to have Whisper Desktop save its output to a file without overwriting its content. Use the button with the three dots on the right of the file's path field to define said text file.

Click on Capture to begin transcribing your speech to text.

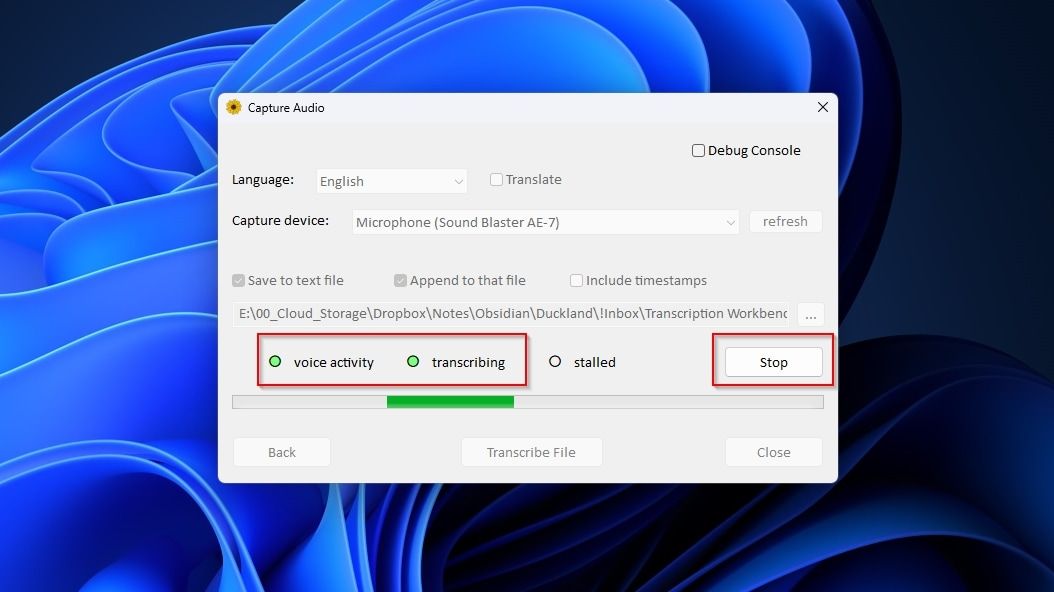

Whisper Desktop will show you three indicators for when it detects voice activity, when it's actively transcribing, and when the process is stalled.

You can keep talking for as long as you like, and you should occasionally see the first two indicators flashing while the app turns your voice into text. Click Stop when done.

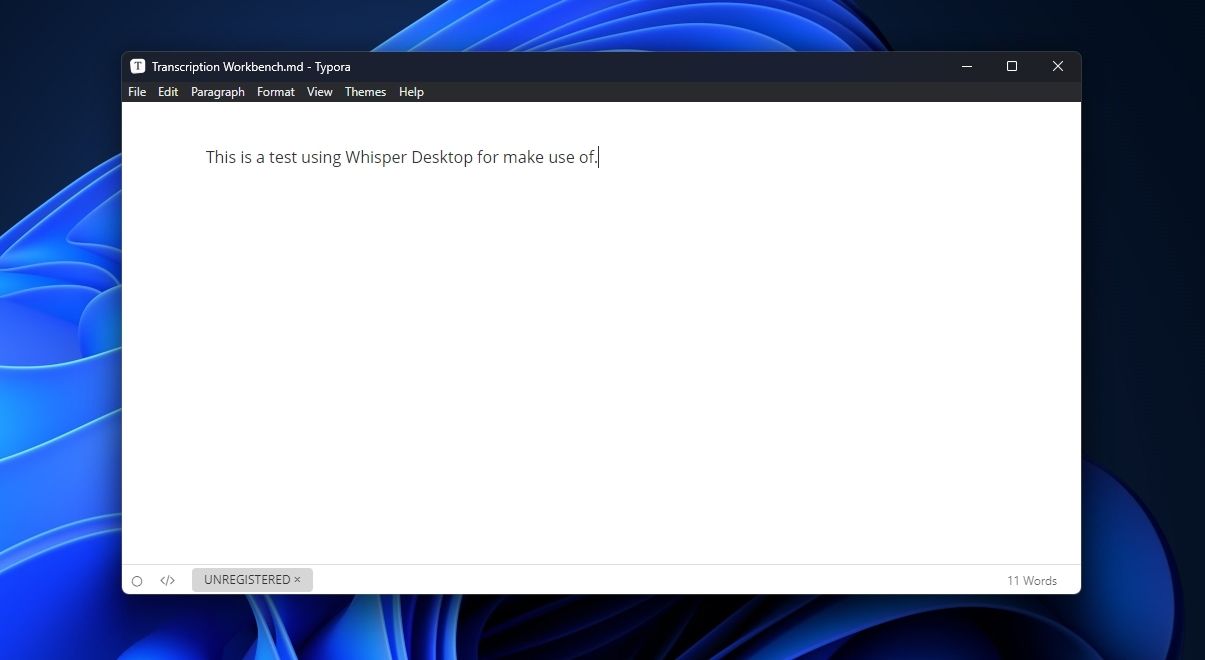

The text file you selected should open in your default text editor, containing in written form everything you said until you clicked Stop.

We should note that you can also do the opposite of what we saw here: convert any text to speech. This way you can listen to anything as if it were a podcast instead of tiring your eyes squinting at screens. For more info on that, check our article on some of the best free online tools to download text-to-speech as MP3 audio.

Whisper Desktop Voice-Typing Tips

Although Whisper Desktop can be a lifesaver, enabling you to write with your voice much quicker than you could type, it's far from perfect.

During our testing, we found that it may occasionally stutter, skip some words, fail to transcribe until you manually stop and restart the process, or get stuck in a loop and keep re-transcribing the same phrase repeatedly.

We believe those are temporary glitches that will be fixed since the standalone Whisper doesn't exhibit the same issues.

Apart from those minor bumps, turning your voice to text should be effortless with Whisper Desktop. Still, during our tests, we found that it can perform even better if...

- Instead of uttering only two or three words and then pausing, Whisper can understand you better if you go on longer. Try to at least give it an entire sentence at a time.

- For the same reason, avoid repeatedly starting and stopping the transcription process.

- Whenever you realize you made a mistake, ignore it and keep going. Loading and unloading the language model seems to be the most time-consuming part of the process with the current state of Whisper and our available hardware. So, it's quicker to keep talking and then edit out your mistakes afterward.

- As with the standalone version of Whisper, it's best to use the optimal language model for your available hardware. You can use up to the medium model if your GPU has 8GB of VRAM. For less VRAM, go for the smaller models. Only choose the slightly more accurate but also much more demanding large model if you use a GPU with 16GB of VRAM or more.

- Remember that the larger the language model, the slower the transcription process. Don't go for a model larger than needed. You'll probably find Whisper Desktop can already "understand you" most of the time with the medium or smaller models, with only one or two errors per paragraph.
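The VRAM guidance in the tips above can be written down as a small helper. This is only a sketch of the article's rule of thumb: the 8GB and 16GB thresholds come from the text, while the function name and the choice of "small" as the fallback for lower-memory GPUs are assumptions.

```python
def largest_practical_model(vram_gb: float) -> str:
    """Suggest the largest Whisper model worth trying for a given amount of VRAM."""
    if vram_gb >= 16:
        return "large"   # slightly more accurate, much more demanding
    if vram_gb >= 8:
        return "medium"
    return "small"       # assumed fallback for GPUs with less memory


for vram in (4, 8, 16):
    print(f"{vram}GB -> {largest_practical_model(vram)}")
```

The result is an upper bound rather than a recommendation: as the last tip says, a smaller model is often accurate enough and will transcribe faster.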

Are You Still Typing? Use Your Voice With Whisper

Despite requiring some time to set up, as you will see when you try it, Whisper Desktop performs much better than most alternatives, with much higher accuracy and better speed.

After you start using it to type with your voice, your keyboard may look like a relic from ancient times long gone.


OpenAI Whisper Audio Transcription Benchmarked on 18 GPUs: Up to 3,000 WPM

We tested it on everything from an RTX 4090 to an Intel Arc A380.

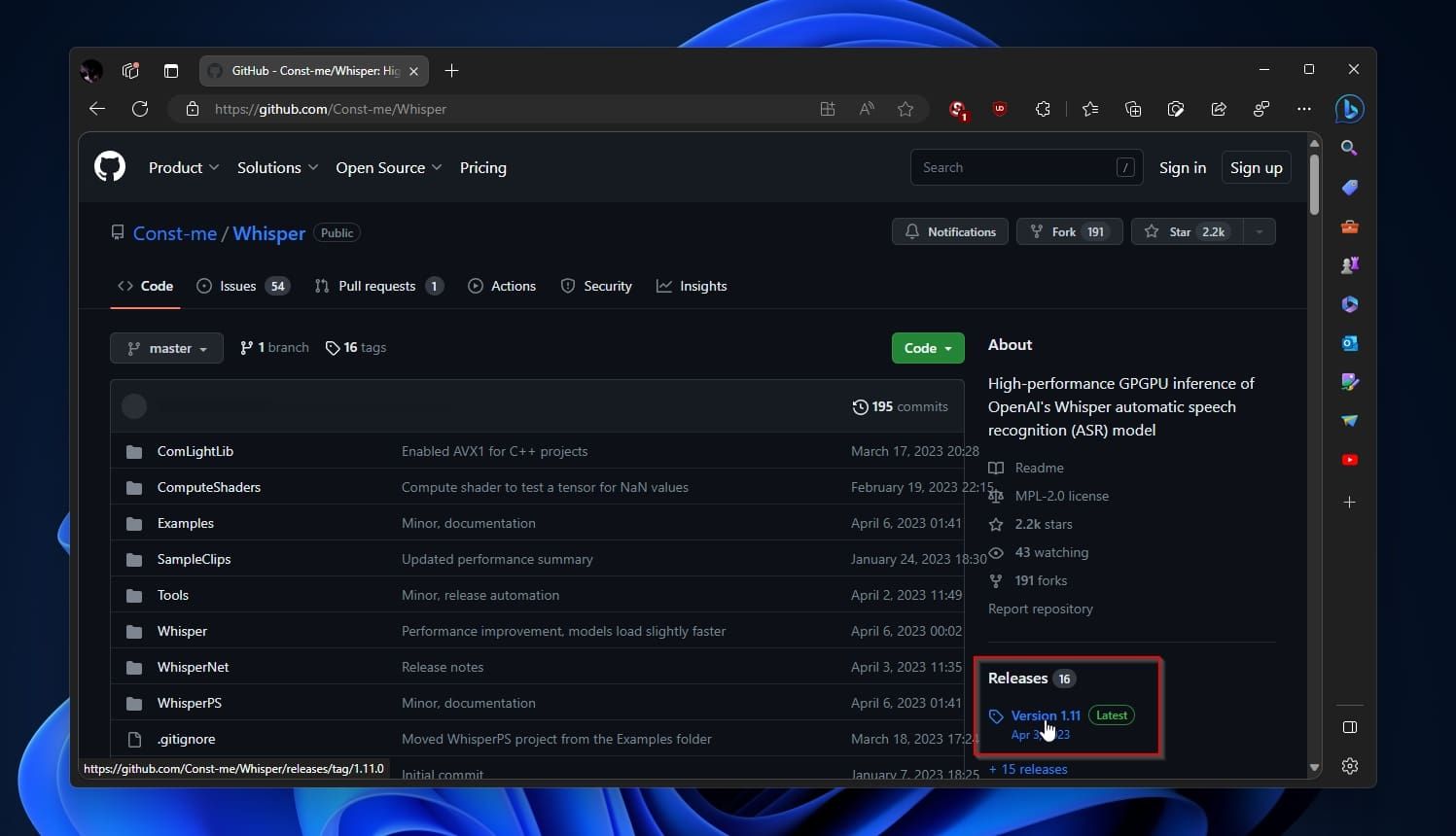

The best graphics cards aren't just for gaming, especially not when AI-based algorithms are all the rage. Besides ChatGPT, Bard, and Bing Chat (aka Sydney), which all run on data center hardware, you can run your own local version of Stable Diffusion, Text Generation, and various other tools... like OpenAI's Whisper. The last one is our subject today, and it can provide substantially faster than real-time transcription of audio via your GPU, with the entire process running locally for free. You can also run it on your CPU, though the speed drops precipitously.

Note also that Whisper can be used in real-time to do speech recognition, similar to what you can get through Windows or Dragon NaturallySpeaking. We did not attempt to use it in that fashion, as we were more interested in checking performance. Real-time speech recognition only needs to keep up with maybe 100–150 words per minute (maybe a bit more if someone is a fast talker). We wanted to let the various GPUs stretch their legs a bit and show just how fast they can go.

There are a few options for running Whisper, on Windows or otherwise. Of course there's the OpenAI GitHub (instructions and details below). There's also this Const-Me project, WhisperDesktop, which is a Windows executable written in C++. That uses DirectCompute rather than PyTorch, which means it will run on any DirectX 11 compatible GPU, including things like Intel integrated graphics. It also means that it's not using special hardware like Nvidia's Tensor cores or Intel's XMX cores.

Getting WhisperDesktop running proved very easy, assuming you're willing to download and run someone's unsigned executable. (I was, though you can also try to compile the code yourself if you want.) Just grab WhisperDesktop.zip and extract it somewhere. Besides the EXE and DLL, you'll need one or more of the OpenAI models, which you can grab via the links from the application window. You'll need the GGML versions; we used ggml-medium.en.bin (1.42GiB) and ggml-large.bin (2.88GiB) for our testing.

You can do live speech recognition (there's about a 5–10 second delay, so it's not as nice as some of the commercial applications), or you can transcribe an audio file. We opted for the latter for our benchmarks. The transcription isn't perfect, even with the large model, but it's reasonably accurate and can finish way faster than any of us can type. Actually, that's underselling it, as even the slowest GPU we tested (Arc A380) managed over 700 words per minute. That's more than twice as fast as even the fastest typist in the world.

That's also using the medium language model, which will run on cards with 3GB or more VRAM; the large model requires maybe 5GB or more VRAM, at least with WhisperDesktop. Also, the large model is roughly half as fast. If you're planning to use the OpenAI version, note that the requirements are quite a bit higher: 5GB for the medium model and 10GB for the large model. Plan accordingly.

Whisper Test Setup

TOM'S HARDWARE TEST PC

- Intel Core i9-13900K
- MSI MEG Z790 Ace DDR5
- G.Skill Trident Z5 2x16GB DDR5-6600 CL34
- Sabrent Rocket 4 Plus-G 4TB
- be quiet! 1500W Dark Power Pro 12
- Cooler Master PL360 Flux
- Windows 11 Pro 64-bit
- Samsung Neo G8 32

GRAPHICS CARDS

- Nvidia RTX 4090
- Nvidia RTX 4080
- Nvidia RTX 4070 Ti
- Nvidia RTX 4070
- Nvidia RTX 30-Series
- AMD RX 7900 XTX
- AMD RX 7900 XT
- AMD RX 6000-Series
- Intel Arc A770 16GB
- Intel Arc A750
- Intel Arc A380

Our test PC is our standard GPU testing system, which comes with basically the highest possible performance parts (within reason). We did run a few tests on a slightly slower Core i9-12900K and found performance was only slightly lower, at least for WhisperDesktop, but we're not sure how far down the CPU stack you can go before it will really start to affect performance.

For our test input audio, we've grabbed an MP3 from the Asus RTX 4090 Unboxing that we posted last year. It's a 13 minute video, which is long enough to give the faster GPUs a chance to flex their muscle.

As noted above, we've run two different versions of the Whisper models, medium.en and large. We tested each card with multiple runs, discarding the first and using the highest of the remaining three runs. Then we converted the resulting time into words per minute: the medium.en model transcribed 1,570 words while the large model resulted in 1,580 words.

We also collected data on GPU power use while running the transcription, using an Nvidia PCAT v2 device. We start logging power use right before starting the transcription, and stop it right after the transcription is finished. The GPUs generally don't end up anywhere near 100% load during the workload, so power ends up being quite a bit below the GPUs' rated TGPs. Here are the results.
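For reference, the time-to-WPM conversion described above is simple arithmetic. The sketch below assumes "highest of the remaining runs" means the fastest (shortest) of the runs after the first; the function names and the run timings are made up for illustration and are not measured results.

```python
from typing import Sequence


def words_per_minute(word_count: int, seconds: float) -> float:
    """Convert a transcription time in seconds into words per minute."""
    return word_count * 60.0 / seconds


def best_run_wpm(word_count: int, run_seconds: Sequence[float]) -> float:
    """Drop the first (warm-up) run and score the fastest remaining run."""
    remaining = run_seconds[1:]
    return words_per_minute(word_count, min(remaining))


# Hypothetical timings in seconds for four runs of the medium.en model,
# which transcribed 1,570 words from the test clip.
runs = [40.0, 32.0, 31.4, 31.9]
print(round(best_run_wpm(1570, runs)))  # about 3,000 WPM for these made-up timings
```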

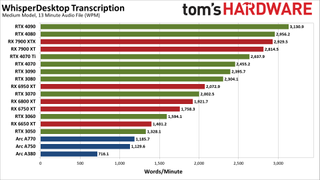

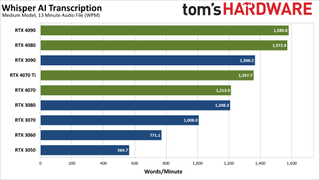

WhisperDesktop Medium Model, GPU Performance

Our base performance testing uses the medium model, which tends to be a bit less accurate overall. There are a few cases where it got the transcription "right" while the large model was incorrect, but there were far more cases where the reverse was true. We're probably getting close to CPU limits as well, or at least the scaling doesn't exactly match up with what we'd expect.

Nvidia's RTX 4090 and RTX 4080 take the top two spots, at just over and just under 3,000 words per minute transcribed, respectively. AMD's RX 7900 XTX and 7900 XT come next, followed by the RTX 4070 Ti and 4070, then the RTX 3090 and 3080. Then the RX 6950 XT comes in just ahead of the RTX 3070, which is definitely not the expected result.

If you check our GPU benchmarks hierarchy, looking just at rasterization performance, we'd expect the 6950 XT to place closer to the 3090 and 4070 Ti, with the 6800 XT close to the 3080 and 4070. The RX 6750 XT should also be ahead of the 3070, and the same goes for the 6650 XT and the 3060.

Meanwhile, Intel's Arc GPUs place at the bottom of the charts. Again, normally we'd expect the A770 and A750 to be a lot closer to the RTX 3060 in performance. Except, here we're looking at a different sort of workload than gaming, and potentially the Arc Alchemist architecture just doesn't handle this as well. It's also possible that there are simply driver inefficiencies at play. Let's see what happens with the more complex large model.

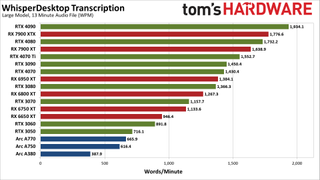

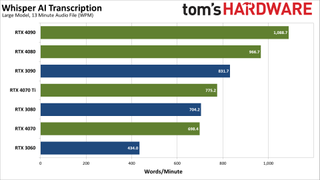

WhisperDesktop Large Model, GPU Performance

The large model definitely hits the GPU harder. Where the RTX 4090 was 2.36X faster than the RTX 3050 with the medium.en model, it's 2.7X faster using the large model. However, scaling is still nothing at all like we see in gaming benchmarks, where (at 1440p) the RTX 4090 is 3.7X faster than the RTX 3050. Some of the rankings change a bit as well, with the 7900 XTX placing just ahead of the RTX 4080 this time. The 6950 XT also edges past the 3080, 6800 XT moves ahead of the 3070, and the 6650 XT moves ahead of the 3060. Intel's Arc GPUs still fall below even the RTX 3050, however. It looks like the large L2 caches on the RTX 40-series GPUs help more here than in games. The gains aren't quite as big with the large model, but the 3080 for example usually beats the 4070 in gaming performance by a small amount, where here the 4070 takes the lead.

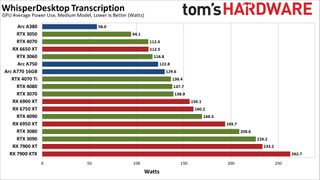

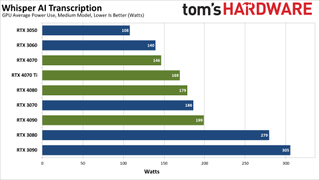

WhisperDesktop GPU Power Use and Efficiency

Finally, we've got power use while running the medium and large models. The large model requires more power on every GPU, though it's not a huge jump. The RTX 4090 for example uses just 6% more power. The biggest change we measured was with the RX 6650 XT, which used 14% more power. The RTX 4090 and RX 6950 XT increased power use by 10%, but some of the other GPUs only show a 1–3 percent delta.

One thing that's very clear is that the new Nvidia RTX 40-series GPUs are generally far more power efficient than their AMD counterparts. Conversely, the RTX 30-series (particularly 3080 and 3090) aren't quite as power friendly. AMD's new RX 7900 cards however end up being some of the biggest power users.

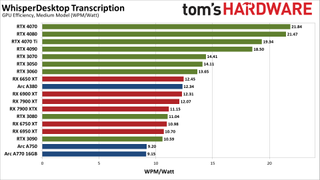

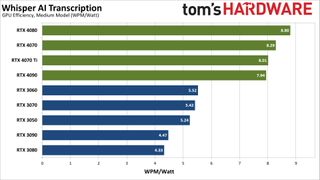

Converting to efficiency in words per minute per watt provides what is arguably the better view of the power and performance equation. The Ada Lovelace RTX 40-series cards end up being far more efficient than the competition. The lower tier Ampere RTX 30-series GPUs like the 3070, 3060, and 3050 come next, followed by a mix of RX 6000/7000 cards and the lone Arc A380. At the bottom of the charts, the Arc A750 and A770 are the least efficient overall, at least for this workload. RTX 3090, RX 6950 XT, RX 6750 XT, RTX 3080, and RX 7900 XTX are all relatively similar at 10.6–11.2 WPM/watt.
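The efficiency figure is just throughput divided by power draw. Here is a minimal sketch, assuming the logged power samples are averaged over the transcription run (the averaging step is our assumption; the sample values and the function name are hypothetical, not measured data).

```python
from statistics import mean
from typing import Sequence


def wpm_per_watt(wpm: float, power_samples_w: Sequence[float]) -> float:
    """Divide throughput (WPM) by the average logged power draw in watts."""
    return wpm / mean(power_samples_w)


# Hypothetical example: 3,000 WPM at an average draw of 250W.
samples = [240.0, 250.0, 260.0]
print(wpm_per_watt(3000.0, samples))  # 12.0 WPM/watt for these made-up numbers
```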

Whisper OpenAI GitHub Testing

For what will become obvious reasons, WhisperDesktop is the preferred way of using Whisper on PCs right now. Not only does it work with any reasonably modern GPU, but performance tends to be much better than the PyTorch version from OpenAI, at least right now. If you want to try the official repository, however, we've got some additional testing results.

Getting Whisper running on an Nvidia GPU is relatively straightforward. If you're using Linux, you should check these instructions for how to get the CUDA branch running via ROCm on AMD GPUs. For now, I have opted to skip jumping through hoops and am just sticking to Nvidia. (Probably just use WhisperDesktop for AMD and Intel.) Here are our steps for Nvidia GPUs, should you want to give it a shot.

1. Download and install Miniconda 64-bit for Windows. (We used the top link on that page, though others should suffice.)

2. Open a Miniconda prompt (from the Start Menu).

3. Create a new conda environment for Whisper.

4. Activate the environment.

5. Install a whole bunch of prerequisites.

Note: This will take at least several minutes, more depending on your CPU speed and internet bandwidth. It has to download and then compile a bunch of Python and other packages.

6. Download OpenAI's Whisper.

7. Transcribe an audio file, optionally specifying language, model, and device. We're using an Nvidia GPU with CUDA support, so our command is:

If you want a potentially better transcription using a bigger model, or if you want to transcribe other languages:

The first time you run a model, it will need to be downloaded. The medium.en model is 1.42GiB, while the large model is 2.87GiB. (The models are stored in %UserProfile%\.cache\Whisper, if you're wondering.) There's typically a several second delay (up to maybe 10–15 seconds, depending on your GPU) as Python gets ready to process the specified file, after which you should start seeing timestamps and transcriptions appear. When the task is finished, you'll also find plain text, json, SRT, TSV, and VTT versions of the source audio file, which can be used as subtitles if needed.
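If you'd rather drive the transcription in step 7 from a script, the command can be assembled in Python. This is a minimal sketch, assuming the `whisper` command-line tool from the OpenAI repository is installed and on your PATH. The `--model`, `--device`, and `--language` options belong to that tool; the helper names and the `audio.mp3` file name are placeholders of ours.

```python
import subprocess
from typing import List, Optional


def build_whisper_command(
    audio_path: str,
    model: str = "medium.en",
    device: str = "cuda",
    language: Optional[str] = None,
) -> List[str]:
    """Assemble the argument list for the whisper command-line tool."""
    cmd = ["whisper", audio_path, "--model", model, "--device", device]
    if language is not None:
        # Only pass a language when one is given; otherwise Whisper detects it.
        cmd += ["--language", language]
    return cmd


def transcribe(audio_path: str, **options) -> None:
    """Run the transcription; raises CalledProcessError if whisper fails."""
    subprocess.run(build_whisper_command(audio_path, **options), check=True)


# Show the command for a hypothetical file without actually running it.
print(" ".join(build_whisper_command("audio.mp3")))
```

Calling `transcribe("audio.mp3", model="large", language="en")` would then run the bigger model on the same placeholder file, provided the card has enough VRAM.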

Whisper PyTorch Testing on Nvidia GPUs

Our test PC is the same as above, but this time the CPU appears to be a bigger factor. We ran a quick test on an older Core i9-9900K with an RTX 4070 Ti and it took over twice as long to finish the same transcription as with the Core i9-13900K, so CPU bottlenecks are very much a reality with the faster Nvidia GPUs on the PyTorch version of Whisper. We should note here that we don't know precisely how all the calculations are being done in PyTorch. Are the models leveraging the tensor core hardware? Most likely not. Calculations could be using FP32 as well, which would be a pretty big hit to performance compared to FP16. In other words, there's probably a lot of room for additional optimizations. As before, we're running two different versions of the Whisper models, medium.en and large (large-v2). The 8GB cards in our test suite were unable to run the large model using PyTorch, and the larger model puts more of a load on the GPU, which means the CPU becomes a bit less of a factor. We created a script to measure the amount of time it took to transcribe the audio file, including the "startup" time. We also collected data on GPU power use while running the transcription, using an Nvidia PCAT v2 device. Here are the results.
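A timing wrapper along the following lines would capture the same thing, with startup time included because the clock starts before the process launches. This is an illustrative sketch, not the actual script used for testing; the function name is ours, and the example times a do-nothing Python process as a stand-in for the real transcription command.

```python
import subprocess
import sys
import time
from typing import Sequence


def time_command(cmd: Sequence[str]) -> float:
    """Run a command to completion and return wall-clock seconds elapsed.

    The timer starts before the process is launched, so interpreter
    startup and model loading count toward the total.
    """
    start = time.perf_counter()
    subprocess.run(list(cmd), check=True)
    return time.perf_counter() - start


# Stand-in command: a Python process that exits immediately.
# Swap in the real transcription command to time an actual run.
elapsed = time_command([sys.executable, "-c", "pass"])
print(f"Elapsed: {elapsed:.2f} seconds")
```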

Whisper PyTorch Medium Model, GPU Performance

Like we said, performance with the PyTorch version (at least using the installation instructions above) ends up being far lower than with WhisperDesktop. The fastest GPUs are about half the speed, dropping from over 3,000 WPM to a bit under 1,600 WPM. Similarly, the RTX 3050 went from 1,328 WPM to 565 WPM, so in some cases PyTorch is less than half as fast. We're hitting CPU limits as you can tell by how the RTX 4090 and RTX 4080 deliver essentially identical results. Yes, the 4090 is technically 0.5% faster, but that's margin of error. Moving down the chart, the RTX 3090 and RTX 4070 Ti are effectively tied, and the same goes for the RTX 4070 and RTX 3080. Each step is about 10–15 percent slower than the tier above it, until we get to the bottom two cards. The RTX 3060 is 24% slower than the RTX 3070, and then the RTX 3050 is 27% slower than the 3060. Let's see what happens with the more complex large model.
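The "percent slower" comparisons used throughout are computed from pairs of WPM results. A short sketch of that arithmetic, using the RTX 3050 figures quoted above (the function name is ours):

```python
def percent_slower(slow_wpm: float, fast_wpm: float) -> float:
    """Return how much slower slow_wpm is than fast_wpm, as a percentage."""
    return (1.0 - slow_wpm / fast_wpm) * 100.0


# RTX 3050: 1,328 WPM with WhisperDesktop versus 565 WPM with PyTorch.
print(round(percent_slower(565, 1328), 1))  # 57.5, i.e. less than half as fast
```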

Whisper PyTorch Large Model, GPU Performance

The large model increases the VRAM requirements to around 10GB with PyTorch, which means the RTX 3070 and RTX 3050 can't even try to run the transcription. Performance drops by about 40% on most of the GPUs, though the 4090 and 4080 see less of a drop due to the CPU limits. It's pretty wild to see how much faster the Const-Me C++ version runs. Maybe it all just comes down to having FP16 calculations (which are required for DX11 certification). Not only does it run faster, but we could even run the large model with a 6GB card, while the PyTorch code needs at least a 10GB card.

Whisper PyTorch, GPU Power and Efficiency

We only collected power use while running the medium model — mostly because we didn't think to collect power data until we had already started testing. Because we're hitting CPU limits on the fastest cards, it's no surprise that the 4090 and 4080 come in well below their rated TGP (Total Graphics Power). The 4090 needs just under 200W while the 4080 is a bit more efficient at less than 180W. Both GPUs also use more power with PyTorch than they did using WhisperDesktop, however. The RTX 3080 and 3090 even get somewhat close to their TGPs.

Converting to efficiency in words per minute per watt, the Ada Lovelace RTX 40-series cards are anywhere from 43% to 103% more efficient than the Ampere RTX 30-series GPUs. The faster GPUs of each generation tend to be slightly less efficient, except for the RTX 4080 that takes the top spot.

Again, it's clear the OpenAI GitHub source code hasn't been fully tuned for maximum performance, not even close. We can't help but wonder what performance might look like with compiled C++ code that actually uses Nvidia's tensor cores. Maybe there's a project out there that has already done that, but I wasn't able to find anything in my searching.

Whisper AI Closing Thoughts