Presentation API demos

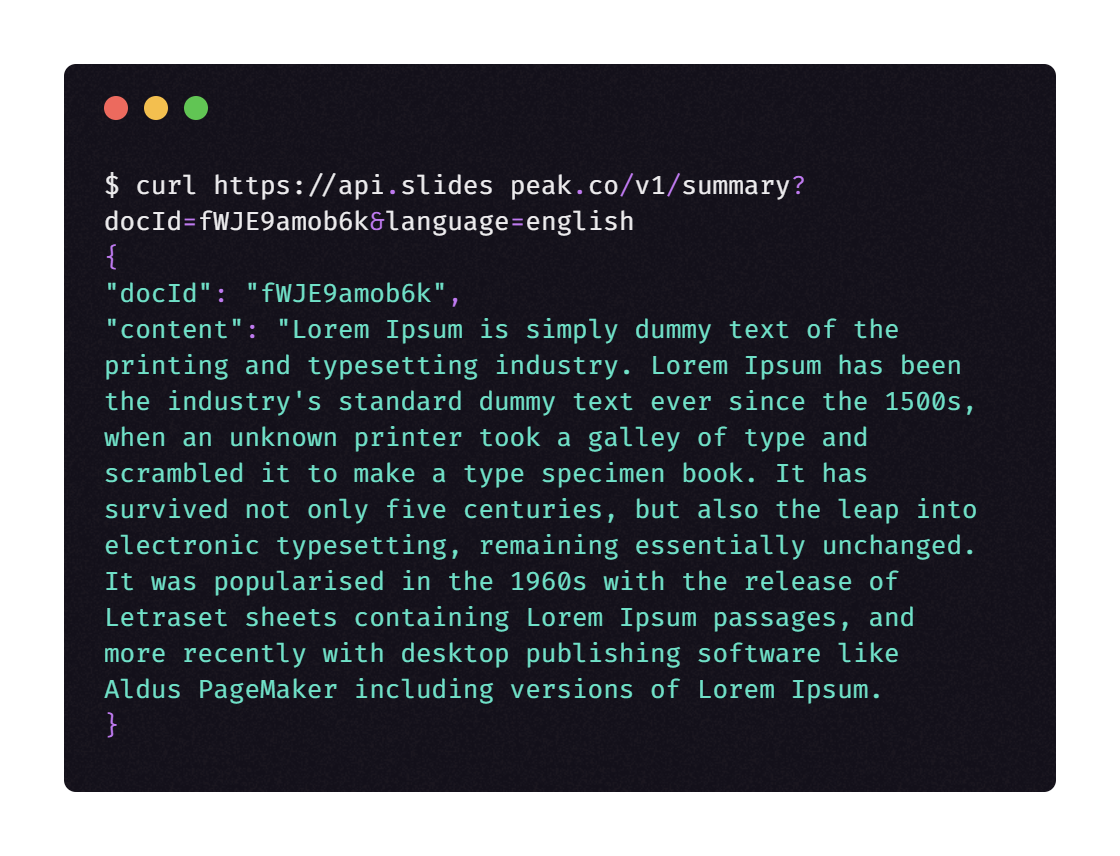

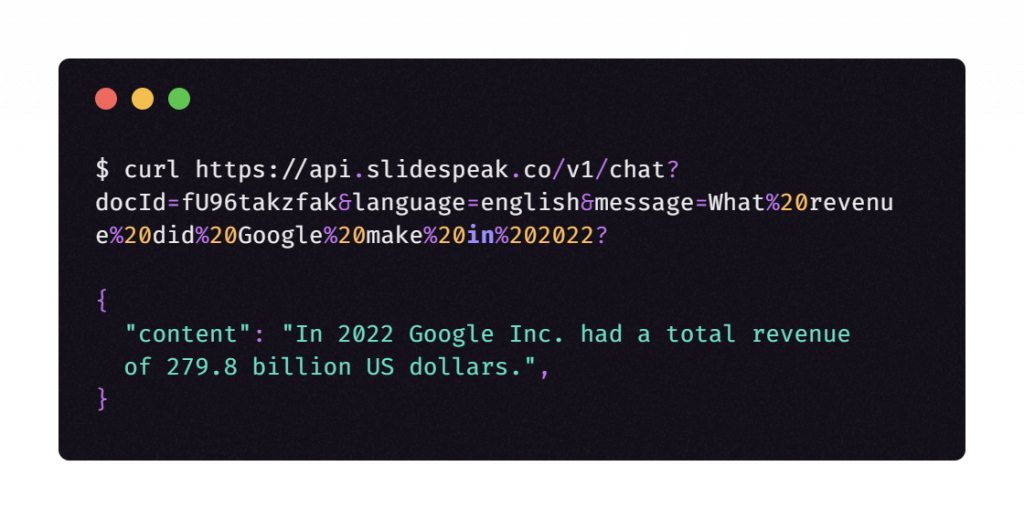

In the spirit of experimentation, the Second Screen Presentation Community Group has been working on a series of proof-of-concept demos for the Presentation API. The demos use custom browser builds or existing plug-ins to implement or emulate the Presentation API where available, and fall back to opening content in a separate browser window otherwise.

Except where otherwise noted, the source code of these demos is available on GitHub under the Second Screen Presentation Community Group organization.

Video sharing demo

The video sharing demo lets one present a video on a second screen.

Note that the demo does not present the video directly on the second screen. It rather presents an HTML video player and passes the URL of the video to play to that player afterwards. In particular, the video player is controlled through messages exchanged between the controlling and the presenting sides.
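The message-based control described above can be sketched as follows. This is only an illustration: the command names (`load`, `play`, `pause`, `seek`) and the JSON envelope are assumptions made for this example, not the format the demo actually uses.

```javascript
// Hypothetical command format; the demo's real messages may differ.
// Controlling side: serialize a command before sending it over the connection.
function encodeCommand(cmd, value) {
  return JSON.stringify({ cmd, value });
}

// Presenting side: apply a received command to an HTMLMediaElement-like object.
// Returns true when the command was recognized, false otherwise.
function applyCommand(player, data) {
  const { cmd, value } = JSON.parse(data);
  switch (cmd) {
    case "load":
      player.src = value; // URL of the video to play
      break;
    case "play":
      player.play();
      break;
    case "pause":
      player.pause();
      break;
    case "seek":
      player.currentTime = value; // position in seconds
      break;
    default:
      return false; // unknown commands are ignored
  }
  return true;
}
```

On the controlling side, a call such as `connection.send(encodeCommand("play"))` would pair with `applyCommand(videoElement, event.data)` in the presenting page's message handler.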

The demo supports second screens attached through a video link or a wireless equivalent, provided that the accompanying custom build of Chromium is used.

<video> sharing demo

As opposed to the first demo, the <video> sharing demo presents the video directly to a second screen. Control of the presented video is done from the controlling side using the usual HTMLMediaElement methods such as play(), pause() or fastSeek().

The demo supports second screens attached through a video link or a wireless equivalent, provided that the accompanying custom build of Chromium is used. The demo also supports Chromecast devices, provided that the Google Cast extension is available.

HTML Slidy remote

The HTML Slidy remote demo takes the URL of a slide show made with HTML Slidy as input and presents that slide show on a second screen, turning the first screen into a slide show remote.

FAMIUM Presentation API demos

The Competence Center Future Applications and Media (FAME) at Fraunhofer FOKUS offers different implementations of the Presentation API as part of FAMIUM, an end-to-end prototype implementation for early technology evaluation and interoperability testing introduced by FAME.

The implementations support virtual displays that can be opened in any Web browser, support Chromecast devices, and can turn Android and desktop devices into second screens. FAMIUM also features a prototype Web browser that implements the Presentation API and supports additional protocols such as WiDi, Miracast, and Network Service Discovery (mDNS/DNS-SD). The source code of these implementations is not yet available.

Presentation API

W3C Editor's Draft 23 August 2024

Copyright © 2024 World Wide Web Consortium . W3C ® liability , trademark and permissive document license rules apply.

This specification defines an API to enable Web content to access presentation displays and use them for presenting Web content.

Status of This Document

This section describes the status of this document at the time of its publication. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at https://www.w3.org/TR/.

This document was published by the Second Screen Working Group as an Editor's Draft.

Since publication as Candidate Recommendation on 01 June 2017 , the Working Group updated the steps to construct a PresentationRequest to ignore a URL with an unsupported scheme, placed further restrictions on how receiving browsing contexts are allowed to navigate themselves, and dropped the definition of the BinaryType enum in favor of the one defined in the HTML specification. Other interfaces defined in this document did not change other than to adjust to WebIDL updates. Various clarifications and editorial updates were also made. See the list of changes for details.

No feature has been identified as being at risk .

The Second Screen Working Group will refine the test suite for the Presentation API during the Candidate Recommendation period and update the preliminary implementation report . For this specification to advance to Proposed Recommendation, two independent, interoperable implementations of each feature must be demonstrated, as detailed in the Candidate Recommendation exit criteria section.

Publication as an Editor's Draft does not imply endorsement by W3C and its Members.

This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

This document was produced by a group operating under the W3C Patent Policy . W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy .

This document is governed by the 03 November 2023 W3C Process Document .

1. Introduction

This section is non-normative.

The Presentation API aims to make presentation displays such as projectors, attached monitors, and network-connected TVs available to the Web. It takes into account displays that are attached using wired (HDMI, DVI, or similar) and wireless technologies (Miracast, Chromecast, DLNA, AirPlay, or similar).

Devices with limited screen size lack the ability to show Web content to a larger audience: a group of colleagues in a conference room, or friends and family at home, for example. Web content shown on a larger presentation display has greater perceived quality, legibility, and impact.

At its core, the Presentation API enables a controller page to show a presentation page on a presentation display and exchange messages with it. How the presentation page is transmitted to the display and how messages are exchanged between it and the controller page are left to the implementation; this allows the use of a wide variety of display technologies.

For example, if the presentation display is connected by HDMI or Miracast, which only allow audio and video to be transmitted, the user agent (UA) hosting the controller will also render the presentation. It then uses the operating system to send the resulting graphical and audio output to the presentation display. We refer to this situation as the 1-UA mode implementation of the Presentation API. The only requirements are that the user agent is able to send graphics and audio from rendering the presentation to the presentation display, and exchange messages internally between the controller and presentation pages.

If the presentation display is able to render HTML natively and communicate with the controller via a network, the user agent hosting the controller does not need to render the presentation . Instead, the user agent acts as a proxy that requests the presentation display to load and render the presentation page itself. Message exchange is done over a network connection between the user agent and the presentation display. We refer to this situation as the 2-UA mode implementation of the Presentation API.

The Presentation API is intended to be used with user agents that attach to presentation displays in 1-UA mode, 2-UA mode, and possibly by other means not listed above. To improve interoperability between user agents and presentation displays, standardization of network communication between browsers and displays is being considered in the Second Screen Community Group.

2. Use cases and requirements

Use cases and requirements are captured in a separate Presentation API Use Cases and Requirements document.

3. Conformance

As well as sections marked as non-normative, all authoring guidelines, diagrams, examples, and notes in this specification are non-normative. Everything else in this specification is normative.

The key words MAY, MUST, MUST NOT, OPTIONAL, SHOULD, and SHOULD NOT in this document are to be interpreted as described in BCP 14 [ RFC2119 ] [ RFC8174 ] when, and only when, they appear in all capitals, as shown here.

Requirements phrased in the imperative as part of algorithms (such as "strip any leading space characters" or "return false and terminate these steps") are to be interpreted with the meaning of the key word (" MUST ", " SHOULD ", " MAY ", etc.) used in introducing the algorithm.

Conformance requirements phrased as algorithms or specific steps may be implemented in any manner, so long as the result is equivalent. (In particular, the algorithms defined in this specification are intended to be easy to follow, and not intended to be performant.)

3.1 Conformance classes

This specification describes the conformance criteria for two classes of user agents .

Web browsers that conform to the specifications of a controlling user agent must be able to start and control presentations by providing a controlling browsing context as described in this specification. This context implements the Presentation , PresentationAvailability , PresentationConnection , PresentationConnectionAvailableEvent , PresentationConnectionCloseEvent , and PresentationRequest interfaces.

Web browsers that conform to the specifications of a receiving user agent must be able to render presentations by providing a receiving browsing context as described in this specification. This context implements the Presentation , PresentationConnection , PresentationConnectionAvailableEvent , PresentationConnectionCloseEvent , PresentationConnectionList , and PresentationReceiver interfaces.

One user agent may act both as a controlling user agent and as a receiving user agent , if it provides both browsing contexts and implements all of their required interfaces. This can happen when the same user agent is able to host the controlling browsing context and the receiving browsing context for a presentation, as in the 1-UA mode implementation of the API.

Conformance requirements phrased against a user agent apply either to a controlling user agent , a receiving user agent or to both classes, depending on the context.

4. Terminology

The terms JavaScript realm and current realm are used as defined in [ ECMASCRIPT ]. The terms resolved and rejected in the context of Promise objects are used as defined in [ ECMASCRIPT ].

The terms Accept-Language and HTTP authentication are used as defined in [ RFC9110 ].

The term cookie store is used as defined in [ RFC6265 ].

The term UUID is used as defined in [ RFC4122 ].

The term DIAL is used as defined in [ DIAL ].

The term reload a document refers to steps run when the reload() method gets called in [ HTML ].

The term local storage area refers to the storage areas exposed by the localStorage attribute, and the term session storage area refers to the storage areas exposed by the sessionStorage attribute in [ HTML ].

This specification references terms exported by other specifications, see B.2 Terms defined by reference . It also references the following internal concepts from other specifications:

- parse a url , defined in HTML [ HTML ]

- creating a new browsing context , defined in HTML [ HTML ]

- session history , defined in HTML [ HTML ]

- allowed to navigate , defined in HTML [ HTML ]

- navigating to a fragment identifier , defined in HTML [ HTML ]

- unload a document , defined in HTML [ HTML ]

- database , defined in Indexed Database API [ INDEXEDDB ]

5. Examples

This section shows example code that highlights the use of the main features of the Presentation API. In these examples, controller.html implements the controller and presentation.html implements the presentation. Both pages are served from the domain https://example.org ( https://example.org/controller.html and https://example.org/presentation.html ). These examples assume that the controlling page is managing one presentation at a time. Please refer to the comments in the code examples for further details.

5.1 Monitoring availability of presentation displays

This code renders a button that is visible when there is at least one compatible presentation display that can present https://example.com/presentation.html or https://example.net/alternate.html .

Monitoring of display availability is done by first creating a PresentationRequest with the URLs you want to present, then calling getAvailability to obtain a PresentationAvailability object whose change event will fire when presentation availability changes state.
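A minimal sketch of this pattern is shown below. The helper name `watchAvailability` and the use of a `hidden` property on the button are assumptions made for illustration; `getAvailability`, `value`, and the `change` event handler come from the API described here.

```javascript
// `request` is a PresentationRequest (or a compatible stand-in).
// `button` is any element-like object with a `hidden` property.
async function watchAvailability(request, button) {
  button.hidden = true; // hide until availability is known
  try {
    const availability = await request.getAvailability();
    const update = () => {
      button.hidden = !availability.value;
    };
    update(); // reflect the initial state
    availability.onchange = update; // follow later changes
    return availability;
  } catch (error) {
    // The promise may be rejected when background monitoring is unsupported.
    // Show the button anyway: displays can still be discovered by start().
    button.hidden = false;
    return null;
  }
}

// Browser usage (not executed here):
// const request = new PresentationRequest([
//   "https://example.com/presentation.html",
//   "https://example.net/alternate.html",
// ]);
// watchAvailability(request, document.getElementById("presentBtn"));
```

Passing the request and button as parameters keeps the helper independent of the page's markup.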

5.2 Starting a new presentation

When the user clicks presentBtn , this code requests presentation of one of the URLs in the PresentationRequest . When start is called, the browser typically shows a dialog that allows the user to select one of the compatible displays that are available. The first URL in the PresentationRequest that is compatible with the chosen display will be presented on that display.

The start method resolves with a PresentationConnection object that is used to track the state of the presentation, and exchange messages with the presentation page once it's loaded on the display.
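As a rough sketch, starting a presentation from a click handler could look like this. The helper name `startPresentation` and the `onConnection` callback are hypothetical; only `start` and its promise-based result come from the API.

```javascript
// Call this from a user gesture (for example a click handler), since
// start() is rejected when the window lacks transient activation.
async function startPresentation(request, onConnection) {
  try {
    const connection = await request.start();
    onConnection(connection); // hand the PresentationConnection to the page
    return connection;
  } catch (error) {
    // Rejections include the user denying permission and no compatible
    // display being found; this sketch treats them all as "not started".
    return null;
  }
}

// Browser usage (not executed here):
// presentBtn.onclick = () => startPresentation(request, setConnection);
```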

5.3 Reconnecting to an existing presentation

The presentation continues to run even after the original page that started the presentation closes its PresentationConnection , navigates, or is closed. Another page can use the id on the PresentationConnection to reconnect to an existing presentation and resume control of it. This is only guaranteed to work from the same browser that started the presentation.
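One possible way to do this is to persist the connection id and pass it to `reconnect` later. In the sketch below, the storage key `presId` and both helper names are assumptions; `storage` is any object with the `getItem`, `setItem`, and `removeItem` methods of Web Storage, such as `localStorage`.

```javascript
const STORAGE_KEY = "presId"; // hypothetical key name

// Remember the id of a connection so that another page can reconnect later.
function rememberConnection(storage, connection) {
  storage.setItem(STORAGE_KEY, connection.id);
}

// Try to resume control of a previously started presentation.
// Resolves with the PresentationConnection, or null when none can be resumed.
async function reconnectPresentation(request, storage) {
  const id = storage.getItem(STORAGE_KEY);
  if (!id) {
    return null; // nothing was started from this browser profile
  }
  try {
    return await request.reconnect(id);
  } catch (error) {
    // The presentation is gone or unreachable; forget the stale id.
    storage.removeItem(STORAGE_KEY);
    return null;
  }
}
```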

5.4 Starting a presentation by the controlling user agent

Some browsers have a way for users to start a presentation without interacting directly with the controlling page. Controlling pages can opt into this behavior by setting the defaultRequest property on navigator.presentation , and listening for a connectionavailable event that is fired when a presentation is started this way. The PresentationConnection passed with the event behaves the same as if the page had called start .
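Opting in might look like the following sketch. The helper name `enableDefaultRequest` and the `onConnection` callback are hypothetical; `presentation` stands for `navigator.presentation`.

```javascript
// Register `request` as the default presentation request and route
// browser-initiated connections to the same callback used for start().
function enableDefaultRequest(presentation, request, onConnection) {
  presentation.defaultRequest = request;
  request.onconnectionavailable = (event) => {
    onConnection(event.connection);
  };
}

// Browser usage (not executed here):
// enableDefaultRequest(navigator.presentation, request, setConnection);
```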

5.5 Monitoring the connection state and exchanging data

Once a presentation has started, the returned PresentationConnection is used to monitor its state and exchange messages with it. Typically the user will be given the choice to disconnect from or terminate the presentation from the controlling page.

Since the controlling page may connect to and disconnect from multiple presentations during its lifetime, it's helpful to keep track of the current PresentationConnection and its state. Messages can only be sent and received on connections in a connected state.
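A small tracker along these lines is sketched below. The factory name `createConnectionTracker`, its `log` callback, and the returned object's shape are assumptions for illustration; the event handlers and the `state`, `send`, and `close` members belong to PresentationConnection.

```javascript
// Keeps track of the single connection the page is currently controlling.
function createConnectionTracker(log = () => {}) {
  let current = null;

  function setConnection(connection) {
    // Disconnect from the previous presentation before tracking a new one.
    if (current && current !== connection && current.state !== "closed") {
      current.close();
    }
    current = connection;
    connection.onconnect = () => log("connected");
    connection.onmessage = (event) => log(`message: ${event.data}`);
    const forget = (label) => () => {
      log(label);
      if (current === connection) {
        current = null;
      }
    };
    connection.onclose = forget("closed");
    connection.onterminate = forget("terminated");
  }

  // Messages can only be sent on a connection in the connected state.
  function send(text) {
    if (!current || current.state !== "connected") {
      return false;
    }
    current.send(text);
    return true;
  }

  return {
    setConnection,
    send,
    get current() {
      return current;
    },
  };
}
```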

5.6 Listening for incoming presentation connections

This code runs on the presented page ( https://example.org/presentation.html ). Presentations may be connected to from multiple controlling pages, so it's important that the presented page listen for incoming connections on the connectionList object.
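A sketch of the receiving side follows. The helper name `listenForControllers` and the `onMessage` callback are hypothetical; `presentation` stands for `navigator.presentation` in the presented page.

```javascript
// Attach a message handler to every controller, both those already
// connected and those that connect later.
async function listenForControllers(presentation, onMessage) {
  const list = await presentation.receiver.connectionList;
  const addConnection = (connection) => {
    connection.onmessage = (event) => onMessage(connection, event.data);
  };
  list.connections.forEach(addConnection);
  list.onconnectionavailable = (event) => addConnection(event.connection);
  return list;
}

// Browser usage (not executed here):
// listenForControllers(navigator.presentation, (connection, data) => {
//   connection.send(`received: ${data}`);
// });
```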

5.7 Passing locale information with a message

5.8 Creating a second presentation from the same controlling page

It's possible for a controlling page to start and control two independent presentations on two different presentation displays. This code shows how a second presentation can be added to the first one in the examples above.

6.1 Common idioms

A presentation display refers to a graphical and/or audio output device available to the user agent via an implementation specific connection technology.

A presentation connection is an object relating a controlling browsing context to its receiving browsing context and enables two-way-messaging between them. Each presentation connection has a presentation connection state , a unique presentation identifier to distinguish it from other presentations , and a presentation URL that is a URL used to create or reconnect to the presentation . A valid presentation identifier consists of alphanumeric ASCII characters only and is at least 16 characters long.
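The validity rule for identifiers stated above can be expressed as a short check. The function name is an assumption made for this sketch.

```javascript
// A valid presentation identifier consists of alphanumeric ASCII
// characters only and is at least 16 characters long.
function isValidPresentationId(id) {
  return typeof id === "string" && /^[A-Za-z0-9]{16,}$/.test(id);
}
```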

Some presentation displays may only be able to display a subset of Web content because of functional, security or hardware limitations. Examples are set-top boxes, smart TVs, or networked speakers capable of rendering only audio. We say that such a display is an available presentation display for a presentation URL if the controlling user agent can reasonably guarantee that presentation of the URL on that display will succeed.

A controlling browsing context (or controller for short) is a browsing context that has connected to a presentation by calling start or reconnect , or received a presentation connection via a connectionavailable event. In algorithms for PresentationRequest , the controlling browsing context is the browsing context whose JavaScript realm was used to construct the PresentationRequest .

The receiving browsing context (or presentation for short) is the browsing context responsible for rendering to a presentation display . A receiving browsing context can reside in the same user agent as the controlling browsing context or a different one. A receiving browsing context is created by following the steps to create a receiving browsing context .

In a procedure, the destination browsing context is the receiving browsing context when the procedure is initiated at the controlling browsing context , or the controlling browsing context if it is initiated at the receiving browsing context .

The set of controlled presentations , initially empty, contains the presentation connections created by the controlling browsing contexts for the controlling user agent (or a specific user profile within that user agent). The set of controlled presentations is represented by a list of PresentationConnection objects that represent the underlying presentation connections . Several PresentationConnection objects may share the same presentation URL and presentation identifier in that set, but there can be only one PresentationConnection with a specific presentation URL and presentation identifier for a given controlling browsing context .

The set of presentation controllers , initially empty, contains the presentation connections created by a receiving browsing context for the receiving user agent . The set of presentation controllers is represented by a list of PresentationConnection objects that represent the underlying presentation connections . All presentation connections in this set share the same presentation URL and presentation identifier .

In a receiving browsing context , the presentation controllers monitor , initially set to null , exposes the current set of presentation controllers to the receiving application. The presentation controllers monitor is represented by a PresentationConnectionList .

In a receiving browsing context , the presentation controllers promise , which is initially set to null , provides the presentation controllers monitor once the initial presentation connection is established. The presentation controllers promise is represented by a Promise that resolves with the presentation controllers monitor .

In a controlling browsing context , the default presentation request , which is initially set to null , represents the request to use when the user wishes to initiate a presentation connection from the browser chrome.

The task source for the tasks mentioned in this specification is the presentation task source .

Unless otherwise specified, the JavaScript realm for script objects constructed by algorithm steps is the current realm .

6.2 Interface Presentation

The presentation attribute is used to retrieve an instance of the Presentation interface. It MUST return the Presentation instance.

6.2.1 Controlling user agent

Controlling user agents MUST implement the following partial interface:

The defaultRequest attribute MUST return the default presentation request if any, null otherwise. On setting, the default presentation request MUST be set to the new value.

The controlling user agent SHOULD initiate presentation using the default presentation request only when the user has expressed an intention to do so via a user gesture, for example by clicking a button in the browser chrome.

To initiate presentation using the default presentation request , the controlling user agent MUST follow the steps to start a presentation from a default presentation request .

Support for initiating a presentation using the default presentation request is OPTIONAL .

6.2.2 Receiving user agent

Receiving user agents MUST implement the following partial interface:

The receiver attribute MUST return the PresentationReceiver instance associated with the receiving browsing context and created by the receiving user agent when the receiving browsing context is created . In any other browsing context (including child navigables of the receiving browsing context ) it MUST return null .

Web developers can use navigator.presentation.receiver to detect when a document is loaded as a presentation.
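For instance, a page that can run both standalone and as a presentation might branch on a check like the one below. The function name is hypothetical, and `nav` stands for the global `navigator` object.

```javascript
// True when the document was loaded as a presentation, that is, when
// navigator.presentation.receiver is a PresentationReceiver instead of null.
function isLoadedAsPresentation(nav) {
  return Boolean(nav && nav.presentation && nav.presentation.receiver);
}

// Browser usage (not executed here):
// if (isLoadedAsPresentation(navigator)) { /* set up the receiving side */ }
```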

6.3 Interface PresentationRequest

A PresentationRequest object is associated with a request to initiate or reconnect to a presentation made by a controlling browsing context . The PresentationRequest object MUST be implemented in a controlling browsing context provided by a controlling user agent .

When a PresentationRequest is constructed, the given urls MUST be used as the list of presentation request URLs which are each a possible presentation URL for the PresentationRequest instance.

6.3.1 Constructing a PresentationRequest

When the PresentationRequest constructor is called, the controlling user agent MUST run these steps:

- If the document object's active sandboxing flag set has the sandboxed presentation browsing context flag set, then throw a SecurityError and abort these steps.

- If urls is an empty sequence, then throw a NotSupportedError and abort all remaining steps.

- If a single url was provided, let urls be a one item array containing url .

- Let presentationUrls be an empty list of URLs.

- For each URL U in urls :

  - Let A be an absolute URL that is the result of parsing U relative to the API base URL specified by the current settings object .

  - If the parse a URL algorithm failed, then throw a SyntaxError exception and abort all remaining steps.

  - If A 's scheme is supported by the controlling user agent , add A to presentationUrls .

- If presentationUrls is an empty list, then throw a NotSupportedError and abort all remaining steps.

- If any member of presentationUrls is not a potentially trustworthy URL , then throw a SecurityError and abort these steps.

- Construct a new PresentationRequest object with presentationUrls as its presentation request URLs and return it.
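The URL handling in these steps can be approximated outside a browser as shown below. This is a sketch under several assumptions: the function and helper names are invented, the sandboxing check is omitted, errors are plain `Error` objects carrying the exception name, the set of supported schemes is a parameter, and "potentially trustworthy" is simplified to "uses the https scheme".

```javascript
// Build an Error whose `name` mirrors the exception named in the steps.
function namedError(name, message) {
  const error = new Error(message);
  error.name = name;
  return error;
}

// Returns the list of absolute presentation URLs for the given input.
function collectPresentationUrls(urls, baseUrl, supportedSchemes = ["https:"]) {
  if (Array.isArray(urls) && urls.length === 0) {
    throw namedError("NotSupportedError", "urls is an empty sequence");
  }
  const list = Array.isArray(urls) ? urls : [urls]; // single url case
  const presentationUrls = [];
  for (const u of list) {
    let absolute;
    try {
      absolute = new URL(u, baseUrl);
    } catch {
      throw namedError("SyntaxError", `cannot parse ${u}`);
    }
    // URLs with unsupported schemes are ignored instead of rejected.
    if (supportedSchemes.includes(absolute.protocol)) {
      presentationUrls.push(absolute.href);
    }
  }
  if (presentationUrls.length === 0) {
    throw namedError("NotSupportedError", "no URL with a supported scheme");
  }
  // Simplification: only https URLs are treated as potentially trustworthy.
  if (presentationUrls.some((href) => new URL(href).protocol !== "https:")) {
    throw namedError("SecurityError", "URL is not potentially trustworthy");
  }
  return presentationUrls;
}
```

Note that a URL with an unsupported scheme is skipped silently, so a request only fails with NotSupportedError when none of its URLs is usable.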

6.3.2 Selecting a presentation display

When the start method is called, the user agent MUST run the following steps to select a presentation display .

- If the document's active window does not have transient activation , return a Promise rejected with an InvalidAccessError exception and abort these steps.

- Let topContext be the top-level browsing context of the controlling browsing context .

- If there is already an unsettled Promise from a previous call to start in topContext or any browsing context in the descendant navigables of topContext , return a new Promise rejected with an OperationError exception and abort all remaining steps.

- Let P be a new Promise .

- Return P , but continue running these steps in parallel .

- If the user agent is not monitoring the list of available presentation displays , run the steps to monitor the list of available presentation displays in parallel .

- Let presentationUrls be the presentation request URLs of presentationRequest .

- Request user permission for the use of a presentation display and selection of one presentation display.

- If the list of available presentation displays is empty and will remain so before the request for user permission is completed, or if no member in the list of available presentation displays is an available presentation display for any member of presentationUrls , reject P with a NotFoundError exception and abort all remaining steps.

- If the user denies permission to use a display, reject P with a NotAllowedError exception, and abort all remaining steps.

- Otherwise, the user grants permission to use a display; let D be that display.

- Run the steps to start a presentation connection with presentationRequest , D , and P .

6.3.3 Starting a presentation from a default presentation request

When the user expresses an intent to start presentation of a document on a presentation display using the browser chrome (via a dedicated button, user gesture, or other signal), that user agent MUST run the following steps to start a presentation from a default presentation request . If no default presentation request is set on the document, these steps MUST NOT be run.

- If there is no presentation request URL for presentationRequest for which D is an available presentation display , then abort these steps.

- Run the steps to start a presentation connection with presentationRequest and D .

6.3.4 Starting a presentation connection

When the user agent is to start a presentation connection , it MUST run the following steps:

- Let I be a new valid presentation identifier unique among all presentation identifiers for known presentation connections in the set of controlled presentations . To avoid fingerprinting, implementations SHOULD set the presentation identifier to a UUID generated by following forms 4.4 or 4.5 of [ RFC4122 ].

- Create a new PresentationConnection S .

- Set the presentation identifier of S to I .

- Set the presentation URL for S to the first presentationUrl in presentationUrls for which there exists an entry (presentationUrl, D) in the list of available presentation displays .

- Set the presentation connection state of S to connecting .

- Add S to the set of controlled presentations .

- If P is provided, resolve P with S .

- Queue a task to fire an event named connectionavailable , that uses the PresentationConnectionAvailableEvent interface, with the connection attribute initialized to S , at presentationRequest . The event must not bubble and must not be cancelable.

- Let U be the user agent connected to D.

- If the next step fails, abort all remaining steps and close the presentation connection S with error as closeReason , and a human readable message describing the failure as closeMessage .

- Using an implementation specific mechanism, tell U to create a receiving browsing context with D , presentationUrl , and I as parameters.

- Establish a presentation connection with S .
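The URL selection in these steps (the first presentationUrl with a matching entry for display D) can be sketched as follows. The function name is hypothetical, and the list of available presentation displays is modeled as an array of `[availabilityUrl, display]` pairs, which is an assumption about representation only.

```javascript
// Returns the first URL in presentationUrls for which the pair
// (url, display) exists in availableDisplays, or null when there is none.
function pickPresentationUrl(presentationUrls, display, availableDisplays) {
  const match = presentationUrls.find((url) =>
    availableDisplays.some(
      ([availabilityUrl, candidate]) =>
        availabilityUrl === url && candidate === display
    )
  );
  return match || null;
}
```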

6.3.5 Reconnecting to a presentation

When the reconnect method is called, the user agent MUST run the following steps to reconnect to a presentation:

- Return P , but continue running these steps in parallel.

- Its controlling browsing context is the current browsing context

- Its presentation connection state is not terminated

- Its presentation URL is equal to one of the presentation request URLs of presentationRequest

- Its presentation identifier is equal to presentationId

- Let existingConnection be that PresentationConnection .

- Resolve P with existingConnection .

- If the presentation connection state of existingConnection is connecting or connected , then abort all remaining steps.

- Set the presentation connection state of existingConnection to connecting .

- Establish a presentation connection with existingConnection .

- Its controlling browsing context is not the current browsing context

- Create a new PresentationConnection newConnection .

- Set the presentation identifier of newConnection to presentationId .

- Set the presentation URL of newConnection to the presentation URL of existingConnection .

- Set the presentation connection state of newConnection to connecting .

- Add newConnection to the set of controlled presentations .

- Resolve P with newConnection .

- Queue a task to fire an event named connectionavailable , that uses the PresentationConnectionAvailableEvent interface, with the connection attribute initialized to newConnection , at presentationRequest . The event must not bubble and must not be cancelable.

- Establish a presentation connection with newConnection .
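The matching criteria listed in these steps can be sketched as a lookup over the set of controlled presentations. Everything about the data model here is an assumption: connections are plain records with `context`, `state`, `url`, and `id` fields, and the second search is assumed to apply the same state, URL, and identifier criteria as the first.

```javascript
// Finds a connection that reconnect() could resume.
// Returns { connection, reuse: true } when the current browsing context
// already owns a match, { connection, reuse: false } when only another
// context does (a new connection would then be created), or null.
function findReconnectable(controlled, currentContext, requestUrls, presentationId) {
  const matches = (c) =>
    c.state !== "terminated" &&
    requestUrls.includes(c.url) &&
    c.id === presentationId;

  const own = controlled.find((c) => c.context === currentContext && matches(c));
  if (own) {
    return { connection: own, reuse: true };
  }
  const other = controlled.find((c) => c.context !== currentContext && matches(c));
  return other ? { connection: other, reuse: false } : null;
}
```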

6.3.6 Event Handlers

The following are the event handlers (and their corresponding event handler event types) that must be supported, as event handler IDL attributes, by objects implementing the PresentationRequest interface:

| Event handler | Event handler event type |
|---|---|
| onconnectionavailable | connectionavailable |

6.4 Interface PresentationAvailability

A PresentationAvailability object exposes the presentation display availability for a presentation request. The presentation display availability for a PresentationRequest stores whether there is currently any available presentation display for at least one of the presentation request URLs of the request.

The presentation display availability for a presentation request is eligible for garbage collection when no ECMAScript code can observe the PresentationAvailability object.

If the controlling user agent can monitor the list of available presentation displays in the background (without a pending request to start ), the PresentationAvailability object MUST be implemented in a controlling browsing context .

The value attribute MUST return the last value it was set to. The value is initialized and updated by the monitor the list of available presentation displays algorithm.

The onchange attribute is an event handler whose corresponding event handler event type is change .

6.4.1 The set of presentation availability objects

The user agent MUST keep track of the set of presentation availability objects created by the getAvailability method. The set of presentation availability objects is represented as a set of tuples ( A , availabilityUrls ) , initially empty, where:

- A is a live PresentationAvailability object.

- availabilityUrls is the list of presentation request URLs for the PresentationRequest when getAvailability was called on it to create A .

6.4.2 The list of available presentation displays

The user agent MUST keep a list of available presentation displays . The list of available presentation displays is represented by a list of tuples (availabilityUrl, display) . An entry in this list means that display is currently an available presentation display for availabilityUrl . This list of presentation displays may be used for starting new presentations, and is populated based on an implementation specific discovery mechanism. It is set to the most recent result of the algorithm to monitor the list of available presentation displays .
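Given this representation, the availability value for a request can be derived with a simple lookup. The sketch below models the list as an array of `[availabilityUrl, display]` pairs; the function name and that representation are assumptions.

```javascript
// True when at least one of the request's URLs has an available
// presentation display in the list of (availabilityUrl, display) tuples.
function hasAvailableDisplay(availabilityUrls, availableDisplays) {
  return availableDisplays.some(([availabilityUrl]) =>
    availabilityUrls.includes(availabilityUrl)
  );
}
```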

While the set of presentation availability objects is not empty, the user agent MAY monitor the list of available presentation displays continuously, so that pages can use the value property of a PresentationAvailability object to offer presentation only when there are available displays. However, the user agent may not support continuous availability monitoring in the background; for example, because of platform or power consumption restrictions. In this case the Promise returned by getAvailability is rejected , and the algorithm to monitor the list of available presentation displays will only run as part of the select a presentation display algorithm.

When the set of presentation availability objects is empty (that is, there are no availabilityUrls being monitored), user agents SHOULD NOT monitor the list of available presentation displays to satisfy the power saving non-functional requirement . To further save power, the user agent MAY also keep track of whether a page holding a PresentationAvailability object is in the foreground. Using this information, implementation specific discovery of presentation displays can be resumed or suspended.

6.4.3 Getting the presentation displays availability information

When the getAvailability method is called, the user agent MUST run the following steps:

- If there is an unsettled Promise from a previous call to getAvailability on presentationRequest , return that Promise and abort these steps.

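- Otherwise, let P be a new Promise constructed in the JavaScript realm of presentationRequest.

- Return P, but continue running these steps in parallel.

- If the user agent is unable to continuously monitor the list of available presentation displays but can find presentation displays in order to start a connection, then:

  - Reject P with a NotSupportedError exception.

  - Abort all the remaining steps.

- If the presentation display availability for presentationRequest is not null, then:

  - Resolve P with the request's presentation display availability.

  - Abort all the remaining steps.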

- Set the presentation display availability for presentationRequest to a newly created PresentationAvailability object constructed in the JavaScript realm of presentationRequest , and let A be that object.

- Create a tuple ( A , presentationUrls ) and add it to the set of presentation availability objects .

- Run the algorithm to monitor the list of available presentation displays.

  Note: The monitoring algorithm must be run at least one more time after the previous step to pick up the tuple that was added to the set of presentation availability objects.

- Resolve P with A .

6.4.4 Monitoring the list of available presentation displays

If the set of presentation availability objects is non-empty, or there is a pending request to select a presentation display , the user agent MUST monitor the list of available presentation displays by running the following steps:

- Let availabilitySet be a shallow copy of the set of presentation availability objects.

- If there is a pending request to select a presentation display, then:

  - Let A be a newly created PresentationAvailability object.

  - Create a tuple (A, presentationUrls) where presentationUrls is the PresentationRequest's presentation request URLs and add it to availabilitySet.

- Let newDisplays be an empty list.

- If the user agent is unable to retrieve presentation displays (e.g., because the user has disabled this capability), then skip the following step.

- Retrieve presentation displays (using an implementation specific mechanism) and set newDisplays to this list.

- Set the list of available presentation displays to the empty list.

- For each member (A, availabilityUrls) of availabilitySet:

  - Set previousAvailability to the value of A's value property.

  - Let newAvailability be false.

  - For each availabilityUrl in availabilityUrls and each display in newDisplays, if display is an available presentation display for availabilityUrl, then:

    - Insert a tuple (availabilityUrl, display) into the list of available presentation displays, if no identical tuple already exists.

    - Set newAvailability to true.

  - If A's value property has not yet been initialized, then set A's value property to newAvailability and skip the following step.

  - If previousAvailability is not equal to newAvailability, then:

    - Set A's value property to newAvailability.

    - Fire an event named change at A.
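The monitoring steps above can be read as one pass over the tracked availability objects. The following is a minimal sketch of that pass, not the normative algorithm. It assumes the steps loop over every (A, availabilityUrls) tuple and every retrieved display, and that a change event is only fired when the new value differs from the previous one. The names monitorPass, AvailabilityModel, AvailabilityEntry and isCompatible are hypothetical and do not appear in the specification.

```typescript
// Hypothetical model types; none of these names come from the specification's IDL.
type Display = string;

interface AvailabilityModel {
  // undefined stands for "value property has not yet been initialized".
  value: boolean | undefined;
}

interface AvailabilityEntry {
  availability: AvailabilityModel;
  urls: string[];
}

interface MonitorResult {
  availableDisplays: Array<[string, Display]>;
  changed: AvailabilityModel[];
}

function monitorPass(
  entries: AvailabilityEntry[],
  newDisplays: Display[],
  // Assumption: compatibility is decided by an implementation specific check.
  isCompatible: (url: string, display: Display) => boolean
): MonitorResult {
  const availableDisplays: Array<[string, Display]> = [];
  const changed: AvailabilityModel[] = [];

  for (const entry of entries) {
    const previous = entry.availability.value;
    let next = false;

    for (const url of entry.urls) {
      for (const display of newDisplays) {
        if (!isCompatible(url, display)) continue;
        // Insert the tuple only if no identical tuple already exists.
        const known = availableDisplays.some(([u, d]) => u === url && d === display);
        if (!known) availableDisplays.push([url, display]);
        next = true;
      }
    }

    if (previous === undefined) {
      // First run for this object: initialize silently, no event.
      entry.availability.value = next;
    } else if (previous !== next) {
      entry.availability.value = next;
      // A change event would be fired at this object.
      changed.push(entry.availability);
    }
  }

  return { availableDisplays, changed };
}
```

In this model a freshly created availability object is initialized without an event, while an object whose value flips is returned in changed so that the caller can dispatch the change event.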

When a presentation display availability object is eligible for garbage collection, the user agent SHOULD run the following steps:

- Let A be the newly deceased PresentationAvailability object.

- Find and remove any entry ( A , availabilityUrl ) in the set of presentation availability objects .

- If the set of presentation availability objects is now empty and there is no pending request to select a presentation display , cancel any pending task to monitor the list of available presentation displays for power saving purposes, and set the list of available presentation displays to the empty list.

6.4.5 Interface PresentationConnectionAvailableEvent

A controlling user agent fires an event named connectionavailable on a PresentationRequest when a connection associated with the object is created. It is fired at the PresentationRequest instance, using the PresentationConnectionAvailableEvent interface, with the connection attribute set to the PresentationConnection object that was created. The event is fired for each connection that is created for the controller , either by the controller calling start or reconnect , or by the controlling user agent creating a connection on the controller's behalf via defaultRequest .

A receiving user agent fires an event named connectionavailable on a PresentationReceiver when an incoming connection is created. It is fired at the presentation controllers monitor , using the PresentationConnectionAvailableEvent interface, with the connection attribute set to the PresentationConnection object that was created. The event is fired for all connections that are created when monitoring incoming presentation connections .

The connection attribute MUST return the value it was set to when the PresentationConnection object was created.

When the PresentationConnectionAvailableEvent constructor is called, the user agent MUST construct a new PresentationConnectionAvailableEvent object with its connection attribute set to the connection member of the PresentationConnectionAvailableEventInit object passed to the constructor.

6.5 Interface PresentationConnection

Each presentation connection is represented by a PresentationConnection object. Both the controlling user agent and receiving user agent MUST implement PresentationConnection .

The id attribute specifies the presentation connection 's presentation identifier .

The url attribute specifies the presentation connection 's presentation URL .

The state attribute represents the presentation connection 's current state. It can take one of the values of PresentationConnectionState depending on the connection state:

- connecting means that the user agent is attempting to establish a presentation connection with the destination browsing context . This is the initial state when a PresentationConnection object is created.

- connected means that the presentation connection is established and communication is possible.

- closed means that the presentation connection has been closed, or could not be opened. It may be re-opened through a call to reconnect . No communication is possible.

- terminated means that the receiving browsing context has been terminated. Any presentation connection to that presentation is also terminated and cannot be re-opened. No communication is possible.
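As an illustration of the four states listed above, the sketch below maps each state to what an application can do with the connection. It only encodes what the list states: communication is possible in connected, a closed connection may be re-opened through reconnect, and a terminated one cannot. The names StateCapabilities and capabilitiesOf are hypothetical helpers, not part of the API.

```typescript
type PresentationConnectionState = "connecting" | "connected" | "closed" | "terminated";

// Hypothetical summary of what a page can do in a given state.
interface StateCapabilities {
  canSend: boolean;   // communication is possible
  canReopen: boolean; // the connection may be re-opened through reconnect
}

function capabilitiesOf(state: PresentationConnectionState): StateCapabilities {
  switch (state) {
    case "connecting":
      return { canSend: false, canReopen: false };
    case "connected":
      return { canSend: true, canReopen: false };
    case "closed":
      return { canSend: false, canReopen: true };
    case "terminated":
      return { canSend: false, canReopen: false };
  }
}
```

A controller page could use such a helper to decide whether to enable its "send" controls or to offer a "reconnect" action.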

When the close method is called on a PresentationConnection S , the user agent MUST start closing the presentation connection S with closed as closeReason and an empty message as closeMessage .

When the terminate method is called on a PresentationConnection S in a controlling browsing context , the user agent MUST run the algorithm to terminate a presentation in a controlling browsing context using S .

When the terminate method is called on a PresentationConnection S in a receiving browsing context , the user agent MUST run the algorithm to terminate a presentation in a receiving browsing context using S .

The binaryType attribute can take one of the values of BinaryType . When a PresentationConnection object is created, its binaryType attribute MUST be set to the string " arraybuffer ". On getting, it MUST return the last value it was set to. On setting, the user agent MUST set the attribute to the new value.

When the send method is called on a PresentationConnection S , the user agent MUST run the algorithm to send a message through S .

When a PresentationConnection object S is discarded (because the document owning it is navigating or is closed) while the presentation connection state of S is connecting or connected , the user agent MUST start closing the presentation connection S with wentaway as closeReason and an empty closeMessage .

If the user agent receives a signal from the destination browsing context that a PresentationConnection S is to be closed, it MUST close the presentation connection S with closed or wentaway as closeReason and an empty closeMessage .

6.5.1 Establishing a presentation connection

When the user agent is to establish a presentation connection using a presentation connection presentationConnection, it MUST run the following steps:

- If the presentation connection state of presentationConnection is not connecting, then abort all remaining steps.

- Request connection of presentationConnection to the receiving browsing context. The presentation identifier of presentationConnection MUST be sent with this request.

- If the connection is completed successfully, then:

  - Set the presentation connection state of presentationConnection to connected.

  - Fire an event named connect at presentationConnection.

- If the connection cannot be completed, close the presentation connection presentationConnection with error as closeReason, and a human readable message describing the failure as closeMessage.

6.5.2 Sending a message through PresentationConnection

Let presentation message data be the payload data to be transmitted between two browsing contexts. Let presentation message type be the type of that data, one of text or binary .

When the user agent is to send a message through a presentation connection , it MUST run the following steps:

- If the state property of presentationConnection is not connected , throw an InvalidStateError exception.

- If the closing procedure of presentationConnection has started, then abort these steps.

- Let presentation message type messageType be binary if messageOrData is of type ArrayBuffer , ArrayBufferView , or Blob . Let messageType be text if messageOrData is of type DOMString .

- Using an implementation specific mechanism, transmit the contents of messageOrData as the presentation message data and messageType as the presentation message type to the destination browsing context .

- If the previous step encounters an unrecoverable error, then abruptly close the presentation connection presentationConnection with error as closeReason , and a closeMessage describing the error encountered.

To assist applications in recovery from an error sending a message through a presentation connection , the user agent should include details of which attempt failed in closeMessage , along with a human readable string explaining the failure reason. Example renditions of closeMessage :

- Unable to send text message (network_error): "hello" for DOMString messages, where "hello" is the first 256 characters of the failed message.

- Unable to send binary message (invalid_message) for ArrayBuffer , ArrayBufferView and Blob messages.
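The message type selection and the two example closeMessage renditions above can be sketched as follows. This is an illustration under stated assumptions: the function names and the Payload type are hypothetical, Blob is left out of Payload so that the snippet also runs outside a browser, and "the first 256 characters" is approximated with UTF-16 code units.

```typescript
type PresentationMessageType = "text" | "binary";

// Assumption: Blob is omitted here to keep the sketch runnable outside a browser.
type Payload = string | ArrayBuffer | ArrayBufferView;

// DOMString payloads are text; everything else is binary.
function messageTypeOf(data: Payload): PresentationMessageType {
  return typeof data === "string" ? "text" : "binary";
}

// Builds a closeMessage in the style of the example renditions.
// errorCode is a hypothetical short identifier such as "network_error".
function describeSendFailure(data: Payload, errorCode: string): string {
  if (typeof data === "string") {
    // Include at most the first 256 UTF-16 code units of the failed message.
    return `Unable to send text message (${errorCode}): "${data.slice(0, 256)}"`;
  }
  return `Unable to send binary message (${errorCode})`;
}
```

Truncating the echoed text keeps closeMessage bounded even when a very large string failed to send.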

6.5.3 Receiving a message through PresentationConnection

When the user agent has received a transmission from the remote side consisting of presentation message data and presentation message type , it MUST run the following steps to receive a message through a PresentationConnection :

- If the state property of presentationConnection is not connected , abort these steps.

- Let event be the result of creating an event using the MessageEvent interface, with the event type message , which does not bubble and is not cancelable.

- If messageType is text , then initialize event 's data attribute to messageData with type DOMString .

- If messageType is binary , and binaryType attribute is set to " blob ", then initialize event 's data attribute to a new Blob object with messageData as its raw data.

- If messageType is binary , and binaryType attribute is set to " arraybuffer ", then initialize event 's data attribute to a new ArrayBuffer object whose contents are messageData .

- Queue a task to fire event at presentationConnection .

If the user agent encounters an unrecoverable error while receiving a message through presentationConnection , it MUST abruptly close the presentation connection presentationConnection with error as closeReason . It SHOULD use a human readable description of the error encountered as closeMessage .

6.5.4 Interface PresentationConnectionCloseEvent

A PresentationConnectionCloseEvent is fired when a presentation connection enters a closed state. The reason attribute provides the reason why the connection was closed. It can take one of the values of PresentationConnectionCloseReason :

- error means that the mechanism for connecting or communicating with a presentation entered an unrecoverable error.

- closed means that either the controlling browsing context or the receiving browsing context that were connected by the PresentationConnection called close() .

- wentaway means that the browser closed the connection, for example, because the browsing context that owned the connection navigated or was discarded.

When the reason attribute is error , the user agent SHOULD set the message attribute to a human readable description of how the communication channel encountered an error.

When the PresentationConnectionCloseEvent constructor is called, the user agent MUST construct a new PresentationConnectionCloseEvent object, with its reason attribute set to the reason member of the PresentationConnectionCloseEventInit object passed to the constructor, and its message attribute set to the message member of this PresentationConnectionCloseEventInit object if set, to an empty string otherwise.

6.5.5 Closing a PresentationConnection

When the user agent is to start closing a presentation connection , it MUST do the following:

- If the presentation connection state of presentationConnection is not connecting or connected then abort the remaining steps.

- Set the presentation connection state of presentationConnection to closed .

- Start to signal to the destination browsing context the intention to close the corresponding PresentationConnection , passing the closeReason to that context. The user agent does not need to wait for acknowledgement that the corresponding PresentationConnection was actually closed before proceeding to the next step.

- If closeReason is not wentaway , then locally run the steps to close the presentation connection with presentationConnection , closeReason , and closeMessage .

When the user agent is to close a presentation connection , it MUST do the following:

- If there is a pending close the presentation connection task for presentationConnection , or a close the presentation connection task has already run for presentationConnection , then abort the remaining steps.

- If the presentation connection state of presentationConnection is not connecting , connected , or closed , then abort the remaining steps.

- If the presentation connection state of presentationConnection is not closed , set it to closed .

- Remove presentationConnection from the set of presentation controllers .

- Populate the presentation controllers monitor with the set of presentation controllers .

- Fire an event named close , that uses the PresentationConnectionCloseEvent interface, with the reason attribute initialized to closeReason and the message attribute initialized to closeMessage , at presentationConnection . The event must not bubble and must not be cancelable.
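The interplay between "start closing" and "close" can be modelled with a small state holder. The sketch below is a simplified, synchronous reading of the two step lists above: tasks are run inline, the signal to the destination browsing context is recorded in an array, and the receiver-side bookkeeping of the set of presentation controllers is left out. ConnectionModel and its members are hypothetical names.

```typescript
type ConnectionState = "connecting" | "connected" | "closed" | "terminated";
type CloseReason = "error" | "closed" | "wentaway";

class ConnectionModel {
  state: ConnectionState;
  readonly firedEvents: string[] = [];      // stands in for events fired locally
  readonly signalsSent: CloseReason[] = []; // stands in for signals sent to the other side
  private closeRan = false;

  constructor(initial: ConnectionState) {
    this.state = initial;
  }

  // "Start closing a presentation connection".
  startClosing(reason: CloseReason, message: string): void {
    if (this.state !== "connecting" && this.state !== "connected") return;
    this.state = "closed";
    // Signal the intention to close; no acknowledgement is awaited.
    this.signalsSent.push(reason);
    // For wentaway the owning document is gone, so no local close event.
    if (reason !== "wentaway") this.close(reason, message);
  }

  // "Close a presentation connection".
  close(reason: CloseReason, message: string): void {
    if (this.closeRan) return;               // the close steps run at most once
    if (this.state === "terminated") return; // only connecting, connected or closed proceed
    this.closeRan = true;
    this.state = "closed";
    this.firedEvents.push(`close(${reason}, "${message}")`);
  }
}
```

Calling startClosing twice on the same model fires a single close event, which mirrors the guards at the top of both step lists.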

6.5.6 Terminating a presentation in a controlling browsing context

When a controlling user agent is to terminate a presentation in a controlling browsing context using connection , it MUST run the following steps:

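- If the presentation connection state of connection is not connected or connecting, then abort these steps.

- Otherwise, for each known connection in the set of controlled presentations whose presentation identifier is equal to that of connection and whose presentation connection state is connected or connecting:

  - Set the presentation connection state of known connection to terminated.

  - Fire an event named terminate at known connection.

- Send a termination request for the presentation to its receiving user agent using an implementation specific mechanism.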

6.5.7 Terminating a presentation in a receiving browsing context

When any of the following occur, the receiving user agent MUST terminate a presentation in a receiving browsing context :

- The receiving user agent is to unload a document corresponding to the receiving browsing context , e.g. in response to a request to navigate that context to a new resource.

This could happen by an explicit user action, or as a policy of the user agent. For example, the receiving user agent could be configured to terminate presentations whose PresentationConnection objects are all closed for 30 minutes.

- A controlling user agent sends a termination request to the receiving user agent for that presentation.

When a receiving user agent is to terminate a presentation in a receiving browsing context , it MUST run the following steps:

- Let P be the presentation to be terminated, let allControllers be the set of presentation controllers that were created for P, and let connectedControllers be an empty list.

- For each connection in allControllers:

  - If the presentation connection state of connection is connected, then add connection to connectedControllers.

  - Set the presentation connection state of connection to terminated.

- If there is a receiving browsing context for P, and it has a document for P that is not unloaded, unload a document corresponding to that browsing context, remove that browsing context from the user interface and discard it.

- For each connection in connectedControllers, send a termination confirmation for P, using an implementation specific mechanism, to the controlling user agent that owns the destination browsing context for connection.

Only one termination confirmation needs to be sent per controlling user agent .
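A minimal sketch of the receiver-side bookkeeping described above: collect the controllers that were connected, mark every controller terminated, and work out which controlling user agents need a confirmation, sending at most one per user agent. The userAgentId field and the function name are hypothetical. The specification leaves the identification of controlling user agents to the implementation.

```typescript
type ControllerState = "connecting" | "connected" | "closed" | "terminated";

interface ControllerModel {
  userAgentId: string; // hypothetical identifier of the owning controlling user agent
  state: ControllerState;
}

// Returns the user agents that should receive a termination confirmation.
function terminatePresentation(allControllers: ControllerModel[]): string[] {
  // Remember which controllers were connected before changing any state.
  const connectedControllers = allControllers.filter((c) => c.state === "connected");

  for (const controller of allControllers) {
    controller.state = "terminated";
  }

  // Only one confirmation is needed per controlling user agent.
  const recipients: string[] = [];
  for (const controller of connectedControllers) {
    if (recipients.indexOf(controller.userAgentId) === -1) {
      recipients.push(controller.userAgentId);
    }
  }
  return recipients;
}
```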

6.5.8 Handling a termination confirmation in a controlling user agent

When a receiving user agent is to send a termination confirmation for a presentation P , and that confirmation was received by a controlling user agent , the controlling user agent MUST run the following steps:

- If the presentation connection state of connection is not connected or connecting , then abort the following steps.

- Fire an event named terminate at connection .

6.5.9 Event Handlers

The following are the event handlers (and their corresponding event handler event types) that must be supported, as event handler IDL attributes, by objects implementing the PresentationConnection interface:

| Event handler | Event handler event type |
|---|---|
| onmessage | message |
| onconnect | connect |
| onclose | close |
| onterminate | terminate |

6.6 Interface PresentationReceiver

The PresentationReceiver interface allows a receiving browsing context to access the controlling browsing contexts and communicate with them. The PresentationReceiver interface MUST be implemented in a receiving browsing context provided by a receiving user agent .

On getting, the connectionList attribute MUST return the result of running the following steps:

- If the presentation controllers promise is not null , return the presentation controllers promise and abort all remaining steps.

- Otherwise, let the presentation controllers promise be a new Promise constructed in the JavaScript realm of this PresentationReceiver object.

- Return the presentation controllers promise .

- If the presentation controllers monitor is not null , resolve the presentation controllers promise with the presentation controllers monitor .

6.6.1 Creating a receiving browsing context

When the user agent is to create a receiving browsing context , it MUST run the following steps:

1. Create a new top-level browsing context C, set to display content on D.

2. Set the session history of C to be the empty list.

3. Set the sandboxed modals flag and the sandboxed auxiliary navigation browsing context flag on C.

4. If the receiving user agent implements [PERMISSIONS], set the permission state of all permission descriptor types for C to "denied".

5. Create a new empty cookie store for C.

6. Create a new empty store for C to hold HTTP authentication states.

7. Create a new empty storage for session storage areas and local storage areas for C.

8. If the receiving user agent implements [INDEXEDDB], create a new empty storage for IndexedDB databases for C.

9. If the receiving user agent implements [SERVICE-WORKERS], create a new empty list of registered service worker registrations and a new empty set of Cache objects for C.

10. Navigate C to presentationUrl.

11. Start monitoring incoming presentation connections for C with presentationId and presentationUrl.

All child navigables created by the presented document, i.e. that have the receiving browsing context as their top-level browsing context , MUST also have restrictions 2-4 above. In addition, they MUST have the sandboxed top-level navigation without user activation browsing context flag set. All of these browsing contexts MUST also share the same browsing state (storage) for features 5-10 listed above.

When the top-level browsing context attempts to navigate to a new resource and runs the steps to navigate , it MUST follow step 1 to determine if it is allowed to navigate . In addition, it MUST NOT be allowed to navigate itself to a new resource, except by navigating to a fragment identifier or by reloading its document .

This allows the user to grant permission based on the origin of the presentation URL shown when selecting a presentation display .

If the top-level browsing context was not allowed to navigate, it SHOULD NOT offer to open the resource in a new top-level browsing context, but otherwise SHOULD be consistent with the steps to navigate.

Window clients and worker clients associated with the receiving browsing context and its descendant navigables must not be exposed to service workers associated with each other.

When the receiving browsing context is terminated, any service workers associated with it and the browsing contexts in its descendant navigables MUST be unregistered and terminated. Any browsing state associated with the receiving browsing context and the browsing contexts in its descendant navigables , including session history , the cookie store , any HTTP authentication state, any databases , the session storage areas , the local storage areas , the list of registered service worker registrations and the Cache objects MUST be discarded and not used for any other browsing context .

This algorithm is intended to create a well defined environment to allow interoperable behavior for 1-UA and 2-UA presentations, and to minimize the amount of state remaining on a presentation display used for a 2-UA presentation.

The receiving user agent SHOULD fetch resources in a receiving browsing context with an HTTP Accept-Language header that reflects the language preferences of the controlling user agent (i.e., with the same Accept-Language that the controlling user agent would have sent). This will help the receiving user agent render the presentation with fonts and locale-specific attributes that reflect the user's preferences.

Given the operating context of the presentation display , some Web APIs will not work by design (for example, by requiring user input) or will be obsolete (for example, by attempting window management); the receiving user agent should be aware of this. Furthermore, any modal user interface will need to be handled carefully. The sandboxed modals flag is set on the receiving browsing context to prevent most of these operations.

As noted in Conformance , a user agent that is both a controlling user agent and receiving user agent may allow a receiving browsing context to create additional presentations (thus becoming a controlling browsing context as well). Web developers can use navigator.presentation.receiver to detect when a document is loaded as a receiving browsing context.
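The detection mentioned above can be sketched as a small feature check. The helper below takes a navigator-like object as a parameter so that it can be exercised outside a browser. NavigatorLike and isReceivingContext are hypothetical names, and the sketch assumes that the receiver attribute is null or absent when the document is not loaded as a receiving browsing context.

```typescript
// Minimal structural view of the parts of navigator this check needs.
interface NavigatorLike {
  presentation?: { receiver?: unknown };
}

// True when the document appears to be loaded as a receiving browsing context.
function isReceivingContext(nav: NavigatorLike): boolean {
  return nav.presentation !== undefined && nav.presentation.receiver != null;
}
```

In a page, the global navigator object would be passed in. A document that is both receiver and controller can use the result to decide which user interface to show.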

6.7 Interface PresentationConnectionList

The connections attribute MUST return the non-terminated set of presentation connections in the set of presentation controllers .

6.7.1 Monitoring incoming presentation connections

When the receiving user agent is to start monitoring incoming presentation connections in a receiving browsing context from controlling browsing contexts , it MUST listen to and accept incoming connection requests from a controlling browsing context using an implementation specific mechanism. When a new connection request is received from a controlling browsing context , the receiving user agent MUST run the following steps:

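- If presentationId and I are not equal, where I is the presentation identifier passed by the controlling browsing context with the incoming connection request, refuse the connection and abort all remaining steps.

- Create a new PresentationConnection S.

- Set the presentation identifier of S to I.

- Set the presentation URL of S to presentationUrl.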

- Establish the connection between the controlling and receiving browsing contexts using an implementation specific mechanism.

- If connection establishment completes successfully, set the presentation connection state of S to connected . Otherwise, set the presentation connection state of S to closed and abort all remaining steps.

- Add S to the set of presentation controllers .

- Let the presentation controllers monitor be a new PresentationConnectionList constructed in the JavaScript realm of the PresentationReceiver object of the receiving browsing context .

- If the presentation controllers promise is not null , resolve the presentation controllers promise with the presentation controllers monitor .

- Queue a task to fire an event named connectionavailable , that uses the PresentationConnectionAvailableEvent interface, with the connection attribute initialized to S , at the presentation controllers monitor . The event must not bubble and must not be cancelable.
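The acceptance logic in the steps above can be modelled as follows. This is a sketch under assumptions: I is taken to be the identifier carried by the incoming request, S the connection created for it, and the implementation specific connection establishment is replaced by a callback that reports success or failure. Promise resolution and event dispatch are omitted. ReceiverModel, IncomingConnection and onRequest are hypothetical names.

```typescript
type IncomingState = "connected" | "closed";

interface IncomingConnection {
  id: string;
  url: string;
  state: IncomingState;
}

class ReceiverModel {
  // Stands in for the set of presentation controllers.
  readonly controllers: IncomingConnection[] = [];

  constructor(
    private readonly presentationId: string,
    private readonly presentationUrl: string
  ) {}

  // Returns null when the request is refused, otherwise the connection S.
  onRequest(requestId: string, establish: () => boolean): IncomingConnection | null {
    // Refuse requests whose identifier does not match this presentation.
    if (requestId !== this.presentationId) return null;

    const connection: IncomingConnection = {
      id: requestId,
      url: this.presentationUrl,
      state: establish() ? "connected" : "closed",
    };

    // A connection that failed to establish is not added to the set.
    if (connection.state === "closed") return connection;

    this.controllers.push(connection);
    return connection;
  }
}
```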

6.7.2 Event Handlers

The following are the event handlers (and their corresponding event handler event types) that must be supported, as event handler IDL attributes, by objects implementing the PresentationConnectionList interface:

| Event handler | Event handler event type |
|---|---|
| onconnectionavailable | connectionavailable |

7. Security and privacy considerations

7.1 Personally identifiable information

The change event fired on the PresentationAvailability object reveals one bit of information about the presence or absence of a presentation display , often discovered through the browser's local area network. This could be used in conjunction with other information for fingerprinting the user. However, this information is also dependent on the user's local network context, so the risk is minimized.

The API enables monitoring the list of available presentation displays . How the user agent determines the compatibility and availability of a presentation display with a given URL is an implementation detail. If a controlling user agent matches a presentation request URL to a DIAL application to determine its availability, this feature can be used to probe information about which DIAL applications the user has installed on the presentation display without user consent.

7.2 Cross-origin access

A presentation is allowed to be accessed across origins; the presentation URL and presentation identifier used to create the presentation are the only information needed to reconnect to a presentation from any origin in the controlling user agent. In other words, a presentation is not tied to a particular opening origin.

This design allows controlling contexts from different origins to connect to a shared presentation resource. The security of the presentation identifier prevents arbitrary origins from connecting to an existing presentation.

This specification also allows a receiving user agent to publish information about its set of controlled presentations , and a controlling user agent to reconnect to presentations started from other devices. This is possible when the controlling browsing context obtains the presentation URL and presentation identifier of a running presentation from the user, local storage, or a server, and then connects to the presentation via reconnect .

This specification makes no guarantee as to the identity of any party connecting to a presentation. Once connected, the presentation may wish to further verify the identity of the connecting party through application-specific means. For example, the presentation could challenge the controller to provide a token via send that the presentation uses to verify identity and authorization.
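One possible shape of such a challenge is sketched below, from the presentation's point of view. It is purely illustrative. The JSON message format with a token field, the TokenGate class and the way the expected token is provisioned are all assumptions, and a plain string comparison is used where a real deployment would rely on a proper authentication scheme.

```typescript
type GateResult = "accepted" | "rejected" | "forward";

// Hypothetical per-connection gate: the first valid message must carry the token.
class TokenGate {
  private verified = false;

  constructor(private readonly expectedToken: string) {}

  // Feed every incoming text message through the gate.
  handle(message: string): GateResult {
    // Once verified, messages are passed on to the application.
    if (this.verified) return "forward";

    let parsed: unknown;
    try {
      parsed = JSON.parse(message);
    } catch {
      return "rejected";
    }

    const token =
      typeof parsed === "object" && parsed !== null
        ? (parsed as { token?: unknown }).token
        : undefined;

    if (token === this.expectedToken) {
      this.verified = true;
      return "accepted";
    }
    return "rejected";
  }
}
```

The presentation would create one gate per incoming connection, call handle from its message event handler, and close the connection when the result is "rejected".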

7.3 User interface guidelines

When the user is asked permission to use a presentation display during the steps to select a presentation display , the controlling user agent should make it clear what origin is requesting presentation and what origin will be presented.

Display of the origin requesting presentation will help the user understand what content is making the request, especially when the request is initiated from a child navigable . For example, embedded content may try to convince the user to click to trigger a request to start an unwanted presentation.

The sandboxed top-level navigation without user activation browsing context flag is set on the receiving browsing context to enforce that the top-level origin of the presentation remains the same during the lifetime of the presentation.

When a user starts a presentation , the user will begin with exclusive control of the presentation. However, the Presentation API allows additional devices (likely belonging to distinct users) to connect and thereby control the presentation as well. When a second device connects to a presentation, it is recommended that all connected controlling user agents notify their users via the browser chrome that the original user has lost exclusive access, and there are now multiple controllers for the presentation.

In addition, it may be the case that the receiving user agent is capable of receiving user input, as well as acting as a presentation display . In this case, the receiving user agent should notify its user via browser chrome when a receiving browsing context is under the control of a remote party (i.e., it has one or more connected controllers).

7.4 Device Access

The Presentation API abstracts away what "local" means for displays, meaning that it exposes network-accessible displays as though they were directly attached to the user's device. The Presentation API requires user permission for a page to access any display to mitigate issues that could arise, such as showing unwanted content on a display viewable by others.

7.5 Temporary identifiers and browser state

The presentation URL and presentation identifier can be used to connect to a presentation from another browsing context. They can be intercepted if an attacker can inject content into the controlling page.

7.6 Private browsing mode and clearing of browsing data

The content displayed on the presentation is different from the content of the controller. In particular, if the user is logged in to both contexts and then logs out of the controlling browsing context, they will not be automatically logged out from the receiving browsing context. Applications that use authentication should take extra care when communicating between devices.

The set of presentations known to the user agent should be cleared when the user requests to "clear browsing data."

When in private browsing mode ("incognito"), the initial set of controlled presentations in that browsing session must be empty. Any presentation connections added to it must be discarded when the session terminates.

7.7 Messaging between presentation connections

This spec will not mandate communication protocols between the controlling browsing context and the receiving browsing context , but it should set some guarantees of message confidentiality and authenticity between corresponding presentation connections .

A. IDL Index

B.1 Terms defined by this specification

- 1-UA mode §1.

- 2-UA mode §1.

- allowed to navigate §4.

- available presentation display §6.1

- binaryType attribute for PresentationConnection §6.5

- change §6.4

- close method for PresentationConnection §6.5

- close a presentation connection §6.5.5

- "closed" enum value for PresentationConnectionState §6.5

- "closed" enum value for PresentationConnectionCloseReason §6.5.4

- connect §6.5.9

- "connected" enum value for PresentationConnectionState §6.5

- "connecting" enum value for PresentationConnectionState §6.5

- connection attribute for PresentationConnectionAvailableEvent §6.4.5

- connection member for PresentationConnectionAvailableEventInit §6.4.5

- connectionavailable §6.3.6

- connectionList attribute for PresentationReceiver §6.6

- connections attribute for PresentationConnectionList §6.7

- constructor for PresentationRequest §6.3

- constructor for PresentationConnectionAvailableEvent §6.4.5

- constructor for PresentationConnectionCloseEvent §6.5.4

- controlling browsing context §6.1

- Controlling user agent §3.1

- create a receiving browsing context §6.6.1

- creating a new browsing context §4.

- database §4.

- default presentation request §6.1

- defaultRequest attribute for Presentation §6.2.1

- destination browsing context §6.1

- "error" enum value for PresentationConnectionCloseReason §6.5.4

- establish a presentation connection §6.5.1

- getAvailability method for PresentationRequest §6.4.3

- id attribute for PresentationConnection §6.5

- list of available presentation displays §6.4.2

- local storage area §4.

- message attribute for PresentationConnectionCloseEvent §6.5.4

- message member for PresentationConnectionCloseEventInit §6.5.4

- monitor the list of available presentation displays §6.4.4

- monitoring incoming presentation connections §6.7.1

- navigating to a fragment identifier §4.

- onchange attribute for PresentationAvailability §6.4

- onclose attribute for PresentationConnection §6.5.9

- onconnect attribute for PresentationConnection §6.5.9

- onconnectionavailable attribute for PresentationRequest §6.3.6

- onconnectionavailable attribute for PresentationConnectionList §6.7.2

- onmessage attribute for PresentationConnection §6.5.9

- onterminate attribute for PresentationConnection §6.5.9

- parse a url §4.

- presentation attribute for Navigator §6.2

- Presentation interface §6.2

- presentation connection §6.1

- presentation connection state §6.1

- presentation controllers monitor §6.1

- presentation controllers promise §6.1

- presentation display §6.1

- presentation display availability §6.4

- presentation identifier §6.1

- presentation message data §6.5.2

- presentation message type §6.5.2

- presentation request URLs §6.3

- presentation URL §6.1

- PresentationAvailability interface §6.4

- PresentationConnection interface §6.5

- PresentationConnectionAvailableEvent interface §6.4.5

- PresentationConnectionAvailableEventInit dictionary §6.4.5

- PresentationConnectionCloseEvent interface §6.5.4

- PresentationConnectionCloseEventInit dictionary §6.5.4

- PresentationConnectionCloseReason enum §6.5.4

- PresentationConnectionList interface §6.7

- PresentationConnectionState enum §6.5

- PresentationReceiver interface §6.6

- PresentationRequest interface §6.3

- receive a message §6.5.3

- receiver attribute for Presentation §6.2.2

- receiving browsing context §6.1

- Receiving user agent §3.1

- reconnect method for PresentationRequest §6.3.5

- reload a document §4.

- select a presentation display §6.3.2

- send method for PresentationConnection §6.5

- send a message §6.5.2

- Send a termination request §6.5.6

- session history §4.

- session storage area §4.

- set of controlled presentations §6.1

- set of presentation availability objects §6.4.1

- set of presentation controllers §6.1

- start method for PresentationRequest §6.3.2

- start a presentation connection §6.3.4

- start a presentation from a default presentation request §6.3.3

- start closing a presentation connection §6.5.5

- state attribute for PresentationConnection §6.5

- terminate method for PresentationConnection §6.5

- terminate a presentation in a controlling browsing context §6.5.6

- terminate a presentation in a receiving browsing context §6.5.7

- "terminated" enum value for PresentationConnectionState §6.5

- unload a document §4.

- url attribute for PresentationConnection §6.5

- user agents §3.1

- valid presentation identifier §6.1

- value attribute for PresentationAvailability §6.4

- "wentaway" enum value for PresentationConnectionCloseReason §6.5.4

B.2 Terms defined by reference

- creating an event

- Event interface

- EventTarget interface

- fire an event

- JavaScript realm

- Blob interface

- active sandboxing flag set (for Document)

- active window (for navigable)

- browsing context

- child navigable

- current settings object

- descendant navigables (for Document)

- event handler

- event handler event type

- EventHandler

- in parallel

- localStorage attribute (for WindowLocalStorage)

- MessageEvent interface

- Navigator interface

- Queue a task

- reload() (for Location)

- sandboxed auxiliary navigation browsing context flag

- sandboxed modals flag

- sandboxed presentation browsing context flag

- sandboxed top-level navigation without user activation browsing context flag

- sessionStorage attribute (for WindowSessionStorage)

- task source

- top-level browsing context

- transient activation

- permission descriptor types (for powerful feature)

- permission state

- cookie store

- Accept-Language

- HTTP authentication

- potentially trustworthy URL

- Cache interface

- service worker registrations

- service workers

- window client (for service worker client)

- worker client (for service worker client)

- ArrayBuffer interface

- ArrayBufferView

- boolean type

- DOMString interface

- [Exposed] extended attribute

- FrozenArray interface

- InvalidAccessError exception

- InvalidStateError exception

- NotAllowedError exception

- NotFoundError exception

- NotSupportedError exception

- OperationError exception

- Promise interface

- [SameObject] extended attribute

- [SecureContext] extended attribute

- SecurityError exception

- SyntaxError exception

- throw (for exception)

- undefined type

- USVString interface

- RTCDataChannel interface

- BinaryType enum

C. Acknowledgments

Thanks to Addison Phillips, Anne Van Kesteren, Anssi Kostiainen, Anton Vayvod, Chris Needham, Christine Runnegar, Daniel Davis, Domenic Denicola, Erik Wilde, François Daoust, 闵洪波 (Hongbo Min), Hongki CHA, Hubert Sablonnière, Hyojin Song, Hyun June Kim, Jean-Claude Dufourd, Joanmarie Diggs, Jonas Sicking, Louay Bassbouss, Mark Watson, Martin Dürst, Matt Hammond, Mike West, Mounir Lamouri, Nick Doty, Oleg Beletski, Philip Jägenstedt, Richard Ishida, Shih-Chiang Chien, Takeshi Kanai, Tobie Langel, Tomoyuki Shimizu, Travis Leithead, and Wayne Carr for help with editing, reviews and feedback to this draft.

AirPlay, HDMI, Chromecast, DLNA and Miracast are registered trademarks of Apple Inc., HDMI Licensing LLC., Google Inc., the Digital Living Network Alliance, and the Wi-Fi Alliance, respectively. They are only cited as background information and their use is not required to implement the specification.

D. Candidate Recommendation exit criteria

For this specification to be advanced to Proposed Recommendation, there must be, for each of the conformance classes it defines (controlling user agent and receiving user agent), at least two independent, interoperable implementations of each feature. Each feature may be implemented by a different set of products; there is no requirement that all features be implemented by a single product. Additionally, implementations of the controlling user agent conformance class must include at least one implementation of the 1-UA mode and one implementation of the 2-UA mode. 2-UA mode implementations may only support non-http/https presentation URLs. Implementations of the receiving user agent conformance class may not include implementations of the 2-UA mode.

The API was recently restricted to secure contexts. Deprecation of the API in non-secure contexts in early implementations takes time. The group may request transition to Proposed Recommendation with implementations that still expose the API in non-secure contexts, provided there exists a timeline to restrict these implementations in the future.

For the purposes of these criteria, we define the following terms:

implementation: a user agent which:

- implements one of the conformance classes of the specification.

- is available to the general public. The implementation may be a shipping product or other publicly available version (i.e., beta version, preview release, or "nightly build"). Non-shipping product releases must have implemented the feature(s) for a period of at least one month in order to demonstrate stability.

- is not experimental (i.e. a version specifically designed to pass the test suite and not intended for normal usage going forward).

E. Change log

This section lists changes made to the spec since it was first published as Candidate Recommendation in July 2016, with links to related issues on the group's issue tracker.

E.1 Changes since 01 June 2017

- Added a note about receiving browsing contexts starting presentations (#487)

- Removed the definition of the BinaryType enum (#473)

- Updated WebIDL to use constructor operations (#469)

- Clarified how receiving browsing contexts are allowed to navigate (#461)

- Added explanatory text to the sample code (#460)

- Added sample code that starts a second presentation from the same controller (#453)

- Updated the steps to construct a PresentationRequest to ignore a URL with an unsupported scheme (#447)

- Clarified restrictions on navigation in receiving browsing contexts (#434)

- Updated WebIDL to use [Exposed=Window] (#438)

- Various editorial updates (#429, #431, #432, #433, #441, #442, #443, #454, #465, #482, #483, #486)

E.2 Changes since 14 July 2016

- Fixed document license (#428)

- Updated termination algorithm to also discard the receiving browsing context and allow termination in a connecting state (#421, #423)

- Dropped sandboxing section, now integrated in HTML (#437 in the Web Platform Working Group issue tracker)

- Relaxed exit criteria to match known implementation plans (#406)

- The sandboxed top-level navigation browsing context flag and the sandboxed modals flag are now set on the receiving browsing context to prevent top-level navigation and the ability to spawn new browsing contexts (#414)

- Moved sandboxing flag checks to PresentationRequest constructor (#379, #398)

- Updated normative references to target stable specifications (#295, #396)

- Made display selection algorithm reject in ancestor and descendant browsing contexts (#394)

- Renamed PresentationConnectionClosedReason to PresentationConnectionCloseReason (#393)

- Fixed getAvailability and monitoring algorithms (#335, #381, #382, #383, #387, #388, #392)

- Assigned correct JavaScript realm to re-used objects (#391)

- API now restricted to secure contexts (#380)

- Set the state of receiving presentation connections to terminated before unload (#374)

- Defined environment for nested contexts of the receiving browsing context (#367)

- Removed [SameObject] from Presentation.receiver and PresentationReceiver.connectionList (#365, #407)

- Replaced DOMString with USVString for PresentationRequest URLs (#361)

- Added a presentation task source for events (#360)

- Changed normative language around UUID generation (#346)

- Added failure reason to close message (#344)

- Added error handling to establish a presentation connection algorithm (#343)

- Made navigator.presentation mandatory (#341)

- Used current settings object in steps that require a settings object (#336)

- Updated security check step to handle multiple URLs case (#329)

- Made PresentationConnection.id mandatory (#325)

- Renamed PresentationConnectionClosedEvent to PresentationConnectionCloseEvent (#324)

- Added an implementation note for advertising and rendering a user friendly display name (#315)

- Added note for presentation detection (#303)

- Various editorial updates (#334, #337, #339, #340, #342, #345, #359, #363, #366, #397)

F. References

F.1 Normative references

F.2 Informative references
